This article, prompted by a panel discussion at the RIPE 61 Meeting, describes the most significant implications of network complexity and suggests ways to deal with it. This is a topic that requires more work and a lot of input from network operators. We hope you’ll work with us to advance this subject.

Introduction

While there is extensive research on complexity in some specific areas such as graph theory or software design, the complexity of a real-life network is not very well defined or understood today. We're trying to define complexity, look at the different components that influence it and discuss how we can better deal with it.

What is complexity?

Many aspects of networking are continuously growing: the number of features, the length of routing table configurations, firewall configurations, etc. The entire system is growing, which makes it difficult for network operators to understand and manage the whole network they are responsible for. Many operators only fully understand the parts of the network that they specialise in.

Increasing complexity makes network behaviour unpredictable, which is a bad thing in networking (and other systems). Look, for example, at filtering lists: the more complex the lists, rules and exceptions, the more difficult it is to understand all the filtering rules. That makes it hard to predict the effects of changes.
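The filtering-list example can be made concrete with a small sketch. It assumes a first-match filter list, which is a common design but is not something this article specifies. The rule set, the `evaluate` and `shadowed` helpers and all addresses are hypothetical and purely illustrative.

```python
from ipaddress import ip_address, ip_network

# Hypothetical first-match filter list: (action, prefix). Illustrative only.
RULES = [
    ("deny", "10.0.0.0/8"),
    ("permit", "10.1.0.0/16"),   # fully covered by the rule above
    ("permit", "192.0.2.0/24"),
]


def evaluate(rules, address, default="deny"):
    """Return the action of the first rule whose prefix contains the address."""
    addr = ip_address(address)
    for action, prefix in rules:
        if addr in ip_network(prefix):
            return action
    return default


def shadowed(rules):
    """Return the indices of rules whose prefix lies inside an earlier rule's prefix."""
    result = []
    for i, (_, prefix) in enumerate(rules):
        net = ip_network(prefix)
        if any(net.subnet_of(ip_network(earlier)) for _, earlier in rules[:i]):
            result.append(i)
    return result


# The second rule can never match, so the outcome depends on rule order.
reordered = [RULES[1], RULES[0], RULES[2]]
print(evaluate(RULES, "10.1.2.3"))      # decided by the /8 rule
print(evaluate(reordered, "10.1.2.3"))  # decided by the /16 rule
print(shadowed(RULES))
```

Even with three rules, swapping two lines changes the result for a whole prefix. With hundreds of rules and exceptions, checks of this kind become the only practical way to predict what a change will do.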

How to classify complexity?

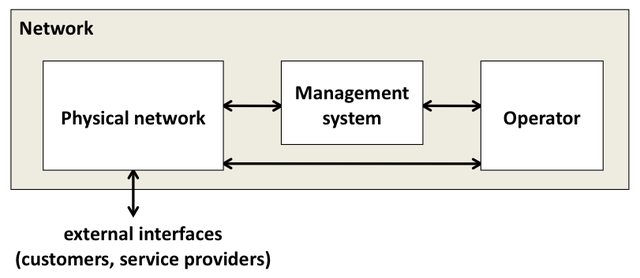

Complexity can be defined as a function of state and rate of change of all network components. The most obvious element of a network is the physical network itself. But often there is also a network management system in place. And the human operator is another element that influences network complexity and needs to be considered.

Figure 1: Elements that influence the overall complexity of a network
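To illustrate what "a function of state and rate of change" could look like, here is a toy scoring sketch. The formula, the `Component` class, the `weight` parameter and all numbers are assumptions made for illustration. The article does not propose a specific metric, and this is not one.

```python
from dataclasses import dataclass


@dataclass
class Component:
    name: str
    state: int          # assumed unit: e.g. configuration lines or table entries
    change_rate: float  # assumed unit: e.g. changes per day


def complexity(components, weight=1.0):
    """Toy score: each component contributes state * (1 + weight * change_rate)."""
    return sum(c.state * (1 + weight * c.change_rate) for c in components)


# Hypothetical values for the three elements named in the text.
network = [
    Component("physical network", state=1000, change_rate=0.5),
    Component("management system", state=200, change_rate=0.25),
    Component("human operator", state=50, change_rate=2.0),
]

print(complexity(network))
```

Any real metric would need to break each element down further and would have to be validated against operational experience. The sketch only shows that both the amount of state and how often it changes feed into the result.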

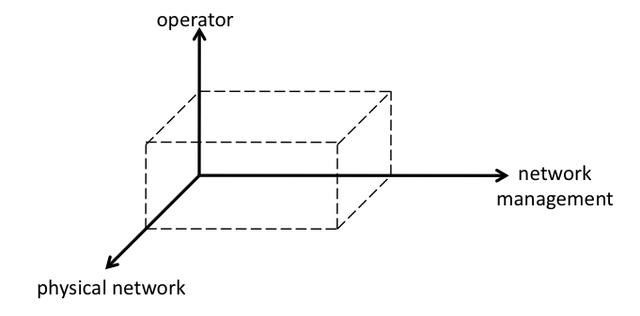

Network management systems have their own complexity, but they typically reduce human intervention and therefore human error and unpredictability. As a result, complexity may shift from the human operator to the network management system, potentially reducing overall complexity. The accompanying diagram illustrates the main components of system complexity. You can shift complexity from one component to others; this may have an impact on the overall system complexity.

So far, we’ve only described a high-level model of network complexity. In order to provide useful quantitative guidance on how to deal with complexity, we would have to look at the details and break down each of the elements that influence complexity. The physical network, for instance, consists of a number of elements that are highly inter-linked and dependent.

How to deal with complexity?

There are a number of mechanisms for dealing with complexity:

- Divide and Conquer: The most common technique. In this context this means that you break up the system into smaller, more manageable parts.

- Shift complexity or hide complexity: A good user interface can hide the complexity from the operator and can therefore reduce human error.

- Defining Meta-Languages: Another way to reduce human error is to provide simplified meta languages that hide complexity behind simplified commands. Examples of such meta languages are the Common Information Model (CIM), NETCONF and the Routing Policy Specification Language (RPSL).

- Structural Approaches: This is a mechanism to search analytically for dependencies and try to find ways to reduce them. This has been researched primarily for software. More work is needed before this can be applied to real-life networks.
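As a rough illustration of the structural approach, the following sketch searches a dependency map for everything an element relies on, and for everything that would be affected if an element changed. The dependency map and the function names are hypothetical. Real networks would need a far richer model, which is exactly the work that remains to be done.

```python
# Hypothetical dependency map: element -> elements it depends on directly.
DEPENDS_ON = {
    "bgp": ["ospf", "route-policy"],
    "ospf": ["interfaces"],
    "route-policy": ["prefix-lists"],
    "prefix-lists": [],
    "interfaces": [],
    "ntp": ["interfaces"],
}


def transitive_dependencies(graph, start):
    """Return every element that start depends on, directly or indirectly."""
    seen = set()
    stack = list(graph.get(start, []))
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(graph.get(node, []))
    return seen


def dependents(graph, target):
    """Return every element that could be affected by a change to target."""
    return {node for node in graph if target in transitive_dependencies(graph, node)}


print(sorted(transitive_dependencies(DEPENDS_ON, "bgp")))
print(sorted(dependents(DEPENDS_ON, "interfaces")))
```

Elements with many dependents are the ones where a change is hardest to predict, which makes them natural candidates for decoupling or for the divide-and-conquer approach described in the list.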

Security considerations

It is commonly stated that “complexity is the enemy of security”. In a complex network, security rules are derived from many different inputs. For example, an organisation is likely to have a corporate security policy, each department may have specific security rules and human resources may apply certain access rules for classes of users. In the overall network, all these rules have to be combined, which can lead to inconsistencies and conflicts.
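The way rules from different inputs can collide is easy to show in a minimal sketch. The rule format (source, user class, resource, action), the sample rules and the `find_conflicts` helper are all hypothetical and are not taken from any real policy system.

```python
from collections import defaultdict

# Hypothetical rules from three sources: (source, user_class, resource, action).
POLICY_RULES = [
    ("corporate", "contractor", "finance-db", "deny"),
    ("department", "contractor", "finance-db", "permit"),
    ("hr", "employee", "hr-portal", "permit"),
    ("corporate", "employee", "hr-portal", "permit"),
]


def find_conflicts(rules):
    """Return (user_class, resource) pairs for which sources disagree on the action."""
    actions = defaultdict(dict)
    for source, user_class, resource, action in rules:
        actions[(user_class, resource)][source] = action
    return {
        key: by_source
        for key, by_source in actions.items()
        if len(set(by_source.values())) > 1
    }


for (user_class, resource), by_source in find_conflicts(POLICY_RULES).items():
    print(user_class, resource, by_source)
```

Here the corporate and departmental policies disagree about contractor access to one resource. Which rule wins in the deployed network depends on how the rules happen to be combined, and that is the kind of outcome that is hard to predict.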

Predictability is important to ensure security. The more complex a network is, the less predictable it becomes, and the more likely it is that an oversight leads to a security vulnerability. While a certain level of complexity is inevitable to reach a reasonably secure state, too much complexity can result in the opposite: a less secure network.

Conclusion and future work

As you can see, it is difficult to define network complexity; so far most work has been theoretical (see the wiki page and paper in the reference section at the end). We rely on empirical evidence from operators and would appreciate input from this community. In addition to the complexity survey sent out before RIPE 61, we will distribute a more detailed questionnaire prior to the next RIPE meeting in May 2011. We plan to organise a workshop at RIPE 62 so this topic can be discussed in more detail. In the meantime, we would like to encourage you to leave comments under the article or contact us at labs@ripe.net.

References

RIPE 61 Network Complexity Panel webcast recordings

Network Complexity Panel Presentation by Michael Behringer

Network Complexity Panel Presentation by Geoff Huston

Background paper by Michael Behringer: Classifying Network Complexity

For more details on this topic, please refer to http://networkcomplexity.org
