Last month we covered the 2015 leap second ahead of the insertion of a leap second at the very end of 2016. As stated previously, leap seconds can trigger poorly-tested code paths; leap second handling always unearths bugs and issues. This one was no exception!

Cloudflare's blog carried an excellent review of how the leap second impacted their service. In short, 0.2% of CNAME queries and 1% of HTTP requests were affected, non-trivial numbers given the size and visibility of their services. Additionally, Cisco posted a bug report (note: registration required) detailing specific versions of their IOS-XE platform that were affected by the leap second, with a fault manifesting in the form of a crash and a reboot. This is obviously undesirable behaviour for network infrastructure!

This post reviews various measurements we made from around the 2016 leap second. We're interested in what the RIPE Atlas platform measured in the NTP pool this time around, and other behaviour we observed by looking closely at other data sources such as routing state and server configurations.

NTP Pool Behaviour

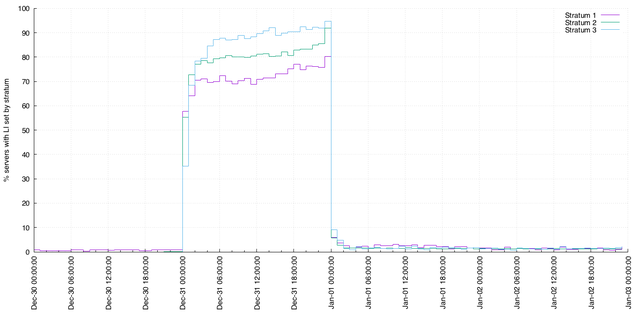

Last time around, we observed that not all NTP pool servers indicate that a leap second was to be inserted at the end of the month. This time, we observed very similar behaviour:

The key points here are:

- Generally, the pattern is the same as the last leap second, but with a higher proportion of stratum 1 NTP servers indicating the impending leap second.

- This time, a smaller proportion servers (in particular, in strata 2 and 3) indicate any impending leap second prior to 31 December.

- Immediately following the leap second, some servers continue to indicate that a leap second will be inserted in the future (i.e., they indicate a leap second at the end of January 2017). This proportion, of around 2% of all servers, is lower than the leap second 18 months ago but the behaviour is still erroneous.

Visualising the NTP Offset

As noted in the previous post, most of our RIPE Atlas anchors are configured to skew system time towards NTP time as opposed to making one discontinuous step. In doing so, we sacrifice some accuracy (in the astronomical sense) in favour of stability (time is not discontinuous, and we avoid repeating timestamps in our measurements or triggering unpredictable bugs).

As before, the skewing process takes a few hours to fully converge, up to around 18 hours in many cases. Each of the anchors has a reasonably accurate clock, and the set of RIPE Atlas anchors is large enough to help us visualise what time "looks" like immediately following the leap second as hosts try to accommodate upstream changes (click to animate):

Noting that the distribution of offsets above is a side-effect of choosing to skew system time towards NTP time after the leap second, one advantage of smearing the leap second becomes intuitively clear: leap smear would elongate or shorten seconds for a few (predictable) hours across the leap boundary. Thus, hosts will skew their time towards the smeared clock repeatedly during the smear, accommodating multiple small deltas instead of one large delta. The result should be a clock which is not astronomically accurate during the smear but which is more stable than we observe above, and which is not so jarring as an abrupt jump.

(Some of our) RIPE Atlas Anchors

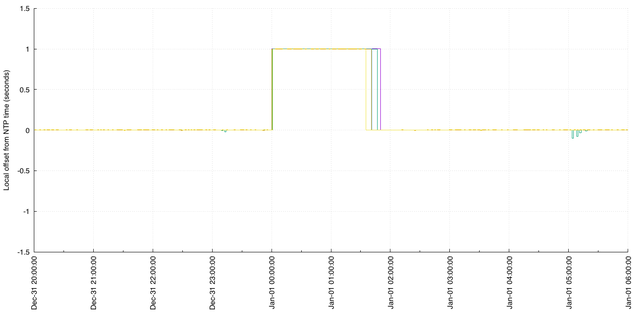

While many of our RIPE Atlas anchors are configured to skew time back towards UTC when a change is detected (as above), some of the older anchors are configured to observe the leap indicator in NTP responses and respond to it by stepping system clocks backwards. For those anchors, we observed this step pattern by measuring system time relative to another NTP server:

The step-up reflects the moment the NTP clock pauses for one second, to accommodate the leap; after this point, these system clocks are +1 seconds out of sync from NTP. The subsequent steps down are when the anchors modify their system clocks and step backwards by one second.

The erroneous behaviour is that the step backwards didn't happen on the leap second; the problem here is that it took two hours for the anchors to respond to the event. They should have observed a leap indicator in responses from NTP servers and, having done so, should have inserted a leap second to represent 2016-12-31T23:59:60. If that had been the case, the one-second offset would not have been observable in the plot above. This behaviour runs contrary to our expectations.

To understand this glitch, we were able to review the logs on these RIPE Atlas anchors. The logs suggest that the ntpd daemons did not observe any leap indicators from NTP servers, but instead observed a discrepancy between local time and the configured NTP servers more than one hour after the leap second had happened. Some time passes between ntpd first observing the discrepancy, and deciding to step the system clock back by the difference to compensate.

That doesn't explain all of the delay, however. We also inspected the logs on the NTP servers, and they show similar behaviour: they did not observe leap indicators from their upstreams, and so they did not automatically insert a second either. Therefore, some of the delay seen in the plot comes from the NTP servers these anchors were configured to use: some time passes between their scheduled NTP queries and the first observance of a discrepancy followed by time taken to act on the discrepancy, before any clients (in this case, a small set of anchors) can observe a discrepancy. In this case, the leap second cascades downstream as if each client were reacting to an error condition, rather than responding to a pre-planned (and well-known!) event. The whole process took up to two hours to converge rather than happening very close to the actual leap second.

By measuring this, we're able to understand how our systems actually operate and this helps us modify their behaviour for next time. In this case, the time servers affected are not public time servers, and those servers were configured to use the NTP pool. For future leap seconds we will be able to inspect the behaviour of these servers, or modify their time sources completely.

Relatedly, it's not always the case that a late clock change in an NTP server suggests it's unaware of the leap second. There are cases in the NTP pool where servers indicate a forthcoming leap second after the leap second took place, and where the server is apparently in reasonable sync with NTP, but eventually inserts the leap second minutes or hours after the event and only then resets the leap indicator flag. These present an interesting (and, again, erroneous) edge case where the server indicates the leap second and clients will insert it, but the server does not until much later.

BGP Behaviour

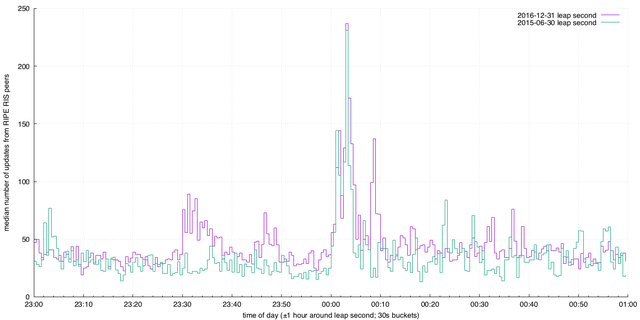

Inter-domain routing traffic is inherently noisy. Networks frequently modify their peering relationships, advertise new space, withdraw old space, or modify how they carve up their address space, and this collective noise, or churn, is quite typical of the system. Noise around a particular event, however, can be interesting.

We were interested in whether the leap second led to a change in observable behaviour if we measured Border Gateway Protocol (BGP) updates. As a first cut, we took the number median number of updates per RIS peer in 30 second buckets across the leap second boundary. We added 2015 to correlate, and in both cases we notice a spike in activity:

We're interested at the moment in the set of origins that appear to cause the spike, and we're going to focus on this event as an example of possible software glitches affecting global routing and reachability.

Notably, we don't spot quite the same pattern on prior leap seconds, suggesting the behaviour is at least not consistent or that new hardware deployments have introduced a new bug. Given the bugs that Cisco publicised earlier, it's not unreasonable to assume that people are buying products that do not handle leap seconds gracefully. We intend to investigate this further.

Summary

NTP configuration is hard. Handling leap seconds is hard! Not least because they happen rarely enough that they trigger systems and applications in ways that nobody thought to test.

Here, we've observed multiple behaviours around the leap second event:

- Infrastructure configured correctly that relied on external sources (which did not behave as anticipated). In most cases, systems will rely on external time sources, whether it's the NTP pool, another well-known managed NTP service, or NTP servers inside cloud deployments (notably, these are normalising around smearing the leap second to avoid stepping clocks backwards and to avoid triggering untested leap indicator code).

- Services and products that relied on time always stepping forwards. Cloudflare's failure rate is small, but non-trivial. Router bugs could be catastrophic.

- Other tertiary effects: an uptick in BGP churn is rarely a problem in itself, but it's emblematic of unintended behaviours elsewhere in the network: routers rebooting and BGP sessions resetting, for example.

As for future leap seconds, the most we know is that there won't be one at the end of June. There may, or may not, be one at the end of December, but we won't know for some time.

If you run infrastructure and you were affected, consider how to avoid these problems now rather than later! If you run anything that relies on sub-second timer granularity, consider how the leap second impacted your data, and how to avoid this in future.

Comments 0

Comments are disabled on articles published more than a year ago. If you'd like to inform us of any issues, please reach out to us via the contact form here.