RIPE Atlas and the Routing Information Service (RIS) are highly valued services that the RIPE NCC provides to its members and the wider community. Because of the very large amounts of data we collect and store, these services must be built on a cost-effective platform that lets us provide them in a financially sustainable way for many years to come.

Some historical perspective

Since their inception, both RIPE Atlas and RIS have provided access to all historical data ever collected. Currently, RIPE Atlas holds nearly one petabyte of data, while RIS contains approximately one hundred terabytes. This data holds immense value for various stakeholders, offering insights into the Internet's history in terms of routing (since 1999 for RIS) and Internet connectivity and reachability (since 2011 for RIPE Atlas).

A fundamental assumption behind providing full historical data for these services was that storage costs would decrease at the same rate as data volume increased. The idea was that, once the core infrastructure was established, we could expand data storage at a consistent cost by upgrading to newer, more powerful servers. The underlying technologies that store the data, Hadoop and HBase, were designed on the premise of using inexpensive commodity hardware. Given what we knew at the time, the assumption seemed reasonable.

However, this assumption didn’t stand the test of time. Currently, we operate a significant data centre infrastructure, comprising approximately 46 racks spread across two data centres in Amsterdam. Half of this infrastructure, roughly 23 racks, is dedicated to storing RIS and RIPE Atlas data. These servers are nearing full capacity and require lifecycle replacement. If we continue to provide access to full historical data, significant additional investment will be needed. Consequently, we faced a choice: either cease providing access to historical data and opt for a smaller, more efficient storage solution or seek an alternative technical approach that enables sustainable access to historical data. We are currently in the process of implementing the latter solution, which I will describe in the following sections.

Vision for data centre usage

Our data centre costs currently approach a million euros annually. This figure covers housing and power, and excludes equipment and engineering costs. Our goal is to reduce these costs substantially by going from 46 racks to 23 by the end of 2024, and further down to 10 by the end of 2025. This reduction will save more than 70% of our current data centre expenses.
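As a quick sanity check on these figures, the rack reduction can be worked out directly. The short sketch below uses only the rack counts quoted above. It assumes, purely for illustration, that housing and power costs scale roughly with the number of racks, which this article does not state.

```python
def reduction_percent(before: int, after: int) -> float:
    """Percentage reduction when going from `before` racks to `after` racks."""
    return (before - after) / before * 100


# Rack counts quoted in the article.
RACKS_NOW = 46
RACKS_END_2024 = 23
RACKS_END_2025 = 10

print(f"End of 2024: {reduction_percent(RACKS_NOW, RACKS_END_2024):.1f}% fewer racks")
print(f"End of 2025: {reduction_percent(RACKS_NOW, RACKS_END_2025):.1f}% fewer racks")
```

Going from 46 racks to 10 is a reduction of about 78% in rack count, which is in line with saving more than 70% of housing and power expenses if costs do track rack count closely.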

These anticipated savings will not only stem from relocating RIS and RIPE Atlas out of our data centres but also from other efficiencies achieved by our IT department in managing the existing infrastructure. While this article primarily focuses on RIS and RIPE Atlas, we're open to detailing these additional savings in a separate article if there's interest.

However, there's a caveat: some of these savings will be offset by increased cloud costs, because the solution outlined below relies on cloud services. Nonetheless, we expect the new overall costs to be less than half of current expenses, and the setup to be future-proof.

Our vision is as follows: to maintain the data centre infrastructure for our crown jewels, such as registry and membership data, RPKI, DNSSEC and the RIPE Database. Less critical services that hold no personal, sensitive or confidential information can be hosted in the cloud. The choice of cloud provider will depend on the requirements of each service, but we prioritise European cloud providers whenever feasible. Additionally, we aim to adhere to industry standards (e.g. Kubernetes) so that we can move between providers should a better option emerge.

New storage for RIPE Atlas

In the middle of last year, we set a goal to reduce our data centre footprint by half by the end of 2024. In order to achieve that, we examined various options, including both cloud and in-house solutions and the usage of different technologies. Ultimately, we opted for a hybrid approach, combining cost-effective object storage in the cloud with building a new cluster using rented bare metal. This solution offers two major advantages: firstly, it enables us to store the majority of our data at a significantly lower cost, and secondly, it provides flexibility to adjust parameters for further cost optimisation, albeit with some trade-offs.

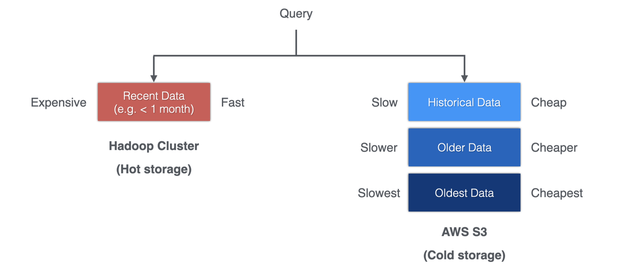

The concept behind this solution is simple: tiered storage, where frequently accessed data is kept on a faster, more expensive system, while older, less frequently accessed data is kept on a slower, more economical one. For hot storage, we opted to build a new cluster using rented bare metal. For cold storage, we chose AWS S3, an affordable object storage service that allows us to store large volumes of data at minimal cost, with the flexibility to change to a different provider if needed. Additionally, we modified the RIPE Atlas code to read data from either source transparently, so users do not need to know where a given result is stored.

As I write this, any queries to RIPE Atlas seeking data older than 2021 are served from our new S3 storage. In some cases this is slightly slower than our current cluster, but most queries should still be resolved within a few seconds, even in the worst case. In some instances, the response from S3 was actually faster than from the current solution.
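To make the tiering idea concrete, here is a minimal sketch of how a query's time range could be split between the two tiers. It is not the actual RIPE Atlas code. The function name `plan_query`, the tier labels and the exact cutoff instant (1 January 2021, UTC) are assumptions made for illustration, based only on the statement that data older than 2021 is served from S3.

```python
from datetime import datetime, timezone

# Assumption: the article only says data "older than 2021" is served from S3.
# The exact boundary instant used here is a guess for illustration.
COLD_CUTOFF = datetime(2021, 1, 1, tzinfo=timezone.utc)


def plan_query(start: datetime, stop: datetime) -> list[tuple[str, datetime, datetime]]:
    """Split the half-open range [start, stop) into per-tier sub-ranges.

    Returns a list of (tier, sub_start, sub_stop) tuples, where tier is
    "cold" (object storage) or "hot" (the bare-metal cluster).
    """
    if start >= stop:
        raise ValueError("start must be before stop")
    plan = []
    if start < COLD_CUTOFF:
        # Everything before the cutoff is read from cold storage.
        plan.append(("cold", start, min(stop, COLD_CUTOFF)))
    if stop > COLD_CUTOFF:
        # Everything from the cutoff onwards is read from the hot cluster.
        plan.append(("hot", max(start, COLD_CUTOFF), stop))
    return plan


if __name__ == "__main__":
    q_start = datetime(2020, 12, 1, tzinfo=timezone.utc)
    q_stop = datetime(2021, 2, 1, tzinfo=timezone.utc)
    for tier, sub_start, sub_stop in plan_query(q_start, q_stop):
        print(tier, sub_start.date(), sub_stop.date())
```

A query that straddles the cutoff is split into one cold and one hot sub-range, and the caller merges the results, so the user sees a single response regardless of where the data lives.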

Many of the parameters within our new solution are easily configurable. For example, AWS S3 offers automatic tiering for stored data, which gradually moves less frequently accessed data to even colder storage tiers. The specific tiers we utilise are customisable; we have the option to transition data all the way to Glacier storage, where it is stored at an extremely low cost but may take hours to retrieve. That is something we chose not to do at the moment but have kept as an option.
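One way to express this kind of configurable tiering is an S3 lifecycle configuration. The sketch below builds such a configuration as a plain dictionary. The rule ID, the day thresholds and the choice of storage classes are hypothetical, and this article does not say whether lifecycle rules or S3 Intelligent-Tiering are used in practice, so treat it as an illustration of the knobs involved rather than our actual settings.

```python
from typing import Optional


def build_lifecycle_config(ia_after_days: int = 30,
                           glacier_after_days: Optional[int] = None) -> dict:
    """Build an S3 lifecycle configuration as a plain dictionary.

    All thresholds are hypothetical. Passing glacier_after_days=None leaves
    the Glacier transition switched off, mirroring the choice described
    above to keep it as an option without enabling it.
    """
    transitions = [{"Days": ia_after_days, "StorageClass": "STANDARD_IA"}]
    if glacier_after_days is not None:
        if glacier_after_days <= ia_after_days:
            raise ValueError("Glacier transition must come after the IA transition")
        transitions.append({"Days": glacier_after_days, "StorageClass": "GLACIER"})
    return {
        "Rules": [
            {
                "ID": "tier-down-old-results",  # hypothetical rule name
                "Status": "Enabled",
                "Filter": {"Prefix": ""},  # empty prefix: applies to all objects
                "Transitions": transitions,
            }
        ]
    }


if __name__ == "__main__":
    print(build_lifecycle_config())                        # no Glacier tier
    print(build_lifecycle_config(glacier_after_days=365))  # Glacier enabled
```

The resulting dictionary has the shape that boto3's `put_bucket_lifecycle_configuration` accepts as its `LifecycleConfiguration` argument, so enabling or disabling the Glacier tier becomes a one-parameter change.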

Our aim is to have the new solution fully implemented by July 2024, with the old cluster decommissioned by August. This timeline will enable us to substantially reduce our data centre footprint and realise significant cost savings.

Next steps

The primary focus for us right now is to successfully migrate the RIPE Atlas storage from our data centres to the new solution. Currently, we are in the final stages of data extraction, which involves copying the data from Hadoop into S3. We anticipate completing this task within the next few weeks.

Simultaneously, we are diligently working on setting up the new cluster. Once this is completed, we can transition production entirely from the old cluster to the new one. This will free up space for our IT department to embark on the labour-intensive task of clearing out racks in our data centres and reorganising servers to accommodate the new, reduced space requirements.

Once these steps are successfully completed, we will proceed to the next phase: migrating the RIS backend. While the approach will likely be similar to that used for RIPE Atlas, there may be necessary modifications given the unique requirements of RIS.

We are proud to have found a solution that offers much more cost efficiency without compromising the quality of our data services. We look forward to hearing your feedback and comments on this significant undertaking.
