A new repository of social audits is more similar to the RIPE Database than you might think. We've documented some lessons learned and also what processes we've reused from the RIPE Database.

Background to SLCP

Each year the fashion industry is spending vast amounts of resources on factory audits, which are duplicative and not necessarily leading to improved working conditions. It is quite common for a factory to perform several similar audits per year, which take several weeks to complete, and then the data is distributed to one organisation which requested the audit.

To improve labor conditions in global supply chains, there needed to be a major change in the way that audits were done. Part of this change was to build a repository of factory verified assessments. A facility can then re-share their verified assessment data with any organisation that they want to, rather than completing a new audit. This will save time, effort and money. By 2023, this efficient redistribution of social data from one repository will save the fashion industry $134 million; money that will be redistributed to the factories to improve working conditions.

Each verified assessment is, in essence, a very large questionnaire made up of up to 3,000 questions, which is completed by the facility and then verifiers, approved by the Social & Labor Convergence Program (SLCP), visit the facility and verify and correct the assessment based on what they actually see. In total, each assessment can have up to 12,000 data points.

Why is this (a social labor repository) interesting to the RIPE community?

As the RIPE Database has been around for many years, we've used some solutions from the community-led decisions to help us with our repository which is a lot newer.

In light of the recent RIPE Database Requirements Task Force discussions on "What is accuracy?", I thought I should share our experiences and solutions, which might help the discussion.

It's important to remember that at the technical core of both the RIPE NCC and SLCP is a repository, and there are a lot of similarities in how these organisations and the communities work (see Table 1 below).

Some similarities and differences between the two setups

| RIPE/RIPE NCC | SLCP | |

|---|---|---|

| Community involvement by | Working Groups (WGs) |

Technical Advisory Committees (TACs); small TAC made up of 9 people Tech Group made up of larger set of interested parties including end users and technical service providers |

| Discussions and presentations |

Mailing lists WG sessions at RIPE Meetings Decisions made by consensus |

Bi-monthly Tech Group calls and Bi-yearly Technical meet-ups TAC calls every six weeks Decisions made by consensus |

| Formal documentation of processes and procedures | RIPE policies | Business rules |

| Name of the database | The RIPE Database | The Gateway |

| Community organisations include | ISPs, IXPs, Enterprise organisations, Governments etc. | Facilities, Manufacturers, International Brands and Retailers, Audit forms, Governments and Civil Society organisations |

| Database mission (high-level) |

Providing accurate registration information of Internet number resources Publishing routing policies by network operators (RIPE IRR) Facilitating coordination between network operators |

Securely store verified data that is collected from facilities through SLCP assessments Provide a transparent interface for end users to see basic information about all facilities and the status of their most recent assessments Securely distribute approved data to technical service providers |

| Database structure | Object related database in SQL setup | Non-structured database, each single dataset (a verified assessment) is made up of 3,000 questions and can contain up to 12,000 data points |

Table 1: Similarities and difference between the RIPE Database and SLCP

Experiences we learned from RIPE and solutions which might help the RIPE Database

Gateway profile accounts

Like with the registration of new RIPE NCC members, we take the creation of Gateway profiles very seriously. This is the starting point for a facility with SLCP. We ask for organisational details such as Company Name, Business License information and the geo-location details of each facility. One thing that we're fortunate with is that we have onsite verifiers visiting the facility, which means all of these details are checked and validated in-person. Currently, we run monthly reports which show any differences between what was put in by the facility and what was verified in-person. At the moment this is not a problem, however in the future we will automatically update profile data with the true verified data, if necessary.

Governance and change management

Having two virtual meetings per month really helps with agreeing on, and rolling out changes. During these meetings with our technical stakeholders/community, we discuss user issues and requests, upcoming releases, the backlog, and sometimes we demo changes. These calls are vital for our governance and transparency of changes to the database. Recently, a survey of our technical stakeholders showed that they found these calls to be extremely valuable as they "increase awareness" and "align development efforts". If any major changes are required to the registry or the APIs, then the functionality is discussed in this technical group and signed off by the TACs or Council (similar to an executive board).

Additionally, the calls allow us to perform some final User Acceptance Testing. If we can't get consensus on any of the topics from one call, discussions, and decisions will be continued on the next call due to the frequency of the meetings.

All calls are minuted, recorded, and hosted online for future reference. The calls are also manageable at the moment as the number of people who join the calls remains around 20-40, but having that regular touchpoint with the users, and the technical community, is very effective.

What is accuracy and how can a community design and agree data quality levels?

Accuracy is just a word and it’s a word which is used frequently when people talk about the RIPE Database. We are similar in that regard. It’s instinctive to say that all data should be accurate, but this is difficult to do when you have millions of records; as things grow, it'll be hard to enforce accuracy. Additionally, it's extremely important to make sure that the data in the system is of a high quality as business decisions are made from this dataset. We have to be very mindful that there could be some serious financial consequences for all users of the system if the data is incorrect.

To help define accuracy, we use a Data Quality Framework. Part of the Data Quality Framework are the Data Quality definitions. There are many Data Quality Framework tools which can help but as our dataset is relatively simple, we settled for a simple matrix to get us going (examples of which are shown below). As a community, we decided the accuracy levels as well as the processes surrounding them. This was done via two in-person meetings, a lot of online calls, and five changes to our business rules regarding data quality. All of this happened over several months. Despite the dataset being simple, it wasn't an easy operation. Collaboration was key, and it was important to have the end users and technical operators in the meetings and on the calls to discuss what data is accurate (to each individual) and how the data is actually used.

Once we defined what data needed to be accurate, we then broke down the definition of accuracy per data item. We simply did this by looking at the data structure of the questionnaire and then applied the quality levels in a table.

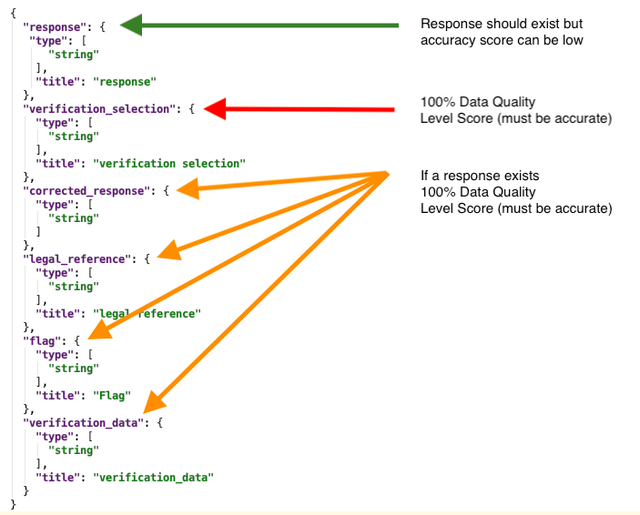

Below you an see a sample data structure of one of the questions:

Figure 1: Sample data structure of a single question with the data accuracy levels defined

In the table below you can see an example of data quality and accuracy definitions which are set to all of our questions.

| Data Item | Data Quality Level | Accuracy Metric - What makes this accurate? Definition of logic to enforce the accuracy |

|---|---|---|

| All question values | 100% | Response should be under 1,000 characters. |

| Verification selection (per question) | 100% | The Verification selection input should always have an answer of Accurate, Updated During Verification, Inaccurate. This is handled by the JSON Validation Schema. |

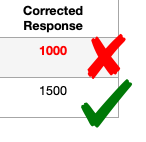

| Corrected response (per question) | 100% | If Verification Selection of a given question is updated during verification, the corrected response should not be null and should be different from the response object value (see Figure 2). |

Table 2: Sample of data quality and accuracy definitions

More complicated data quality and accuracy metrics

As some of our questions are conditional, and the responses to some questions trigger more questions, we also have more specific data quality definitions for specific question types (see Table 3)

| Data Item | Data Quality Level Score | Accuracy Metric - What makes this accurate? Definition of logic to enforce the accuracy |

|---|---|---|

| Question key wh-5-2. (Each of the 3,000 questions has a unique ID, for example wh-5-2 or wh-5-1) | 100% |

If question wh-5-1 is validated as accurate and has the value True then the verified response of wh-5-2 should also be True and verified as accurate Also - must be a boolean response |

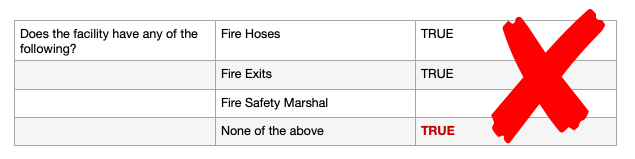

| Any multiple choice question | 100% |

Any multiple point questions shouldn't have "None of the above checked" while having more than one additional checkbox checked in the same question set (see figure 3) Also - array of strings response. Array can't be empty |

| String-field questions - shouldn't contain non-ASCII characters | 80% | It was decided to have a simple ASCII set up to the question responses. But as the database supports UTF-8 characters, no alerts of the data will be raised unless over 20% of the answers contain non-ASCII characters. |

Table 3: Sample of some more specific data quality definitions

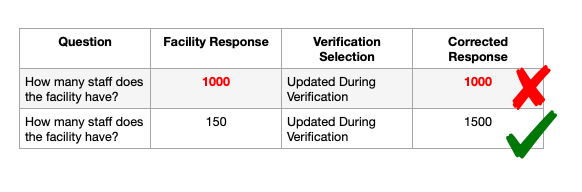

Below you can see an example of data quality failings:

Figure 2: Example of data quality failings. In the first row you can see that the facility response and the corrected response match, which should never happen. Either the verifier has entered the data incorrectly or there has been a data transfer issue in the submission to the database. The second row shows what a valid response should look like.

Figure 3: Example of data quality failing. This failing is due to the fact that you can't have "None of the above" selected with other valid inputs in the same question set

Once the data quality levels and accuracy metrics were defined by the community, we updated the business rules. At this point we turned this into Quality Assurance (QA) code. Currently there are 22 data quality checks which are run over each assessment. Most of the QA checks affect each question and verified response. This provides our end users (the brands) with really good accurate data and at low cost.

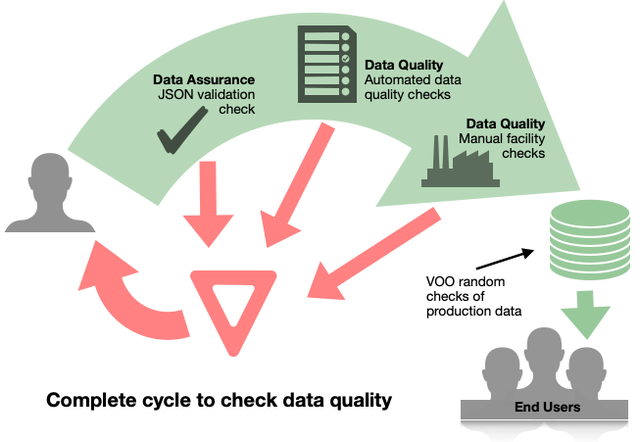

Automated data assurance and data quality checks

Data assurance

When the data is initially posted to the system it is validated by a JSON validation schema. The schema basically checks if the data points which should be string, boolean, arrays and integers, are as they should be. If any errors happen here, then the assessment is not published and will have to be fixed by the submitting user. The JSON validation schema is created and managed in the Gateway and is version-able, making it backwards compatible with older datasets.

Data quality

Compared to the RIPE Database, the Gateway has the luxury of data processing time. Live updates aren't queried in real time, but should be online within a short period, this means that we can hold up assessments from being published if they fail the data quality checks. This is handled by a simple setup. We use Jenkins to poll any new assessments and trigger the Python QA scripts, which contain the business/QA logic. If a new assessment is posted, it is held in a pre-publication state while these automated checks are run. If the QA checks pass, then the assessment is pushed to the next stage and is ready for publication. This whole process takes under 5 seconds. If the QA checks fail then the assessment is sent back to the submitter to fix. We allow the submitter to have up to two weeks to resolve the issue(s) before another system flag is triggered.

Another quality check is performed by the data owner - the facility. Before the assessment is available to the desired end users, the facility can do a 'once-over' of all of their data. If they are happy with the data and want to publish their content quickly, they can push it live immediately.

External review

Another interesting part to the data quality cycle is the Verification Oversight Organization (VOO). Predominantly the VOO oversees the quality and integrity of the verification process, remaining relatively uninvolved in the tech process. However, they do give us great insight into common quality errors in the verification data, which can then be added to the business rules and subsequently (QA) coded. They are an important part of upholding our data quality and are also part of our technical calls and meet ups.

In the future we can see whether machine learning can be used to analyse the data quality of our full dataset or to flag common (or new) quality errors which we will have to address as a group.

Figure 4: All stages of our data quality cycle

Reducing the scope of the database

Like any organisation, once you have a rich dataset it is very easy to keep adding things to it, especially if you have a noSQL database. But even if it's easy to do, we don't really want to. We keep reflecting on the core goals of the dataset and once a year we analyse what data should go into the repository. We ideally want to reduce the number of questions that facilities are asked. Currently, 3,000 questions are a lot of questions to answer. A smaller question set will improve the user experience. Also, the more data you have to store the harder it is to maintain, which could result in a reduction of data quality.

We have one TAC actively looking into reducing the question set for 2021. We're doing this in a data-driven way and are looking at how many questions are actually being answered per assessment. To help manage this we built a reporting system where we can see what questions are never answered. On average we can see that 1,500 questions are answered and this will help us reduce the question set to a much more manageable level.

Summary

Although this process took some time, it was important to make sure that the foundations of the technical setup have been initiated to a high standard, which we can then develop upon in the future. Similar to RIPE, it was important to get everyone's input and feedback, and then build consensus. This is a database that will be used for critical work decisions for a vast array of organisations. All of these end users will use the data in a slightly different way, therefore their insight and input is extremely important especially defining what data quality is.

I think it's important that even though the RIPE Database is in its own field of storing Internet number resources, there are lessons that can be applied to any field. Large repositories of data are not just isolated to the tech industries anymore, and the RIPE community can show the way on how to handle large datasets.

Equally, I am not suggesting that the RIPE Database should undertake any of the solutions we've implemented, in some cases the RIPE NCC/RIPE have implemented better solutions that we will work towards. I thought I should share our setup and findings. Although the industries are very different, we seem to be trying to solve the same technical problems.

Comments 1

The comments section is closed for articles published more than a year ago. If you'd like to inform us of any issues, please contact us.

Theodoros Polychniatis •

Great article Adam, thanks for sharing!