This year’s Symposium on Computers and Communications (ISCC) touched on many topics, such as edge and fog computing, new challenges related to smart computing and networking, big data computing, and communications performance. Read on to see a few highlights.

I try to attend at least one new-to-me academic conference every year in order to broaden the mix of academics I reach and attract to RIPE Meetings and RIPE NCC Regional meetings via RACI. I get to meet new people and hear about new ideas, approaches and techniques. IEEE, the Institute of Electrical and Electronics Engineers, has organised the Symposium on Computers and Communications (ISCC) since 1995. Despite the event usually taking place around the Mediterranean region, I was surprised to find a great geographical representation, with speakers from all over Europe but also good numbers from Asia, the Americas and the Middle East. With four sessions running in parallel it was impossible to attend everything, but I hope to give you snippets of a few presentations that grabbed my interest and let you dig in further, should something pique your curiosity. And lastly, please tell me in the comments below if you found this useful. I’d like to use your feedback to decide whether to go again next year.

Best Paper Awards

Let’s start with the papers acknowledged with the best paper award.

First, WiFi. Traditionally, in environments with a high density of access points (APs), user stations (STAs) choose the AP with the strongest signal. This leads to overcrowding of some APs and the underutilisation of others. Techniques such as Multi-Armed Bandits dynamically map and distribute STAs among the available APs. Yet STAs cannot see the whole network and therefore cannot predict the network behaviour. To address this challenge and achieve a more efficient use of the network resources, Marc Carrascosa and Boris Bellalta from Pompeu Fabra University in Spain developed the Opportunistic ε-Greedy with Stickiness approach, where an STA stops searching for APs as soon as it finds one that caters to its needs and only resumes the search after several unsatisfactory association periods. Their technique reduces the network response variability and the time needed for STAs to find a suitable AP.
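To make the idea more concrete, here is a minimal Python sketch of an ε-greedy station that "sticks" to a satisfactory AP. It is only my reading of the description above, not the authors' implementation: the class name, the `required_throughput` and `patience` parameters and the reward bookkeeping are all assumptions made for illustration.

```python
import random


class StickyEpsilonGreedySTA:
    """Hypothetical station (STA) choosing among n_aps access points."""

    def __init__(self, n_aps, epsilon=0.1, required_throughput=1.0,
                 patience=3, rng=None):
        self.n_aps = n_aps
        self.epsilon = epsilon                # probability of exploring a random AP
        self.required = required_throughput   # assumed "satisfied" threshold
        self.patience = patience              # bad periods tolerated while sticking
        self.rng = rng or random.Random()
        self.mean_reward = [0.0] * n_aps      # running average throughput per AP
        self.pulls = [0] * n_aps
        self.current_ap = None
        self.sticky = False
        self.bad_periods = 0

    def choose_ap(self):
        # Stickiness: keep the current AP while it is considered good enough.
        if self.sticky:
            return self.current_ap
        # Otherwise fall back to plain epsilon-greedy selection.
        if self.rng.random() < self.epsilon:
            ap = self.rng.randrange(self.n_aps)
        else:
            ap = max(range(self.n_aps), key=lambda a: self.mean_reward[a])
        self.current_ap = ap
        return ap

    def observe(self, throughput):
        """Record the throughput seen during the last association period."""
        ap = self.current_ap
        self.pulls[ap] += 1
        self.mean_reward[ap] += (throughput - self.mean_reward[ap]) / self.pulls[ap]
        if throughput >= self.required:
            # Needs are met: stop searching and reset the counter.
            self.sticky = True
            self.bad_periods = 0
        elif self.sticky:
            # Only resume searching after several unsatisfactory periods.
            self.bad_periods += 1
            if self.bad_periods >= self.patience:
                self.sticky = False
                self.bad_periods = 0
```

In each association period the station would call `choose_ap()`, measure what it gets, and feed that back through `observe()`. A single bad period does not trigger a new search, which is the part that should dampen the constant re-association you get with plain ε-greedy.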

Moving on to something quite different. Managing smart cities requires collecting and analysing large amounts of data, often gathered from wearable personal devices via mobile crowdsensing (MCS) technologies. Dimitri Belli and colleagues from the University of Pisa and the University of Bologna address the synergy between mobile crowdsensing and multi-access edge computing. They explore the differences between using localised, fixed servers at the edge of the network (called Fixed Mobile Multi-access Edge Computing nodes or FMECs) and users’ devices configured by the MCS platform to act temporarily as mobile edges (called Mobile Multi-access Edge Computing nodes or M2ECs). Their results indicate that M2ECs can reach users faster than FMECs, although in future studies they plan to test these results beyond the homogeneous community of students in the same town.

And finally, for a slightly more social-science-oriented topic, Munairah Faisal Aljeri from the Kuwait Institute for Scientific Research (KISR) analysed the spatiotemporal features of users’ geotagged tweets and revealed human mobility patterns, such as the relation between movement flow and the time of the day or week.

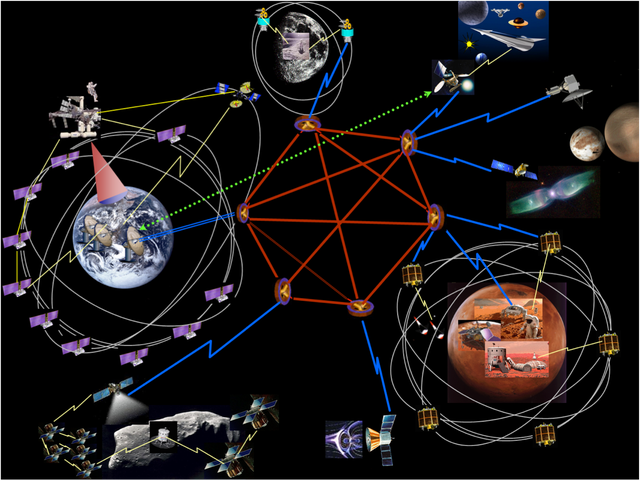

The Internet of Space

Ever felt like the speed of light is too slow? If you are on or trying to communicate with another planet, that might well be the feeling you get. Interplanetary networking requires transmitting data across vast distances, with frequent delays and disruptions, significant loss of data, one-directional links and high error rates. Enter Delay- and Disruption-Tolerant Networking (DTN). Think of it as a network of smaller networks, which supports interoperability by accommodating long delays and disruptions both between and within networks, and by translating between their communication protocols. DTN uses automatic store-and-forward mechanisms. This means that when a packet is received and forwarding is not currently possible, it gets stored for future transmission when forwarding is expected to be possible. Although originally designed for space, DTNs can be useful in many commercial, scientific, military and public-service applications with frequent disruptions, intermittent connectivity and high error rates, such as disaster response and wireless sensor networks. Unfortunately, you need to pay for the article presented, but here is a link to a tutorial around DTNs from the InterPlanetary Networking Special Interest Group. Speaker Vassilis Tsaoussidis, Democritus University of Thrace, cautioned that, as deployment is still restricted to trusted entities, security issues might be significant.

The Disruption Tolerant Network protocols will enable the Solar System Internet, allowing data to be stored in nodes until the transmission is successful (Image: NASA).
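The store-and-forward idea is easy to sketch in a few lines of Python. This is a toy model under my own assumptions, not the DTN Bundle Protocol itself: the `DTNNode` class, its method names and the boolean `link_up` flag are all hypothetical and only illustrate "store when the link is down, forward when a contact opens".

```python
from collections import deque


class DTNNode:
    """Toy node that stores bundles until the next hop is reachable."""

    def __init__(self, name):
        self.name = name
        self.buffer = deque()  # bundles waiting for a contact, oldest first
        self.inbox = []        # bundles this node has received

    def accept(self, bundle):
        self.inbox.append(bundle)

    def send(self, bundle, next_hop, link_up):
        """Forward immediately if possible, otherwise keep the bundle."""
        if link_up:
            next_hop.accept(bundle)
            return True
        self.buffer.append(bundle)
        return False

    def flush(self, next_hop, link_up):
        """Forward stored bundles in arrival order once a contact opens."""
        sent = 0
        while link_up and self.buffer:
            next_hop.accept(self.buffer.popleft())
            sent += 1
        return sent


# Example: the relay is out of sight, so the bundle waits in the buffer.
earth = DTNNode("earth")
relay = DTNNode("relay")
earth.send("telemetry-request", relay, link_up=False)
# Later, when the contact window opens, everything stored is forwarded.
earth.flush(relay, link_up=True)
```

A real deployment would also need persistent storage, bundle lifetimes and custody handling, all of which this sketch leaves out.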

Security

Speaking of security, wouldn't it be great if data could protect itself? Standard methods for detecting data corruption typically produce, store and verify mathematical summaries of the content such as Message Authentication Codes (MACs) or Integrity Check Values (ICVs). These are costly to maintain and use, and result in latency, storage and communication overheads. An alternative methodology, implicit integrity, detects corruption by looking for patterns instead of processing content summaries. The basic idea behind implicit integrity is that typical user data has patterns (repeated bytes or words), while decrypted data resulting from corrupted ciphertexts does not. Thus, corruption can be detected by examining the entropy of decrypted ciphertexts. Here is a security analysis of constructions called “random oracles according to observer functions”, which look for unusual behaviour (and establish the probability of this behaviour) to determine whether data has been corrupted or not. The results show that these constructions hold up well against both input-perturbing adversaries and content replay attacks.
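As a rough illustration of the pattern check at the heart of implicit integrity, the Python sketch below accepts a decrypted block only if some byte value repeats often enough. The function name and the `min_repeats` threshold are my own assumptions, and the encryption step is left out entirely; the constructions analysed in the paper are considerably more careful than this.

```python
from collections import Counter


def looks_like_user_data(block: bytes, min_repeats: int = 4) -> bool:
    """Return True if some byte value occurs at least min_repeats times.

    Typical user data tends to contain repeated bytes, whereas the
    decryption of a corrupted ciphertext should look close to random.
    The threshold here is illustrative and would need tuning to the
    block size to keep false accepts acceptably rare.
    """
    if not block:
        return False
    return max(Counter(block).values()) >= min_repeats


# Text with repeated letters passes the check...
looks_like_user_data(b"hello world, hello")
# ...while a block in which every byte is distinct does not.
looks_like_user_data(bytes(range(16)))
```

The appeal is that nothing extra has to be stored or transmitted alongside the data: the check runs on the decrypted content itself.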

What about network corruption? Link flooding attacks (LFAs) are a type of DDoS attack that congests critical links using legitimate-looking low-rate flows, which makes the attack traffic indistinguishable from legitimate flows for traditional defence tools such as firewalls or IDSes. Meet LF-Shield, a tool that detects and mitigates these attacks using software-defined networking (SDN). LF-Shield collects flow statistics, detects congested links, identifies malicious bots by extracting end-hosts’ traffic features and then classifies end-hosts by type using a deep convolutional neural network (ConvNet). It then blocks the identified malicious bots (with an accuracy of 96.4%) and limits the bandwidth of inactive or newly-accessed end-hosts. The experimental results show a 93.1% reduction in link degradation ratio. The authors caution that the tool is somewhat vulnerable to false positives and they are working on better distinguishing normal from attacker traffic.
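The first two steps, collecting flow statistics and spotting congested links, can be sketched very simply in Python. This is only an illustration of why many low-rate flows are a problem, not LF-Shield's actual logic: the `(link_id, src_host, rate_bps)` record format, the `capacity_bps` mapping and the 90% threshold are assumptions of mine.

```python
from collections import defaultdict


def congested_links(flows, capacity_bps, threshold=0.9):
    """Return the links whose summed flow rate reaches the threshold.

    flows: iterable of (link_id, src_host, rate_bps) tuples.
    capacity_bps: dict mapping link_id to link capacity in bit/s.
    """
    load = defaultdict(float)
    for link_id, _src_host, rate_bps in flows:
        load[link_id] += rate_bps
    return sorted(
        link for link, total in load.items()
        if total >= threshold * capacity_bps[link]
    )


# Each flow is modest on its own, yet together two of them fill link A-B.
flows = [
    ("A-B", "h1", 4.0e6),
    ("A-B", "h2", 5.5e6),
    ("B-C", "h3", 1.0e6),
]
capacity = {"A-B": 10e6, "B-C": 10e6}
congested = congested_links(flows, capacity)
```

Deciding which of the hosts behind a congested link are bots is the hard part, and that is where the ConvNet classifier described above comes in.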

Let's close the security chapter with research combatting many organisations’ worst nightmare: identity misuse, where an attacker impersonates someone from the inside to wreak havoc. As in the previous research, many detection techniques try to spot anomalies to identify a compromised account. Host-based data that profiles human behaviour (how hard you press the mouse button, how fast you scroll, favourite commands or files, or how long different actions take you) can quite accurately pinpoint whether someone is hijacking your account. These techniques, of course, pose considerable privacy challenges and have a high deployment cost. What if you modelled user network behaviour using network packet sketches (IP, port, protocol), which are less sensitive and cheaper to collect? Check this research out and keep in mind that the authors caution that it is still a work in progress. They recognise that at the moment it is not very effective against smarter attackers, such as intruders hiding behind a NAT router, which is what they will focus on next.

Traffic Classification

Taking a few steps back, how do you keep your network secure and well managed? How do you accurately analyse, classify and measure traffic? Recent developments such as traffic encryption, the increased use of dynamic port numbers for different applications and the proliferation of applications running over HTTP/HTTPS render traditional traffic classification approaches unable to provide good visibility of users’ activities. Read about a new algorithmic classification of encrypted social media, video and audio traffic. It doesn’t rely on particular application layer header fields that can be easily modified, but uses off-the-shelf traffic flow analysers and a machine learning algorithm with an active measurement module, which can automatically train itself. Test results on different networks are promising and show that, using decision trees, the trained model classifies traffic correctly with a true positive rate of 85% and a false positive rate of 5%. The authors are planning to improve the accuracy of the system by designing different ensemble classifiers to aggregate the output of the binary classifiers.

Information-Centric Networking

And now, for those of you who like to think outside the box, let me go through some next-gen research, focusing on new paradigms. Content on the Internet contains semantic information that can be used to identify and discover it. Over the last decade, Information-Centric Networking (ICN) has been trying to establish uniquely named data as a core Internet principle, making it independent from location, application, storage and means of transportation and leading to more efficiency, scalability and robustness. Assuming users are willing to accept information that differs somewhat semantically from what they requested, Big Packet Protocol (BPP) can be used to reduce latency by enabling network nodes to answer requests with semantically matching, locally cached content.

So when can ICN be useful? The constant connectivity to the cloud we need today to gather and process data and initiate action causes a lot of traffic and high delays. Not to mention it is sometimes unavailable at the exact moment you need it most. What if end-user and edge-network devices formed edge computing swarms to complement the cloud with their storage and processing resources, spurring decentralisation, application development beyond the cloud, low-latency services and new markets for storage and processing? Read about Named Functions at the Edge (NFE), a platform where arbitrary code can be executed in a network exchanging named content and data, using the resources of devices at the edge and compensating each party for this.

Last, but not least, acknowledging the multitude of clean-slate network architectures proposed recently, the authors of the next paper propose a framework for inter-network architecture mobility. The framework, doubling as both an anchor point and a gateway, converts messages between protocols, allowing mobile nodes (MNs) to move across different network architectures (IP and NDN, Named Data Networking) without losing access to the content they are using.

Conclusion

I will leave you with this for now. Don’t worry if you didn’t read in detail and just skimmed through the interesting bits. But please do let me know what you thought. Was it interesting? If you want to see for yourself, in 2020 ISCC will be hosted by IMT Atlantique in the lovely Saint-Malo (date to be confirmed but likely beginning of July).
