Every day the Internet experiences failures that affect the reachability of certain zones (i.e prefixes), such as cities, countries, etc. Whenever such events happen, the routing information, which is the path that information must follow in order to get from one place to another, must be updated so that the failure is circumvented and the zone becomes reachable again.

The current resiliency of the Internet makes this problem almost unnoticeable to the common user, but the truth is that during this reconvergence process, a considerable amount of traffic is lost. This is a problem for two main reasons: first, because the revenues of ISPs depend on the amount of traffic they are able to route; and second, because every day more and more sensitive services depend on the Internet to work.

In this article, we propose a simple modification over BGP, the current algorithm for propagating the routing information, which aims to mitigate the consequences of the reconvergence process.

Introduction

The problem of Internet convergence is not new, and several research projects have proposed improvements in recent decades. These improvements, however, always aim to enhance the same two factors: the time it takes to recover and the amount of information exchanged after a failure. Clearly reducing these two factors directly reduces the impact of a failure. We, on the other hand, follow a completely different and novel approach based on the following three observations:

- Observation 1: A single failure may affect a large number of prefixes. It is very common for two routers in the Internet to exchange a great amount of information. This means that whenever there is a failure between these two routers a great number of prefixes must be updated in order to restore their reachability.

- Observation 2: The routing information is not updated at the same time for all the prefixes. The reason for this is that the reconvergence process involves a number of operations and message exchanges that are not simultaneous for the different prefixes in question.

- Observation 3: Only a small number of prefixes really matter. Some destinations, such as Youtube and Netflix, are more relevant than others due to the amount of traffic they represent, whilst others may be relevant for other reasons. The key is that usually only a few zones are really important for each Internet service provider.

The current way of recovering the routing information follows lexicographical order. This is that the reachability of zone ’A’ is going to be restored before the reachability of zone ’B’, and so on. The problem with this approach is that the numbering of the prefixes is completely unrelated to its relevance, and the traffic directed to the zone is lost until the reachability is restored.

Our algorithm, called Power Prefix Prioritization (PPP), proposes a simple change: significantly reduce the impact of a failure event by ensuring the most relevant prefixes converge first. We achieve this by using a rank of prefixes, which can be manually or automatically configured (i.e. by inspecting the amount of traffic to each zone), and determines the order to follow when propagating routing information.

Our experiments

In order to validate the benefits of our proposal we used two different approaches. The first one consisted in using real traffic traces obtained from the link of one Internet Service Provider (ISP) to its own provider and estimate the traffic losses of PPP and the current algorithm. In the second approach we simulated two of the most common configurations among ISPs, and at one point we artificially generated a failure. Since our main focus is to decrease the traffic losses, in both experiments we used the same methodology to measure the benefits of using PPP. We calculate the traffic losses obtained using PPP (using the traffic towards the zone as the ranking parameter) and the losses using the alphabetical approach. Moreover, we have developed a mathematical model to bound the minimum traffic savings that can be expected by using PPP based in the traffic distribution. The following sections detail the real values obtained with each approach.

Model

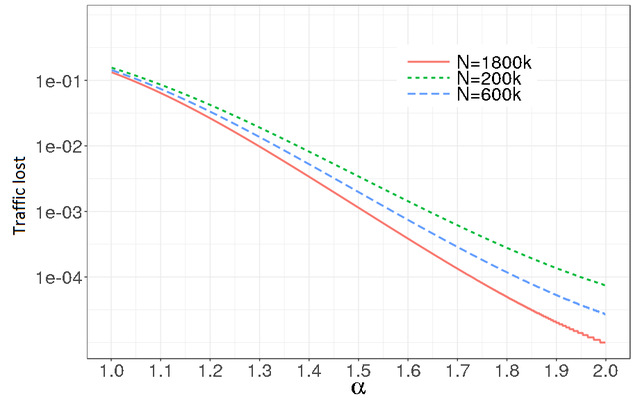

It is largely accepted that the Internet traffic follows a power law distribution for the amount of bytes or packets over the different prefixes. In other words, only a few prefixes concentrate the large majority of the packets/bytes. We used this as a basis for developing a mathematical model that uses the scaling factor of the traffic distribution, the number of packet samples, etc., to bound the benefits of using PPP.

The following image shows the bound of the benefits for different values of the scaling factor (alpha) of the power law distribution as predicted by our model. The different curves represent the amount of prefixes in the BGP table (N).

The results clearly show that even for low values of the scaling factor of the power law the traffic losses can be reduced to 10% of the original losses. Using real traces we found that the scaling factor to be around 1.3, meaning that in real scenarios we can expect to reduce this losses to less than 1% of the original.

Real traffic traces

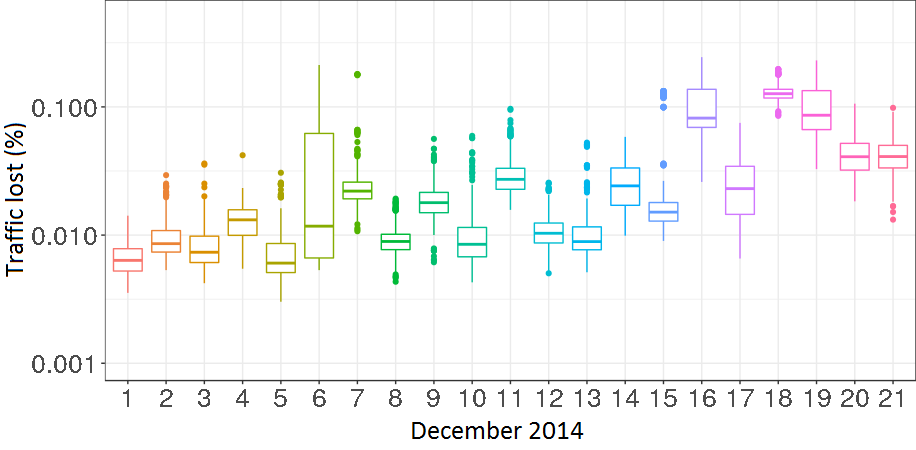

Our traffic data set consists of two month captures corresponding to December 2014 and December 2015, from the link of an ISP to its provider. Using these traffic traces we generated the ranks based on the amount of traffic towards a destination during one full day (we picked the first day of the month in both cases), and evaluated the benefits PPP would have if a failure happened at any moment of the month. The following image shows the results obtained.

It is clear to see from the previous image that even three weeks after generating the ranks the PPP loses less than 10% of the traffic compared to the current approach. What is more, for the first week after generating the rank only 1% of the traffic was lost. In our research paper, currently under review, we also proved that this process can be performed in an automatic manner without stressing the hardware resources already available (i.e. sampling the traffic, etc.), and we measured the effects of other parameters such as the traffic distribution and the time of taking the traffic samples.

Simulations

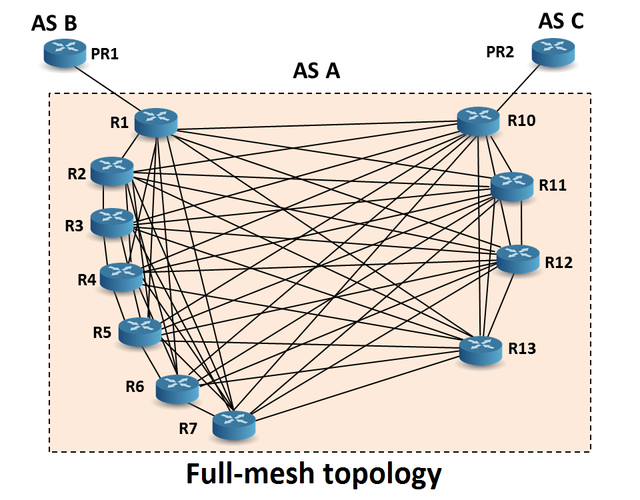

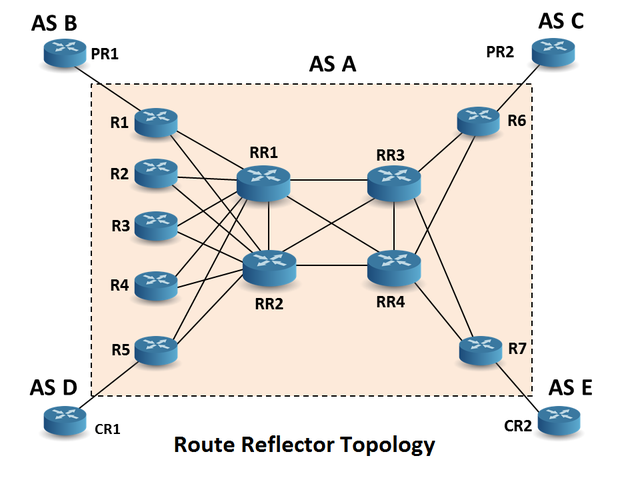

In order to verify the feasibility of PPP in real environments we modified one well-known routing daemon (i.e. Quagga), and we deployed this daemon using two typical topological configurations: Full-mesh and Route Reflector as shown in the following figures.

In this case, our experiment consisted in generating a failure at a random moment in the link connecting the router R1 to the Internet, and then measuring how much traffic would be lost in the worst case. To be as real as possible, we used the traces from the previous section to determine the traffic towards each destination and the ranks.

The results obtained showed that even in the worst case only 1% of the traffic was lost in comparison to the state of the art approach.

Conclusions

Power Prefixes Prioritization (PPP) is a new technique to reduce traffic losses during Internet reconvergence events. This technique ensures that the most relevant routing information involved in any such reconvergence event is propagated first, and we have shown the feasibility of the approach by analysing real traffic traces and by implementing the solution in a state of the art routing daemon.

Moreover, PPP does not mandate any protocol modification, so it can be deployed incrementally as a software update in any router as needed.

Comments 0

The comments section is closed for articles published more than a year ago. If you'd like to inform us of any issues, please contact us.