This is the first in a series of articles related to network and security management and monitoring changes as the Internet moves toward session encryption protocols with forward secrecy that are more difficult to intercept.

At the recent RSA Conference, I had the honor of moderating an impressive panel on the hot topic of TLSv1.3 adoption. The panel, “Network Monitoring is Going Away…What Now? TLS, QUIC and Beyond”, grew out of the panelists’ and my interest in opening up the debate on the path to adoption beyond the Internet Engineering Task Force (IETF). The goal was to surface the concerns and solutions related to network management and monitoring as we move to an Internet with increased use of stronger encryption. Soon after the RSA Conference 2018, I also presented this topic at Dell Technologies World 2018 in a session titled “Technology Advancements: The Balance Between Encryption and Management”. I gleaned additional insights from attendees who operate and manage large networks, both private and service provider networks. The feedback from these two audiences is included in this, the first of a short blog series on TLSv1.3 adoption. Subsequent blogs will dive deeper into the topics to consider as you think about the next steps for your network and plan for increased deployment of encryption, as well as improved protection against interception.

The panel debate was quite interesting as it surfaced both security and manageability concerns. These concerns centered on the tension between the current practice of passive session interception, which some network and security management tools rely on, and the push for stronger session encryption. The obvious goal of session encryption is to prevent pervasive monitoring and thereby provide privacy and human rights protections for end users. This goal is why we’ve seen a steady increase in recent years in the deployed encryption for web traffic.

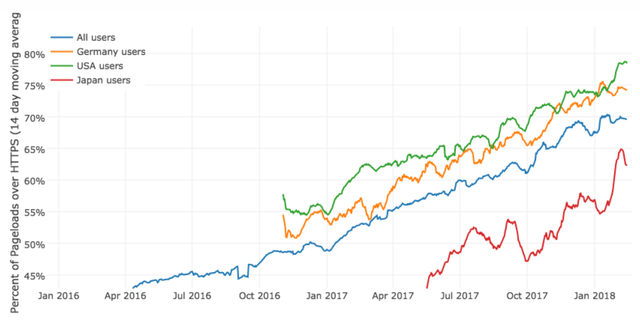

Figure 1: Percentage of pageloads over HTTPS by region. Source: https://letsencrypt.org/stats/

While the Snowden revelations led to an immediate increase in deployed session encryption (visible in Figure 1), trends in standards development also shifted at this time. The shift in standards resulted in the recent and sharper increase in deployed encryption. The fundamental shift was driven by the acceptance of less-than-perfect security in favor of deployability. We see examples of this both with Opportunistic Security (OS) and the standards used by Let’s Encrypt, further developed in the Automated Certificate Management Environment (ACME) IETF working group. Opportunistic security enables an upgrade path from cleartext sessions to sessions encrypted without authentication (which is breakable, but allows for easy automated configuration without knowledge of the other endpoint), to authenticated session encryption. Prior to this shift, such efforts would not have gone anywhere since the unauthenticated session could be intercepted, leaving you with no security. From a purist point of view, that was not acceptable in the past, but now there’s a justification. OS raises the cost of pervasive monitoring, making it infeasible to monitor all sessions passively. If nation states or malicious actors want to monitor traffic in this model, they would need to target specific sessions for decryption and observation. While we haven’t seen much deployment outside of OS’s use with IPsec, ACME is enjoying huge success via the Let’s Encrypt project. Standards efforts similar to ACME to automate certificate management have previously been tried, but were stopped from a purist point of view on security. What has been reported is that sessions not previously using encryption have taken up use of ACME via Let’s Encrypt to automate the management of certificates, carving out a new ‘service’ aimed at improving privacy protections for end users.
Let’s Encrypt offers certificates for free, but the ACME protocol can also be used by other certificate providers who are interested in automating the maintenance of certificates for other Certificate Authorities and for any type of certificate. An out-of-band process may be required up front for identity proofing of individuals and organizations for Extended Validation (EV) certificates or other certificate types. If you are not already using ACME, you should consider it as a way to ease certificate management and say goodbye to the days when an expiring certificate caused extensive server outages without anyone realizing the root cause. We’ve all heard the stories and some have experienced them. Now, with automation, they can be avoided.
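
Alongside automated renewal, a simple expiry check can help catch the outages described above before they happen. The following is a minimal sketch in Python, not part of ACME itself. The helper name `days_until_expiry` and the 30-day threshold are assumptions for illustration; the `notAfter` string is assumed to be in the format that Python's `ssl.SSLSocket.getpeercert()` returns.

```python
import ssl
from datetime import datetime, timezone


def days_until_expiry(not_after: str, now: datetime) -> float:
    """Return the number of days between `now` and a certificate's notAfter time.

    `not_after` uses the textual format found in getpeercert()["notAfter"],
    for example "Jan  5 09:34:43 2018 GMT". Hypothetical helper for illustration.
    """
    expires_epoch = ssl.cert_time_to_seconds(not_after)  # seconds since the epoch (UTC)
    return (expires_epoch - now.timestamp()) / 86400


if __name__ == "__main__":
    # Illustrative values only; a real check would read notAfter from a live certificate.
    remaining = days_until_expiry(
        "Jan  5 09:34:43 2018 GMT",
        datetime(2018, 1, 1, tzinfo=timezone.utc),
    )
    if remaining < 30:  # assumed alerting threshold
        print(f"certificate expires in {remaining:.1f} days; renew soon")
```

A check like this is a safety net rather than a replacement for automation: if renewal is working, the alert should never fire.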

Going back to the panel and audience questions, it was apparent that human rights considerations were not top of mind for decision makers in attendance. Very few said human rights factored into their decision process and those that did had regulatory requirements. While this is not surprising, since work to improve human rights considerations in technology is fairly recent, the IETF protocol designers have studied these considerations for some time. This can be seen in some of the changes made in the transition from TLSv1.2 to TLSv1.3. For the IETF, the primary focus is on protocols that run over the Internet; other usage (such as protocols restricted to a data center) was considered secondary. Enterprise and data center use cases were not a priority during the initial development of TLSv1.3, as there weren’t many individuals following the development who contributed this point of view and use case. The IETF is driven by individual contributions. Protocol developers need to consider the protection of end user traffic on a global scale for TLS, including concerns such as monitoring by nation states or others with malicious intent. While the enterprise and data center use cases were not top of mind, enterprises will benefit by being able to offer customers greater security protections for their sessions that traverse the Internet. This Internet-focused view is where human rights and privacy considerations factor into the design work. Simple health-related searches, or access to political content, could have grave consequences depending on regional laws or customs. Session protection and anonymity are key human rights properties to consider in protocol development; they protect individuals and are already addressed by security and privacy measures.

It was surprising to learn that not many in either audience had near-term plans to deploy TLSv1.3 on Internet-facing connections (your customer’s browser to the termination point at the edge of your network). This is likely a result of the learning curve prior to adoption. TLSv1.3 introduces security enhancements, has undergone formal security analysis, and should be strongly considered to improve the security of sessions for your customers. Browser vendors and libraries supporting TLSv1.3 are ready with interoperable solutions as long as there are no findings that interrupt the final steps of the publication process. Content delivery networks (CDNs) will likely be among the early adopters, as TLSv1.3 will provide quick wins for their customers’ security and many CDNs are interested in protecting sessions from interception, including by multiple types of middleboxes. As an industry, we’ll gain experience from the deployments of this new version and others will begin to decide when and where to deploy TLSv1.3.

As previously mentioned, the changes from TLSv1.2 to TLSv1.3 improve session security for end users, offering additional privacy protections from interception of traffic via passive monitoring techniques. Active interception capabilities remain and the tools in browsers to detect active proxies will continue to operate as they do today – notifying users and giving them the choice of whether to trust the intercepting proxy. If you terminate sessions at the edge of your network for proxy-type inspection, that will continue to work.

What’s at the heart of the debate for the changes in TLSv1.3 for management and monitoring? A few important changes that provide forward secrecy, recommended in the best practices for TLSv1.2, are now an inherent part of the TLSv1.3 protocol. We say that a system, or a protocol, has forward secrecy if encrypted traffic that is recorded today cannot be decrypted tomorrow, even if some long-term secret (like a certificate’s private key) is compromised tomorrow. It is accomplished via frequent independent key exchanges, such that the compromise of one key limits the data exposure to the information protected by that compromised key. TLSv1.3 maintains forward secrecy, as does TLSv1.2 when configured according to best practices, except when pre-shared keys are in use. The protocol update from TLSv1.2 to TLSv1.3 removed static RSA and static Diffie-Hellman cipher suites, thus removing the possibility of passive session monitoring techniques that depend on static keys and making the protocol forward secret. The only symmetric algorithms TLSv1.3 supports are Authenticated Encryption with Associated Data (AEAD) algorithms, also a recommended best practice for TLSv1.2. Additionally, TLSv1.3 uses a more secure key exchange based on the elliptic curve Diffie-Hellman algorithm. These changes should not have much of an impact on your Internet-based deployment if your sessions are configured with TLSv1.2 according to best practices. If you have any network-based monitoring in place (firewall, intrusion prevention, etc.), and it occurs at a proxy at the edge of your network after a point of termination for the session, this will continue to function as expected with TLSv1.3. The only change here is if passive or out-of-band monitoring techniques are in use that rely upon the use of static keys, where the session encryption is not forward secret.
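
To make the TLSv1.2 best practices above concrete, here is a minimal sketch using Python's standard `ssl` module. It builds a client context that refuses anything older than TLSv1.2 and restricts TLSv1.2 to ephemeral elliptic curve Diffie-Hellman (ECDHE) key exchange with AEAD ciphers. The function names and the exact cipher string are illustrative assumptions, not a recommendation from the panel, and the suites actually available depend on the OpenSSL build in use.

```python
import ssl


def make_forward_secret_context() -> ssl.SSLContext:
    """Build a client context limited to TLS 1.2+ with ECDHE and AEAD suites.

    Hypothetical helper for illustration; the cipher string is an assumption.
    """
    # PROTOCOL_TLS_CLIENT enables certificate and hostname verification by default.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # OpenSSL cipher string: ephemeral ECDH key exchange with AES-GCM or
    # ChaCha20-Poly1305. This excludes static RSA key exchange and non-AEAD
    # ciphers for TLS 1.2. TLS 1.3 suites are configured separately by OpenSSL
    # and are not affected by this string.
    ctx.set_ciphers("ECDHE+AESGCM:ECDHE+CHACHA20")
    return ctx


def pre_tls13_suite_names(ctx: ssl.SSLContext) -> list:
    """List the enabled cipher suite names that are not TLS 1.3 suites."""
    return [c["name"] for c in ctx.get_ciphers() if c["protocol"] != "TLSv1.3"]


if __name__ == "__main__":
    context = make_forward_secret_context()
    for name in pre_tls13_suite_names(context):
        print(name)
```

Listing the enabled suites this way is also a quick audit: if a passive monitoring tool depends on static RSA key exchange, none of the suites printed here will work with it.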

The panel discussion, as well as the session at DTW, discussed other emerging protocols including QUIC and TCPcrypt. While not yet approved standards, they are currently used on the Internet and are important to understand with regard to the trends for session encryption. Those in the audience aware of QUIC were outright blocking its usage on their networks. This tells me that in corporate environments, there is no business imperative critical enough to permit the use of this new protocol to date. That may change in time. As for TCPcrypt, most were unaware of this protocol.

The next blog in this series will dig deeper into the types of monitoring performed on internal and external networks to better understand considerations for your deployment plans. Then we’ll move into a deeper dive on options for network encryption and monitoring including alternate session encryption protocols.

This was originally published on the RSA blog.
