I recently had the opportunity to speak at a (virtual) event organised to celebrate the 25th birthday of the Moscow Internet Exchange. A great moment to look back at how the Internet has changed, and an even better one to discuss how best to handle changes yet to come. Here are the thoughts that formed the basis of my keynote.

Technical folk will probably recognise the image here as 10-base-2 ethernet.

Those were the good old days of the ancient ethernets. Was it fast? Well, certainly not by today’s standards. And I guess reliability, like beauty, is in the eye of the beholder. Let’s just settle on the fact that it got the job done and, while it may not have been the best technology had to offer, it was cheap and abundant and somewhat easy to install.

Thinking about where the Internet is today, and what the Internet is today, there’s something I still find kind of interesting about the 10-base-2 times when the Internet, despite being a thing, was still a very little-known thing.

What the Internet Is

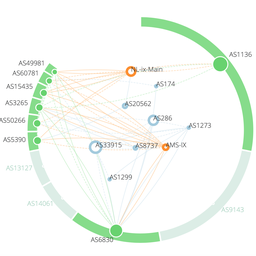

If you remember 10-base-2, you know that, although it was a network, it wasn’t really the Internet. In those days, we still had some clear distinctions between local area networks, or LANs, and the vastly more expensive and much slower long-distance connections called wide-area networks, or WANs. Making those WAN connections usually required dialling another computer using modems (or, if you could afford and justify it, a leased circuit from the telephone company). And it also usually involved different protocols to communicate across those WAN links than the ones you used internally on your LAN.

In fact, if you are old enough to remember those old networks, you probably also remember that IP wasn’t the only protocol around. That cable carried all sorts of different protocols – remember IPX, DECnet, maybe even a bit of AppleTalk or NetBIOS? Maybe IP wasn’t there at all, because if you didn’t need it, why bother installing it? The protocols you had on your network were usually based on the vendors you had in your network; if you were a Novell shop, you’d run IPX; if you bought into IBM, you may have been running Token Ring instead of Ethernet. There was a lot of choice and you picked whatever you needed or simply whatever your vendor of choice delivered.

The important bit is this: you could use these totally different technologies together, at the same time and on the same wire. Your computer, communicating with a print-server, could use a completely different set of protocols than when it was connecting to the infamous “file server”. And all those different languages and dialects would quite happily work together on the same cable at the same time.

Different things working together on a single cable

Speaking of language, there’s an ambiguity in what we mean when we talk about things "working together" that matters here. In one sense, two things work together if they can both work in some shared space without getting in each other’s way. This is called compatibility. In another sense, working together means something more like working with each other, exchanging information or input to make sure the job gets done. In the present context, we can call this interoperability.

That tiny, subtle difference is really what matters here. Because back in the day, on those old 10-base-2 ethernet cables, those protocols weren’t interoperable. A system using IPX could not talk to a machine that only spoke NetBIOS. But those two protocols were compatible in that they could very well be used on the same cable, at the same time. They could be used in parallel without causing interference or getting into conflicts with each other. Conflicts could be left for the canteen, where of course there were different tables for the Netware and the Windows people.

Then and now

Sounds vaguely familiar, right? Because fast forward 25 years and here we are in a world where we have – or ought to have – two protocols coming over the same wire: IPv4 and IPv6. Two slightly different versions of the Internet protocol that are not at all interoperable with each other, but in the spirit of good design and engineering, are very much compatible with each other. You can have both and they don’t cause any interference or problems with each other, at least not at the network level.
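To make the distinction concrete, here is a minimal sketch of how two protocols can share one wire. It assumes plain Ethernet II framing, where a two-byte EtherType field after the two MAC addresses tells the receiver which protocol the payload belongs to (0x0800 for IPv4, 0x86DD for IPv6). The helper names `make_frame` and `deliver`, and the idea of a "stack" as a simple lookup table, are illustrative inventions rather than anything from a real network stack.

```python
import struct

# EtherType values assigned to each protocol (Ethernet II framing).
ETHERTYPE_IPV4 = 0x0800
ETHERTYPE_IPV6 = 0x86DD


def ethertype(frame: bytes) -> int:
    """Return the two-byte EtherType that follows the two 6-byte MAC addresses."""
    if len(frame) < 14:
        raise ValueError("frame shorter than an Ethernet II header")
    (value,) = struct.unpack("!H", frame[12:14])
    return value


def deliver(frame: bytes, stacks: dict) -> str:
    """Hand the payload to the stack registered for its EtherType, or ignore it."""
    handler = stacks.get(ethertype(frame))
    if handler is None:
        return "ignored"
    return handler(frame[14:])


def make_frame(ether_type: int, payload: bytes) -> bytes:
    # Hypothetical helper: all-zero MAC addresses, no VLAN tag, no checksum.
    return bytes(6) + bytes(6) + struct.pack("!H", ether_type) + payload


# A dual-stack host understands both protocols; a legacy host only one.
dual_stack = {ETHERTYPE_IPV4: lambda p: "ipv4", ETHERTYPE_IPV6: lambda p: "ipv6"}
v4_only = {ETHERTYPE_IPV4: lambda p: "ipv4"}

# Both kinds of frame travel over the same wire.
wire = [make_frame(ETHERTYPE_IPV4, b"\x45"), make_frame(ETHERTYPE_IPV6, b"\x60")]

print([deliver(f, dual_stack) for f in wire])  # ['ipv4', 'ipv6']
print([deliver(f, v4_only) for f in wire])     # ['ipv4', 'ignored']
```

The IPv4-only host never understands the IPv6 frame (no interoperability), but it is not disturbed by it either (compatibility): it simply lets the frame pass.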

The point here isn’t about IPv6. It’s that, from a technical perspective, the Internet isn’t the protocol. As we speak, there are two different Internet protocols. But apart from a few engineers, nobody really makes the distinction between version 4 and version 6 Internet. The user doesn’t care as long as the job gets done, and indeed, we go to great lengths to make sure they never notice the difference.

On a somewhat similar note, I still see people refer to the Internet as running TCP/IP. This ignores all those wonderful and important other protocols that exist at the transport layer, UDP being the obvious example. It somehow implies a connection that isn’t really there. Of course, the two (TCP and IP) go hand-in-hand, but there is a lot more happening on the networks than just TCP, and putting the two together like this makes it look a lot more solid than it is.

Which already gets me a bit closer to what I think the Internet is, or what it isn’t.

A non-static thing

The Internet isn’t static. Like a living organism, it keeps growing and evolving and adapting to changes in its surroundings. And in return, its surroundings keep changing and adapting to it. A walk down the high street isn’t what it used to be: you already bought the shoes you needed to get there online, so you’ll get a cup of coffee-to-go instead.

The beauty of the thing is that the Internet protocol suite, as we now usually refer to it, has been flexible enough to withstand very considerable changes. Not only have we continued putting different things on top, we’ve also changed everything that happened underneath, swapping those old coaxial cables for fibre optics that pass traffic about forty thousand times as fast. And when we discovered it didn’t fit anymore, we even took the protocol itself apart and replaced it with a newer version that has enough addresses to fit everybody and everything on this crazy planet.
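For a sense of scale, the two changes can be put into numbers. This is a back-of-the-envelope sketch: 10-base-2 ran at 10 Mbit/s, and the assumption here is that "forty thousand times as fast" refers to a single 400 Gbit/s optical link. The address counts follow directly from the 32-bit and 128-bit address lengths of IPv4 and IPv6.

```python
# Assumption: the comparison is 10 Mbit/s coax versus one 400 Gbit/s optical link.
TEN_BASE_2_BITS_PER_SECOND = 10_000_000
SPEEDUP = 40_000

modern_bits_per_second = TEN_BASE_2_BITS_PER_SECOND * SPEEDUP
modern_gbit_per_second = modern_bits_per_second // 10**9
print(modern_gbit_per_second)  # 400

# Address space: IPv4 addresses are 32 bits long, IPv6 addresses 128 bits.
ipv4_addresses = 2**32
ipv6_addresses = 2**128
growth_factor = ipv6_addresses // ipv4_addresses  # equals 2**96

print(ipv4_addresses)            # 4294967296
print(f"{ipv6_addresses:.2e}")   # 3.40e+38
```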

Some people might say that the Internet protocol lacks a fixed architecture, and that very well might be true. But given how the Internet has developed over the past fifty years, we should perhaps see this lack of a rigid structure around IP as a feature, not a bug.

As Vint Cerf put it (in a comment at an online event where I recently bumped into him), the Internet is deliberately open-ended. It was meant to be adaptable and we were supposed to modify it to our own needs.

So why did the Internet evolve into what it is today? What happened to everything else (things like IPX)? I’m speculating here a bit, but maybe it was because their more rigid architectural models meant that they couldn’t adapt as quickly to the changing environment – maybe the protocols themselves were unable to change? Of course, commercial interests may also have played a role here, because many of those old technologies were proprietary. That meant that even if you had ways to modify them, you weren’t allowed to, lest you run the risk of being penalised by future interoperability issues.

But now we’re getting very close, I think, to what really defines the Internet: the fact that I can pick and choose from all that work that was done in the last fifty years, make use of all that experience, and pick those bits and pieces that I need to get the job done. Compatibility is at the heart of this. The Internet is a space where you can try things your way and I can try things mine. And when one way works or adapts better than the other, we keep that one and drop the other.

What you end up with is a very open and, as Vint put it, open-ended framework that forms the basis for our designs and engineering. It’s a framework that allows us to keep innovating and finding new and better ways to get the job done, without asking for permission or waiting for a committee to vote on a particular top-to-bottom design. It comes down to that openness, the liberal approach of being open to new ways, and the idea that this is driven from the bottom, putting the user – hint: that’s you and me, as well – first.

While these principles are clear from a technical perspective, it’s the way that they have been embedded in the governance model that, for me, makes the Internet what it is and what it should be – which brings me to my main topic. Because these days, we see some of these principles being challenged in a variety of ways and venues.

Challenging Changes

There are certain issues dominating the landscape nowadays that force us to revisit these fundamental questions about how we define the Internet. One of these is consolidation.

Consolidation raises all kinds of worries. A major one is that it’s just not clear whether the user is still being put first. And of course, the very fact that most of what we, as users, see as the Internet is controlled by only a handful of companies, most of them headquartered in a single country, is a source of political discontent.

This has led to a lot of discussion, and quite rightly so. Some of the consequences of consolidation are real and might require some work from one or more stakeholder groups to mitigate. That is a challenge in itself: it has long been held that technology should be kept free from politics, and it is often argued that political solutions such as regulation should remain agnostic towards technology. While these positions might still hold true, we also need to listen and try to accommodate and address the concerns, where possible and appropriate.

Problems with the current discussion

At the same time, we also need to be careful that we don’t throw out the baby with the bathwater. We need to make sure that changes are well thought out and that we get a chance to try them. In the spirit of everything I’ve just suggested the Internet is, we should adapt to those changes together under our own momentum, picking those solutions that we have the most confidence will get the job done.

What bothers me in some of the current discussions is that we jump, or try to jump, to conclusions based on false rationales. If you say TCP/IP isn’t up to the task (though I’d file that under #fakenews), I can agree that there are situations where the Internet protocol is not ideal, because it needs and creates a lot of overhead. But the simple solution is: don’t use IP. Sounds silly, right? But if you really are in a situation where TCP or IP or QUIC does not get the job done, you can indeed go out and tinker with it. That is the beauty of the Internet model.

And considering how we got here, there’s not much use in arguing for a solution if you haven’t yet written a single line of code for it. Too much of the conversation stalls at debates about whether permission should be granted to start work on developing specific solutions. Really? Do we need permission to invent better solutions? Imagine if somebody had told Edison that, when he first had his light bulb moment, so to speak. If they had, I might well be writing you a letter by candlelight rather than this RIPE Labs article.

Ok, so I’m exaggerating a bit here. But if you really think you have a better solution, the door is open for you to come and show it to us. Let’s have a look together and see if it’s indeed an improvement on what we are now doing. Go ahead and create those standards where they’re needed, then let the collective market, with its users, be the judge and pick their winners. Just like they always have.

Takeaway

If you don’t like my methods or my model, you are, of course, free to develop your own solutions somewhere else – and perhaps keep the intellectual property rights to yourself if that’s what you’re after. But do so under the strict condition that you don’t interfere with the solutions other people are trying out. Solutions shouldn’t be forced.

And also, importantly, make a hard promise that you leave my networks and my protocols alone. I don’t expect or need interoperability – we just agreed that it’s perfectly fine if you do your own thing. But I need that guarantee on compatibility: don’t send packets my way that may very well look like the ones I’m using, but aren’t following the standards I use.

And the same principle goes for putting the user first. Make sure that whatever they see as the Internet lives up to their expectations. Not yours… theirs. When users type https://www.internet.nl in their browser, it should lead them to a single place, without them having to specify which version or flavor-du-jour of the Internet they are supposed to be using.
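One way to picture this: a single name can resolve to addresses in both protocol families, and the client quietly picks one that works. The sketch below is a deliberately simplified illustration, not how any particular browser does it (real clients use more refined approaches such as Happy Eyeballs, RFC 8305). The function names, the `try_connect` callback, and the example addresses (taken from the documentation ranges 192.0.2.0/24 and 2001:db8::/32) are all assumptions made for the example.

```python
import ipaddress


def order_candidates(addresses):
    """Put IPv6 candidates before IPv4 ones, keeping the order within each family."""
    parsed = [ipaddress.ip_address(a) for a in addresses]
    # sorted() is stable, so addresses of the same family keep their relative order.
    return sorted(parsed, key=lambda ip: 0 if ip.version == 6 else 1)


def connect_first(addresses, try_connect):
    """Return the first candidate for which try_connect succeeds, or None."""
    for ip in order_candidates(addresses):
        if try_connect(ip):
            return ip
    return None


# Hypothetical addresses that one name might resolve to.
candidates = ["192.0.2.10", "2001:db8::10"]

# Stand-ins for real connection attempts on two different access networks.
v4_only_network = lambda ip: ip.version == 4
dual_stack_network = lambda ip: True

print(connect_first(candidates, v4_only_network))    # 192.0.2.10
print(connect_first(candidates, dual_stack_network))  # 2001:db8::10
```

Either way the user typed the same name and ended up in the same place; which version carried the packets stays an implementation detail.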

Be it version 4 or version 6, I’m not afraid of a bit of competition… what I am afraid of is that you or somebody else comes along and takes away my ability to pick what is best. Let me make my own choices and if I fall flat on my face, I have only myself to blame. That, for me, is what makes the Internet what it is: my choice.

____

Image credits:

- 10-base-2 ethernet cable: © Raimond Spekking / CC BY-SA 4.0 (via Wikimedia Commons), https://commons.wikimedia.org/w/index.php?curid=69527036

- Vint Cerf quote: LINX/Vint Cerf
