We have redesigned the K-root architecture for our hosted nodes, formerly known as K-root local instances. Both the system architecture and the network setup have been simplified considerably. This will reduce our management effort for K-root and reduce costs for K-root hosts.

Changes to the K-root design

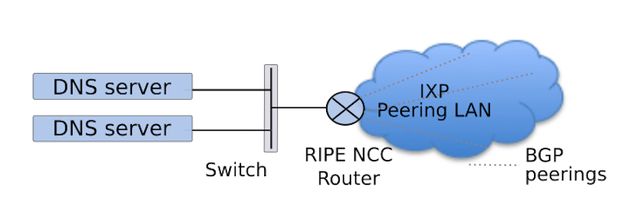

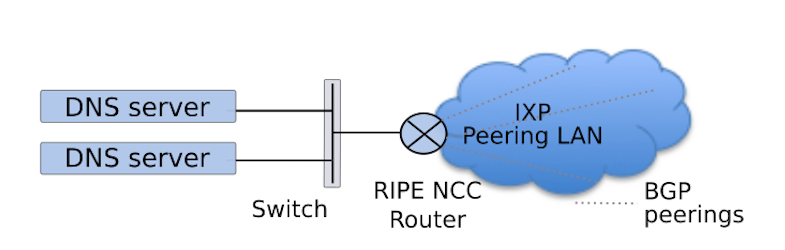

The existing K-root architecture allows for two types of nodes: the heavyweight K-root core nodes, formerly known as global nodes, which consist of multiple parallel DNS servers and separate routing and switching hardware, and the smaller-scale local nodes. Local nodes consist of only two small servers and more modest network hardware than the core nodes (see Figure 1). In both cases, the K-root router peers with several parties, usually at an Internet Exchange Point (IXP), and advertises the K-root anycast prefix to all its peers.

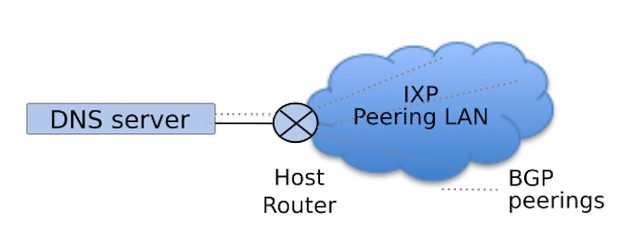

We are now introducing a new design for these smaller-scale nodes, which we refer to as K-root hosted nodes. The new model is based on a single rack-mounted Dell server. There is no separate K-root networking equipment in this new design. In addition to running the required DNS software, the server also takes care of the BGP session and the advertising of the K-root anycast prefix.

Another important change in the new model is in the routing arrangements. As mentioned above, in the old model, the K-root router of a local node establishes BGP peerings with many parties on the local IXP. In the new model, the K-root hosted node will only peer with one party, the local host, and the local host is responsible for further propagation of the K-root prefix (see footnote 1), making every hosted node "global" in terms of its anycast capabilities. This is illustrated below in Figure 2.
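The difference between the two routing models can be sketched in a few lines of Python. This is only an illustrative model, not our router configuration: the prefix `192.0.2.0/24` and the AS numbers are documentation placeholders, and the `Route` and `propagate` names are hypothetical. It shows how a route tagged with the well-known "no-export" community (as for old-style local nodes, see footnote 1) stays inside the receiving AS, while an untagged route can be passed on by the host.

```python
from dataclasses import dataclass

NO_EXPORT = "no-export"  # well-known BGP community (RFC 1997)


@dataclass
class Route:
    prefix: str
    as_path: tuple
    communities: frozenset = frozenset()


def propagate(route, local_as, neighbours):
    """Return the routes that local_as would advertise to its eBGP neighbours.

    A route carrying no-export is used inside the receiving AS but is not
    advertised any further. Otherwise the AS prepends itself to the path
    and passes the route on.
    """
    if NO_EXPORT in route.communities:
        return {}
    exported = Route(route.prefix, (local_as,) + route.as_path, route.communities)
    return {neighbour: exported for neighbour in neighbours}


# Placeholder values (assumptions): documentation prefix and AS numbers.
ANYCAST_PREFIX = "192.0.2.0/24"
NODE_AS, HOST_AS = 64500, 64501
HOST_NEIGHBOURS = [64502, 64503]

old_style = Route(ANYCAST_PREFIX, (NODE_AS,), frozenset({NO_EXPORT}))
new_style = Route(ANYCAST_PREFIX, (NODE_AS,))

print(propagate(old_style, HOST_AS, HOST_NEIGHBOURS))  # nothing leaves the host AS
print(propagate(new_style, HOST_AS, HOST_NEIGHBOURS))  # host passes the prefix on
```

In the second case each neighbour of the host sees the prefix with the host AS prepended to the path, which is what lets a hosted node attract queries from beyond the host's own network.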

We have developed and tested this new setup of K-root hosted nodes with some of the current K-root hosts and we already have three instances in service that have been rebuilt under this new model as part of their life-cycle renewal.

Other developments in RIPE NCC DNS services

In the meantime, we have also worked on other aspects of our DNS services. We only mention these briefly in this article, but you can expect more detailed articles about the topics below to appear on RIPE Labs soon.

Systems management improvements

We have significantly improved the flexibility of our systems management tools, while at the same time reducing the complexity of our setup. Configuration management of our K-root server platforms is now automated almost entirely, using a customised tool set based on Ansible. This eases the day-to-day operations effort for our servers, for K-root as well as other services.

Software diversity

As a side effect of our use of Ansible for configuration management of our platforms, deploying different DNS software has become easy. As a result, we now have three different DNS software flavours, BIND, NSD and Knot, in production use on K-root. This increases software diversity and reduces the potential impact of, for example, a zero-day exploit against the K-root servers.
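The effect of diversity on exposure can be illustrated with a small Python sketch. The host names and the round-robin assignment are assumptions made purely for illustration; the article does not describe how the three flavours are actually distributed over the servers. The sketch computes the largest share of servers that a bug in any single implementation could affect.

```python
from collections import Counter
from itertools import cycle

FLAVOURS = ["bind", "nsd", "knot"]


def assign_flavours(hosts, flavours=FLAVOURS):
    """Assign DNS software to hosts in round-robin order (an assumed policy)."""
    return dict(zip(hosts, cycle(flavours)))


def largest_share(assignment):
    """Fraction of hosts running the most common flavour."""
    counts = Counter(assignment.values())
    return max(counts.values()) / len(assignment)


# Hypothetical host names, for illustration only.
hosts = [f"dns{i}.example.net" for i in range(1, 8)]
assignment = assign_flavours(hosts)

for host, flavour in assignment.items():
    print(host, flavour)
print(f"largest single-flavour share: {largest_share(assignment):.2f}")
```

With a single implementation the share would be 1.0, so an exploit against that implementation could reach every server; with three flavours spread evenly it stays close to one third.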

Internal routing

Previously, we used Open Shortest Path First (OSPF) internally to allow for load balancing between the physical servers in each node. We have recently migrated a subset of our DNS service nodes, though not the K-root servers, from OSPF to an iBGP configuration, in which load is spread over the servers using BGP load balancing (see footnote 2). This is another simplification of our design that reduces the management load on the engineering team.
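As a rough illustration of how a router can spread queries over several servers that all announce the same prefix, the sketch below hashes each flow onto one of a set of equal-cost next hops. This is a generic model of per-flow multipath, not the algorithm of any particular router vendor; the server addresses, the flow tuple layout and the `pick_next_hop` name are assumptions for the example.

```python
import hashlib
from collections import Counter


def pick_next_hop(flow, next_hops):
    """Choose one of several equal-cost next hops by hashing the flow key.

    Hashing keeps all packets of one flow on the same server while
    spreading different flows over all servers.
    """
    key = "|".join(str(part) for part in flow).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(next_hops)
    return next_hops[index]


# Hypothetical servers that all announce the service prefix over iBGP.
servers = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]

# Synthetic flows: (source address, source port, destination, port, protocol).
flows = [
    (f"198.51.100.{i % 250 + 1}", 1024 + i, "192.0.2.1", 53, "udp")
    for i in range(3000)
]

load = Counter(pick_next_hop(flow, servers) for flow in flows)
for server in servers:
    print(server, load[server])
```

Because the choice depends only on the flow key, a server that stops announcing the prefix simply drops out of `next_hops` and its flows are rehashed over the remaining servers.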

Preparing for additional hosted nodes

A larger number and (even) better distribution of K-root instances would increase the stability and availability of K-root operations and DNS root services globally. There is also a large demand for local K-root instances from IXPs and ISPs. With the new design described above and the improved efficiency, we are now confident that we can support a larger number of K-root systems globally with the same management and engineering effort as today. We will soon publish another RIPE Labs article with more details on our plans in this area.

Added on 18 May 2015: In the meantime, these plans were discussed with the RIPE community at the RIPE 70 Meeting on 13 May 2015. You can find more information on our website about hosting a K-root node.

Conclusions

We have been able to improve efficiency in the hardware design, routing model and management environment of K-root. This allows greater flexibility and lower engineering effort for the same footprint of K-root services and allows for further distribution of K-root instances.

1/ For an old-style local node, the anycast prefix was advertised with the "no-export" community string set, in order to limit the scope of the prefix propagation. This is no longer the case in the new design.

2/ So far this has only been applied to clusters where we have a Juniper router.

Comments 12


Konstantin Bekreyev •

Please write a detailed how-to for IXPs: how to set up a local K-root node?

Konstantin Bekreyev •

Can you combine K-root and a RIPE Atlas anchor on one server?

Nick Hilliard •

Although I can see why this would make the RIPE NCC's life a whole pile easier, I'm not sure this is a good deployment model for IXPs. It's not exactly clear how you plan to handle this but I suspect the deployment model will be one of the following:

1. If the plan is to use the IXP management ASN, then beware that most IXPs run tiny management routers which are intended for IXP management traffic only and may not be able to withstand the high pps rate of a root DNS server. There may also be encumbrances on the IXP management whereby they are not permitted to carry this traffic by policy. This will also require the creation of a route: object for the K-root prefix to tie it to the host ASN so that routing will work well.

2. If the plan is to connect the root server instance to the IXP and use a third-party organisation on the peering exchange with the K-root /24 prefix originated from the third party's ASN, then this will cause problems with next-hop propagation around the IXP; if the third-party router is configured to allow IP redirects (which it shouldn't be), then the third-party router may suffer from performance problems relating to high levels of redirect traffic from the router's CPU.

3. If it's similar to #2 except that you're using a RIPE NCC ASN to get around the route: object problem, then the AS path via the IXP will be the same length as any other transit connection, so this may affect the routing path used to reach the K-root instance. This won't make any difference to the RIPE NCC query load, but it will affect the quality of service from the organisations connecting to the IXP.

Romeo Zwart •

Thanks for the comments so far.

Konstantin: to answer your concrete questions: no, it will not be possible to combine a K-root hosted node and a RIPE Atlas anchor. These are separate devices with different requirements. We will provide more details soon, including more detailed instructions on how the application and setup process will work.

Nick: The server will originate the route with our AS, and the host AS can propagate the prefix as far as they choose to. But in the new 'hosted' model, the server will not attach directly to an IXP. If the host wishes to advertise the route to peers or route servers on an IXP, they can do so, provided they are confident that their network is capable of handling the traffic. Also, although the example above does suggest this, the host AS is not necessarily an IXP. We are receiving many requests from ISPs too, and this new model will also enable us to serve ISPs who wish to have a local instance of K-root. With an increase in the number of instances, the goal is to improve the overall global reachability of K-root.

One thing that I'd like to emphasise is that we are not forcing existing IXPs into this new model. We propose this as a new way forward for those interested. Most of the existing K-root hosts that we have talked to so far appreciated the leaner approach. Those that do not can stick to the old model.

Andrei Safonov •

I think we would be interested in hosting a node at St. Petersburg ru.rascom

Romeo Zwart •

Hi Andrei, Thanks for your interest. We will soon have follow-up articles with more detail on the registration procedure, so please keep an eye on this space. :)

Robert Martin-Legene •

About software diversity, I would rather prefer each letter of the root to dedicate itself to running one specific software. While you see a bug being able to affect many of your instances if all software is the same, at least it would shield you from many bugs in "all the others" name server softwares. If every root-letter follows a software diversity plan like the one you suggest, then eventually some user will sit somewhere, where all the root-servers he can reach will be of the same version and that would expose that user fully to the impact of a bug and possibly a non-working root zone. If on the other hand, K always used, say NSD, and another letter "X" used Knot, then the user would always be able to get to an NSD, if there was a bug in Knot. I believe K should be seen as one part of the software diversity of the root-zone and not pursue software diversity for K itself.

Romeo Zwart •

Hi Robert, Thanks for your comment. I understand your concern with, for example, zero-day exploits against the root servers. However, without a very thorough modeling of root-server and software type distribution, it is difficult to determine how the risks of both approaches compare. Also, as mentioned in the article itself, we can support a much more fine-grained distribution of root servers with our new hosted node model. My assessment is that with such a finer distribution the theoretical case of your end-user only being able to connect to root-servers with a specific flavour of DNS software, becomes very small.

Kostas Zorbadelos •

Hi Romeo, Anand, thanks for the article and the info provided. Just read it and my question is, how do you handle the case of "more than one" hosted node (in a single AS)? Suppose a host requires a couple of servers to handle the load or provide redundancy. Regards, Kostas

Romeo Zwart •

Hi Kostas, Based on our current experience with K-root local and K-root hosted nodes, we don't expect this to be necessary to cover the local query load. Each individual server is capable of handling a relatively large load, so having two servers in the same AS for capacity reasons should be unnecessary. However, if the AS covers a large geographic area, then we can place two servers in different areas, but within the same AS, to help with routing traffic appropriately. Finally, we don't see local resiliency as a big issue, because if a server fails, the hosts will generally still be able to reach one of several other K-root nodes with a good response time. Kind regards, Anand & Romeo

Adrian Almenar •

We are interested in hosting a k root instance, but we are unable to finish the application due to a failure with the field Proposed Node Location that no matter what we put there always says it’s invalid.

Romeo Zwart •

Hi Adrian, Thanks for this feedback. We are following up with you out-of-band to investigate this further. Romeo