As Ph.D. students, we typically encounter the Internet Engineering Task Force (IETF) community and its work by stumbling over RFCs and Internet-Drafts that either relate to our research or form a fundamental basis for it. Beyond that, for us, the IETF had been a vague entity focused on standardisation. It was therefore a great experience to attend the IETF 101 meeting in London earlier this year and to present our measurement results for TCP, HTTP/2 and QUIC at the Measurement and Analysis for Protocol Research Group (MAPRG).

Experience

Compared to some of the research conferences we had attended, we were both impressed by how professionally the meeting was organised, both beforehand (registration) and during the meeting (agenda). Thanks to the newcomers' meet-and-greet with experienced members of the IETF community, we quickly realised that IETF standardisation is fuelled by an open-minded community, similar to the research community.

During the sessions, we witnessed passionate technical as well as ethical discussions. We were especially impressed by the audience's broad knowledge of consequences for existing systems, future considerations, and lessons learned from the past. Despite the level of detail, and heated debates over, for example, a single bit, we were surprised that whenever a decision had to be made, the goal was to reach rough consensus within the session, which keeps the overall work moving forward.

In contrast to the highly technical discussions and (instant) solutions of IETF work, the Internet Research Task Force (IRTF) provides a broader forum for evaluating new ideas and discussing the current state of protocols in the Internet. Unlike typical research conferences, the IRTF has the unique property that its audience includes people who actually operate, implement, and maintain the systems and protocols under discussion.

Measurement studies on QUIC, TCP Initial Windows, and HTTP/2 Server Push

Within the scope of the IRTF's MAPRG, we presented measurement results for two IETF-standardised protocols, TCP and HTTP/2, and for QUIC, which is currently making its way through the IETF standards process.

Measuring TCP

For TCP, we investigated the use of a performance-critical startup parameter: the initial congestion window (IW), which governs the amount of unacknowledged data a sender may transmit in the first round trip of a connection. Windows that are too small lead to suboptimal performance, while windows that are too large can lead to losses. We found (see our IMC 2017 paper for further details) that, for IPv4, most hosts adhere to the current experimental standard of an initial window of ten segments from RFC 6928. Yet we also found some hosts that use much larger windows. We extended this investigation to Content Delivery Networks (CDNs) in a follow-up paper (published at TMA 2018) and found that CDNs customise their IWs and use values beyond the current recommendation. These are typically below 30 segments, but we also found instances of up to 100 segments.
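To make the IW trade-off concrete, here is a minimal sketch. The first function encodes the RFC 6928 upper bound, min(10*MSS, max(2*MSS, 14600)) bytes; the second counts round trips under idealised slow start, assuming no loss, no receive-window limit, no delayed ACKs, a window that doubles every round trip, and a 1460-byte MSS. Both function names are hypothetical and this is not the methodology of the papers above.

```python
import math


def rfc6928_iw_bytes(mss: int) -> int:
    """Upper bound on the initial window in bytes from RFC 6928:
    min(10*MSS, max(2*MSS, 14600))."""
    return min(10 * mss, max(2 * mss, 14600))


def slow_start_rounds(object_bytes: int, iw_segments: int, mss: int = 1460) -> int:
    """Round trips needed to send object_bytes under idealised slow start.

    The window starts at iw_segments and doubles every round trip.
    Assumes no loss, no receive-window limit and no delayed ACKs.
    """
    segments_left = math.ceil(object_bytes / mss)
    window, rounds = iw_segments, 0
    while segments_left > 0:
        segments_left -= window  # one full window of segments per round trip
        window *= 2              # slow start doubles the window each round trip
        rounds += 1
    return rounds


for iw in (3, 10, 30, 100):
    print(iw, slow_start_rounds(100_000, iw))  # 3 5, 10 3, 30 2, 100 1
```

Under these assumptions, a 100,000-byte object needs five round trips with three segments (the RFC 3390 limit for a 1460-byte MSS), three with IW10, two with IW30 and one with IW100, which is why larger windows are tempting despite the loss risk.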

Measuring HTTP/2

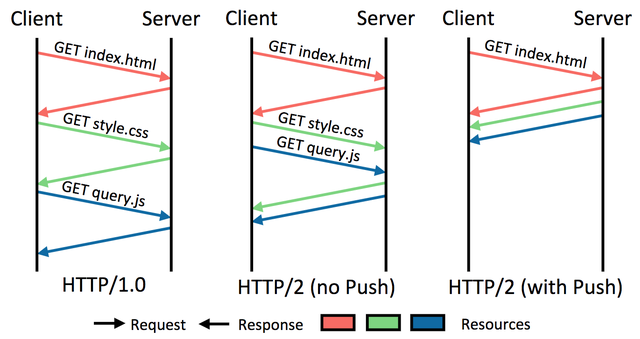

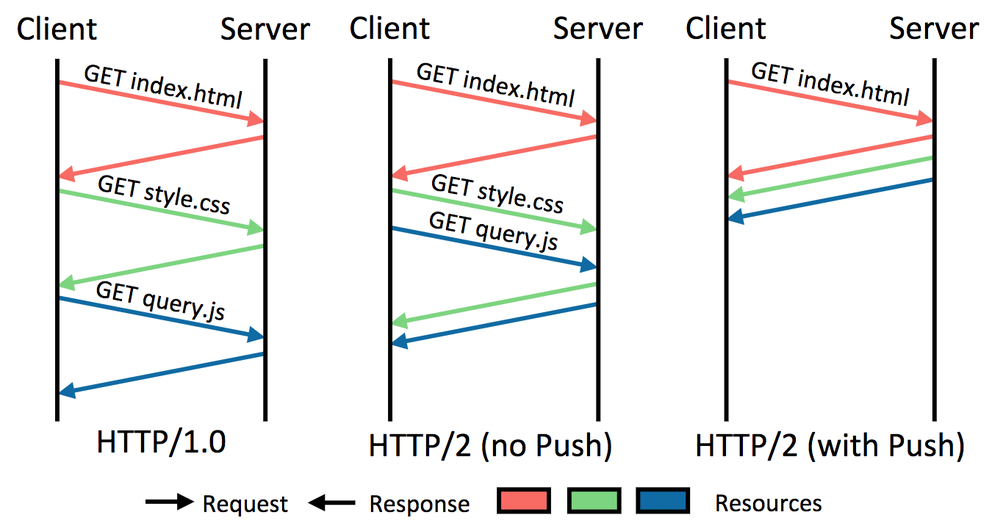

Regarding HTTP/2, we focused on the server push feature, which allows a server to proactively push content without an explicit client request. This promises to save round trips and thus enable faster page loads (see our IFIP Networking 2017 paper for further details).

Figure 1: H2 server push compared to H1 and H2 without push. In H1, requests are served sequentially. As H2 offers multiplexed streams, multiple requests can be issued in parallel. With server push enabled, servers can preemptively push embedded objects without explicit client requests, which can reduce page load times by saving round trips.
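The three cases in Figure 1 can be reduced to a toy round-trip count. The function below is a hypothetical model, not taken from the study: it assumes a single HTTP/1.1 connection without pipelining, all embedded objects being known once the HTML arrives, and it ignores connection setup, bandwidth and server think time.

```python
def page_load_round_trips(n_objects: int, mode: str) -> int:
    """Idealised request/response round trips to load an HTML page that
    embeds n_objects resources, mirroring the three cases in Figure 1.

    Ignores connection setup, bandwidth, server think time and objects that
    are only discovered later (e.g. fonts referenced from CSS).
    """
    if mode == "h1":       # one HTTP/1.1 connection, one request at a time
        return 1 + n_objects
    if mode == "h2":       # HTML first, then all objects multiplexed in parallel
        return 1 + (1 if n_objects else 0)
    if mode == "h2-push":  # objects are pushed alongside the HTML response
        return 1
    raise ValueError(f"unknown mode: {mode!r}")


print([page_load_round_trips(5, m) for m in ("h1", "h2", "h2-push")])  # [6, 2, 1]
```

The model deliberately leaves out effects that can make push counterproductive in practice, such as pushing objects the client already has cached or pushed data competing for bandwidth with more critical resources; such effects are one plausible reason why measured benefits vary between websites.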

While we observe that 11.8 million out of 155 million .com/.net/.org websites are already HTTP/2-enabled, only ~5,000 use server push (as of January 2018; for up-to-date numbers, see https://http2.netray.io). We attribute this to the fact that server push requires active configuration, whereas HTTP/2 can be enabled by simply updating a web server. Comparing websites in different configurations (HTTP/1, HTTP/2 with server push, and HTTP/2 without server push) and using Page Load Time and Google's SpeedIndex as performance metrics, we observe that while some websites benefit from HTTP/2 and server push, others perform worse.

To understand the impact of HTTP/2 server push on human perception, we conducted a follow-up study (published at SIGCOMM Internet-QoE 2017). In this study, we showed participants side-by-side videos of websites loading in different protocol configurations and observed similar results: some websites are perceived to load faster, while others are perceived to load slower. Moreover, we see that performance metrics do not necessarily correlate with human perception and that participants' votes are highly website-specific. At IRTF MAPRG, we concluded that HTTP/2 server push is no silver bullet: it should be used with care and only after extensive testing before actual deployment, a conclusion also confirmed in discussions with web engineers and developers after the talk.
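SpeedIndex integrates how visually incomplete a page still is over time, SI = ∫ (1 − VC(t)) dt, so lower values are better. The sketch below approximates that integral from sampled video frames; the input format of (time in ms, visual completeness in [0, 1]) pairs and the function name are assumptions for illustration, not the tooling used in our studies.

```python
def speed_index(frames: list[tuple[float, float]]) -> float:
    """Approximate SpeedIndex (ms) from (time_ms, visual_completeness) samples.

    frames must be sorted by time, start at t=0, and end at the frame where
    the page becomes visually complete (completeness 1.0). Completeness is
    treated as constant between samples (a step function).
    """
    si = 0.0
    for (t0, vc0), (t1, _) in zip(frames, frames[1:]):
        # Length of this interval, weighted by how incomplete the page looked.
        si += (1.0 - vc0) * (t1 - t0)
    return si


# Page A renders half of its content at 1 s and is complete at 2 s.
page_a = [(0, 0.0), (1000, 0.5), (2000, 1.0)]
# Page B renders 75% of its content at 200 ms but is only complete at 3 s.
page_b = [(0, 0.0), (200, 0.75), (3000, 1.0)]
print(speed_index(page_a), speed_index(page_b))  # 1500.0 900.0
```

Page B finishes later yet scores a better SpeedIndex, a small example of how different metrics can rank the same pair of page loads differently.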

Measuring QUIC

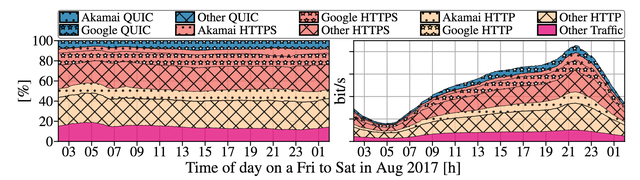

Finally, we examined the prevalence of QUIC on the Internet (see our PAM 2018 paper for details). Since IETF QUIC is still in the standardisation process, we focused on Google's original proposal. In October 2017, we found around 600,000 QUIC-capable IPv4 addresses; this number has since increased massively to over 5 million (as of July 2018; see https://quic.netray.io for up-to-date numbers). In August 2017, we analysed network traces from a European Tier-1 ISP, a major European IXP and a university's uplink, and found that QUIC already accounted for 6% to 9% of the observed traffic on average.
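The post does not describe how QUIC-capable addresses were identified. One common technique is to send a packet with a deliberately unsupported version and wait for the server's Version Negotiation reply, which lists the versions it supports. The sketch below is a hypothetical illustration using the version-independent IETF QUIC packet layout (RFC 8999, RFC 9000); Google's original QUIC, which the study measured, used a different header format, so a probe for it would look different.

```python
import os
import struct

# RFC 9000 reserves versions matching 0x?a?a?a?a to exercise version negotiation.
FORCE_VN_VERSION = 0x1A2A3A4A
# Servers are only expected to answer datagrams of at least 1200 bytes.
MIN_DATAGRAM = 1200


def build_vn_probe(dcid: bytes, scid: bytes) -> bytes:
    """Hypothetical probe: a long-header packet carrying a reserved version,
    zero-padded to 1200 bytes. Everything after the connection IDs is
    version-specific, so a server that does not know the version cannot
    interpret it and should answer with Version Negotiation instead."""
    header = (
        bytes([0xC0])                          # header form = 1 (long), fixed bit = 1
        + struct.pack("!I", FORCE_VN_VERSION)  # 32-bit version field
        + bytes([len(dcid)]) + dcid
        + bytes([len(scid)]) + scid
    )
    return header + bytes(MIN_DATAGRAM - len(header))


def parse_version_negotiation(datagram: bytes) -> tuple[bytes, bytes, list[int]]:
    """Return (dcid, scid, supported_versions) from a Version Negotiation packet."""
    if len(datagram) < 7 or not datagram[0] & 0x80:
        raise ValueError("not a long-header packet")
    (version,) = struct.unpack_from("!I", datagram, 1)
    if version != 0:  # Version Negotiation packets carry version 0
        raise ValueError("not a Version Negotiation packet")
    pos = 5
    dcid = datagram[pos + 1 : pos + 1 + datagram[pos]]
    pos += 1 + datagram[pos]
    if pos >= len(datagram):
        raise ValueError("truncated packet")
    scid = datagram[pos + 1 : pos + 1 + datagram[pos]]
    pos += 1 + datagram[pos]
    if pos > len(datagram) or (len(datagram) - pos) % 4:
        raise ValueError("malformed supported-versions list")
    versions = [v for (v,) in struct.iter_unpack("!I", datagram[pos:])]
    return dcid, scid, versions


probe = build_vn_probe(os.urandom(8), os.urandom(8))
```

In a scan, each probe would be sent as a UDP datagram to port 443. A reply that parses cleanly and echoes the probe's connection IDs in swapped order (the server copies the probe's source ID into its destination ID and vice versa) would mark the address as QUIC-capable.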

Figure 2: QUIC traffic share in a major European ISP (upstream and downstream). Left: relative share of QUIC. Right: total traffic compared to HTTP(S); the y-axis has been anonymised at the ISP's request. Nearly all QUIC traffic is served by Google.

More recently, we observed peaks of up to 30% QUIC traffic. Our findings supported the QUIC working group's discussions of the spin bit proposal, which is intended to ease the difficulty network operators face in managing and measuring QUIC traffic.
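The idea behind the spin bit can be illustrated with a short sketch. In the design later adopted as an optional feature of RFC 9000, the server echoes the spin value it last received from the client, while the client sends the inverse of the value it last received, so the bit flips about once per round trip. A passive observer watching one direction of a connection can therefore estimate the RTT as the time between successive flips. The function below is hypothetical and assumes in-order packets without loss; real observers need heuristics to filter out reordering and delayed edges.

```python
def spin_bit_rtt_samples(packets: list[tuple[int, int]]) -> list[int]:
    """RTT samples (in the timestamps' unit) from spin-bit edges.

    packets: (timestamp, spin_bit) for short-header packets seen in ONE
    direction of a connection, in arrival order. Assumes no reordering
    or loss.
    """
    samples: list[int] = []
    last_spin = None
    last_edge = None
    for ts, spin in packets:
        if last_spin is not None and spin != last_spin:  # the bit flipped: an edge
            if last_edge is not None:
                samples.append(ts - last_edge)  # edge-to-edge time ~ one RTT
            last_edge = ts
        last_spin = spin
    return samples


observed = [(0, 0), (10, 0), (50, 1), (60, 1), (100, 0), (150, 1)]  # ms
print(spin_bit_rtt_samples(observed))  # [50, 50]
```

Note that the first edge only starts the clock; it produces no sample because the observer does not know when the previous flip happened.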

Summary

Overall, attending IETF 101 was a fantastic experience: we both gained insights into the standardisation process within the IETF, received valuable and professional feedback on our research, and got to know many interesting people.

From our perspective as Ph.D. students, we highly recommend taking part in IETF meetings, as they provide a unique opportunity to gain insight into real-world technical problems that are often not considered in a university environment.

This article was originally published on the IETF blog.
