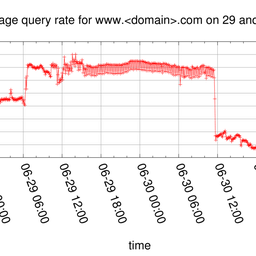

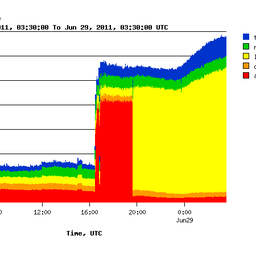

The RIPE NCC operates various data intensive services. As part of our DNS operations we have been operating K-root since 1997. A key to the stable operation of this service is a solid understanding of the traffic it responds to and how it evolves over time. With the success and growth of the Internet, traffic to the DNS root servers has increased and K-root produces terabytes of raw packet capture (PCAP) files every month. We were looking for a scalable and fast approach to analyse this data. In this article I will explain how we use Apache Hadoop and why we open-sourced our PCAP implementation for it.

In 2009, ICANN announced that it would start development for a signed root zone and intends to have DNSSEC in the root zone operational within a year. The RIPE NCC has been supporting the adoption of DNSSEC from the beginning. As the operator of K-root, we had to ensure that it was ready for the signed root zone. We partnered with NLNetLabs to analyse the changes that would be necessary for our infrastructure ( whitepaper ) and made sure we deployed the capacity needed for the rollout of the signed root zone.

We performed a lot of analysis of real-world K-root traffic as part of this research. We noticed that our regular use of libpcap was too slow for us to iterate on the results. At the same time, Apache Hadoop had its early releases and got a lot of positive feedback. Amazon released Elastic MapReduce , which runs on their EC2 cloud, so we could test our idea without a major upfront investment in infrastructure.

The only downside was that there was no support for PCAP in Apache Hadoop. Within a week we hacked up a basic proof-of-concept to test the idea. Our initial results were encouraging. Our native Java PCAP implementation was able to read 100GB of PCAP data spread out over 100 EC2 instances within three minutes. What followed was the introduction of our own Apache Hadoop cluster for both storage and computation at the RIPE NCC. To date, this cluster has a capacity of 200TB and 128 CPU cores for computation. We use this cluster for the storage of our research datasets and do ad-hoc analysis on these.

So, that's how we got to where we are today. We believe that this piece of software is useful to many. I am proud that we have released the current status of our development as version 0.1 on 7 October 2011 and have open-sourced it on GitHub. We choose the LGPL as license to ensure that you can use it within your organisations as you see fit, but keep true to the spirit of our initial idea to open-source it.

The software can be found here: https://github.com/RIPE-NCC/hadoop-pcap

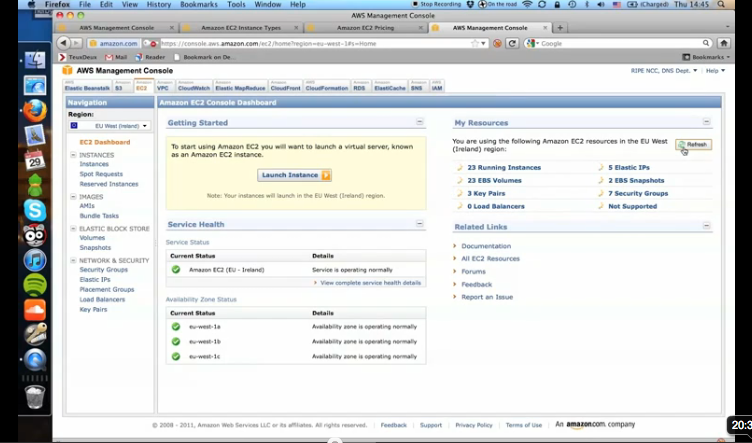

To allow you to quickly test this, we have also compiled a Screencast which shows you step-by-step instructions on how to use this library in Amazon ElasticMapreduce (click on the image to start the video):

I hope that you find this useful. If you have any feedback, we encourage you to comment under the article. If you have any feature requests, please file them as an issue on our GitHub tracker .

Comments 17

The comments section is closed for articles published more than a year ago. If you'd like to inform us of any issues, please contact us.

Anonymous •

OK, seriously; why would you use one of the most bloated, low-performing high-level languages known to man to process PCAP-data when you have close to perfect C-libraries (like libtrace) available? What you gain by doing this your way, unless I'm missing something fundemental, is:<br />1. Extremly poor performance (100GB data in 3 minutes is OK with 1 thread, you have 100 threads?!?! That is /1 GB DATA PER 3 MINUTES/!!! You could use Excel to achieve this...)<br />2. You have to support your own bloated code, whilst libtrace is free to use.<br />3. Losing face by telling people you can process 1 GB data in 3 minutes when this is something that could be done 10 years ago in a lower level language.<br /><br />Sorry guys but, wtf are you doing here... ;p<br /><br /> /Anonymous, been using libpcap and libtrace for long enough to realize how utterly silly this article was. ;p

Anonymous •

Hi Anonymous, <br /><br />Thank you for your feedback - although it would be beneficial to us and the community to know who you are. <br /><br />I'd like to avoid starting a programming language war here. I do agree with you that Java is bloated and would like to use something else to gain more performance for this. However the distributed computing Hadoop offers is implemented in Java and the benefits that this brings to the table far outweigh the performance penalty. <br /><br />The power of this system for us really comes at scale - we use libtrace, libpcap, etc. extensively and will continue to do so. For us it is very useful to be able to iterate over TBs of data within a reasonable amount of time and being able to lower that time by simply adding computing capacity. With libtrace our only way of scaling was vertical and if we reached that limit we had to compute batches on different machines and later merge those results into one which was an error-prone process. <br /><br />I cannot agree, however, with your claim that you can do 100GB of data in a single thread with libtrace in three minutes. As I mentioned, we use it extensively on modern hardware and have never reached performance levels anywhere close to this. I just ran a 1GB PCAP (uncompressed) and it took 1.5 minutes to read it. This was discarding all the output and therefor not producing any IO. <br /><br />Regards, <br />Wolfgang

What will you do with that •

Buddy 1 GB of data in 1.5 min!! My god thats too much. I think you have not heard about 10 gbps systems means round about 1.5 GB of data per sec. I was reading about RIPE NCC thinking it will help me, but I think I can help you guys more as I have achived analytis for 10 gbps and was thinking of going for more. Why Hadoop have you guys not heard of anything else than Hadoop? You can better build your own data structure than going for hadoop, I believe it will perform faster Contact me on rakesh.sinha@netafore.com

Anonymous •

Did you base your views on Java's performance on empirical data or some article you once read in 1998? Here's some real data to correct your misinformation:<br /><br /> <a href="http://shootout.alioth.debian.org/u64q/which-programming-languages-are-fastest.php" rel="nofollow">http://shootout.alioth.debi[…]g-languages-are-fastest.php</a> <br /><br />PS Excellent article and an excellent project. Well done RIPE NCC!

Kostas Zorbadelos •

Very nice job. I especially appreciated the screencast / tutorial on Hadoop and Amazon EC2 services.

215LAB •

Excellent project! And thank you for releasing hadoop-pcap libraries, I look forward to playing with this extensively. Well done fellas.

sally •

I already announced Pcap inputformat module for large pcap trace file distributed into more than one block. The paper,"A Hadoop-based Packet Trace Processing Tool" also pronounced in TMA 2011. To handle large pcap trace file, I devised heuristic algorithm and implemented packet trace analysis tool, which benchmarked CAIDA's CoralReef tool and source code was also released publicly. But, my concern is that my pcap input format module name is PcapInputFormat which is same with yours. In my opinion, the functionality is different. Yours just make the pcap file do not splitable and process whole file with one thread. That's the reason why performance is not good.

Mandoman •

Great work on this. I have been using tools like libnids to serialize the pcaps of specifically HTTP data into text for use with hive/hadoop. Interesting stuff, a native implementation is obviously better on a number of fronts. A couple of questions, is there any dev going on for other protocols other than TCP/UDP level and DNS? I'd love to see some SMTP, HTTP, etc Even other protocols. If you see SSL serialize the cert information on the connection, if you see SSH, grab some banners or what ever information you can. Proxy unwrappers etc. Great stuff here.

Wolfgang Nagele •

Hi Mandoman, Thanks for the feedback. We currently don't plan to implement other protocols ourselves. We did anticipate such demand and have written the library to enable the addition of additional protocols easily. Please check out the instructions to 'Write a reader for other protocols' here: https://github.com/RIPE-NCC/hadoop-pcap/tree/master/hadoop-pcap-lib Regards, Wolfgang Nagele, RIPE NCC

sri •

At first I thought Anonymous's comment was just flame bait, but man this stuff is slow. It takes 6 minutes to go through a 2GB DNS file, libtrace+some custom DNS dissectors can chomp through that in 27 seconds. The slowdown is ridiculous.

john bond •

Hello Sri, Thank you for the response. We acknowledge that using the Hadoop framework adds some latency to processing data. The benefit of using Hadoop is its ability to scale, allowing one to process data at the terabyte/petabyte scale. As demonstrated in the article one can process 100G of data in in half the time it took to process a 2GB by simply adding more machines. Regards John Bond RIPE NCC

chris •

nice article on why the elsa log parsing engine doesn't parse pcaps etc with hadoop http://ossectools.blogspot.co.uk/2012/03/why-elsa-doesnt-use-hadoop-for-big-data.html

zoltan[dot]gelencser[at]gmail •

Hi Anonymus, Drop me a mail, if your read this (after years) I have a project for you. Thanks!

Mayank •

Hi, I am new to Hadoop and want to test Hadoop-pcap on simple HTTP pcap files. I have single node cluster installed in my PC. I need to know how to use this library and what are the functionalities. It will be beneficial for me if I have an example program which I can run on my hadoop.

Romeo Zwart •

Hi Mayank, If you check the github link that Wolfgang provided in the article, you will find (in the ReadMe file) a link to an example using Hive that will get you started. Kind regards, Romeo

Yimi •

hello, I have found that there is a problem with class of CombinePcapInputFormat about the version on github,maybe the constructor function is wrong.The version 1.1 is right. Now I need to change some code to fit my application.So that I need the right source code.Could you help me? would you put the version 1.1 on github ?Or would you email me the source code of version 1.1?My email adress is yxm1348@163.com. Thanks a lot. Yimi

Mirjam Kühne •

Dear Yimi, the author informs me that the version 1.1 is here in source: https://github.com/RIPE-NCC/hadoop-pcap/releases/tag/1.1 and as binary in the repository as described here: https://github.com/RIPE-NCC/hadoop-pcap I hope this helps.