When blackouts become a frequent reality, keeping network sites online requires more than ad-hoc fixes. This article outlines a power management system, tested in practice, with design notes and an operator playbook.

This article was winner of the RIPE Labs article competition for RIPE 91.

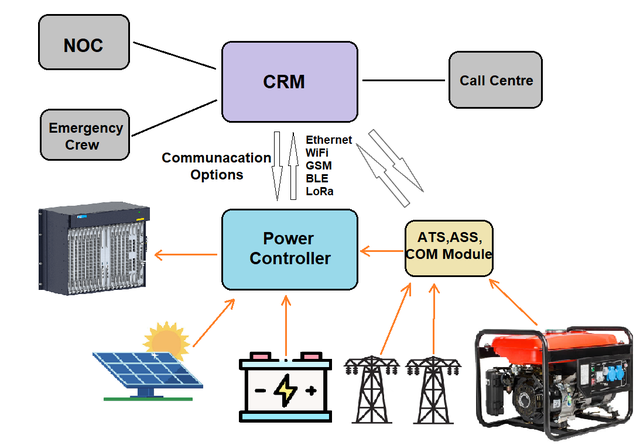

Keeping networks online during repeated blackouts comes with a number of challenges. To address this, I built and deployed a fast-charge, cloud-aware power management system for network nodes. It adds thermal control, per-cell battery monitoring, cell balancing, support for multiple battery types, multiple energy sources (mains, battery, generator, solar), and telemetry suitable for a Network Operations Centre (NOC). This article shares the architecture, algorithms, an operator run-book, and KPIs you can reuse.

Why network nodes fail first in a power crisis

When the grid becomes unstable, network nodes are often the first critical points to fall out of SLA. There are a number of reasons for this. Optical Line Terminal (OLT) stations feed entire neighbourhoods and define customer-visible uptime; core/aggregation determines regional packet loss; and DWDM/EDFA transport is unforgiving to power and thermal excursions. Conventional UPS configurations also struggle because recharge windows are short, battery stress accumulates, and visibility into real battery health is poor or absent. Keeping the Internet up under these conditions starts with site-level energy resilience.

In Ukraine, where power outages have become frequent due to war and damaged infrastructure, network nodes are particularly vulnerable, making resilience against blackouts a critical priority for maintaining Internet access.

Designing resilient power systems for network sites

Let's start by highlighting the main considerations and objectives for resilient power management at network sites.

Objectives for a production-grade power management system

- Safe, fast recharge between outages via adaptive CC/CV with realtime feedback

- Thermal discipline (active heating/cooling; safe temperature windows for charge)

- Multi-chemistry support (Li-ion / LiFePO₄ / lead-acid) with software-selectable 12-80V output

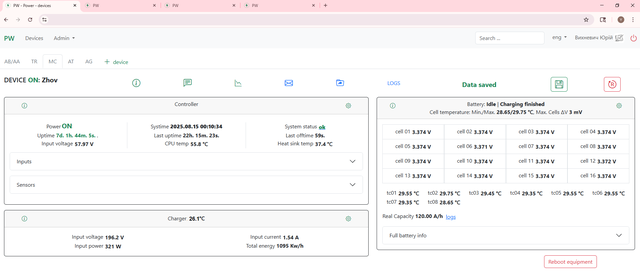

- Granular insight (per-cell V/T, state-of-health, internal resistance, capacity tracking; cell balancing)

- Cloud-aware ops (real-time telemetry, alarms, remote parameter changes, logs, automation)

- Site information (load policies; remote control of ventilation/AC; safe reboots of edge gear)

System architecture (network site variant)

- Battery pack + cell monitor: Per-cell voltage and temperature, cell balancing, SoC/SoH/ESR, periodic capacity audit

- Power stage: CC/CV/Adaptive charging with hardware limits; real time control; over/under-V/T safeguards

- Thermal Subsystem: Preheating to ~30–35°C before fast charge in cold climates and active cooling when above safe thresholds.

- I/O & comms: Ethernet, RS-485, CAN, LoRa; digital/analogue I/O for ATS/relays/sensors

- Cloud service: Live metrics, alarms, remote configuration changes, historical logs, anomaly detection, and automation scenarios

- Site control: A local policy engine prioritises core/aggregation → OLT → optical transport; controls fans/AC; performs safe reboots of devices at remote POPs

Fast-charge, but safe (the algorithmic core)

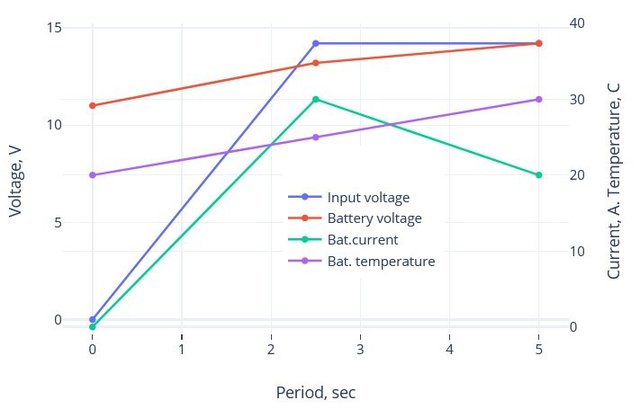

I extend classical CC/CV with pulsed energy delivery and feedback loops. Short charge pulses and brief rests let the controller observe voltage/temperature dynamics and adapt current to the pack’s real condition, as shown in the figure below:

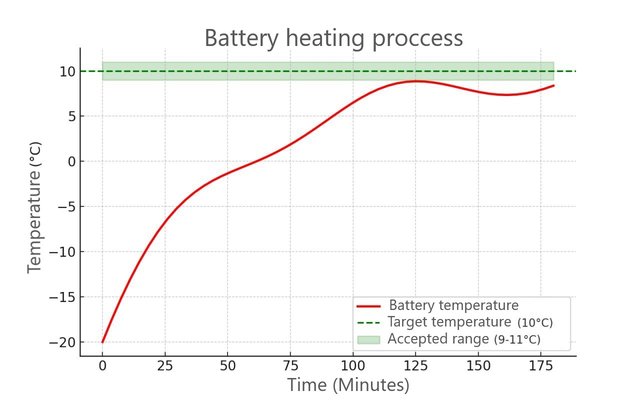

Before fast charge at low ambient temperatures, the BMS preheats the pack into a safe thermal window. The next graph (below) illustrates the heating process, showing how the BMS raises the battery temperature from -20°C to 10°C over 175 minutes, staying within the safe range (9–11°C) for charging.

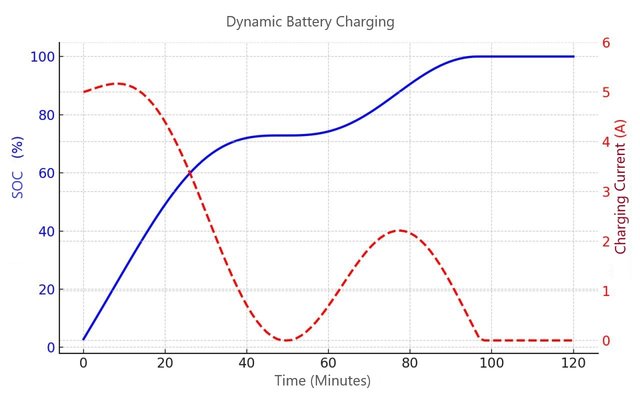

This last graph demonstrates dynamic charge regulation, showing the charge level (blue line) rising from 0% to ~100% over 120 minutes and the charge current (dashed red line) varying from 0 to 5 A, reflecting the adaptive CC/CV algorithm with pulse-based control.

The controller tracks internal resistance and cell variance to avoid over-stress, and performs active balancing near the end of charge to prevent chronic overcharge of “stronger” cells.

Operational effect: Recharge windows remain usable even with frequent outages while staying inside safe electrical and thermal envelopes. When a blackout is scheduled or grid instability is detected, the controller can boost-charge shortly beforehand to increase runtime without keeping cells at 100% for long periods.

Cloud-aware NOC integration (no vendor lock-in)

The system streams telemetry (voltages, currents, temperatures, SoH/ESR, capacity estimates, etc), raises alarms for critical conditions, and allows operators to change parameters remotely (charge limits, thermal thresholds, chemistry profiles, etc). A logbook records outages, configuration changes, and abnormal events for post-mortems. The same cloud layer controls site peripherals (ventilation, AC) and can reboot frozen devices in remote cabinets. Integration uses open physical interfaces, so each operator can connect it to their own NMS.

Deployment notes for network sites

- Voltage rails: software-select 12-80V to match OLT/EDFA/transport gear without hardware swaps

- Chemistry profiles: Li-ion, LiFePO₄, and lead-acid with per-chemistry charge/temperature limits

- Thermal layout: outdoor cabinets overheat in summer and underperform in winter; pair the controller with heaters and fans/AC and let it to manage them.

- Safety: multi-level protection (over/under-V, over/under-T) and hooks for fire suppression

- Fleet scale: firmware-driven configuration for hundreds of similar nodes; low BOM enables scale-out

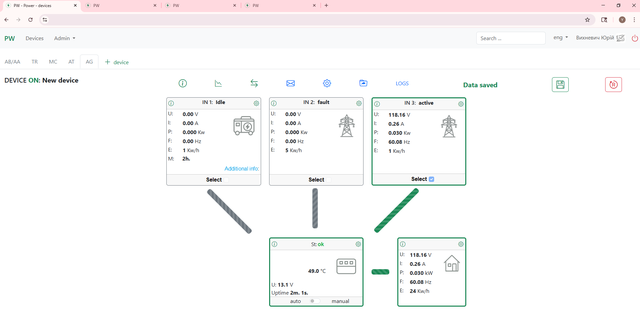

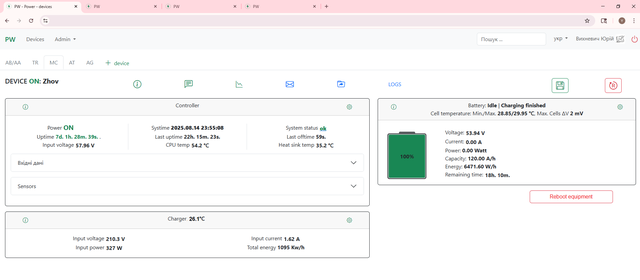

Web interface

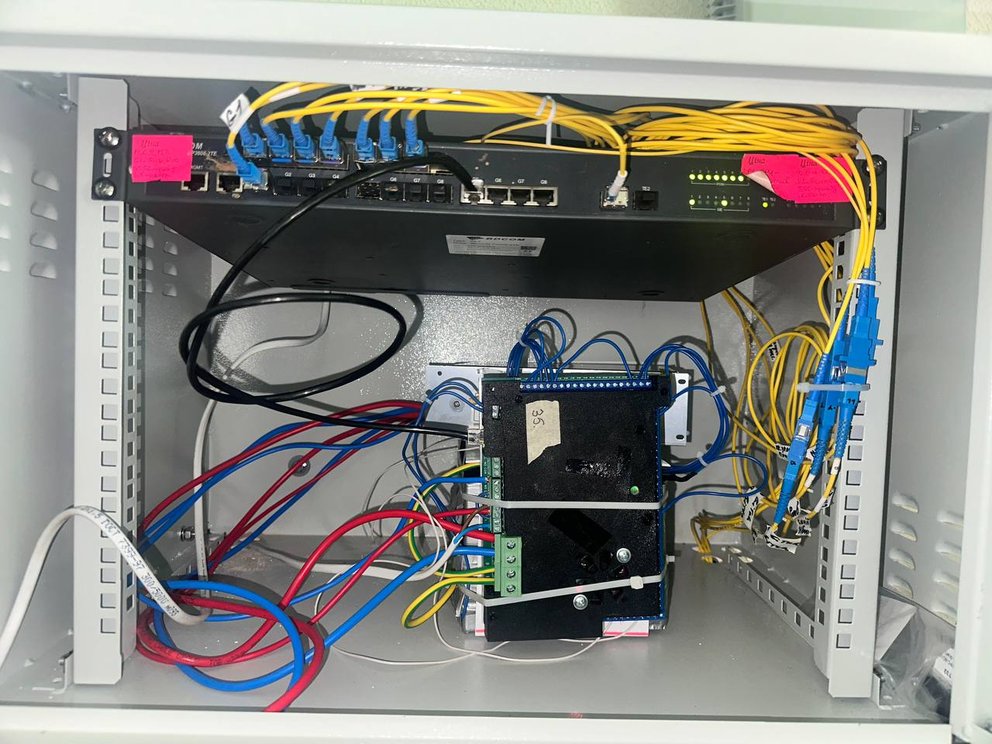

Having a clear, NOC-friendly web interface ensures the system isn’t just technically sound inside the box but that it's also practical for operators to monitor and manage in the broader project.

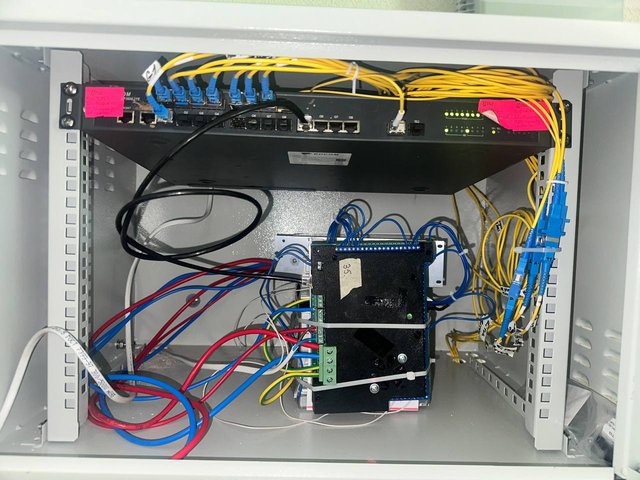

And for those who are interested, here's what things look like inside the box:

Operator playbook

With the system in place, here’s a playbook operators can use to put it into practice, followed up with some real-world notes on what to do when things break and how to keep things secure.

- Prioritise sites: start with OLTs, core/aggregation, and DWDM/EDFA transport

- Hardware checklist:

- Per-cell monitors and balancers;

- temperature sensors;

- thermal actuators;

- relays/ATS;

- interfaces to your NMS

- Charge parameters:

- Adaptive CC/CV/Adaptive

- per-chemistry voltage/current ceilings

- preheat to ~30–35°C before fast charge in cold

- set balancing thresholds near end of charge

- Cloud/NOC: enable alarms for over-T, under-T (do not charge Li chemistries below 0°C), cell variance, rising ESR; allow remote parameter edits and automation for fans/AC

- Load policy: prioritise core/aggregation → OLT → transport; shed non-critical loads automatically and restore after stabilisation.

- KPIs to track:

- MTTR/MTBF

- runtime margin at cutover

- frequency of critical events

- % time in safe temp window

- cell variance

- ESR trend

- capacity delta per quarter

- truck rolls avoided

- Blackout play: if you have outage schedules, pre-charge before cuts. If you don’t, trigger boost-charge on grid-instability heuristics. Return to a buffer/float regime after the event to reduce premature aging.

- Quarterly audits: capacity test; ESR trend; thermal system inspection; sensor recalibration

- Logging and audit: export alarms and configuration diffs to your SIEM/ticketing for auditability.

What broke (and how I fixed it)

- Cabinet overheat in summer → Integrate fan/AC control with the controller; throttle charge current as temps drift up

- Mixed chemistries across sites → Auto-detect profiles on deploy; standardise templates fleet-wide

- Sensor drift → Periodic recalibration and self-diagnostics; treat outliers as maintenance triggers

Security, safety and responsibility

This system controls high currents and site peripherals. Always follow local electrical codes and fire-safety rules; keep independent hardware interlocks separate from firmware logic; test changes in a lab before rolling to production cabinets.

What you can reuse today

- High-level implementation guidance and a deployment checklist for network sites

- Telemetry schema (high-level) and example alert maps (no proprietary thresholds)

- Automation recipes (high-level) for pre-charge triggers, load shedding, and site climate control

- Auditability: export alarms and configuration diffs to SIEM/ticketing

Conclusion

For ISPs, power resilience is where Internet resilience begins. A fast-charge, thermal-disciplined, cloud-aware system keeps critical nodes online through repeated outages while extending battery life and reducing truck rolls. This approach was designed and fielded with network nodes in mind; I hope the architecture, runbook, and KPIs help you do the same. For operators in blackout-prone regions, contact RIPE Labs or me via yura@bestlink.in.ua for telemetry templates or deployment advice.

Comments 0