DNS is a complex set of protocols with a long history. Some TLDs go as far back as 1985, but not all of them are built equal, and they do not all perform the same depending on where in the world the end user is located. So just how different are they?

History

DNS was born out of a need that was already present in ARPANET, which already used TCP/IP. To contact machines more easily, people back then used a hosts.txt file, but this didn't scale very well. WHOIS was then created in its first iteration in RFC812, which made it possible to query information about the hosts.txt file without contacting the SRI Network Information Center (NIC) by telephone. Soon after, in 1983, the first version of DNS was specified in RFC882, with the BIND package following one year later.

ARPA was the first TLD. Then came the first gTLDs (generic TLDs): COM, ORG, NET, EDU, MIL, INT and GOV. The initial ccTLDs (country code TLDs) were also introduced at that point: US, UK and IL. IDN TLDs (Internationalised Domain Names in Unicode) were defined much later, in RFC3490 in 2003.

As the Internet became popular, more and more ccTLDs and gTLDs were created, and all of them needed to be managed. ICANN was only created in 1998; before that, IANA delegated management to various organisations.

Problems

DNS has evolved over the years. The introduction of IPv6 on the Internet and DNSSEC (which started to be discussed in 1997 in RFC2065) are examples of this evolution. However, for legacy TLDs, IANA/ICANN left the implementation of these changes to the discretion of the maintainers.

That means that we can't assume IPv6 or DNSSEC to be implemented on all of them. Some TLDs even require a registrar to send an email to the TLD management organisation to register a new domain. A human then has to manually edit the zone (see the media.ccc.de talk on running a domain registrar, listed in the sources).

Furthermore, the way people use domains has evolved. Back in the day, registering a .be in Belgium or a .fr in France made sense. People now compose names that include the TLD itself. An example of that is youtu.be.

This raises a question. If someone is located in South America and wants to access youtu.be, is their performance going to be impacted (assuming they have to do the entire recursive lookup with no cache)?
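To make the question concrete, an uncached lookup can be modelled as one round trip per delegation level: the root, the TLD, then the domain's own name servers. The sketch below is only a back-of-the-envelope model. The `Hop` class, the function names and every latency value are invented for illustration and are not measurements.

```python
from dataclasses import dataclass


@dataclass
class Hop:
    """One step of an uncached recursive lookup (values are made up)."""
    zone: str
    rtt_ms: float


def cold_lookup_ms(hops):
    """Total time when every delegation level is queried exactly once."""
    return sum(hop.rtt_ms for hop in hops)


def slowest_hop(hops):
    """Return the delegation level that contributes the most latency."""
    return max(hops, key=lambda hop: hop.rtt_ms)


# Hypothetical round-trip times as seen from one resolver, not real data.
lookup = [Hop(".", 20.0), Hop("be.", 180.0), Hop("youtu.be.", 25.0)]
print(cold_lookup_ms(lookup))
print(slowest_hop(lookup).zone)
```

In this made-up case the TLD step dominates the total, which is the situation the question is worried about.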

Solution

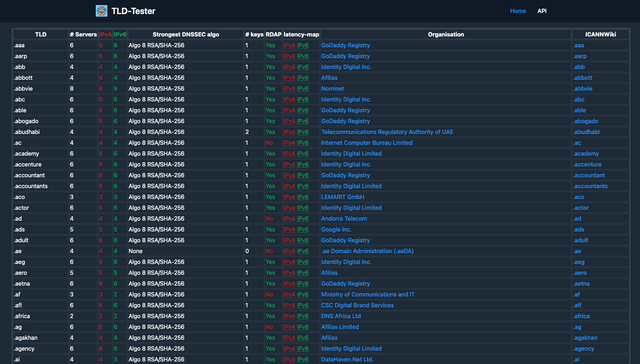

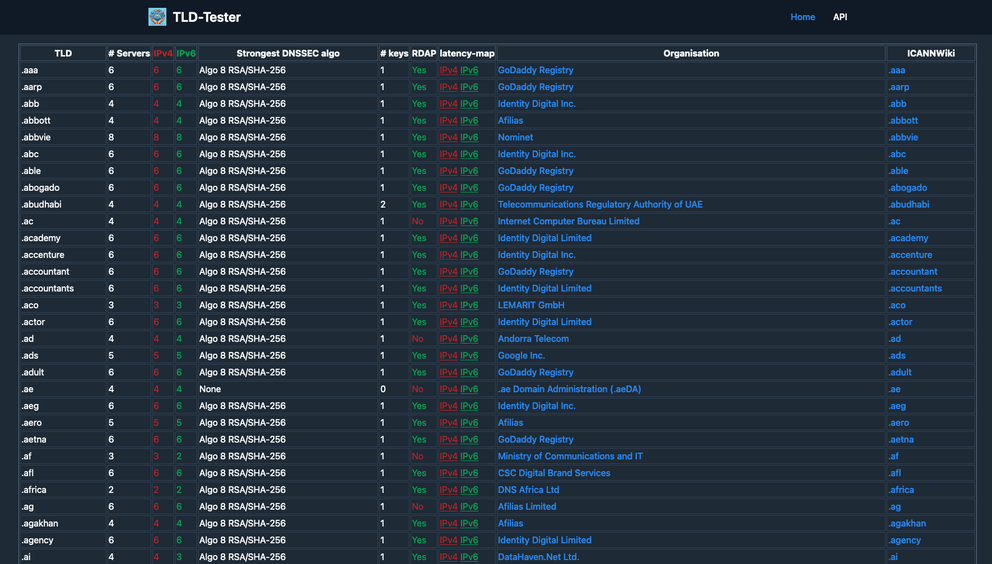

Lack of easy access to DNSSEC, latency, IP stack and maintainer's organisation information for every TLD led to the creation of https://tldtest.net (the project source code is hosted on GitHub under GPLv3).

There is still work to do regarding the user interface. The idea is to put the crucial information about all the TLDs on a single page.

tldtest uses IANA/ICANN sources to get a list of all the currently active TLDs. For each TLD, it gives the number of servers, the number of servers reachable over IPv4 and over IPv6, their DNSSEC algorithms, the number of DNSSEC keys in use to sign the zone, whether it runs RDAP or not, a latency map and the name of (and link to) the organisation that manages it.
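As an illustration of the IPv4/IPv6 server count, here is a minimal sketch that classifies a list of name server addresses by IP family using Python's standard `ipaddress` module. It is not tldtest's actual code; the function name and the addresses (taken from the documentation ranges) are assumptions.

```python
import ipaddress


def count_ip_families(addresses):
    """Count how many name server addresses are IPv4 and how many are IPv6."""
    counts = {"ipv4": 0, "ipv6": 0}
    for text in addresses:
        # ip_address() raises ValueError on anything that is not a valid IP.
        version = ipaddress.ip_address(text).version
        counts["ipv4" if version == 4 else "ipv6"] += 1
    return counts


# Documentation-range addresses, used here as placeholders only.
example_addresses = ["192.0.2.53", "198.51.100.53", "2001:db8::53"]
print(count_ip_families(example_addresses))
```

A TLD whose `ipv6` count is zero cannot be resolved from an IPv6-only recursive resolver.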

RDAP

The Registration Data Access Protocol (RDAP, RFC7482) is set to replace the WHOIS protocol (RFC3912). The problem with WHOIS is that it is really hard to script. RDAP lets users query the same information as WHOIS, and more, through a RESTful web access pattern. Depending on how it has been implemented, it can even return more information to authenticated users.
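To show why this is easier to script than WHOIS, here is a minimal sketch of an RDAP domain lookup: the query is a plain URL of the form `<base>/domain/<name>` and the answer is JSON. The base URL `https://rdap.example.net/` and the sample response are hand-written placeholders, and the helper names are hypothetical. The `nameservers` and `ldhName` fields follow the RDAP JSON response format as I understand it.

```python
import json
from urllib.parse import quote


def rdap_domain_url(base_url, name):
    """Build an RDAP domain lookup URL: <base>/domain/<name>."""
    return f"{base_url.rstrip('/')}/domain/{quote(name.strip('.').lower())}"


def nameserver_names(rdap_domain):
    """Extract name server host names from a parsed RDAP domain object."""
    servers = rdap_domain.get("nameservers", [])
    return sorted(ns["ldhName"].lower() for ns in servers if "ldhName" in ns)


# Hand-written sample shaped like an RDAP domain response; not real data.
sample = json.loads("""
{
  "objectClassName": "domain",
  "ldhName": "example",
  "nameservers": [
    {"objectClassName": "nameserver", "ldhName": "b.ns.example"},
    {"objectClassName": "nameserver", "ldhName": "A.NS.EXAMPLE"}
  ]
}
""")
print(rdap_domain_url("https://rdap.example.net/", "Example."))
print(nameserver_names(sample))
```

Fetching the URL with any HTTP client and passing the decoded JSON to `nameserver_names` is all that is needed, with no free-text parsing.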

ICANN mandates RDAP implementation on all its new TLDs. Sadly, it can't enforce it on the legacy ones, which is why, even within the same organisation, some TLDs have it and some don't.
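One way to check whether a given TLD offers RDAP is to look it up in an RDAP bootstrap file, where each entry of `services` pairs a list of TLDs with a list of base URLs. The sketch below assumes that structure and uses a hand-written sample. The function name, the TLD labels and the URL are placeholders, and this is not necessarily how tldtest performs the check.

```python
def rdap_base_urls(bootstrap, tld):
    """Return the RDAP base URLs registered for `tld`, or [] if there are none."""
    wanted = tld.strip(".").lower()
    for entry in bootstrap.get("services", []):
        tlds, urls = entry[0], entry[1]
        if wanted in (label.lower() for label in tlds):
            return list(urls)
    return []


# Hand-written sample in the bootstrap layout; not real registry data.
sample_bootstrap = {
    "version": "1.0",
    "services": [
        [["example", "test"], ["https://rdap.example.net/"]],
    ],
}
print(rdap_base_urls(sample_bootstrap, "Example."))
print(rdap_base_urls(sample_bootstrap, "legacy"))
```

An empty result means the TLD has no RDAP service listed, which is the case the paragraph above describes for some legacy TLDs.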

While https://tldtest.net was being built, rdap.iana.org was implemented, which made it possible to query most information about every TLD and greatly simplified development.

DNSSEC

DNSSEC is a way of signing zones to protect against cache poisoning attacks. Various signing algorithms are used, each identified by a number in the IANA DNSSEC algorithm numbers registry. The most common ones are algorithm 8 (RSASHA256) and algorithm 13 (ECDSAP256SHA256). Ideally, the DNS zone of a given domain should use the same DNSSEC algorithm as its TLD.

DNSSEC is based on a chain of trust, and a chain is only as strong as its weakest link. This means that if your TLD uses algorithm 8 and you sign your zone with algorithm 13, which is stronger, you only add processing time without adding security. Conversely, if your TLD uses algorithm 13 and your zone is signed with algorithm 8, your zone becomes the weakest link.
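The weakest-link reasoning can be written down as a small sketch. The strength ranking below is an assumption made purely for illustration: it only encodes the article's statement that algorithm 13 is stronger than algorithm 8, and the zone names are placeholders.

```python
# Mnemonics for the two algorithm numbers discussed in this article.
ALGORITHM_NAMES = {8: "RSASHA256", 13: "ECDSAP256SHA256"}

# Assumed ranking (higher is stronger), based only on "13 is stronger than 8".
ASSUMED_STRENGTH = {8: 1, 13: 2}


def weakest_link(chain):
    """Return the (zone, algorithm) pair with the lowest assumed strength.

    `chain` is ordered from the TLD down to the signed zone; on a tie the
    entry closest to the TLD is returned.
    """
    return min(chain, key=lambda link: ASSUMED_STRENGTH[link[1]])


stronger_zone = [("be.", 8), ("example.be.", 13)]
weaker_zone = [("be.", 13), ("example.be.", 8)]
print(weakest_link(stronger_zone))
print(weakest_link(weaker_zone))
```

In the first chain the TLD remains the limiting factor despite the stronger zone signature. In the second, the zone itself is.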

Latency map

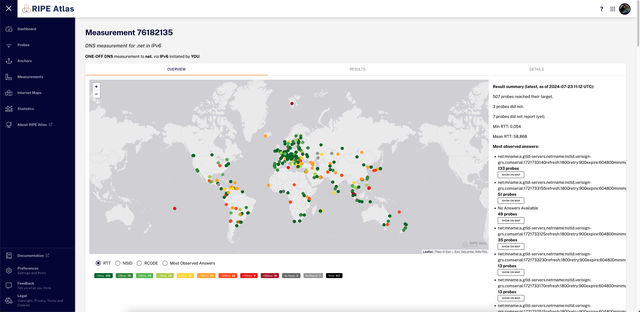

The latency map represents the time it takes a given RIPE Atlas probe to get a response to the following command:

dig -t soa <tld>
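For readers who want to see roughly what such a query looks like on the wire, here is a minimal sketch using only the Python standard library. It builds a plain SOA query with the "recursion desired" flag and times one UDP round trip. It is not byte-for-byte what dig sends, and it is not how the Atlas probes perform the measurement. The function names are hypothetical, the resolver address passed to `time_soa_query` is assumed to be IPv4, and an IDN TLD would have to be given in its ASCII (A-label) form.

```python
import socket
import struct
import time

SOA, IN = 6, 1  # DNS record type SOA and class IN


def build_soa_query(tld, query_id=0x1234):
    """Encode a DNS query for the SOA record of `tld` (recursion desired)."""
    # Header: ID, flags (RD bit set), 1 question, no other records.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    labels = [label for label in tld.strip(".").split(".") if label]
    qname = b"".join(bytes([len(label)]) + label.encode("ascii") for label in labels)
    return header + qname + b"\x00" + struct.pack("!HH", SOA, IN)


def time_soa_query(tld, resolver, timeout=3.0):
    """Send one query over UDP and return the round-trip time in milliseconds."""
    packet = build_soa_query(tld)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        start = time.perf_counter()
        sock.sendto(packet, (resolver, 53))
        sock.recvfrom(4096)  # raises socket.timeout if nothing comes back
        return (time.perf_counter() - start) * 1000


print(build_soa_query("be").hex())
```

Calling `time_soa_query("be", "<resolver address>")` against a local resolver gives a single latency sample from your own vantage point.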

Many TLDs claim to be anycast. But the term "anycast" in itself is vague. Anycast means that multiple servers are connected to different entry points in the network with the same IP. In theory, this will accelerate resolution time, but "anycast" doesn't specify where those servers are geographically situated. It could be in the same country or it could be around the world.

Although having anycast servers for your zone will have a positive impact on resolution time, the recursive nature of the DNS resolution process means that time can still go up if your TLD itself has a long response time. This metric is often ignored. To determine these values and place them on a map, I used a self-written script that selects RIPE Atlas probes worldwide according to defined constraints (such as the probe's ability to reach targets over IPv6, being on various ASNs, etc.).
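The selection idea can be sketched as follows. This is not the author's script (that one is linked in the sources as ripe-atlas-world-probe-selector), only a minimal illustration of filtering on IPv6 capability and spreading probes across countries and ASNs. The probe dictionaries are invented, and the field names `country_code`, `asn_v4` and `asn_v6` are assumptions about how probe metadata is shaped.

```python
def select_probes(probes, per_country=1, require_ipv6=True):
    """Pick up to `per_country` probes per country, each on a different ASN."""
    chosen, seen_asns, country_counts = [], set(), {}
    for probe in sorted(probes, key=lambda p: p["id"]):
        asn = probe.get("asn_v6") if require_ipv6 else probe.get("asn_v4")
        country = probe.get("country_code")
        # Skip probes without the required connectivity or on an ASN already used.
        if asn is None or country is None or asn in seen_asns:
            continue
        if country_counts.get(country, 0) >= per_country:
            continue
        chosen.append(probe["id"])
        seen_asns.add(asn)
        country_counts[country] = country_counts.get(country, 0) + 1
    return chosen


# Invented probe metadata using private-use ASNs; not real probes.
sample_probes = [
    {"id": 1, "country_code": "BE", "asn_v4": 64500, "asn_v6": 64500},
    {"id": 2, "country_code": "BE", "asn_v4": 64501, "asn_v6": 64501},
    {"id": 3, "country_code": "BR", "asn_v4": 64502, "asn_v6": None},
    {"id": 4, "country_code": "BR", "asn_v4": 64503, "asn_v6": 64503},
    {"id": 5, "country_code": "CL", "asn_v4": 64500, "asn_v6": 64500},
]
print(select_probes(sample_probes))
```

Requiring distinct ASNs trades coverage for diversity: in the sample above, the only probe in CL is dropped because its ASN is already represented.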

Then, I automatically create measurements for every single TLD in IPv4 and IPv6, with the DNS resolution performed on the probe itself (please note that the probe is not capable of doing recursive resolution on its own, so it uses a local resolver).
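As a rough idea of what creating these measurements involves, the sketch below builds one request body per TLD and address family. It only constructs the data and sends nothing. The field names (`query_type`, `query_argument`, `use_probe_resolver`, `is_oneoff` and so on) reflect my reading of the RIPE Atlas measurement API and should be checked against the current documentation; the function name and probe IDs are hypothetical.

```python
import json


def dns_measurement_payload(tld, probe_ids, address_family):
    """Build the body of a one-off SOA measurement using the probes' local resolver."""
    if address_family not in (4, 6):
        raise ValueError("address_family must be 4 or 6")
    definition = {
        "type": "dns",
        "af": address_family,
        "query_class": "IN",
        "query_type": "SOA",
        "query_argument": tld,
        "use_probe_resolver": True,  # the probe cannot recurse by itself
        "description": f"SOA {tld} over IPv{address_family}",
    }
    probes = {
        "type": "probes",
        "value": ",".join(str(probe_id) for probe_id in probe_ids),
        "requested": len(probe_ids),
    }
    return {"definitions": [definition], "probes": [probes], "is_oneoff": True}


# One payload per address family for a single TLD, with placeholder probe IDs.
payloads = [dns_measurement_payload("be", [1, 4], family) for family in (4, 6)]
print(json.dumps(payloads[0], indent=2))
```

Repeating this for every TLD and both address families is what makes the credit cost of a full run add up quickly.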

Sadly, I can't renew those measurements very often: although I asked, RIPE NCC was unable to raise my credit spending limit in RIPE Atlas, which would have allowed me to redo all my measurements every now and then.

Please note, a recent RIPE Labs article written by Ulka Athale - How We Distribute RIPE Atlas Probes - exposes a problem that I also experienced in this case. The geo-distribution of Atlas probes is mostly concentrated in Europe and the USA. So although I tried varying the sources, I couldn't get data for every single country. But we can still see patterns in it.

Conclusion

Not all TLDs are built the same. Consideration should go into their security, their supported IP stacks and which organisations manage them. Try not to put all your eggs in the same basket.

Don't forget to also consider the performance of the TLD you are going to use in the geographical zone of your target audience.

Sources

Namecheap - All about top level domains

IETF RFC882 - DOMAIN NAMES - CONCEPTS and FACILITIES

IETF RFC2065 - Domain Name System Security Extensions

IETF RFC3490 - Internationalizing Domain Names in Applications (IDNA)

IETF RFC3912 - WHOIS Protocol Specification

IETF RFC5890 - Internationalized Domain Names for Applications (IDNA)

IETF RFC7482 - Registration Data Access Protocol (RDAP) Query Format

MCH2022 media.ccc.de - Running a Domain Registrar for Fun and (some) Profit by Q Misell

GitHub - ripe-atlas-world-probe-selector

Comments 5

Stéphane Bortzmeyer •

"This was a precursor to DNS." whois, a precursor of the DNS??? "IDN TLD's (Internationalised Domain Names in Unicode) were defined much later on in RFC5890 in 2010." No, seven years before (RFC 3490) "Some TLDs even need a registrar to send an email to the TLD management organisation to create register a new domain. A human then has to manually edit the zone" There is nothing wrong with that, if that suits their constituency. The whole point of decentralisation (a strong feature of the DNS) is the ability to have different policies. "the amount of servers" It is an useful information, yes, but less important than the "strength" of the servers. bortzmeyer.fr has eight name servers but cannot be compared to .de (six servers) "It [ICANN] sadly can't enforce it on the legacy ones" See my point above about the freedom brought by decentralisation.

Arnold Dechamps •

Hello Stéphane, About the precursor to DNS, I guess this was bad phrasing on my end. About the IDN TLDs, indeed. I missed the first RFC. Both points are now corrected. Thank you for the heads up. About the DNS decentralisation, we agree that this is a good thing. However, I would assume that having it working in a standardised way (yet still decentralised) would be a good thing. The specific example I gave about the manual editing of the zone file has allegedly been a problem (mentioned in the MCH2022 talk) for some TLDs that have manually edited their zone and left errors in it. From what I understood, making the standards is the purpose of the IETF, but making TLDs actually implement them is the purpose of ICANN. About the amount of servers, there too, we agree. I was also thinking about that when writing tldtest. I haven't yet figured out a way to measure the specific "strength" of those servers in an objective way. I know that some TLDs have a better reputation than others when it comes to that, and other signs around the TLD itself might give clues (if the TLD implements DNSSEC algo 13 and RDAP, for example, you might get a clue that the team behind it spent more time and more budget on making it solid compared to a TLD that has 2 servers, no DNSSEC, no RDAP and no IPv6). I would be very interested if you have an idea of a way to objectively measure that. I'm open to discussion if you have time.

Named Bird •

I know ICANN is doing the gTLD program's Next Round. Then I read this post and it made me wonder: How IPv6-ready is the domain infrastructure? Which systems still rely solely on IPv4 and would break when IPv6 is the only thing available? So I have a suggestion: Register a new TLD during the program that is going to only use IPv6. All related systems, including the DNS servers, would run v6, and you can't register "A" records at all. It would be the ultimate IPv6-ready test for global infrastructure. While IPv6 is routable on the backbone level, it is by far not fully reliable or usable. Many end users, organisations, websites and more still need IPv4 to function correctly. Having the entire domain infrastructure v6-only will stand out wherever v6 is unavailable or broken. This would massively help with identifying and resolving such compatibility issues. Since I proposed this idea, I should probably also suggest a name: .VERSIONSIX Using this name, any connectivity issues would stand out. I'd LOVE to do this myself, but as a lone (graduating) IT student, I could never afford the fees. So handing the idea over to someone who can would be the most beneficial for IPv6 adoption. Please let me know whether this is a stupid or a smart idea, and/or if it's worth considering.

Arnold Dechamps •

The problem with that is that someone would have to manage the TLD and pay for it. Not only that, but how many domains would you realistically expect to host on it (knowing that people will have to pay for every domain they register while other TLDs offer dual stack for the same price or less...)? It's an interesting experiment to make. You would be surprised at the amount of domains that would just continue to work. The fact that most companies now use cloud services for everything has the benefit that most authoritative DNS servers in them are dual stack. So you might not access the website over IPv6, but you will get a response from the DNS. You can test that on your side. If you run a self-hosted recursive resolver on a v6-only network, you will have interesting results. Some TLDs will just not work (because they don't have v6 authoritative DNS servers). We did that at the HSBXL once. It was both surprisingly usable (at least in our part of the world, because our most commonly used TLDs work in IPv6) and breaking some stuff in bizarre ways that we couldn't have observed otherwise.

Named Bird •

Well, I guess ICANN would probably be just as interested in the results as us? I meant it as a temporary and unbuyable TLD which would only have a few test domains. By not doing domain registrations, they could relax certain requirements and relieve some concerns. This would make experimentation and/or debugging issues a lot easier. (Pushing to prod? ;-) Then when everything works, the domain would have served its function and be retired. IANA would have minimal burden or risk this way, which is important for them to even consider foregoing fees. I don't really want to focus on consumer websites, as we already know those (kind of) work on IPv6-only. I am more interested in the effects on OTHER systems, like for example WHOIS, DNSSEC, etc. There are many other aspects of the internet that haven't been properly v6 tested yet. Those are my target.