After a short service outage, I wanted to find out what had happened. Below is a case study of using the public RIPE NCC tools for this purpose.

I received this email last week:

Date: Fri, 17 Sep 2010 10:37:26 +0200
From: Anand Buddhdev <anandb@ripe.net>
To: undisclosed-recipients
Subject: K-root outage on 16 September

Dear colleagues,

Between 07:00 and 08:12 (UTC) on 16 September 2010, the performance of all the global instances of K-root was affected by a leaked prefix from one of the local instances. DNS queries towards K-root were either not answered, or there were delays in receiving responses. There was, however, no noticeable impact on global DNS root server operations.

We are working with the host of the affected instance to ensure that this does not happen again. We are taking additional measures to avoid such a disruption in the future.

Anand Buddhdev, DNS Services Manager, RIPE NCC

Naturally, I was curious to see what happened. I've documented the process I went through to give you insight into the tools we offer to look at operational events.

What happened to the service?

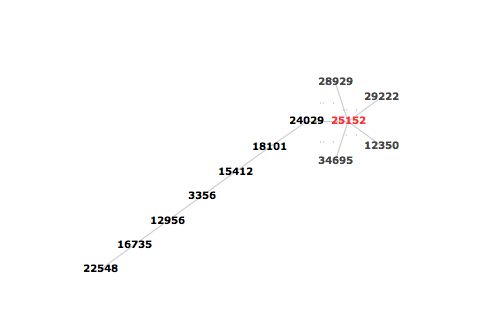

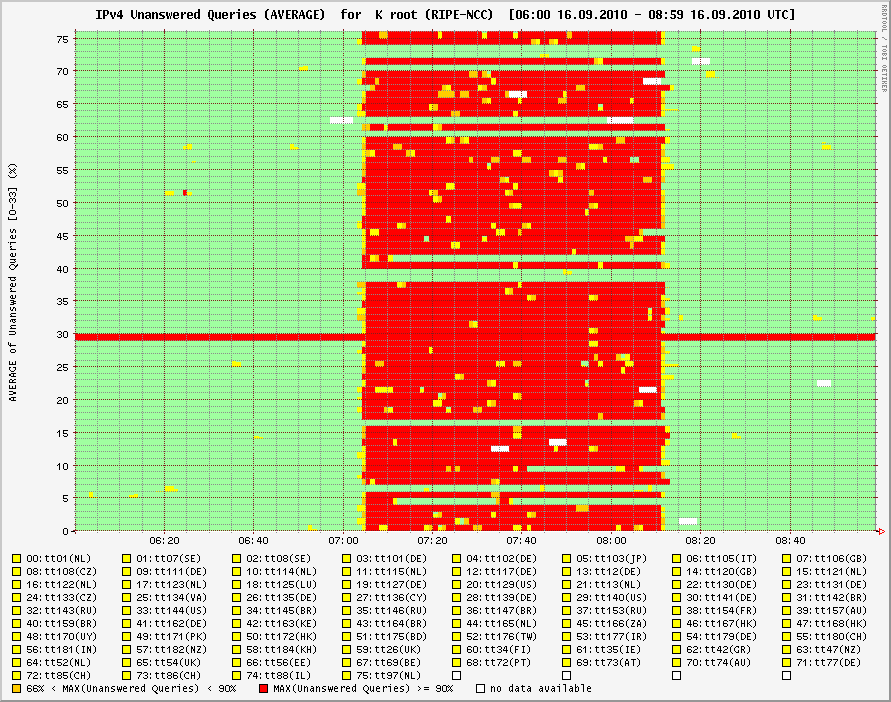

For DNS, we have dnsmon, a service that looks at quite a few DNS name servers serving the root and top-level domains around the world. After clicking through a few steps, I arrived at a graph that showed the impact of the event on the service level of k.root-servers.net, K-root for short:

This graph shows whether queries from 77 different clients on the Internet are answered by K-root. Time runs along the x-axis. There are 77 plots stacked above each other on the y-axis, one for each client. In each plot, green means that 'queries are answered', yellow means that 'many queries are not answered', red means that 'no queries are answered', and white means that no data is available because of problems internal to dnsmon.

The way the graphs are stacked makes it easy to see whether a problem is at the server end or at the client end. A vertical pattern like the one that dominates this graph indicates a problem at or near the server side because all clients experience a similar service degradation. Horizontal patterns like the one in row 29 indicate the problem is closer to the client side.
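The vertical-versus-horizontal reading described above can be sketched in a few lines of Python. This is only an illustration, not how dnsmon itself works: the one-letter status codes, the function name `classify` and the 50% threshold are all assumptions made for the example, and the sample grid is invented.

```python
from typing import List, Tuple

OK = "G"  # hypothetical one-letter code for "queries are answered"


def classify(grid: List[str], threshold: float = 0.5) -> Tuple[List[int], List[int]]:
    """Split problems in a client-by-time grid into server-side and client-side.

    grid[i][t] is the status of client i in time slot t.
    A time slot in which at least `threshold` of all clients have problems
    is a vertical pattern (server side). A client that has problems in at
    least `threshold` of the remaining slots is a horizontal pattern
    (client side). The threshold is an assumption, not a dnsmon value.
    """
    n_clients = len(grid)
    n_slots = len(grid[0])
    server_slots = [
        t for t in range(n_slots)
        if sum(row[t] != OK for row in grid) / n_clients >= threshold
    ]
    quiet_slots = [t for t in range(n_slots) if t not in server_slots]
    client_rows = [
        i for i, row in enumerate(grid)
        if quiet_slots
        and sum(row[t] != OK for t in quiet_slots) / len(quiet_slots) >= threshold
    ]
    return server_slots, client_rows


# Invented sample: five clients, six time slots (G = green, Y = yellow, R = red).
SAMPLE_GRID = [
    "GGRRGG",
    "GGRRGG",
    "GGGGGG",  # a client that never sees the outage
    "YYRRYY",  # a client with its own, unrelated problem
    "GGRYGG",
]

server_slots, client_rows = classify(SAMPLE_GRID)
print("server-side time slots:", server_slots)
print("client-side rows:", client_rows)
```

In the invented sample, the two middle time slots are flagged as a server-side event and the fourth client as a client-side problem, which mirrors how the eye separates the vertical band from a row such as row 29.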

This shows an almost complete outage of K-root. However, some clients remained totally unaffected. Since K-root is heavily anycast, it is likely that this was a routing problem rather than an outage of several K-root instances at exactly the same time. It is interesting to see that nine clients did not see any outage at all: lines 6 (IT), 16 (NL), 38 (FR), 39 (AU), 61 (IE), 63 (NZ), 70 (AU), 72 (CH) and 73 (CH). Four clients recovered more quickly than the others: 4 (DE), 9 (DE), 42 (KE) and 46 (HK).

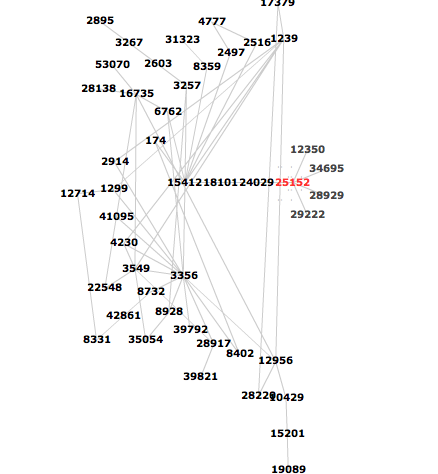

At this point, I already suspected routing problems rather than a simultaneous outage of several anycast instances. So, next I checked the IPv6 service:

And indeed, K-root's IPv6 service appeared to work just fine. Remember that horizontal patterns are indicative of problems near the client and not at the server. Also note that we have only about half the number of clients in the IPv6 version of dnsmon. This is because not all the TTM test boxes used for dnsmon have IPv6 connectivity yet.

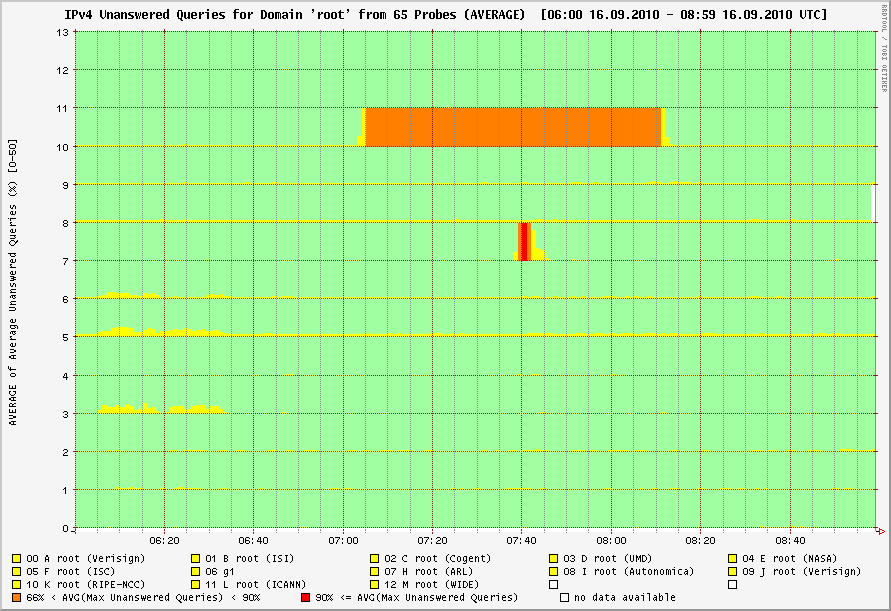

Now before anyone gets the impression that the DNS root as a whole had service problems because of this outage, here is the dnsmon graph that shows that the other servers for the root were available via IPv4 during the time of K-root's outage:

This graph is a summary for all servers serving the DNS root zone. Again, time flows along the x-axis. This time there are 13 plots stacked above each other. Each plot gives a summary of the results for one of the 13 root name servers. So, line 10 here is a summary of the previous graph; it shows orange and not red because more than 10% of the queries were still answered by K-root, represented by the green and yellow in the previous graph. Note that the short outage of H-root during this time was due to scheduled maintenance of the network connection for this unicast server.

What happened to routing?

For completeness, here are the elementary steps. First, which address is involved?

    reif!dfk 103> host k.root-servers.net
    k.root-servers.net      A       193.0.14.129

So, which are the prefixes normally announced for this address?

    reif!dfk 104> whois -h riswhois.ripe.net 193.0.14.129
    % This is RIPE NCC's Routing Information Service
    % whois gateway to collected BGP Routing Tables
    % IPv4 or IPv6 address to origin prefix match
    %
    % For more information visit http://www.ripe.net/ris/riswhois.html

    route:        193.0.14.0/23
    origin:       AS25152
    descr:        K-ROOT-SERVER AS of the k.root-servers.net DNS root server
    lastupd-frst: 2010-03-18 20:10Z  202.249.2.20@rrc06
    lastupd-last: 2010-09-17 07:26Z  198.32.176.164@rrc14
    seen-at:      rrc00,rrc01,rrc03,rrc04,rrc05,rrc06,rrc10,rrc11,rrc12,rrc13,rrc14,
                  rrc15,rrc16
    num-rispeers: 111
    source:       RISWHOIS

    route:        193.0.14.0/24
    origin:       AS25152
    descr:        K-ROOT-SERVER AS of the k.root-servers.net DNS root server
    lastupd-frst: 2010-07-23 17:10Z  192.65.185.157@rrc04
    lastupd-last: 2010-09-16 15:09Z  198.32.124.215@rrc16
    seen-at:      rrc04,rrc10,rrc14,rrc16
    num-rispeers: 6
    source:       RISWHOIS

riswhois is a whois service for BGP routing tables. It is based on routing tables collected by the RIS every eight hours, so it cannot show short-term or real-time information. I use it to see what the "normal" state of things for a given address or prefix should be. The lastupd-frst lines give a good indication of how long routing for this prefix has been stable, and the lastupd-last lines indicate whether there have been recent routing changes. In this case, both prefixes look solid and quite stable.
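If you want to compare the records programmatically rather than by eye, the riswhois reply can be turned into dictionaries with a small parser. The following is a minimal sketch, not an official client: the function name `parse_riswhois` is made up, the exact whitespace layout of the sample is an assumption, and treating `route6` as a record start for IPv6 is also an assumption. The sample is an abbreviated copy of the reply shown above.

```python
from typing import Dict, List, Optional


def parse_riswhois(text: str) -> List[Dict[str, str]]:
    """Parse riswhois-style 'key: value' text into one dict per route record."""
    records: List[Dict[str, str]] = []
    current: Optional[Dict[str, str]] = None
    last_key: Optional[str] = None
    for raw in text.splitlines():
        line = raw.rstrip()
        # Skip blank lines and '%' comment lines.
        if not line.strip() or line.lstrip().startswith("%"):
            continue
        # An indented line continues the previous value (e.g. a wrapped seen-at).
        if line[0].isspace():
            if current is not None and last_key is not None:
                current[last_key] += line.strip()
            continue
        key, sep, value = line.partition(":")
        if not sep:
            continue
        key, value = key.strip(), value.strip()
        # Assumption: every record starts with a 'route' (or 'route6') line.
        if key in ("route", "route6"):
            current = {}
            records.append(current)
        if current is None:
            continue
        current[key] = value
        last_key = key
    return records


# Abbreviated copy of the reply shown in this article.
SAMPLE_OUTPUT = """\
% This is RIPE NCC's Routing Information Service

route:        193.0.14.0/23
origin:       AS25152
seen-at:      rrc00,rrc01,rrc03,rrc04,rrc05,rrc06,rrc10,rrc11,rrc12,rrc13,rrc14,
              rrc15,rrc16
num-rispeers: 111
source:       RISWHOIS

route:        193.0.14.0/24
origin:       AS25152
seen-at:      rrc04,rrc10,rrc14,rrc16
num-rispeers: 6
source:       RISWHOIS
"""

for record in parse_riswhois(SAMPLE_OUTPUT):
    print(record["route"], "seen by", record["num-rispeers"], "RIS peers")
```

Values are kept as strings on purpose; converting `num-rispeers` to an integer or the timestamps to dates is left to the caller.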

Routing is somewhat special for K-root because it uses anycasting: the prefixes are announced from multiple locations in the Internet topology. In addition, we use two prefixes. The longer prefix, a /24, is much less widely seen than the /23: only six RIS peers see it, compared to the 111 that see the /23. This is intentional. The /24 should only be visible to clients using 'local instances' of K-root. These are instances that serve a limited local community rather than all clients on the Internet. Local instances do not have the capacity and connectivity to support a large number of clients. So the first suspicion about the cause of the problem is that an announcement for the /24 may have leaked more widely than intended, causing more clients than intended to use that instance and consequently overwhelming it.
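The reason a leaked /24 attracts traffic is that routers prefer the most specific (longest) matching prefix. A minimal sketch of that selection rule, using Python's standard `ipaddress` module, is shown here; the function name `best_route` is made up for the example, and real BGP route selection involves many more attributes than prefix length.

```python
import ipaddress
from typing import Iterable, Optional


def best_route(address: str, prefixes: Iterable[str]) -> Optional[str]:
    """Return the longest prefix that contains the address, or None."""
    addr = ipaddress.ip_address(address)
    matching = [
        net for net in (ipaddress.ip_network(p) for p in prefixes)
        if addr in net
    ]
    if not matching:
        return None
    # Longest-prefix match: the network with the largest prefix length wins.
    return str(max(matching, key=lambda net: net.prefixlen))


K_ROOT = "193.0.14.129"

# A network that only sees the /23 routes towards a global instance.
print(best_route(K_ROOT, ["193.0.14.0/23"]))

# A network that also sees the /24 prefers it, whoever announces it.
print(best_route(K_ROOT, ["193.0.14.0/23", "193.0.14.0/24"]))
```

As soon as a network learns the /24, it wins over the /23 for K-root's address, so a /24 that leaks beyond its intended community pulls in traffic that would otherwise go to a global instance.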

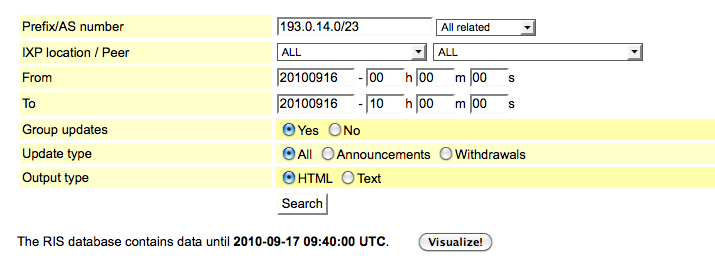

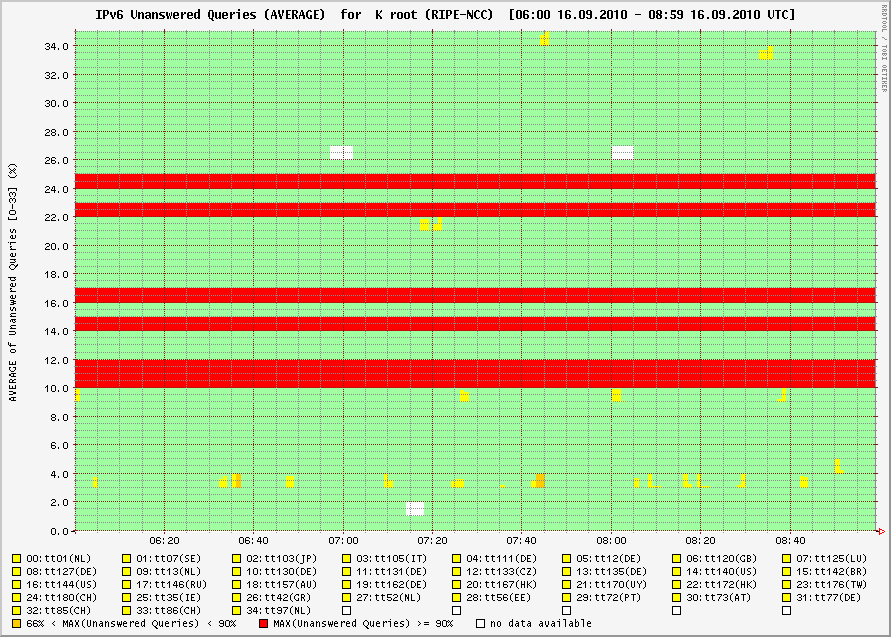

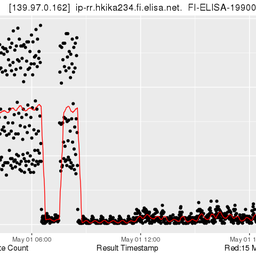

I investigated further using RIS; a link from dnsmon brought me here with all the fields already filled in:

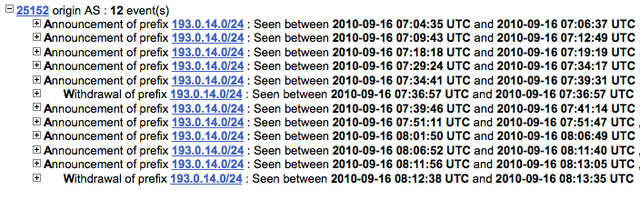

After a short while, I was able to see all BGP updates recorded by the RIS between midnight and 10:00 UTC from the day before the incident:

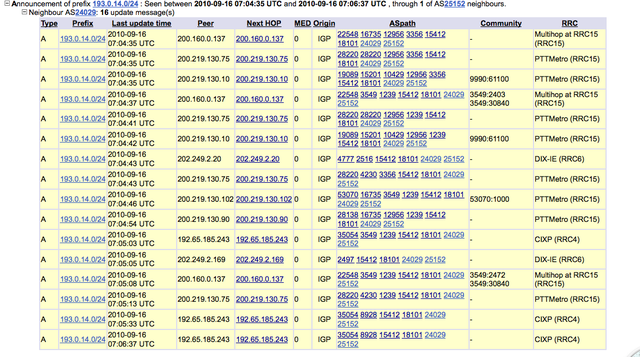

Indeed, there had been routing activity for the /24 prefix that exactly matched the times of the service problems in dnsmon. Unfolding the details for the first group of updates revealed that the routes were being announced via AS20249->AS18101->AS15412, so the leak was near the K-root local instance in India:
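Matching routing activity against the outage window can also be done mechanically once the updates are exported. The sketch below assumes a hypothetical list of update records with `time`, `prefix` and `type` fields; this is not a RIS data format, and the sample timestamps are invented for illustration. Only the window itself (07:00 to 08:12 UTC on 16 September 2010) comes from the outage announcement.

```python
from datetime import datetime, timezone
from typing import Dict, List

# Outage window as stated in the announcement email.
OUTAGE_START = datetime(2010, 9, 16, 7, 0, tzinfo=timezone.utc)
OUTAGE_END = datetime(2010, 9, 16, 8, 12, tzinfo=timezone.utc)


def updates_in_window(updates: List[Dict], prefix: str,
                      start: datetime, end: datetime) -> List[Dict]:
    """Return the updates for `prefix` whose timestamp lies within [start, end]."""
    return [
        u for u in updates
        if u["prefix"] == prefix and start <= u["time"] <= end
    ]


def utc(hour: int, minute: int) -> datetime:
    """Helper for building timestamps on the day of the incident."""
    return datetime(2010, 9, 16, hour, minute, tzinfo=timezone.utc)


# Invented sample records (A = announcement, W = withdrawal); not real RIS data.
SAMPLE_UPDATES = [
    {"time": utc(6, 30), "prefix": "193.0.14.0/23", "type": "A"},
    {"time": utc(7, 1), "prefix": "193.0.14.0/24", "type": "A"},
    {"time": utc(8, 10), "prefix": "193.0.14.0/24", "type": "W"},
    {"time": utc(9, 30), "prefix": "193.0.14.0/24", "type": "A"},
]

hits = updates_in_window(SAMPLE_UPDATES, "193.0.14.0/24", OUTAGE_START, OUTAGE_END)
print(len(hits), "updates for the /24 inside the outage window")
```

Using timezone-aware timestamps avoids the classic mistake of comparing local time in one data source with UTC in another.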

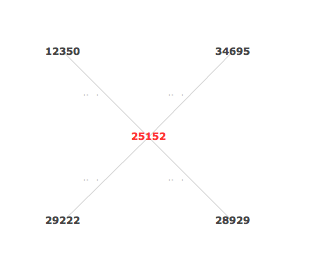

I even got a nicely animated graphical overview showing what happened by clicking the 'Visualise!' button and selecting the /24 prefix. Here are a few snapshots. This is the normal state as seen by RIS:

The final spread as seen by RIS:

See the animation for yourself.

The RIS tools also work for IPv6, and of course I checked whether there were any routing anomalies there; as expected, I found none. Check it out yourself if you want to know first-hand.

I have briefly shown you how I find out what really happens when I receive reports of network events. I hope this gives you an idea of how to use the public tools we provide. Comments are always welcome.

Comments 1


Anonymous •

So in summary: you did not notice any outage if you had deployed the current standard protocol. Only if you still stick to the legacy Internet protocol might you have had to wait a few seconds longer for your DNS resolution.