Post-quantum cryptography could make DNSSEC responses bigger, pushing more DNS traffic from UDP to TCP. In this guest post, Eline Stehouwer (SIDN Labs) explores what that means for authoritative name servers, replaying real .nl traffic across NSD, Knot, BIND and PowerDNS.

To prepare for a future where powerful quantum computers exist, many systems using classical cryptography will need to migrate to post-quantum cryptography (PQC). One such system is DNSSEC. Because of the larger signature and key sizes associated with PQC algorithms, DNSSEC-related queries may more often require TCP instead of UDP. We have investigated the impact of increased TCP traffic on an authoritative name server with the .nl zone file. Our results indicate that the impact is limited. We mainly found that an increase in TCP usage increases CPU consumption.

The cryptography currently in use is not secure against attacks carried out using quantum computers. Although practical quantum computers may still be years away, experience based on a previous algorithm rollover shows that it takes around 10 years for a newly introduced DNSSEC signing algorithm to be widely adopted. To speed that up, it helps to be prepared and to know what impact possible replacement algorithms may have.

In many of the proposed PQC signing algorithms, the signature size is relatively large compared with the ECDSA signature size of 64 bytes, which is the current standard in DNSSEC. For example, Falcon-512, a promising PQC alternative, has a signature size of 666 bytes. DNS messages are sent over UDP by default and are recommended not to be larger than 1232 bytes, based on an MTU of 1280 bytes. If multiple Falcon signatures need to be included in a single DNS response, the message exceeds that recommended size limit: two signatures alone already take up 1332 bytes.
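As a rough back-of-the-envelope check, the sketch below works out how many signatures fit in the recommended 1232-byte budget. It counts raw signature bytes only and ignores the DNS header, names and the fixed RRSIG fields, so the real headroom is smaller. The constant and function names are illustrative.

```python
# Sizes in bytes, taken from the text above.
RECOMMENDED_UDP_PAYLOAD = 1232  # 1280-byte MTU minus 40 (IPv6) and 8 (UDP) header bytes
SIGNATURE_SIZES = {"ECDSA": 64, "Falcon-512": 666}


def max_signatures(signature_size: int, budget: int = RECOMMENDED_UDP_PAYLOAD) -> int:
    """Upper bound on the signatures that fit, ignoring all other message overhead."""
    return budget // signature_size


for name, size in SIGNATURE_SIZES.items():
    print(f"{name}: at most {max_signatures(size)} signature(s) in "
          f"{RECOMMENDED_UDP_PAYLOAD} bytes")
```

Even under this generous assumption, a single Falcon-512 signature uses more than half of the budget, so a response carrying two of them cannot stay within the limit.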

In DNS, a client can advertise its UDP buffer size using EDNS0. Whenever a name server needs to send a response bigger than the client’s advertised EDNS0 limit, it sends a truncated response instead. This means that the message is truncated to fit the size limit and the TC bit is set. When the resolver receives a response with this bit set, it starts a TCP session with the authoritative name server to receive the full response.
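To make the mechanism concrete, here is a minimal sketch of the two wire-format details involved: the EDNS0 OPT record that carries the advertised buffer size, and the TC bit in the DNS header. It uses only the Python standard library and is not taken from our replay tool; the function names are illustrative.

```python
import struct

TC_BIT = 0x0200   # truncation flag in the 16-bit DNS header flags field
OPT_TYPE = 41     # EDNS0 OPT pseudo-record type


def build_opt_record(udp_payload_size: int, dnssec_ok: bool = True) -> bytes:
    """Encode an EDNS0 OPT record advertising the client's UDP buffer size."""
    flags = 0x8000 if dnssec_ok else 0  # DO bit: ask for DNSSEC records
    # root name, TYPE=OPT, CLASS=payload size, TTL=ext-rcode/version/flags, RDLENGTH=0
    return b"\x00" + struct.pack("!HHIH", OPT_TYPE, udp_payload_size, flags, 0)


def is_truncated(message: bytes) -> bool:
    """Return True if the TC bit is set in a DNS message header."""
    if len(message) < 12:
        raise ValueError("a DNS header is 12 bytes long")
    (flags,) = struct.unpack("!H", message[2:4])
    return bool(flags & TC_BIT)
```

A resolver that sees `is_truncated(response)` return `True` would discard the UDP answer and repeat the query over TCP.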

Currently, only a small fraction, about 1 to 2%, of the DNS traffic to the .nl name server goes over TCP. It is possible that when PQC signing algorithms are introduced in DNSSEC, that percentage will go up significantly. That would lead to a pattern where DNSSEC-enabled queries often have to be resent using TCP. The impact of increased TCP traffic on authoritative name server performance is currently unclear. During my internship, we therefore sought to clarify that impact.

Measurement setup

When considering the PQC DNSSEC scenario in terms of retrying large messages over TCP, we are interested in the impact of an increased number of TCP sessions. To look into that, we did several measurements.

Our measurements required a setup where we could replay queries to a name server. The replay tool had to be able to replay the queries at a given time, with a high level of precision. Since we were unable to locate any libraries or tools that could replay both UDP and TCP queries and send the same query first over UDP and then over TCP in a specified percentage of cases, we developed our own replay tool in Go using miekg’s DNS library. With this replay tool, we can send queries to a name server and receive the responses.

Next, we needed a way to simulate truncating a percentage of the messages. There are various ways to do that - e.g. using actual long messages, or using some rate-limiting features server-side. We chose to manage the simulation on the client side. In a given percentage of cases, the query-sending client ignores the server UDP response and tries the query again over TCP. That simulates the behaviour pattern one would see when the messages are actually truncated on the server side, and puts the same load on the name server. Note that, in the rest of this text, when we refer to a percentage of TCP, we mean the percentage of queries that are first sent over UDP and then retried over TCP.
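The client-side simulation can be sketched as follows. This is an illustrative Python outline rather than our Go implementation: the `send_udp` and `send_tcp` callables are hypothetical stand-ins for the real network code, and the random draw is one possible way to select the given percentage of queries.

```python
import random
from typing import Callable

Sender = Callable[[bytes], bytes]


def frame_for_tcp(message: bytes) -> bytes:
    """DNS over TCP prefixes each message with a two-byte length field."""
    return len(message).to_bytes(2, "big") + message


def replay_query(query: bytes, retry_fraction: float, rng: random.Random,
                 send_udp: Sender, send_tcp: Sender) -> str:
    """Send one query over UDP and, in retry_fraction of cases, again over TCP."""
    send_udp(query)                    # the server always does the UDP work
    if rng.random() < retry_fraction:  # pretend the UDP answer was truncated
        send_tcp(frame_for_tcp(query))
        return "udp+tcp"
    return "udp"
```

With `retry_fraction=0.3`, roughly 30% of the queries reach the server twice, once over each transport, which is the load pattern real truncation would produce.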

To measure the actual impact, we replayed a list of queries to a name server, with the relative timing of the queries preserved. At the same time, we measured the amounts of memory and CPU time the name server used. To minimise interference between the query sending process and the name server process, we ran each process on a different server.
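Preserving the relative timing can be sketched as below. This is a simplified, single-threaded Python illustration under the assumption that the capture is a sorted list of (timestamp, message) pairs; a tool replaying real traffic volumes would need concurrency, and the `clock` and `sleep` parameters are there only to make the logic testable.

```python
import time
from typing import Callable, Iterable, Tuple


def replay_with_timing(queries: Iterable[Tuple[float, bytes]],
                       send: Callable[[bytes], None],
                       clock: Callable[[], float] = time.monotonic,
                       sleep: Callable[[float], None] = time.sleep) -> None:
    """Send each query at its original offset (in seconds) from the first one.

    `queries` holds (capture_timestamp, message) pairs sorted by timestamp.
    """
    start = None
    first_ts = None
    for ts, message in queries:
        if start is None:
            start, first_ts = clock(), ts
        # Wait against the start time so small delays do not accumulate.
        delay = (ts - first_ts) - (clock() - start)
        if delay > 0:
            sleep(delay)
        send(message)
```

Measuring each delay against the start of the replay, rather than against the previous query, keeps peaks in the replayed traffic aligned with the peaks in the original capture.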

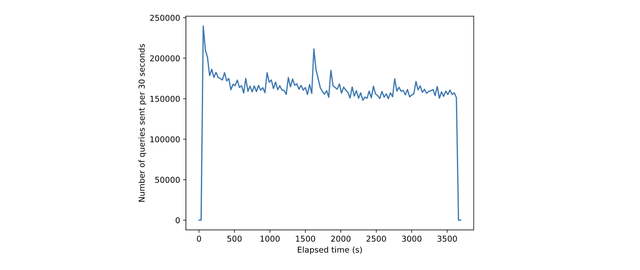

We replayed one hour of real-world queries that were originally sent to one of the .nl name servers. By using such query data, we aimed to make our tests representative of a .nl name server’s normal operations. More specifically, the data was taken from one of the .nl name servers on 1 October 2025 between 12:00 and 13:00 CEST. The query data the name server received in that hour is visualised in Figure 1.

The figure shows the number of queries sent every 30 seconds for the whole hour of the experiment. The queries are grouped into 30-second intervals, aligning with our metric measurements, which are also taken every 30 seconds. That arrangement makes it easier to identify any correlation between the peaks in this graph and the later graphs of these metrics. For this experiment, we used a copy of the zone file from the same day, so that our test name server was able to respond to a query whenever the actual .nl name server would also have responded. For privacy reasons, the query data and corresponding zone file were pseudonymised versions of the originals, with every domain name replaced by a randomised string of the same length. All IP addresses were also anonymised.

We performed the measurements using two different machines. One of them ran the code of our query sending tool, which had to send DNS requests to the other machine and receive the responses. The other machine ran an authoritative name server in a Podman (version 4.9.3) container, which was serving the pseudonymised zone file and was configured to respond to requests coming from the first machine. Both of the machines were running Ubuntu 24.04.3 LTS and were completely dedicated to these experiments, meaning that no other software was running on them and no other users were using them. The machines were also located within the same network, so we disregarded network latency.

Because the name server was running in a Podman container, we used the Podman API to collect statistics on the CPU time and memory usage of the name server.

Our experiment set consisted of 12 different experiments. In each of the first 11 experiments, a different percentage of the queries was retried over TCP. The percentages went from 0% to 100%, in incremental steps of 10 percentage points. In the last experiment, we sent all the queries immediately over TCP, so nothing was retried or first sent over UDP. All experiments in the experiment set were repeated 10 times, to get an accurate average. Since we also wanted to see if there was any difference in behaviour and impact between authoritative servers, we ran the experiment set with four different name server implementations: NSD (version 4.12.0), Knot (version 3.5.2), BIND9 (version 9.20) and PowerDNS (version 4.9.0 in BIND mode).
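For reference, the full experiment matrix described above can be enumerated with a few lines of Python. The labels are illustrative and not the names used in our scripts.

```python
from itertools import product

SERVERS = ["NSD", "Knot", "BIND", "PowerDNS"]
# 0%, 10%, ..., 100% retried over TCP, plus one run that uses TCP only.
SCENARIOS = [f"{pct}% retry" for pct in range(0, 101, 10)] + ["TCP only"]
REPETITIONS = 10

runs = list(product(SERVERS, SCENARIOS, range(1, REPETITIONS + 1)))
print(len(SCENARIOS), "scenarios per server,", len(runs), "runs in total")
```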

For NSD, Knot and BIND, the default settings did not have any excessive restrictions on TCP connections. We kept their settings as close to standard configuration as possible. For PowerDNS, the server setting max-tcp-connections has a default value of 20. That means we can have at most 20 simultaneous incoming TCP connections. That is quite a small number, so we expected that, if we were to run our test with the setting unchanged, the name server would not be able to handle our queries. However, that was not actually the case: we did not find a major difference between our test with max-tcp-connections set to 200 and set to the default value. We suspect that might be because the servers are close together, meaning that each individual TCP connection is short-lived. In a normal setup, it might be necessary to set a higher value if you expect many requests over TCP.

Results

After we ran the tests, we visualised the results in two graphs, each of which provides its own insight. Our discussion of the results uses the graphs for guidance.

Both the user space and kernel space CPU time of the name server processes were tracked during the tests. However, only the graphs of the user space CPU time are shown below, since the effect on kernel space CPU time is similar. As previously mentioned, we also monitored the memory usage of the name server process while running the experiments. We found no change in memory usage, which is good news given the recent price increases for computer memory. Hence, we do not discuss memory usage further in this blog post.

Before we start with the actual discussion of our results, we would like to add a qualification. Our measurements were gathered using configurations as close to the standard configurations as possible. The configurations of all four tools can probably be optimised to make the tools more efficient than they appear from our measurements. For completeness, we have put the code we used for the experiments, including the configuration files, online. The link to the code can be found at the end of the blog.

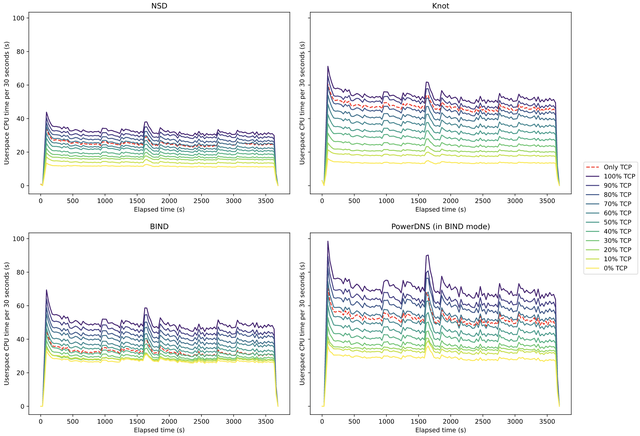

Figure 2 shows a graph for every name server implementation that we tested. The graphs show the CPU time used every 30 seconds over time, as measured during our experiments. They all have 12 lines, 11 of which show the CPU time used every 30 seconds when a specific percentage of queries is retried over TCP. The red dashed line shows the CPU time used when all queries are sent over TCP only. All lines represent the average of the 10 independent measurements we performed for that experiment. To verify that the average was representative, we plotted the individual measurements and checked how they varied. The variance we observed was low enough to justify using the mean, with at most one outlier per experiment.

In all four subgraphs of this figure, we see the same phenomenon: when a bigger percentage of queries is retried over TCP, overall CPU time usage is higher. The graphs also show spikes in CPU usage at specific moments. We can explain the spikes by comparing Figure 2 with Figure 1: at those same moments, Figure 1 shows a corresponding spike in the number of queries that were sent. When a name server has to handle a large query volume, it has to use more CPU time to answer the queries, which explains the spikes.

In Figure 2, we can see that a higher percentage of TCP retries has an impact on the CPU time usage. The size of this impact differs for each name server software, and we can compare the differences because the graphs have the same scale. The NSD name server has the smallest increase in CPU usage. When we compare Knot and BIND, we see that although BIND starts out with a higher CPU usage when no TCP retries are done, its increase from this starting point is relatively small. Knot, on the other hand, has quite a significant increase in CPU time between each line in the graph. PowerDNS has the worst of both worlds: like BIND, it has a high baseline CPU usage and, like Knot, the increase between each line is relatively large.

With all the name servers, each incremental increase in the percentage of retries over TCP leads to higher CPU time usage. For NSD and Knot, the increase between the lines also seems to be around the same value every time. That suggests that there might be a linear relationship between the percentage of TCP retries and the CPU time used.

The red dashed line in each graph, corresponding to our experiment in which we sent all queries only over TCP, shows us what the cut-off point is for each implementation. By cut-off point, we mean the percentage of TCP retries at which it becomes advantageous to send everything over TCP in the first instance, instead of doing UDP first and then using TCP if the response is too large. For NSD and PowerDNS, that point is at 70%: with that percentage of TCP retries, CPU time usage is higher than when everything is immediately sent over TCP. For Knot, the cut-off point is higher at 90% and for BIND it is lower at 50%.
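The way such a cut-off point can be read from the measurements is sketched below: it is the lowest retry percentage whose average CPU time exceeds that of the TCP-only run. The numbers in the example are hypothetical and chosen only to illustrate the calculation; they are not our measured values.

```python
from typing import Dict, Optional


def cutoff_percentage(retry_cpu: Dict[int, float], tcp_only_cpu: float) -> Optional[int]:
    """Lowest retry percentage whose CPU time exceeds the TCP-only run, if any."""
    for pct in sorted(retry_cpu):
        if retry_cpu[pct] > tcp_only_cpu:
            return pct
    return None


# Hypothetical average CPU seconds per 30-second interval, not measured values.
example = {pct: 1.0 + 0.02 * pct for pct in range(0, 101, 10)}
print(cutoff_percentage(example, tcp_only_cpu=2.3))
```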

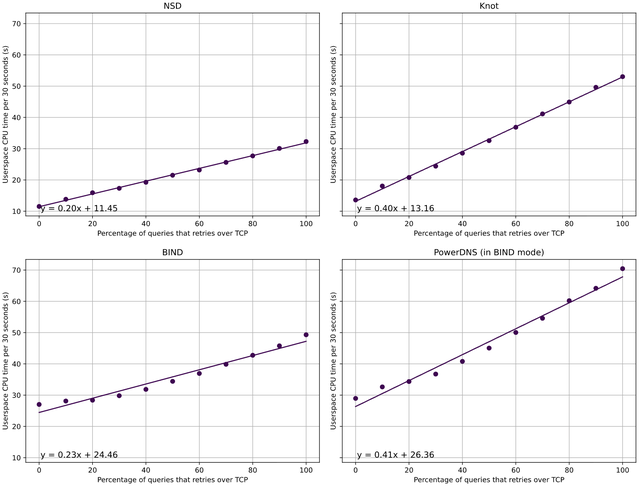

To investigate the possible linear relationship we saw in Figure 2, we made another graph, shown in Figure 3, which omits the red dashed lines. It plots the percentage of TCP retries against the average CPU time used over the whole measurement period for each TCP percentage. The average therefore summarises an entire line from Figure 2 into a single data point. In addition to plotting the single data points, we performed a standard linear regression analysis, yielding the lines in Figure 3.

The R-squared values corresponding to the regression are as follows: NSD has an R-squared of 0.9961, Knot is at 0.9958, BIND is at 0.9561, and PowerDNS shows 0.9783. R-squared is a statistic that shows how well a linear regression model fits the data, so it indicates the strength of the relationship between our model and the dependent variable. It ranges from 0 to 1, where a value of 1 means that the model perfectly describes every data point and one closer to 0 indicates a poor fit. In our case, NSD and Knot have very high values, around 0.996, which indicates a strong fit to the data. In contrast, BIND and PowerDNS have lower values, suggesting a weaker relationship compared with NSD and Knot, but they still demonstrate a good fit overall.

We plotted the resulting regression line alongside the averaged data points. From each model, we also got the linear regression equation which describes the relationship we found in our data, and we included it in the corresponding graph.
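For readers who want to reproduce this kind of analysis, the following sketch shows an ordinary least squares fit and the corresponding R-squared value in plain Python. It illustrates the standard formulas and is not necessarily the tooling we used. It assumes the x values are not all identical and the y values are not all identical.

```python
from typing import Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float, float]:
    """Ordinary least squares fit; returns (slope, intercept, r_squared)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # R-squared: 1 minus the ratio of residual variation to total variation.
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return slope, intercept, 1.0 - ss_res / ss_tot
```

Here `xs` would be the TCP retry percentages (0, 10, ..., 100) and `ys` the average CPU time per percentage. The slope then expresses how much extra CPU time each additional percentage point of TCP retries costs.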

In Figure 3, both NSD and Knot have a clear linear relationship between the number of queries that are retried over TCP and the CPU time used by the name server, similar to our findings in Figure 2. There are no big outliers and the R-squared values are high. We can also see that Knot’s line is steeper than the NSD line. This means that on a Knot name server, an increase in queries over TCP has an impact that is twice as big as the impact on an NSD name server.

For BIND, on the other hand, we cannot conclude that there is a linear relationship. The line generated with linear regression is not a great match for the actual data points in the graph, especially for the TCP percentages from 0 to 20%. So, although we do not necessarily see a linear relationship, we do see that the increase in CPU time used from 0 to 100% TCP is smaller for BIND than for Knot, so BIND seems to handle this increase better. When we compare BIND with NSD, we see that their CPU time usage growth is similar. However, NSD has a lower baseline, so of the two, NSD is the better option.

The growth of the line of PowerDNS is comparable to that of Knot, although just like BIND, the linear relationship here is less strong. Especially in the lower percentages, the increase in CPU usage is relatively small. As we mentioned before, we can see that the CPU usage of PowerDNS starts with the highest baseline. That, coupled with the steep gradient of increase, makes it the worst performing name server option in our measurements.

The results indicate that higher TCP retry percentages consistently lead to higher CPU usage, but for all tested name server implementations, the increase is gradual and predictable. That is especially true for NSD and Knot, where the relationship between the TCP retry percentage and CPU time is strongly linear. The linear behaviour is interesting from an operational perspective, since it shows that there should not be any drastic performance degradation when a name server needs to handle many TCP connections at the same time. And, while different name server implementations are shown to have varying efficiencies, none of them show behaviour that would cause issues with higher TCP rates.

Therefore, in a PQC DNSSEC scenario, setting up more TCP sessions should not pose a big problem. And, if we ever get to a percentage of TCP retries above 90%, our results suggest that it will be better to switch to using only TCP, regardless of which name server implementation is used.

Looking ahead, several areas should be explored further in future research. First, previous research and testing suggest that a higher rate of queries per second over TCP may run into kernel limits. Additionally, kernel memory usage and other kernel metrics could provide valuable insights. Future work could focus on the effect of increased TCP on such kernel metrics and settings.

Second, it would be useful to repeat our measurements in a setting where the server sending the queries is located further away from the authoritative name server. That would probably give higher and more realistic round-trip times (RTTs), which could change the impact of increasing TCP connections. One possible way to do that would be to use the Linux tool tc, which can configure Traffic Control in the kernel.

Finally, experimenting with zone files containing actual PQC signature records could show how often in the PQC scenario we actually have to send the messages over TCP, and what that would mean for the name servers. Using such an approach, it would be interesting to look into different possible PQC signing algorithms that are up for standardisation.

Conclusion

All the authoritative name server implementations that we tested can handle an increased number of TCP retries well, at the cost of higher CPU usage. Even in the worst-case scenario, where 100% of the queries were retried over TCP, the additional load was still manageable. If that worst-case scenario were to arise, we would switch to using only TCP anyway, since that is effectively more efficient.

What could be interesting for operators is the diversity amongst name server implementations in terms of how much the CPU usage increases with increasing TCP retry percentages. With the software versions and configurations that we tested, NSD is the most efficient overall, while BIND scales better than Knot and PowerDNS.

We would welcome your feedback and comments. Our experimental scripts and configuration are shared as open source here.
