The ATLAS, CMS, ALICE and LHCb particle detectors are designed to study particle collisions at the Large Hadron Collider (LHC) at CERN. These and other existing and emerging large-scale experiments produce increasingly high volumes of data. The data can come from a wide variety of instruments, including sensors, detectors, antennas or telescopes. Although the characteristics and goals of these experiments vary, they share a common challenge: real-time filtering, storage and analysis of the acquired data. One of the key components of this chain is the data acquisition (DAQ) network.

Introduction

Data acquisition networks collect the outputs from all the instruments to reconstruct a physical process. This many-to-one communication pattern is particularly demanding for networks based on TCP/IP and Ethernet. It overloads the switch buffers, which then drop packets and, as a result, TCP throughput collapses. This phenomenon, widely known as TCP incast, is also observed in datacenter networks. At the same time, there is a desire to use commodity hardware in these systems whenever possible in order to reduce costs and simplify configuration and maintenance.

New technologies and protocols are emerging from both academia and industry that can be applied to avoid the negative impacts of network congestion in data acquisition systems based on TCP/IP and Ethernet. Investigating those technologies is becoming particularly important because the requirements for each of the LHC detectors can approach 100 TB/s after the major LHC upgrades, which will make the network congestion problem even more critical (for more information on general IT challenges in large experiments, see the CERN openlab Whitepaper on Future IT Challenges in Scientific Research). In our research project we are exploring the potential of these proposals for possible adoption in the next generation of data acquisition systems at CERN. This project is part of the ICE-DIP program (the Intel-CERN European Doctorate Industrial Program), which is a European Industrial Doctorate scheme hosted by CERN and Intel Labs Europe.

Requirements

The fundamental requirements a DAQ network must address are focused on:

- Bandwidth

- Latency

- Reliability

- Congestion notification

As already indicated in the introduction, the requirements of the upgraded LHC detectors can reach 100 TB/s, making the bandwidth requirement alone a challenge. As for the data collection latency (the time it takes to collect the outputs from all the sensors of a detector), its mean should be kept in a reasonable range, but more importantly its variance must be low. Low variance allows a precise prediction of the network delay, so that the entire DAQ system can be designed accordingly. DAQ networks must also be reliable to minimise the risk of unrecoverable data loss. Such loss can be very costly, since the LHC was designed to study extremely rare phenomena. Finally, if the network cannot handle the amount of traffic at a given moment, a signaling mechanism (a busy signal) must be available. This is how the preceding subsystems of the DAQ chain are notified to temporarily limit the data rate.
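To put the 100 TB/s figure in perspective, the short calculation below estimates how many Ethernet links would be needed to carry it. This is only a back-of-the-envelope sketch: it assumes 1 TB = 10^12 bytes and 100 Gb/s links, and the 80% usable-utilisation value is an illustrative assumption, not a figure from the experiments.

```python
def links_needed(aggregate_bytes_per_s: int,
                 link_bits_per_s: int,
                 utilisation_percent: int = 100) -> int:
    """Minimum number of links needed to carry an aggregate data rate.

    utilisation_percent is the share of each link assumed to be usable
    for payload (an illustrative parameter, not a measured value).
    """
    aggregate_bits_per_s = aggregate_bytes_per_s * 8
    usable_bits_per_s = link_bits_per_s * utilisation_percent // 100
    # Ceiling division using integers only, to avoid rounding surprises.
    return -(-aggregate_bits_per_s // usable_bits_per_s)


TB = 10**12  # bytes, decimal terabyte (assumption)
GBIT = 10**9  # bits

print(links_needed(100 * TB, 100 * GBIT))      # 8000 links at full utilisation
print(links_needed(100 * TB, 100 * GBIT, 80))  # 10000 links at 80% utilisation
```

Even under these optimistic assumptions the aggregate rate corresponds to thousands of 100 Gb/s links, which is why bandwidth alone is already a demanding requirement.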

Challenges

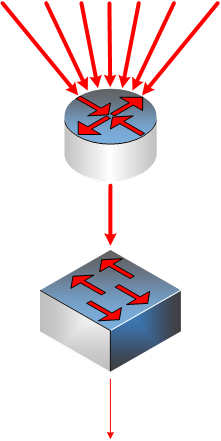

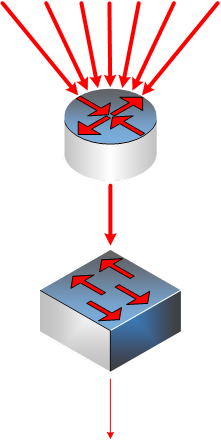

The challenge of data acquisition is the bursty many-to-one traffic pattern of DAQ data flows (as illustrated in the accompanying figure). Synchronous data transfer from multiple sources to a single compute node places a burden on the congestion control mechanism of the protocol and on the network hardware itself. The default congestion control mechanism of TCP/IP has been found to be incapable of dealing with multiple relatively small flows in parallel. If the network does not have enough bandwidth to handle the instantaneous rates, or enough buffering to absorb the bursts, costly packet losses and retransmissions will occur.
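The effect of a synchronised many-to-one burst on a switch buffer can be illustrated with a toy model. The sketch below is a deliberately simplified assumption, not a model of any real switch: time is divided into slots, every sender delivers one packet per slot to the same output port, the port forwards one packet per slot, and packets arriving at a full buffer are dropped (tail drop). Retransmissions are not modelled.

```python
def simulate_incast(num_senders: int, burst_packets: int, buffer_packets: int):
    """Toy slotted model of a many-to-one burst through one output port.

    Returns (delivered, dropped) packet counts. All parameters are
    illustrative; there is no TCP behaviour in this model.
    """
    queue = 0
    delivered = 0
    dropped = 0
    for _ in range(burst_packets):
        # Each sender injects one packet in this slot.
        for _ in range(num_senders):
            if queue < buffer_packets:
                queue += 1
            else:
                dropped += 1  # buffer full: tail drop
        # The output port forwards one packet per slot.
        if queue > 0:
            queue -= 1
            delivered += 1
    # After the burst ends, the remaining queued packets drain normally.
    delivered += queue
    return delivered, dropped


for senders in (1, 2, 8):
    delivered, dropped = simulate_incast(senders, burst_packets=10, buffer_packets=16)
    print(f"{senders} senders: delivered={delivered}, dropped={dropped}")
```

In this model a single sender never loses packets, while adding senders with the same burst size quickly exceeds what the buffer can absorb. With TCP, each of those drops would additionally trigger retransmissions and possibly timeouts, which is what makes the losses so costly.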

We have identified three general options (or their combination) for solving the challenge of data acquisition:

- Increasing the network bandwidth;

- Increasing the buffer sizes in the network;

- Controlling the data injection rate into the network to avoid congestion while keeping it fully utilised.

We are focusing our research on the last two approaches, since high-speed links are expensive and the network would not be fully utilised on average. Any excess of available bandwidth should be used for increasing the overall system throughput.
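One generic way to control the rate at which a source injects data into the network is a token bucket. The sketch below is a minimal illustration of that well-known technique and is not necessarily the mechanism studied in this project; the class name, the parameters and the explicit `now` argument (used instead of a real clock so the behaviour is deterministic) are all assumptions made for the example.

```python
class TokenBucket:
    """Minimal token bucket: allows bursts up to burst_bytes and a
    sustained rate of rate_bytes_per_s. Illustrative sketch only."""

    def __init__(self, rate_bytes_per_s: float, burst_bytes: float):
        self.rate = rate_bytes_per_s
        self.capacity = burst_bytes
        self.tokens = burst_bytes  # start with a full bucket
        self.last = 0.0            # time of the previous call, in seconds

    def try_send(self, size_bytes: int, now: float) -> bool:
        """Return True if a packet of size_bytes may be sent at time now."""
        elapsed = now - self.last
        self.last = now
        # Refill tokens for the elapsed time, capped at the bucket size.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if size_bytes <= self.tokens:
            self.tokens -= size_bytes
            return True
        return False  # caller should wait and retry later


bucket = TokenBucket(rate_bytes_per_s=1000, burst_bytes=1500)
print(bucket.try_send(1500, now=0.0))  # True: the bucket starts full
print(bucket.try_send(1500, now=0.5))  # False: only 500 tokens refilled
print(bucket.try_send(1500, now=1.5))  # True: bucket is full again
```

If every source shapes its traffic so that the sum of the sustained rates stays below the capacity of the receiving link, and the permitted bursts fit into the switch buffers, congestion is avoided while the link can still be kept busy.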

Potential Solutions

40/100 Gigabit Ethernet (GbE) is standardised in IEEE 802.3ba, with products already available on the market, and 400 Gigabit Ethernet is under development. 4x10GbE ports are now becoming standard in servers available on the market. These trends show that Ethernet will remain capable of sustaining the data rates required by the upgrades of the LHC experiments. However, with ever-increasing link speeds, the challenge of congestion control in high-speed Ethernet, especially in the case of data acquisition, will become even more critical. We are exploring a number of solutions ranging from the link layer through the transport layer up to the application layer, with the intention of making data acquisition over Ethernet networks robust and straightforward.

Starting at the link layer, there are enhancements to the Ethernet standard for congestion control at the link level: Ethernet flow control (IEEE 802.3x), priority-based flow control (PFC, IEEE 802.1Qbb) and congestion notification (IEEE 802.1Qau). At the transport layer, a number of TCP variants have been proposed to diminish or eliminate the issues arising from congested links. Dedicated application-layer solutions have also been proposed specifically for many-to-one communication patterns.
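As a concrete illustration of the simplest of these link-layer mechanisms, the sketch below builds an IEEE 802.3x PAUSE frame as raw bytes. A PAUSE frame is a MAC Control frame (EtherType 0x8808, opcode 0x0001) sent to the reserved multicast address 01-80-C2-00-00-01, carrying a 16-bit pause time expressed in quanta of 512 bit times. The function name and the source MAC address are hypothetical, the frame check sequence is omitted because the network interface normally appends it, and actually transmitting such a frame is outside the scope of the example.

```python
import struct

PAUSE_DEST_MAC = bytes.fromhex("0180c2000001")  # reserved MAC Control address
MAC_CONTROL_ETHERTYPE = 0x8808
PAUSE_OPCODE = 0x0001
MIN_FRAME_WITHOUT_FCS = 60  # 64-byte minimum frame minus 4-byte FCS


def build_pause_frame(src_mac: bytes, pause_quanta: int) -> bytes:
    """Build an 802.3x PAUSE frame (without FCS).

    pause_quanta is the requested pause time in units of 512 bit times;
    0 tells the link partner that it may resume sending.
    """
    if len(src_mac) != 6:
        raise ValueError("src_mac must be 6 bytes")
    if not 0 <= pause_quanta <= 0xFFFF:
        raise ValueError("pause_quanta must fit in 16 bits")
    header = PAUSE_DEST_MAC + src_mac + struct.pack("!H", MAC_CONTROL_ETHERTYPE)
    payload = struct.pack("!HH", PAUSE_OPCODE, pause_quanta)
    # Pad with zeros up to the minimum Ethernet frame size.
    return (header + payload).ljust(MIN_FRAME_WITHOUT_FCS, b"\x00")


# Hypothetical, locally administered source address used only for the example.
frame = build_pause_frame(bytes.fromhex("020000000001"), pause_quanta=0xFFFF)
print(len(frame), frame[:18].hex())
```

Because a PAUSE frame stops all traffic on a link regardless of which flow caused the congestion, priority-based flow control refines the idea by pausing individual traffic classes.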

The recent trends in Software Defined Networking (SDN) and Network Function Virtualization (NFV) are boosting the advance of software-based packet processing and forwarding on commodity servers. High-performance load balancers, firewalls, proxies, virtual switches and other network functions can now be implemented in software rather than being limited to specialised commercial hardware, thus reducing cost and increasing flexibility. Packet processing in software has therefore become a real alternative to specialised commercial networking products, and it is potentially very attractive for data acquisition networks.

Conclusion

In this article we gave a brief introduction to data acquisition networks for large experiments and presented an overview of the research areas that we are going to explore in this project. If you have your own observations or comments on many-to-one communication patterns, please share them below.

This research project has been supported by a Marie Curie Early European Industrial Doctorates Fellowship of the European Community's Seventh Framework Programme under contract number (PITN-GA-2012-316596-ICE-DIP).

Grzegorz is one of the successful candidates of the RIPE Academic Cooperation Initiative (RACI) and will be presenting his research at RIPE 69.
