Next in our series of articles on navigating network measurements, it's time to talk about how measurement.network helps to support artefact evaluation with infrastructure.

Issues of artefact evaluation

'Artefact Evaluation' describes the process of - essentially - checking if the tools used by researchers for an accepted paper can be used by others. Anyone who has ever written 'some small tool' to do something in their own infrastructure can likely imagine the kinds of challenges that come with that. Luckily, many academic conferences now run artefact evaluation committees. In those committees, PhD students (usually) try running what others built for their papers, working with the original authors to get things into a reusable or ideally reproducible state.

So what are the issues? Well, just as it is easy to imagine why somebody else might be unable to 'get that small automation tool I wrote running', it is rather easy to understand why a reviewer would not want to try getting somebody else's tool running on their own notebook. (Or any other machine that cannot be immediately wiped afterwards... Twice... Just to be sure.)

Beyond that, reviewers might not always have access to the resources needed to evaluate an artefact. GPUs, for example, are often not readily available. And having supported some artefact evaluations already, we can say that students are rather relieved when the answer to 'how on earth would I evaluate an artefact that needs half a TB of memory' is a simple 'please shut down and boot up your VM again; it should now have enough memory.'

Finally, sometimes artefacts are actually active network measurements. Running those from one's own institution is pretty much at the centre of 'how to make unexpected non-friends in the IT department'.

Solving issues

The infrastructure of measurement.network is a good option to address these issues. There is no 'institutional red tape' involved in providing access, and resources are plentiful (well, apart from GPUs, but we have some).

And we even managed to get blanket clearance from an ethical review board: As long as a paper went through proper ethics review, the artefacts can be ethically evaluated via the measurement.network infrastructure.

This is HotCRP

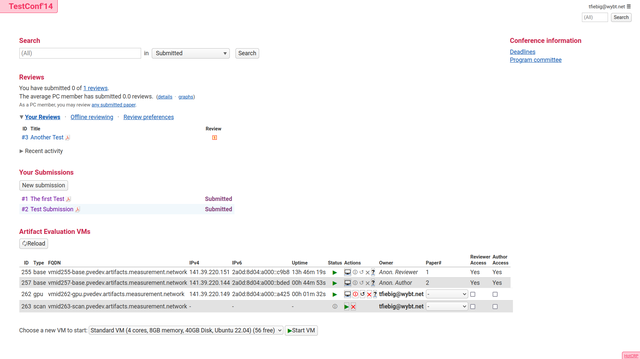

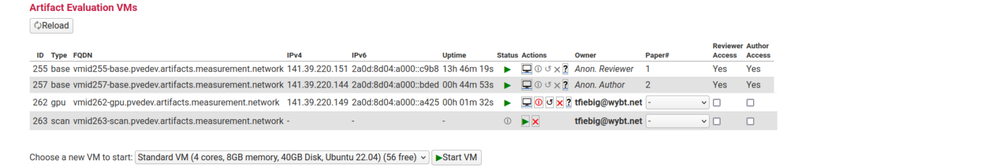

Now, how to give access to such infrastructure in the review process? In computer science conferences, reviewing is usually done using 'HotCRP' (a rewrite of CRP, the "Conference Review Program"). HotCRP is open source software, making it a prime target for some (rather prototype-y; don't hate me, I am not a coder, send patches... please. :-| ) additions to enable artefact evaluation. These patches essentially allow reviewers and authors to start virtual machines directly from HotCRP.

Available virtual machine types (all - for now - running Ubuntu 22.04) are a base VM (16 GB memory, 8 cores, optionally with Docker), a compute VM (64 GB memory, 16 cores), and a scan VM. The scan VMs have the same resources as base VMs, but sit in a dedicated network segment that makes the scanning purpose clear, provides a reachable abuse contact, and runs a web server with further information.
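For a rough feel of how these VM types add up, here is a minimal Python sketch of the catalogue described above. The memory and core figures come from the text; the names `VMType`, `VM_TYPES`, and `total_memory_gb` are made up for illustration and are not part of the actual HotCRP patches.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VMType:
    """One VM flavour; field names are illustrative, not the project's schema."""
    name: str
    memory_gb: int
    cores: int
    os: str = "Ubuntu 22.04"
    dedicated_scan_segment: bool = False


# Figures as stated in the article; the dict layout is an assumption.
VM_TYPES = {
    "base": VMType("base", memory_gb=16, cores=8),
    "compute": VMType("compute", memory_gb=64, cores=16),
    "scan": VMType("scan", memory_gb=16, cores=8, dedicated_scan_segment=True),
}


def total_memory_gb(requests: dict[str, int]) -> int:
    """Sum the memory needed for a mix of VMs, e.g. {"base": 2, "compute": 1}."""
    return sum(VM_TYPES[name].memory_gb * count for name, count in requests.items())
```

With this, a hypothetical evaluation round that asks for two base VMs and one compute VM would need `total_memory_gb({"base": 2, "compute": 1})` GB of memory in total.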

Talking of potential abuse: the project has a rather simple abuse policy when it comes to users trying to 'explore' the system: "You break it, you fix it." Meaning: A friendly unrequested security evaluation by a user is no big deal. If you break things, don’t snoop in data and send patches. Repeated offenders will be recruited into the admin team.

Running artefact evaluation

With this in place, it was time to run some artefact evaluation. :-) The first conference getting artefact evaluation infrastructure was the Symposium on Privacy Enhancing Technologies (PETS).

PETS is one of the top privacy venues, and is operated as a non-profit. An especially nice aspect of PETS is their rather limited patience with publishers, meaning that PETS has been self-publishing and open access since 2023. Artefact evaluation has been running for PETS since the 2024.2 deadline, so - with artefact evaluation for 2025.2 starting soon - for nearly a year.

Working with a smaller venue helped in ironing out many of the teething problems of the infrastructure, as well as figuring out the right size of VMs to provide. We also just concluded supporting ACSAC, a more security-focused venue. Resource use there was significantly higher than for PETS, ultimately eating nearly 3 TB of memory across 77 VMs.

For ACSAC, the overall hosting was also more comprehensive, with all review systems (including for the papers) being hosted. Thanks to the automation already in place, this was actually a breeze to make happen. In a small survey among users, the reception was overall positive, with the obvious spots of 'well, more resources and more very specific things' left on the table.

The tragedy of the commons

One thing to note, though, in providing such a service, is that there is a certain 'tragedy of the commons' involved. One really needs to keep an eye on resource utilisation. The concern isn't so much people using systems for something else, but rather people not using them at all.

What we experienced a lot is that users would not power down or release systems after they were done using them. Still, this is not too much of an issue due to the hard time-box of the review timeline of artefact evaluation: As soon as the deadline has passed, all systems can go.
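The time-box logic above can be sketched in a few lines of Python. This is a hypothetical illustration, not the tooling measurement.network actually runs: the `VM` class, the `last_activity` field, the `plan_cleanup` function, and the three-day idle threshold are all assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class VM:
    name: str
    last_activity: datetime  # hypothetical: last time the VM was seen in use


def plan_cleanup(vms, deadline, now, idle_after=timedelta(days=3)):
    """Return {vm_name: action}, with action one of "release", "remind", "keep".

    After the review deadline every VM can go; before it, VMs that have been
    idle for at least `idle_after` only get a reminder.
    """
    plan = {}
    for vm in vms:
        if now >= deadline:
            plan[vm.name] = "release"
        elif now - vm.last_activity >= idle_after:
            plan[vm.name] = "remind"
        else:
            plan[vm.name] = "keep"
    return plan
```

Before the deadline, this only nudges owners of idle machines. Once the deadline has passed, everything is marked for release regardless of activity.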

Concluding on a (not so) costly endeavour

So overall, artefact evaluation is a huge success. It may be a bit tangential to the initial goal of testing research instruments before the research, but helping to do so afterwards still helps make science more transparent and reproducible.

One thing left to discuss might be cost, though...

The most common question from conference organisers was 'well, but what would that cost?!' Naturally, with measurement.network being a project for the community, the answer is: Nothing*

*As long as the conference is non-profit and papers are open access.

The question, though, is not surprising. Out of curiosity, we quickly ran the numbers on what something similar to ACSAC (3 TB of memory, ~80 VMs for several weeks with rather high load) would cost when run on a major cloud provider. The number was surprisingly high, and surprisingly five-figure-y. But luckily, it doesn't have to be.

So, if you want support in artefact evaluation for your conference as well, please reach out via email: contact@measurement.network
