Measuring the Internet is a delicate process and without the proper setup, things can get pretty out of hand pretty quickly. The measurement.network is a RIPE NCC Community Projects Fund supported project that helps researchers run network measurements without breaking things.

Performing "network measurements" is something every network engineer does on a day-to-day basis. Fire up ping, check the RTT, look at a bandwidth graph... all of these are network measurements (on the smaller end of the scale). Going a bit bigger, network measurements are also what underlies all forms of real-time and historic monitoring. And if we go even bigger, we arrive at Internet-scale measurements done, for example, by scientists in the RIPE MAT-WG or in the IRTF.

What all these measurements have in common is that they are usually done to understand and ideally improve how the Internet works - in various ways, from congestion control to security.

The good, the bad, the...

Good measurements can provide valuable perspectives on how protocols should be designed and adjusted, can help figure out where "operators have potential for improvement", and in general, can make the world a better (pinging) place.

That's good measurements. Bad measurements, however, may be annoying or outright harmful. These might be measurements that run at packet rates that make systems topple, that contain unexpected data that crashes implementations, or that are simply designed in a way that produces biased results, harming any processes that rely on them.

For a measurement to be bad doesn't mean it was intentionally badly designed. Instead, far too often, measuring something means doing things on the Internet that not a lot of people are doing. This is rather natural, because otherwise measuring it would most likely be rather boring.

Still, this tends to collide with the Internet's nature as a stitched together collection of corner cases held together by bubble gum (in places where they ran out of duct tape) and the hope that everyone would play nice (yes, even in 2024), or more precisely, would play somewhat like everyone else.

What makes this even worse is that the (often rather long) tail of corner cases has been growing for decades. The canonical example here is likely DNS, but mail (with its myriad of additions and improvements) is also a strong contender.

What good measurements need

Doing good measurements is surprisingly easy. All you have to do to create reliable results in a way that doesn't risk causing (too much) harm is:

- Thoroughly understand the protocol stack you are measuring, including operational lore and lived experience since the inception of these protocols.

- Be versed in the domain of available implementations to identify components you can (re)use to construct the measurement setup.

- Be an experienced programmer and versed in software development in general to follow development best practices and produce tested and reliable code.

- Be an experienced system administrator to set up the measurement system and all basic services it depends on, including historic and real-time monitoring of all components.

Arguably, though, this is not a person, but a rather concise team description.

Academic measurements

Now, let's take a look at academic network measurements. Academia is often seen, by people in the industry, as a group of people somewhat removed from practice who yet have the privilege of being able to drill into a topic for years, far deeper than would be possible in industry. The ivory router, if you like.

However, arguably, reality tends to be a bit less tower-ish. In practice, academia has long since been captured by "metricification" - like so many other things - and time is in short supply between requirements and expectations on the one hand, and (in my opinion somewhat unnecessary) competition on the other.

These days, a PhD is supposed to take somewhere between 4 and 6 years, culminating in 4-5 'top tier' papers on (ideally) a somewhat consistent topic. If you do the math (and ignore some other effects like pipelining), this means that the first paper should happen around a year-ish into the PhD.

Looking back at the requirements and (corner-case-laden) realities above, it becomes 'a bit much' to ask from a single person. Consider a recent master's graduate, for example (I leave it to the reader to assess how much practical experience in running systems one gets from the average MSc). Is it reasonable to expect someone in such a position to understand nearly four decades of DNS developments, to be a good enough operator to reliably run a network measurement infrastructure, and to be a good enough programmer to implement a measurement tool that works and does not break stuff?

To put this into context, when asked whether a PhD student in that situation could write DNS measurement software that could be safely run against the Internet without breaking stuff, a 'person I know' who has been working on DNS for the better part of two decades commented: "... well, I wouldn't trust myself writing DNS software that can be safely run against everything in the Internet..."

Pressure forges diamonds... or... not

Well, with all of that in place we have a person under a lot of pressure, with a lot of expectations, and even more requirements. This, naturally, leads to one very specific thing - people doing people things.

So, in general, pressure does not forge diamonds. Instead, it creates scarred people taking shortcuts where they can to somehow cope with the situation. Copy-pasting from Stack Overflow, blindly following that howto, clicking until it works and not asking too many questions... we've all been there at one time or another.

Side note (somewhat related): this is also the reason why investing into an additional 'normal' operator instead of spending the same amount of money on a 'security appliance + subscription' whose logs are never read can actually have a profound positive impact on an organisation's security posture... so, if your shopping cart currently holds a SecurityBox2000, consider getting more operating staff instead...

There are a lot more dimensions to this - also on how academic realities make it difficult for supervisors to spot such issues - but these would make the article even longer than it has to be. If you are interested, take a look at this paper about measurement.network.

The key items to take away are:

- Nobody does bad things out of malice - it's the system around us

- Don't hate the player, hate the game

- We're all just cooking with water

Practical challenges

In addition to that, doing Internet measurements holds a couple of technical requirements and implications concerning the network they are being run from.

A core technical requirement is that the network should be able to handle a certain number of packets flowing through it. The classic of academic network measurements is a PhD student bringing down the whole campus by overflowing a state table in a firewall.
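One common way to reduce the risk of overwhelming a stateful device is to cap the probe rate at the sender. The following is a minimal sketch of a token bucket in Python, not something taken from measurement.network; the class name `TokenBucket`, its parameters, and the injectable `clock` are assumptions made for illustration.

```python
import time


class TokenBucket:
    """Allow at most `rate` probes per second, with bursts of up to `burst`.

    Hypothetical helper for illustration; names and defaults are assumptions.
    """

    def __init__(self, rate, burst, clock=time.monotonic):
        if rate <= 0 or burst < 1:
            raise ValueError("rate must be > 0 and burst must be >= 1")
        self.rate = float(rate)
        self.burst = float(burst)
        self.clock = clock            # injectable so the limiter can be tested
        self.tokens = float(burst)    # start with a full bucket
        self.last = clock()

    def _refill(self):
        # Add tokens for the time elapsed since the last call, capped at `burst`.
        now = self.clock()
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def try_acquire(self):
        """Consume one token and return True if a probe may be sent now."""
        self._refill()
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False

    def wait_time(self):
        """Return the seconds until the next token is available (0.0 if ready)."""
        self._refill()
        if self.tokens >= 1.0:
            return 0.0
        return (1.0 - self.tokens) / self.rate
```

A send loop would call `try_acquire()` before each probe and sleep for `wait_time()` whenever it returns `False`. Note that a firewall state table fills up with flows rather than raw packets, so a sender-side rate cap is only one precaution; how many distinct destinations are contacted within the state timeout matters as well.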

Besides that, the network should support the needs of measurements. This becomes especially challenging with universities sometimes lacking the resources (classic: no IPv6 on campus... or maybe quickly getting an ASN) or having outsourced things.

Running scanning infrastructure on some funny public cloud basically drags their whole stack into the measurement things "that have to work". It also makes things really hard for people trying to block you, because what runs your measurements today may be part of their core infrastructure also running on that public cloud a few weeks down the line.

Additionally, there are a lot of ethical requirements that ought to be fulfilled. Things like informative reverse DNS, an abuse@ that is actually being read (and replied to), and maybe even RFC 9511.
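To make the RFC 9511 part a bit more concrete: as I read it, the RFC describes, among other things, an out-of-band probe description file served from the probing host at `/.well-known/probing.txt`, using the field format of security.txt (RFC 9116). The sketch below renders such a file in Python. The function name `render_probing_txt`, the example hostnames, and the exact choice of fields are assumptions for illustration; check both RFCs before deploying anything like this.

```python
from datetime import datetime, timedelta, timezone


def render_probing_txt(canonical, contacts, description, valid_days=90, now=None):
    """Render a probe description file in a security.txt-like field format.

    Hypothetical helper; the field names follow my reading of RFC 9511 and
    RFC 9116 and should be verified against the RFCs.
    """
    if not contacts:
        raise ValueError("at least one contact URI is required")
    now = now or datetime.now(timezone.utc)
    # Expiry timestamp in UTC, so stale descriptions can be detected.
    expires = (now + timedelta(days=valid_days)).strftime("%Y-%m-%dT%H:%M:%SZ")
    lines = [f"Canonical: {canonical}"]
    lines += [f"Contact: {uri}" for uri in contacts]
    lines.append(f"Expires: {expires}")
    lines.append("Preferred-Languages: en")
    lines.append(f"Description: {description}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Example values only; replace with the real scanner host and contact.
    print(render_probing_txt(
        "https://scanner.example.net/.well-known/probing.txt",
        ["mailto:abuse@example.net"],
        "Research DNS survey, low rate; contact us to opt out.",
    ))
```

The point of such a file is the same as that of informative reverse DNS: someone who sees unexpected packets should be able to find out who is sending them, why, and how to make them stop, without having to guess.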

And, talking of ethics, also ethics review. There are established standards in the academic community on what constitutes ethical measurements. People (commonly referred to as an ethics committee) should look at experimental plans to check whether these rules are being followed and whether, for example, the harm-benefit analysis is logically sound.

Now, the obvious issue here is that what counts as 'unethical' can really vary depending on who looks at something. Tell a DNS person you're sending them a handful of funny packets doing (very much valid and standard-compliant) DNS queries, and they will likely shrug at the additional load.

Tell the same thing to mail operators, and they may also shrug at the load... while already getting the torches and pitchforks ready as they wait for the answer to "And where did you get the destination mail address for those emails... ?"

And now imagine the ethical assessment being done by academics. Say, for example, psychologists, doctors, and philosophers, as you often find on universities' ethics boards. And if all of that came together kind of well, you may have people finding that the Internet is, indeed, held together by duct-tape and hope...

For people not familiar with those realities, that can be a rather huge thing. Imagine learning that peering agreements and upstream relationships are often not negotiated between the legal departments of two corporations (exceptions confirm the rule), but instead have more to do with, e.g., BoF sessions at RIPE meetings. You may be very excited about this huge finding - but you might also find many operators will visibly shrug at you, going like: "Yeah... er... soooo... ?"

A measurement.network

So, what is measurement.network?

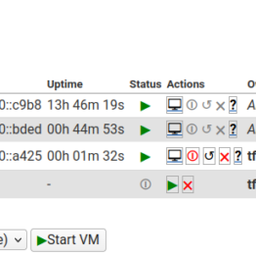

It is basically an idea to make things a bit better for everyone by creating a semi-open measurement infrastructure and support structure for academics to use. This project should provide measurement infrastructure to conduct active measurements, especially for measurements not feasible with platforms like RIPE Atlas.

It does not only make (especially active measurement) infrastructure more (globally) accessible, but can also help researchers in 'getting some things right', e.g., proper historic and real-time monitoring for ongoing measurements.

Measurements done via the project should receive ethical and feasibility review, via a mixed panel of operators and academics (not to accept or reject, but to ultimately improve). Additionally, the project should help researchers in publishing their results and collected data.

Furthermore, measurement artefacts - when run on the project - can also be shared more easily in a 'working environment'.

Sidenote: to be honest, just forcing people to roll out their measurement toolchain on a freshly installed machine can often spot rather 'interesting' things that tend to accumulate in the entropy heap of an ongoing measurement study.

This also ties in with the option of the project helping peer review of academic measurement work by providing infrastructure for artefact evaluation. For example, for conference reviews, provide authors and reviewers with systems they can run and test measurement artefacts on, having taken care of all the requirements of running network measurement infrastructure (blocklists, probe attribution, abuse handling...).

What happened so far

measurement.network received support from the RIPE Community Projects Fund 2023.

This has led to a spike in activity and an interesting infrastructure getting built. Going into all this right now would make this article even more unreadably long, but future articles are already lined up to look into Infrastructure build up, Artifact Evaluation, V4LESS-AS and other topics.

I want to run my measurements on measurement.network!

Sadly, the point where measurement.network has been least successful so far is setting up an organised submission and review process. That, however, does not keep it from helping where it can. So, if you need infrastructure for a research idea, and measurement.network might be able to help, reach out, and we will see what we can do: contact@measurement.network
