If you want to run network measurements, you need to run them off of something. For a whole measurement.network, that means building a whole network. In this article, we will go into the infrastructure behind measurement.network, how it was built, and what it can do.

Numbers and names

All networks start with their numbers. Well, numbers and names. For measurement.network, the name was a relatively obvious pick. Getting numbers, though, turned out to be more difficult.

Luckily, the Max Planck Institute for Informatics had a spare /22 of IPv4 LEGACY space well suited to the purpose of measurement.network. However, to get that routed, and to support more than the IP of the past, we also needed an AS number and an IPv6 prefix.

Initially, the plan was to get an ASN and a /46 IPv6 PI to keep the resources transferable. However, that turned out to be 'a bit' more difficult than anticipated. In the end, the solution was to have a PA block transferred, which gives a bit more leeway in a roomy /32.

In summary, measurement.network now has the following permanent resources:

- ASN: AS211286

- IPv4: 141.39.220.0/22

- IPv6: 2a0d:8d04::/32

That should give enough room to get things going.
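To put "enough room" into numbers, here is a minimal sketch using Python's standard `ipaddress` module. The prefixes are the ones listed above; the choice of /24 and /48 as the units to count is an assumption (they are commonly the smallest prefixes accepted in global routing), and the /46 is the originally planned PI size for comparison.

```python
import ipaddress

# Prefixes as listed in the article.
v4 = ipaddress.ip_network("141.39.220.0/22")
v6 = ipaddress.ip_network("2a0d:8d04::/32")


def count_subnets(net, new_prefix):
    """Number of subnets of length new_prefix that fit into net."""
    return 2 ** (new_prefix - net.prefixlen)


ipv4_addresses = v4.num_addresses       # individual IPv4 addresses in the /22
ipv4_slash24s = count_subnets(v4, 24)   # assumption: /24 as smallest routable unit
ipv6_slash48s = count_subnets(v6, 48)   # assumption: /48 as smallest routable unit
ipv6_slash46s = count_subnets(v6, 46)   # how many of the planned /46s fit in the /32

print(f"IPv4: {ipv4_addresses} addresses, {ipv4_slash24s} x /24")
print(f"IPv6: {ipv6_slash48s} x /48, or {ipv6_slash46s} x /46")
```

In other words, the /32 holds several thousand times the space of the /46 that was originally planned.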

Routers

Now, the best number resources won't ping anywhere if there is nothing to route (and switch) them. With the limited resources available (even with the generous funding from the RIPE Community Projects Fund), it is somewhat difficult to find routers that can reliably route a few Gbit/s at line rate while also holding a full table.

Initially, the plan was to use MikroTik routers. However, over the course of the project another viable option emerged: the Juniper MX150. The MX150 is, essentially, a DPDK-based soft-router (or: vMX). However, it sports plenty of memory and can easily handle a full table. Forwarding-wise, it can do roughly 5 Gbit/s with 64-byte packets, which should be enough for starting out.
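For a feeling of what that forwarding figure means in packets per second, here is a back-of-the-envelope sketch. It assumes the figure refers to 64-byte Ethernet frames; whether the quoted rate includes the per-frame wire overhead (preamble and inter-frame gap, 20 bytes in total) is not stated, so both variants are computed.

```python
# Per-frame overhead on the wire: 8 bytes preamble/SFD + 12 bytes inter-frame gap.
ETHERNET_OVERHEAD_BYTES = 20


def packets_per_second(rate_bps, frame_bytes, count_wire_overhead=True):
    """Packets per second at a given bit rate and frame size."""
    overhead = ETHERNET_OVERHEAD_BYTES if count_wire_overhead else 0
    return rate_bps / ((frame_bytes + overhead) * 8)


RATE_BPS = 5e9     # "roughly 5 Gbit/s" from the text
FRAME_BYTES = 64   # assumption: minimum-size Ethernet frames

pps_wire = packets_per_second(RATE_BPS, FRAME_BYTES)          # rate counted on the wire
pps_frame = packets_per_second(RATE_BPS, FRAME_BYTES, False)  # rate counted in frame bits only

print(f"{pps_wire / 1e6:.2f} Mpps (with wire overhead)")
print(f"{pps_frame / 1e6:.2f} Mpps (frame bits only)")
```

Either way, this is in the range of several million packets per second.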

Finding colocation

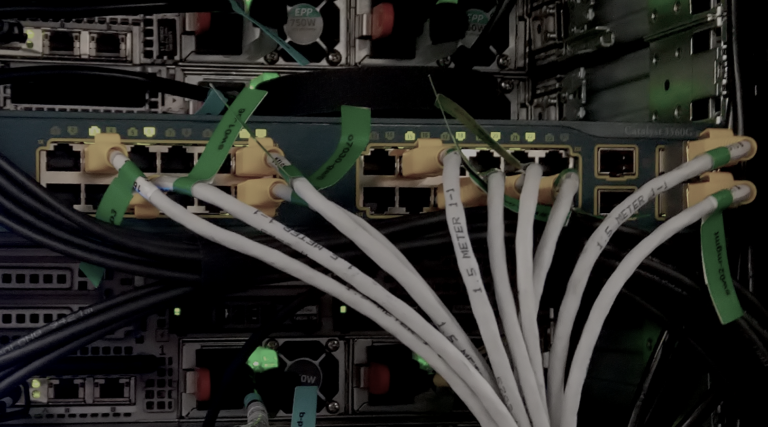

With the routers in the bag, the next step was finding a place to put them. At the moment, measurement.network is physically present in Dusseldorf/WIIT and in Berlin in the racks of IN-BERLIN.

The infrastructure of AS59645 already present there also means that plenty of resources are in place to host core and latency-sensitive services. Furthermore, for more compute-heavy systems (especially artefact evaluation), some infrastructure could, for now, be placed in Saarbruecken with the MPI for Informatics.

Finding upstream

The last step to make things ping was finding upstreams. Luckily, in Berlin, the Community-IX provides a 'peering' platform via which non-profit community projects can get upstream via several major providers.

Additionally, a BCIX and an NL-IX port were made available via IN-BERLIN. In Dusseldorf, AS50629 (LWLcom) and AS58299 were willing to provide upstream (thank you!). LWLcom also transports a VLAN between Dusseldorf and Berlin.

Servers and compute

As mentioned above, the existing infrastructure of AS59645 was very useful in getting core services (web, mail, etc.) running via systems already in place in Dusseldorf and Berlin. What likely ate the largest chunk of resources there was artefact evaluation hosting and the first two network measurements being run (check out the corresponding blog article as soon as it goes live!).

In Saarbruecken, additional infrastructure for compute-heavy services was put into place. The MPI there loaned a stack of Dell R730 servers, including one with two Nvidia A30 GPUs for artefact evaluation (again, another article you should definitely check out ;-)).

Furthermore, a 4-node system found its way into the rack. Each node boasts an impressive 1 TB of memory, 1 to 1.6 TB of NVMe flash, and two Intel Xeon Gold 6226 CPUs. With that, measurement.network can run a lot of 'things'.

Routers: instrumentation and automation

Now, measurement.network has quite a few routers (in addition to the two cores in Dusseldorf and Berlin). Saarbruecken has two machines running VyOS as soft-routers, and there is also an MX-80 there that handles the tunnelling, etc.

As running one (or two or three...) ASes 'on the side' can be a bit of a time-eater, we had to turn to automation. Automation is done via a configuration generator that consumes meta-configs. It then creates either VyOS or JunOS configuration files that can be deployed on the routers.
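The idea of one meta-config feeding two config dialects can be sketched as follows. This is not the actual generator: the meta-config layout, the function names, the peer AS (64500) and the neighbour address (192.0.2.1) are all hypothetical, and the `set` commands are only a small illustrative subset whose exact syntax varies between JunOS and VyOS releases.

```python
# Hypothetical meta-config: only the local AS number is taken from the article.
META = {
    "local_as": 211286,
    "peers": [
        {"name": "upstream-example", "asn": 64500, "address": "192.0.2.1"},
    ],
}


def render_junos(meta):
    """Render a JunOS-style list of 'set' commands for eBGP peers."""
    lines = [f"set routing-options autonomous-system {meta['local_as']}"]
    for peer in meta["peers"]:
        group = peer["name"]
        lines.append(f"set protocols bgp group {group} type external")
        lines.append(
            f"set protocols bgp group {group} "
            f"neighbor {peer['address']} peer-as {peer['asn']}"
        )
    return "\n".join(lines)


def render_vyos(meta):
    """Render VyOS-style 'set' commands (syntax as in recent VyOS releases)."""
    lines = [f"set protocols bgp system-as {meta['local_as']}"]
    for peer in meta["peers"]:
        base = f"set protocols bgp neighbor {peer['address']}"
        lines.append(f"{base} remote-as {peer['asn']}")
        lines.append(f"{base} description {peer['name']}")
    return "\n".join(lines)


# One meta-config, several output dialects.
RENDERERS = {"junos": render_junos, "vyos": render_vyos}


def generate(meta, platform):
    """Pick the renderer for the target platform and produce its config."""
    return RENDERERS[platform](meta)


junos_config = generate(META, "junos")
vyos_config = generate(META, "vyos")
print(junos_config)
print(vyos_config)
```

Keeping the platform-specific syntax in small renderer functions means that adding a peer is a one-line change in the meta-config, regardless of which router ends up carrying the session.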

To hear more about the fully automated version of this, check out the V4LESS-AS article as soon as it comes online. :-)

Servers: instrumentation and automation

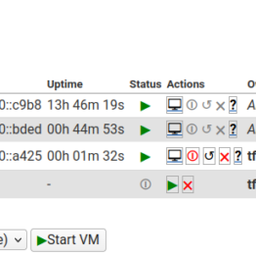

Similar to running routers, running servers can be a major time sink. The monitoring (see below) shows 49 Linux hosts, 13 Proxmox hosts, and 9 OpenBSD machines (mostly for OOB connectivity/firewalling).

Herding such a zoo manually is, of course, hardly possible. There is always something to forget. Instead, we are running Puppet to ensure systems are correctly and consistently configured. Being able to auto-provision services, e.g., HotCRP instances for artefact evaluation, significantly reduces the overhead of providing such services.

Monitoring

"If you're not monitoring it, you're not running a service".

Well, that is, of course, true. Monitoring for measurement.network is done the (very, very) old-school way: with Nagios4 and Munin. A more 'recent' monitoring platform would probably be more fun, but the clear benefit of Munin and Nagios4 is that they work, while being simple and easily extensible.

Furthermore, we integrated Nagios4 and Munin into Puppet, meaning that monitoring is automatically provisioned as soon as a system is put in place or a new service is deployed. And, of course, we also have a weather map. ;-)
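The principle behind auto-provisioned monitoring is that check definitions are derived from the same inventory that describes the systems. In the real setup this happens inside Puppet; the Python below is only an illustration of the idea, with a hypothetical inventory, documentation addresses, and template and command names (`generic-host`, `generic-service`, `check_ssh`, `check_http`) assumed to exist as in common Nagios sample configurations.

```python
# Hypothetical inventory; in the real setup this knowledge lives in Puppet.
INVENTORY = [
    {
        "name": "web01.example.net",
        "address": "2001:db8::10",
        "services": [("SSH", "check_ssh"), ("HTTP", "check_http")],
    },
]


def nagios_host(name, address, template="generic-host"):
    """Render a Nagios host object definition."""
    return (
        "define host {\n"
        f"    use        {template}\n"
        f"    host_name  {name}\n"
        f"    address    {address}\n"
        "}\n"
    )


def nagios_service(host, description, command, template="generic-service"):
    """Render a Nagios service object definition for one check."""
    return (
        "define service {\n"
        f"    use                  {template}\n"
        f"    host_name            {host}\n"
        f"    service_description  {description}\n"
        f"    check_command        {command}\n"
        "}\n"
    )


def render_inventory(inventory):
    """Emit one host block per system and one service block per check."""
    blocks = []
    for system in inventory:
        blocks.append(nagios_host(system["name"], system["address"]))
        for description, command in system["services"]:
            blocks.append(nagios_service(system["name"], description, command))
    return "\n".join(blocks)


nagios_config = render_inventory(INVENTORY)
print(nagios_config)
```

Because the checks follow from the inventory, a newly added system cannot be deployed without also appearing in monitoring.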

Services

So, what is running on the infrastructure? Well, basically everything that is needed (and all the systems for artefact evaluation and measurements ;-)). This includes authoritative DNS, recursive DNS, the website, etc.

To make measurement.network actually usable, it also runs a submission system (already deployed, but yet to be opened). Of course, a method to share files should not be missing; this has been implemented with Nextcloud. All of that authenticates against a central authentication system, Keycloak in this case.

In essence, everything is there to get going. So stay tuned for the review system to open (or, if you are impatient and have some measurements to run, drop an email to contact@measurement.network :-)).
