In 2009, Google launched its Public DNS service, with its characteristic IP address 8.8.8.8. Since then, this service has grown to be the largest and best-known public DNS service in existence. Because public DNS services centralise resolution, large content delivery networks (CDNs), such as Akamai, can no longer rely on the source IP address of DNS queries to locate their customers: the query now arrives from the public resolver rather than from a resolver close to the client. As a result, they can no longer provide geo-based redirection appropriate for the client.

As a fix to this problem, the EDNS0 Client Subnet standard (RFC 7871) was proposed. Effectively, it allows (public) resolvers to add information about the client requesting the recursion to the DNS query that is sent to authoritative DNS servers. For example, if you send a query to Google DNS for the ‘A record’ of example-domain.com, they will ‘anonymise’ your IP to the /24 level (in the case of IPv4) and send it along to example-domain.com’s nameservers.
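The truncation step can be illustrated with a minimal sketch using Python's standard `ipaddress` module. The function name `client_subnet` is hypothetical, and the /56 default for IPv6 is an assumption based on the recommendation in RFC 7871 rather than something stated in this article; the addresses come from the documentation ranges.

```python
import ipaddress


def client_subnet(addr: str, v4_prefix: int = 24, v6_prefix: int = 56) -> str:
    """Return the truncated client subnet a resolver could place in an ECS option.

    The prefix lengths are assumptions: /24 for IPv4 (as described in the text)
    and /56 for IPv6 (the RFC 7871 recommendation).
    """
    ip = ipaddress.ip_address(addr)
    prefix = v4_prefix if ip.version == 4 else v6_prefix
    # strict=False zeroes the host bits instead of raising an error
    network = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
    return str(network)


print(client_subnet("203.0.113.77"))           # 203.0.113.0/24
print(client_subnet("2001:db8:abcd:1234::1"))  # 2001:db8:abcd:1200::/56
```

Only the network part reaches the authoritative nameserver, so the last octet of an IPv4 address is never disclosed.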

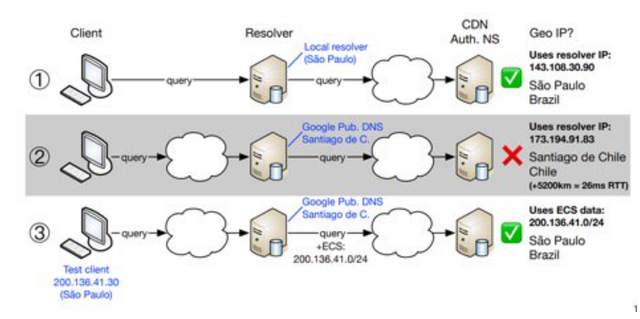

Figure 1 shows an example for a client in São Paulo, Brazil. In situation 1, the client uses a local resolver and is accurately geolocated to São Paulo. In situation 2, the client uses Google Public DNS, but the EDNS0 Client Subnet is not used. Because Google’s nearest Public DNS PoP is in Santiago de Chile, geolocation fails. Finally, situation 3 shows that if the EDNS0 Client Subnet is used, geolocation works again.

Figure 1: Three examples of clients using different resolvers

At a large authoritative DNS server located in the Netherlands, we collected over 3.5 billion queries from Google that contained the EDNS0 Client Subnet extension. These queries were collected over 2.5 years. In this post, we look at the highlights of what we can learn from this dataset.

Out-of-country answers

Since Google uses anycast for its DNS service, end users typically cannot easily see which location their request is being served from. From our perspective, however, we can see both the IP address that Google uses to reach our authoritative nameserver and the truncated IP address of the end user (via the EDNS0 Client Subnet).

Google publishes a list of IP addresses that they use for resolving queries on 8.8.8.8, and what geographic location those IPs map to.

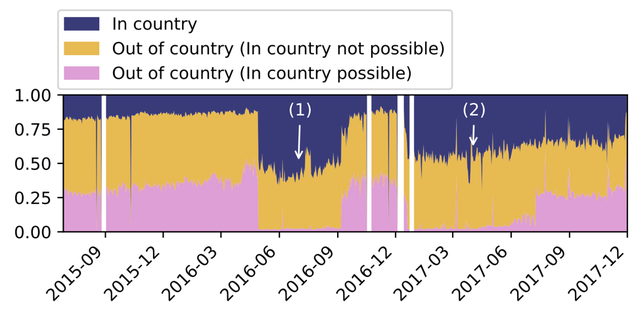

Figure 2 shows the queries from Google divided into three categories:

- Blue: queries served from the same country as the end-user.

- Yellow: queries not served from the same country, and Google does not have an active location in that country.

- Pink: queries not served from the same country, but Google does have an active location in that country.

Figure 2: Number of queries from Google divided into three categories: blue = in country; yellow = out of country; pink = out of country where Google has an active location
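The three categories can be expressed as a small decision function. This is only a sketch of the classification logic as described, not the code used for the study: the function name, the category labels, and the country codes (`XA`, `XB`, `XC` are user-assigned placeholder codes) are all hypothetical, and it assumes the client subnet and the Google resolver IP have already been mapped to countries.

```python
def classify_query(client_country: str, resolver_country: str,
                   google_countries: set[str]) -> str:
    """Assign a query to one of the three categories of Figure 2.

    client_country:   country of the end user (from the EDNS0 Client Subnet)
    resolver_country: country of the Google resolver that sent the query
    google_countries: countries in which Google has an active location
    """
    if client_country == resolver_country:
        return "in_country"                    # blue
    if client_country in google_countries:
        return "out_of_country_with_location"  # pink
    return "out_of_country_no_location"        # yellow


# Hypothetical example: Google is active in XA and XB, but not in XC.
active = {"XA", "XB"}
print(classify_query("XA", "XA", active))  # in_country
print(classify_query("XA", "XB", active))  # out_of_country_with_location
print(classify_query("XC", "XB", active))  # out_of_country_no_location
```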

It’s clear that a non-negligible fraction of queries are unnecessarily served from a location outside the economy of the end-user. Moreover, the end-user is likely not aware of this, and might assume that, since there is a Google DNS instance in the same economy, they are being served from that location.

Further investigation shows that quite often queries are served from countries that are actually far away. During periods 1 and 2 (marked in Figure 2) Google temporarily performed better in terms of ‘query locality’.

Outages drive adoption

Given that DNS is probably a fairly foreign concept to the average user, we wondered what could drive users to configure Google Public DNS. We suspected that ISP DNS outages might be one cause, and set out to verify this.

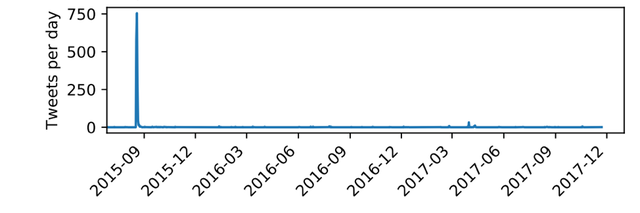

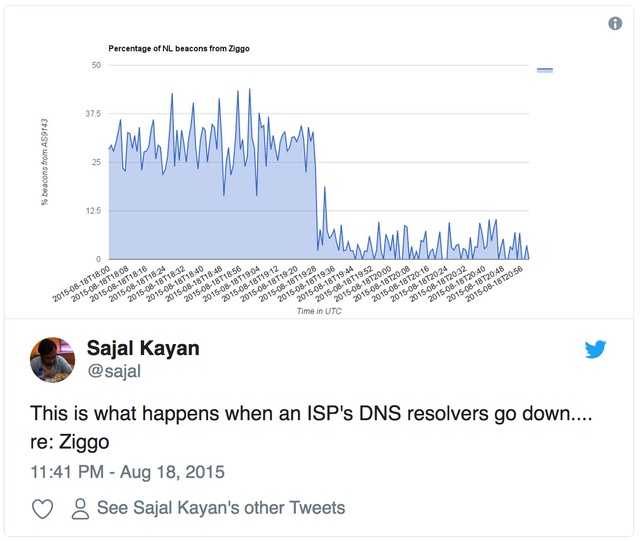

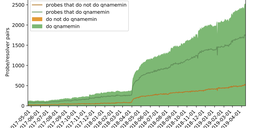

Back in 2015, the company Ziggo (one of the larger ISPs in the Netherlands) had a major DNS outage that lasted several days (caused by a DDoS attack), which prompted a lot of discussion on Twitter (Figure 3).

Figure 3: Tweets per day mentioning DNS and Ziggo

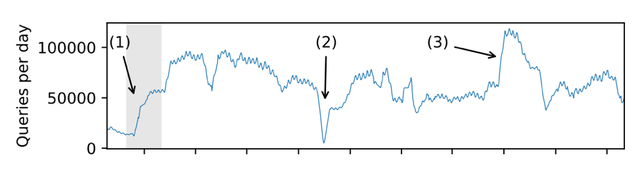

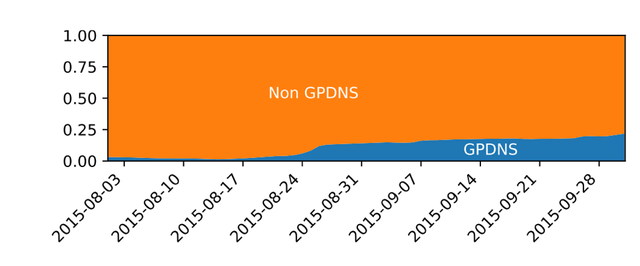

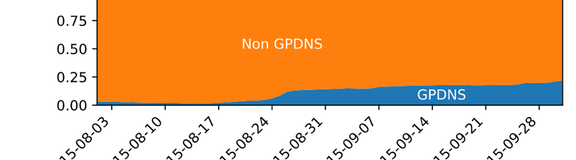

Figure 4 shows the number of queries from Ziggo end-users through Google rising rapidly shortly after the incident started (1). This was confirmed by looking at the fraction of ‘Google DNS queries’ and ‘Ziggo DNS queries’ (Figure 5).

Interestingly, the number of queries per day never went back down to its original level, except during an outage we had in data collection (Figure 4 (2)). Note that the increase at (3) in Figure 4 was a DNS flood performed by a single user.

Figure 4: Queries moving average over -5 and +5 days

Figure 5: Total queries (including non-Google) from Ziggo
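The smoothing named in the caption of Figure 4 is a centred moving average over the 5 days before and after each day. A minimal sketch is given below; the function name is hypothetical, and shrinking the window at the start and end of the series is an assumption, since the article does not say how the edges were handled.

```python
def centered_moving_average(values: list[float], half_window: int = 5) -> list[float]:
    """Average each point with up to `half_window` neighbours on either side.

    Near the edges the window shrinks to the available points
    (an assumption; other edge treatments are possible).
    """
    smoothed = []
    for i in range(len(values)):
        lo = max(0, i - half_window)
        hi = min(len(values), i + half_window + 1)
        window = values[lo:hi]
        smoothed.append(sum(window) / len(window))
    return smoothed


# Toy daily counts, smoothed over -1 and +1 days to keep the example short.
print(centered_moving_average([1, 2, 3, 4, 5], half_window=1))
# [1.5, 2.0, 3.0, 4.0, 4.5]
```

A centred window keeps the smoothed curve aligned with the date of the event, whereas a trailing window would shift the visible rise to later days.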

Anycast does not always behave as we think it should

We can see that anycast does not always behave as we think it should, and users concerned about their privacy might want to think twice about using a public DNS resolver that uses it: your packets may traverse far more of the Internet than you might expect.

We also see that users are willing to configure a different DNS resolver if their current one is not reliable, and tend not to switch back.

For a more in-depth analysis, we recommend that you take a look at our paper [PDF 780 KB]. Our dataset is also publicly available.

This was originally published on the APNIC blog.

Comments 1


Sylvain BAYA •

Hi Wouter, I think your team has done interesting work, because it brings more understanding of the DNS resolving service (Public|Private|ISP). But we can only imagine which tools|methods were used. I guess that, for more technical information, others who may want to reuse your work must read the paper you mentioned :-)

For a more balanced (in terms of public DNS resolvers) observation of the 'resolving public service', one may also want to look at Nishal's presentation [1] at #SAFNOG4. That's about Africa DNS, but it's interesting to consider the more local approach, used here, to make measurements... ;-) “..Africa is not a country...” he said, to explain why country (IXP) based measurements are the best way to see the real (local) impact of different public DNS resolvers, including Quad9, Quad1, OpenDNS and Quad8.

[1]: http://safnog.org/pdfs/2.nishal.goburdhan-safnog4.pdf

Thanks for sharing. Regards, --sb.