We measured the round-trip (RT) times of DNS queries for the SOA (Start of Authority) record, sent from RIPE Atlas probes to the root name servers over both UDP and TCP. When we plotted the TCP/UDP ratios, we found that, as expected, the ratio is around 2 for the majority of the measurements. However, some servers (including K-root) showed other values. Upon investigation, we found that the K-root servers were configured to allow at most 10 concurrent TCP connections, which produced many RT values much larger than those seen at other root servers. When we changed the configuration to allow 100 concurrent TCP connections, K-root showed ratios similar to those of the other root servers.

All active RIPE Atlas probes query every root name server for the SOA record of the root zone every 30 minutes, over both UDP and TCP. We used these results to determine the TCP/UDP response time ratio. The measured response time is the interval between sending the DNS query and receiving the complete response. Over UDP this takes one round trip. Over TCP, the 3-way handshake costs an extra round trip before the query can even be sent, so we expect a response over TCP to take roughly twice as long as a response over UDP.
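The expectation of a ratio of about 2 follows from counting round trips. The sketch below is a deliberately idealised model, assuming a fixed path RTT, no packet loss and negligible server processing time; the function `expected_response_time` and its `extra_round_trips` parameter are hypothetical and only illustrate the arithmetic.

```python
def expected_response_time(rtt: float, transport: str, extra_round_trips: int = 0) -> float:
    """Idealised time (s) from sending a query to receiving the full response.

    Assumes a fixed round-trip time, no loss and no server processing time.
    """
    if transport == "udp":
        round_trips = 1  # query out, response back
    elif transport == "tcp":
        round_trips = 2  # 3-way handshake, then query out and response back
    else:
        raise ValueError(f"unknown transport: {transport!r}")
    # extra_round_trips models any additional exchange a server might need
    return (round_trips + extra_round_trips) * rtt


rtt = 0.040  # hypothetical 40 ms round-trip time between probe and server
ratio = expected_response_time(rtt, "tcp") / expected_response_time(rtt, "udp")
print(ratio)  # 2.0
```

With `extra_round_trips=1` on the TCP side, the same model gives a TCP/UDP ratio of 3.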

A new RIPE Atlas map is available which shows these ratios per probe.

For each measurement cycle and each probe, we took the UDP and the TCP measurement. If they were taken within 150 seconds of each other, we considered them close enough to calculate the ratio; if they were further apart, we discarded both. We also discarded a measurement if we only had a UDP result and no TCP result for that cycle, or vice versa.
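The pairing rule above can be sketched as follows. This is not the code we used: the `Result` record, its field names and the per-cycle dictionaries keyed by probe ID are assumptions made for illustration, and the sample values are made up.

```python
from dataclasses import dataclass
from typing import Dict, Optional

MAX_GAP_SECONDS = 150  # UDP and TCP results further apart than this are discarded


@dataclass
class Result:
    timestamp: int  # hypothetical field: Unix time of the measurement
    rt_ms: float    # hypothetical field: response time in milliseconds


def tcp_udp_ratio(udp: Optional[Result], tcp: Optional[Result]) -> Optional[float]:
    """TCP/UDP response time ratio for one probe in one cycle, or None if discarded."""
    if udp is None or tcp is None:
        return None  # only one of the two transports has a result
    if abs(tcp.timestamp - udp.timestamp) > MAX_GAP_SECONDS:
        return None  # too far apart in time to be compared
    return tcp.rt_ms / udp.rt_ms


def ratios_for_cycle(udp_by_probe: Dict[int, Result],
                     tcp_by_probe: Dict[int, Result]) -> Dict[int, float]:
    """Ratios for every probe in one cycle that has a usable UDP/TCP pair."""
    ratios = {}
    for probe_id in sorted(udp_by_probe.keys() | tcp_by_probe.keys()):
        ratio = tcp_udp_ratio(udp_by_probe.get(probe_id), tcp_by_probe.get(probe_id))
        if ratio is not None:
            ratios[probe_id] = ratio
    return ratios


# Made-up sample data for one cycle
udp = {1: Result(1348099200, 20.0), 2: Result(1348099200, 30.0), 3: Result(1348099200, 25.0)}
tcp = {1: Result(1348099260, 41.0), 2: Result(1348099500, 60.0)}
print(ratios_for_cycle(udp, tcp))  # {1: 2.05}
```

Probe 2 is dropped because its results are 300 seconds apart, and probe 3 because it has no TCP result.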

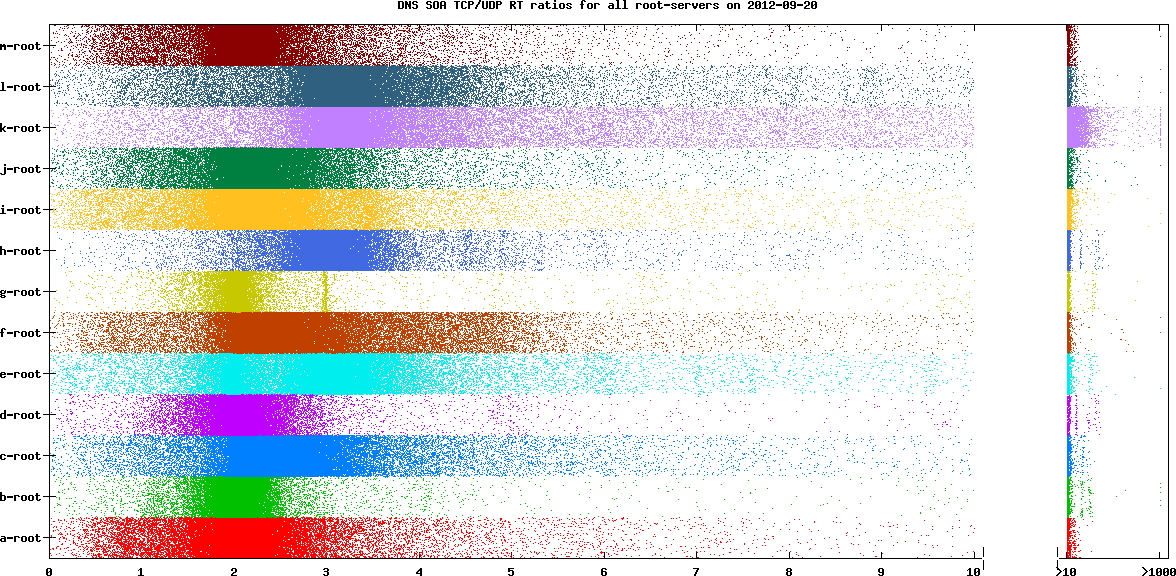

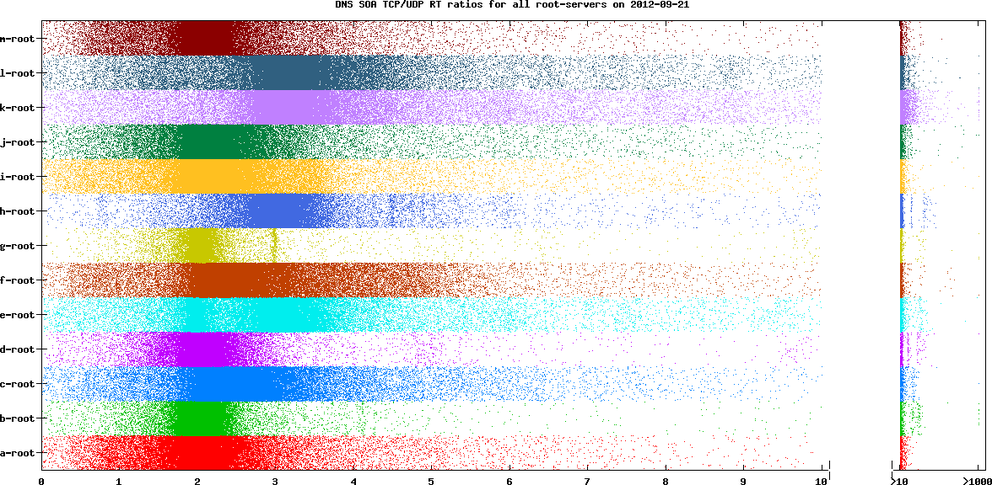

We then plotted all these ratios on a graph:

Figure 1: DNS SOA TCP/UDP RT ratios for all root servers on 20 September 2012

As you can see in the graph above for 20 September 2012, the densest area for most root servers is around a ratio of 2. Some, notably K-root and L-root, more often show a ratio of 3, and these two also show much more variation in the ratio, sometimes reaching 10. K-root even has many results above 10.

K-root's behaviour intrigued us, so we looked at its configuration. We found that it allowed a maximum of 10 concurrent TCP connections. As a result, some TCP queries were held in a queue until a connection could be established. K-root did not lose any of these requests, but it took longer to answer them, which shows up in the graph as many TCP responses with much larger RT values. Some queries may even have timed out, in which case they would not be included in the counts and graphs.
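To see why a connection limit inflates TCP response times, here is a toy first-come, first-served model. It is only an illustration under stated assumptions, not a description of how K-root's name server actually queues connections: each connection is assumed to hold one of `max_conns` slots for a fixed `hold_time`, and a client that finds every slot busy waits for the earliest one to free up. The function name is hypothetical.

```python
import heapq
from typing import List


def queueing_delays(arrivals: List[float], hold_time: float, max_conns: int) -> List[float]:
    """Extra wait (s) each TCP client incurs before it gets a connection slot.

    Toy model: every connection occupies one of max_conns slots for hold_time
    seconds; clients are served in arrival order.
    """
    free_at: List[float] = []  # min-heap: time at which each occupied slot frees up
    delays = []
    for t in sorted(arrivals):
        if len(free_at) < max_conns:
            start = t  # a slot has never been used yet
        else:
            earliest = heapq.heappop(free_at)
            start = max(t, earliest)  # wait if even the earliest slot is still busy
        heapq.heappush(free_at, start + hold_time)
        delays.append(start - t)
    return delays


burst = [0.0] * 20  # hypothetical: 20 TCP clients arriving at the same moment
print(max(queueing_delays(burst, hold_time=0.1, max_conns=10)))   # 0.1
print(max(queueing_delays(burst, hold_time=0.1, max_conns=100)))  # 0.0
```

Any wait a client spends in the queue is added to its measured TCP response time, while its UDP response time is unaffected, so the TCP/UDP ratio grows.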

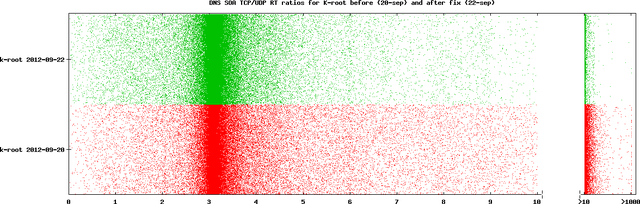

We changed K-root's configuration during the day on 21 September 2012 to allow 100 concurrent TCP connections. After this change, K-root's ratios were concentrated around 3. The following graph compares K-root over the full days of 20 and 22 September 2012.

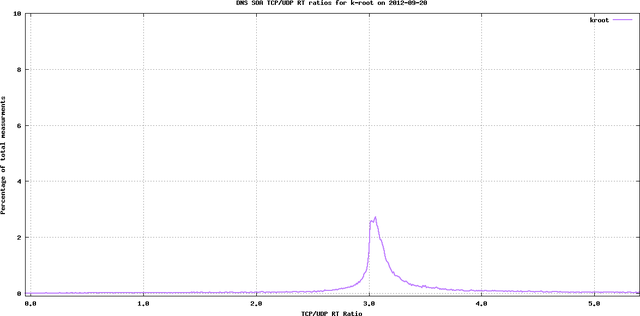

We also plotted histograms for various days. For these, we grouped the ratios into bins of width 0.01 and calculated the percentage of entries in each bin, relative to the total number of valid (used) measurements.

Figure 3 shows an example of this for K-root on 21 September:

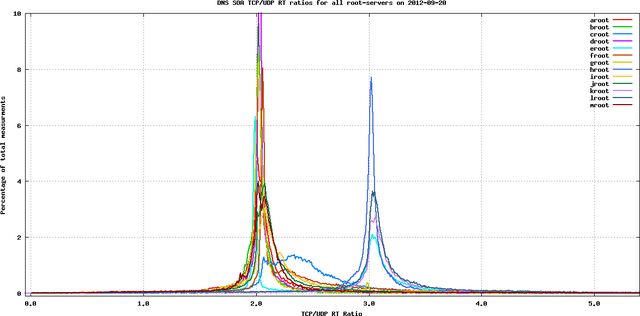
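The binning described above can be sketched like this. The function name is hypothetical, and it assumes the input contains only the valid ratios that were actually used, so the percentages are relative to those.

```python
import math
from collections import Counter
from typing import Dict, Iterable

BIN_WIDTH = 0.01  # in ratio units; the TCP/UDP ratio itself is dimensionless


def ratio_histogram(ratios: Iterable[float], bin_width: float = BIN_WIDTH) -> Dict[float, float]:
    """Map the lower edge of each bin to the percentage of ratios that fall in it."""
    # Round before flooring so values sitting exactly on an edge (e.g. 2.05)
    # are not pushed into the bin below by floating-point error.
    counts = Counter(math.floor(round(r / bin_width, 6)) for r in ratios)
    total = sum(counts.values())
    return {round(b * bin_width, 10): 100.0 * n / total for b, n in sorted(counts.items())}


print(ratio_histogram([2.0, 2.004, 2.05, 3.1]))  # {2.0: 50.0, 2.05: 25.0, 3.1: 25.0}
```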

Figure 4 shows all root servers together. For most root servers the ratio is around 2, but for some it is around 3:

For the complete set of graphs for 20 September 2012, see dns-probe-measurements 2012-09-20.

NSD's TCP behaviour

You'll notice in these graphs two distinct peaks, at ratios of 2 and 3. Three name servers show the peak at 3, and it turns out that all three run the NSD name server software. This suggested that NSD was taking longer to respond to TCP queries. We ran the following query against a BIND server and an NSD server and captured the packets:

dig +norec +tcp soa . @<name server>

First, let's look at how F-root (which runs BIND) responded:

193.0.10.249.49744 > 192.5.5.241.53: Flags [S], seq 3070710725, win 65535, options [mss 1460,nop,wscale 4,nop,nop,TS val 172155998 ecr 0,sackOK,eol], length 0

192.5.5.241.53 > 193.0.10.249.49744: Flags [S.], seq 3594360937, ack 3070710726, win 65535, options [mss 1460,nop,wscale 3,sackOK,TS val 1909669925 ecr 172155998], length 0

193.0.10.249.49744 > 192.5.5.241.53: Flags [.], ack 1, win 8235, options [nop,nop,TS val 172156005 ecr 1909669925], length 0

193.0.10.249.49744 > 192.5.5.241.53: Flags [P.], seq 1:20, ack 1, win 8235, options [nop,nop,TS val 172156005 ecr 1909669925], length 19: 52227+ SOA? . (17)

192.5.5.241.53 > 193.0.10.249.49744: Flags [P.], seq 1:748, ack 20, win 8326, options [nop,nop,TS val 1909669936 ecr 172156005], length 747: 52227*- 1/13/22 SOA (745)

193.0.10.249.49744 > 192.5.5.241.53: Flags [.], ack 748, win 8188, options [nop,nop,TS val 172156016 ecr 1909669936], length 0

193.0.10.249.49744 > 192.5.5.241.53: Flags [F.], seq 20, ack 748, win 8192, options [nop,nop,TS val 172156019 ecr 1909669936], length 0

192.5.5.241.53 > 193.0.10.249.49744: Flags [.], ack 21, win 8326, options [nop,nop,TS val 1909669946 ecr 172156019], length 0

193.0.10.249.49744 > 192.5.5.241.53: Flags [.], ack 748, win 8192, options [nop,nop,TS val 172156025 ecr 1909669946], length 0

192.5.5.241.53 > 193.0.10.249.49744: Flags [F.], seq 748, ack 21, win 8326, options [nop,nop,TS val 1909669946 ecr 172156019], length 0

193.0.10.249.49744 > 192.5.5.241.53: Flags [.], ack 749, win 8192, options [nop,nop,TS val 172156025 ecr 1909669946], length 0

Next, let's see how K-root (which runs NSD) responded:

193.0.10.249.49743 > 193.0.14.129.53: Flags [S], seq 2260025309, win 65535, options [mss 1460,nop,wscale 4,nop,nop,TS val 172152327 ecr 0,sackOK,eol], length 0

193.0.14.129.53 > 193.0.10.249.49743: Flags [S.], seq 2528398468, ack 2260025310, win 5792, options [mss 1460,sackOK,TS val 2332945284 ecr 172152327,nop,wscale 2], length 0

193.0.10.249.49743 > 193.0.14.129.53: Flags [.], ack 1, win 8235, options [nop,nop,TS val 172152328 ecr 2332945284], length 0

193.0.10.249.49743 > 193.0.14.129.53: Flags [P.], seq 1:20, ack 1, win 8235, options [nop,nop,TS val 172152328 ecr 2332945284], length 19: 14386+ SOA? . (17)

193.0.14.129.53 > 193.0.10.249.49743: Flags [.], ack 20, win 1448, options [nop,nop,TS val 2332945285 ecr 172152328], length 0

193.0.14.129.53 > 193.0.10.249.49743: Flags [P.], seq 1:3, ack 20, win 1448, options [nop,nop,TS val 2332945286 ecr 172152328], length 2

193.0.10.249.49743 > 193.0.14.129.53: Flags [.], ack 3, win 8235, options [nop,nop,TS val 172152329 ecr 2332945286], length 0

193.0.14.129.53 > 193.0.10.249.49743: Flags [P.], seq 3:748, ack 20, win 1448, options [nop,nop,TS val 2332945287 ecr 172152329], length 745: 34048 [b2&3=0x1] [13a] [22n] NS? ^@^A^@^@^F^@.^@.Q.^@@^Aa^Lroot-servers^Cnet^@^Enstld^Lverisign-grs^Ccom^@wM-n;|^@^@^G^H^@^@^CM-^D^@^I:M-^@^@^AQM-^@^@^@^B^@^A^@^GM-i^@^@^BM-@^\^@^@^B^@^A^@^GM-i^@^@^D^AbM-@^^^@^@^B^@^A^@^GM-i^@^@^D^AcM-@^^^@^@^B^@^A^@^GM-i^@^@^D^AdM-@^^^@^@^B^@.^@.M-i^@^@^D^AeM-@.^@^@^B^@^A^@^GM-i^@^@^D^AfM-@^^^@^@^B^@^A^@^GM-i^@^@^D^AgM-@^^. (743)

193.0.10.249.49743 > 193.0.14.129.53: Flags [.], ack 748, win 8188, options [nop,nop,TS val 172152330 ecr 2332945287], length 0

193.0.10.249.49743 > 193.0.14.129.53: Flags [F.], seq 20, ack 748, win 8192, options [nop,nop,TS val 172152332 ecr 2332945287], length 0

193.0.14.129.53 > 193.0.10.249.49743: Flags [F.], seq 748, ack 21, win 1448, options [nop,nop,TS val 2332945292 ecr 172152332], length 0

193.0.10.249.49743 > 193.0.14.129.53: Flags [.], ack 749, win 8192, options [nop,nop,TS val 172152333 ecr 2332945292], length 0

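You can see that BIND sends its response in a single TCP segment, whereas NSD sends the same response in two. NSD first sends a segment whose payload is just two bytes (the one with length 2 above): the length of the following DNS message, which DNS over TCP requires in front of every message. It then sends the actual DNS message in a second TCP segment. (tcpdump decodes that second segment incorrectly because it expects a length prefix at the start of the segment.) In the trace, NSD only sends the second segment after the client has acknowledged the first one: its echoed timestamp (ecr 172152329) matches the client's ACK. This means NSD needs an additional round trip to finish sending a response over TCP.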
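For reference, DNS over TCP frames each message with a two-byte length field in network byte order (RFC 1035, section 4.2.2). The helper names below are hypothetical; the sketch only shows the framing. For the 745-byte SOA response in the traces, the prefix is 0x02E9, and prefix plus message add up to the 747 bytes that BIND sent in one segment and NSD spread over two.

```python
import struct


def frame_dns_tcp(message: bytes) -> bytes:
    """Prefix a DNS message with its length as an unsigned 16-bit big-endian integer."""
    if len(message) > 0xFFFF:
        raise ValueError("DNS message too long for a two-byte length field")
    return struct.pack("!H", len(message)) + message


def unframe_dns_tcp(stream: bytes) -> bytes:
    """Return the first complete DNS message from bytes read off a TCP connection."""
    if len(stream) < 2:
        raise ValueError("length field not yet received")
    (length,) = struct.unpack("!H", stream[:2])
    message = stream[2:2 + length]
    if len(message) < length:
        raise ValueError("DNS message not yet complete")
    return message


framed = frame_dns_tcp(bytes(745))  # placeholder body with the size of the SOA response
print(framed[:2].hex(), len(framed))  # 02e9 747
```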

We brought this difference to the attention of the NSD developers. After some discussion and with a suggestion from one of our colleagues, they decided to make use of the writev() system call. This allows NSD to bundle the two-byte length and the following DNS message into the same TCP response packet. This change was made to NSD in SVN commits 3644 and 3645, and will be part of NSD 3 in release 3.2.14 as well as in the upcoming NSD 4.
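The difference between the two approaches can be sketched in Python, whose `os.writev()` wraps the same system call (Unix only). This is not NSD's code, which is written in C, and the function names are hypothetical. A `socket.socketpair()` stands in for the TCP connection, so the example can only show that the receiver gets the same byte stream either way; whether one gathered write actually leaves as a single TCP segment is up to the kernel's TCP stack.

```python
import os
import socket
import struct


def send_two_writes(sock: socket.socket, message: bytes) -> None:
    """Length prefix and message in separate writes (roughly the pattern in the NSD trace)."""
    sock.sendall(struct.pack("!H", len(message)))
    sock.sendall(message)


def send_writev(sock: socket.socket, message: bytes) -> None:
    """Length prefix and message handed to the kernel in a single writev() call."""
    prefix = struct.pack("!H", len(message))
    sent = os.writev(sock.fileno(), [prefix, message])
    if sent < len(prefix) + len(message):
        sock.sendall((prefix + message)[sent:])  # finish a partial write


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes from a stream socket."""
    data = b""
    while len(data) < n:
        chunk = sock.recv(n - len(data))
        if not chunk:
            raise ConnectionError("connection closed early")
        data += chunk
    return data


message = b"\x12\x34" + bytes(10)  # placeholder 12-byte "DNS message"
a, b = socket.socketpair()
send_two_writes(a, message)
received_two = recv_exact(b, 2 + len(message))
send_writev(a, message)
received_writev = recv_exact(b, 2 + len(message))
a.close()
b.close()
print(received_two == received_writev)  # True
```

The bytes on the wire are identical; what writev() changes is that the kernel receives the length and the message together, so it has no reason to send the two bytes on their own.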

C-root

In the graph, C-root also stood out. The ratios for C-root do not show any clear peak at either 2 or 3. Instead, the ratios are spread fairly evenly between 2 and 3. We don't know what's causing this, and we would be interested to hear from you if you know the cause, or have ideas about what may be happening.

Future steps

In a follow-up article we will describe more findings based on these RIPE Atlas queries.

If you have any questions, suggestions or concerns, please post them below!

Comments

Greg A. Woods

An interesting analysis for sure, but I'm rather surprised that nobody had spotted that little 2-byte packet in a direct packet analysis of the TCP responses from NSD that could or should have been done during testing. It will be most interesting to learn how and why this wasn't spotted earlier.

Bert Wijnen

From our point of view: The TCP issue wasn't a bug: just sub-optimal. Also, it was already known (and reported on the nsd-users mailing list), but since it wasn't a big deal (TCP performance isn't crucial to a DNS server), there was no urgent need to fix it.