Last week we published a proposal for new organizational layers in order to be more effective in fighting spam. In this article we describe planned experiments to determine the effects of outbound spam reputation on Internet organizations.

These layers can bring quantification and experimental rigor to a commons model of the Internet, while providing the reputational and economic motivation needed to organize IT security to defeat the spam and botnet black market. First, some background on the current state of Internet economic externalities, a dive fifty years into the past for the origins of social comparison theory, and then the experiments.

Unfortunate Economic Externalities

Currently E-Mail Service Providers (ESPs) have no direct incentive to control spam that originates from their networks and affects others. They consider it an economic externality: somebody else's problem. ESPs' investments are usually prompted by the incentive to provide better service for their own customers, or sometimes not even that:

“Businesses put their profits above defending their customers and business partners.” ( Herzog2010 )

Internet security professionals are starting to recognize that security metrics are required to replace fear, uncertainty, and doubt in the Internet ( Jaquith2007 ). To date, however, while metrics have been deployed extensively within organizations ( Seiersen2010 ), the ignored elephant in the room remains “the necessity of comparative analytics” across organizations ( Hutton2010 ).

Social Comparison Theory

Fifty years of social science research and literature indicates that making behavior public changes that behavior ( Festinger1954 ). People actually do care how well they are doing compared to similar people, and if they are given a way to accurately make such comparisons, they tend to act on them.

A field experiment on Internet movie ratings found that letting everybody know how others rated movies changes behavior positively ( Chen2010 ).

“When given outcome information about the average user's net benefit score, above-average users mainly engage in activities that help others. Our findings suggest that effective personalized social information can increase the level of public goods provision.”

Those findings confirm previous research ( Ba2002 ) on trust in information asymmetry:

“... when bidders view a product listing at an online auction site, for example, they may not have easy access to information regarding the true quality of the product and therefore may be unable to judge product quality prior to purchase ( Fung and Lee 1999 ). The difference between the information buyers and sellers possess is referred to as information asymmetry.”

Developing trust through reputation does more than just facilitate an auction market in general; it provides advantages for trusted sellers:

“Trust can mitigate information asymmetry by reducing transaction-specific risks, therefore generating price premiums for reputable sellers.”

Or, in other words, reputation enables price differentiation for sellers with good reputation, which in turn provides incentive for sellers to develop a good reputation by providing good service.

“To promote trust and reduce opportunism in the electronic market, credible signals should be provided to differentiate among sellers and give them incentives to be trustworthy.”

Reputation turns cheap talk into useful market signals. That particular research was in online auctions, in which the buyers and sellers may be individuals. Applying the same kind of reputational transparency to Internet organizations should affect organizational behavior similarly.

For example, Secunia examines the security of computers, software, and software vendors, including such metrics as how many patches are not installed ( Frei2010 ). The most insecure programs and vendors are not who you might think. Not Microsoft: some well-known third-party software actually wins that honor. Microsoft has already reacted to its bad reputation by implementing an automatic update system that is widely used. When presenting that paper, the author mentioned another software vendor (open source in this case) that had come out poorly in earlier rankings and then, apparently because of that poor showing, implemented a better automatic update system and greatly improved its ranking.

Internet Experiments

How does the reputation system itself change Internet security?

Placebo rankings for a control group would not be prudent. Fortunately, there is another way. Deploying a reputation system on a worldwide scale will take time, since its rankings need multiple time scales (such as day, week, month), groupings by organization type (ISPs, banks, medical) or by economic class (Fortune 500), normalizations (by addresses, customers, employees), and derivative rankings such as

- how quickly they get infested by bots (from resistant to susceptible),

- how quickly they stop spam from infested nodes (from prompt to dilatory),

- and how frequently nodes get re-infested (from resilient to recidivist).
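As a minimal sketch of how two of these derivative measures might be computed, assume (hypothetically) that for each address we have the day numbers on which blocklist data showed spam from it. The function names, the input format, and the rule that a run of consecutive listed days counts as one infestation are illustrative assumptions, not definitions taken from the proposal.

```python
def infestation_episodes(listed_days):
    """Group day numbers into runs of consecutive days (hypothetical episode rule)."""
    episodes = []
    for day in sorted(set(listed_days)):
        if episodes and day == episodes[-1][1] + 1:
            # Day directly follows the current run, so extend that episode.
            episodes[-1][1] = day
        else:
            # A gap (or the first day) starts a new episode.
            episodes.append([day, day])
    return [(start, end) for start, end in episodes]


def summarize(listed_days):
    """Return the episode count, re-infestation count, and mean episode length in days."""
    episodes = infestation_episodes(listed_days)
    durations = [end - start + 1 for start, end in episodes]
    return {
        "episodes": len(episodes),
        "reinfestations": max(len(episodes) - 1, 0),
        "mean_days_to_clear": sum(durations) / len(durations) if durations else 0.0,
    }


# Made-up example: one address listed on days 1-3, 10-11, and 20 of a month.
summary = summarize([1, 2, 3, 10, 11, 20])
print(summary)
```

Averaging `mean_days_to_clear` over an organization's addresses would place it somewhere between prompt and dilatory, and averaging `reinfestations` would place it between resilient and recidivist. How best to aggregate is left open here.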

One finding of social comparison theory is that people are most likely to change their behavior when they know how they compare to similar people. Secunia's results indicate this may also be true of Internet organizations. Comparing a bank to an ISP isn't likely to make either care. Comparing banks to banks is likely to make the banks care. Similarly, comparing a bank in Belgium to a bank in Texas is not as likely to make either care as comparing banks in Belgium to each other.

The desirability of many rankings of small groups of like organizations is an opportunity for experiments during rollout. If, for example, Belgium and the Netherlands are similar in how they deal with the Internet, then while deploying rankings for organizations in Belgium, organizations in the Netherlands serve as a control group. That is, the rankings would already be generated automatically so that the experimenters could see them, but rankings for Belgium would be made public first, while observing whether any changes appear in them that have no parallels in the Netherlands. Then the Netherlands rankings would be made public to see whether similar changes appear in them.
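One common way to read a staged rollout like this is a difference-in-differences comparison: the change in the group whose rankings were published minus the change in the control group over the same period. The sketch below only illustrates that assumption. It is not a method stated in the proposal, and all numbers in it are made up.

```python
from statistics import mean


def difference_in_differences(treated_before, treated_after,
                              control_before, control_after):
    """Change in the treated group minus change in the control group."""
    treated_change = mean(treated_after) - mean(treated_before)
    control_change = mean(control_after) - mean(control_before)
    return treated_change - control_change


# Hypothetical daily spam volumes (made-up numbers, for illustration only).
belgium_before = [100, 110, 90]          # before Belgian rankings go public
belgium_after = [70, 60, 80]             # after Belgian rankings go public
netherlands_before = [200, 210, 190]     # control group, same days as above
netherlands_after = [190, 200, 180]

effect = difference_in_differences(belgium_before, belgium_after,
                                   netherlands_before, netherlands_after)
print(effect)
```

A negative value would suggest that spam volume fell more in the published group than in the control group. A real analysis would also need to account for differences in size between the groups and for day-to-day noise.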

Rankings in Belgium

Table 1 shows one possible kind of ranking for Belgium, in this case by spam volume (spam message counts) derived from custom data from the CBL blocklist. These example rankings are for all Autonomous System Numbers (ASNs) registered for Belgium. The data permits us to dig deeper and find specific types of organizations (banks, medical, etc.) but we do not show that here.

| Volume (spam messages) | Size (IP addresses) | ASN | CC | Description |
|---|---|---|---|---|
| 5621169 | 2688256 | 5432 | BE | BELGACOM-SKYNET-AS Belgacom regional ASN |
| 2337280 | 106240 | 41451 | BE | TELEDIS-AS TELEDIS AS |
| 1357564 | 308224 | 12392 | BE | ASBRUTELE AS Object for Brutele SC |
| 1204960 | 864256 | 3304 | BE | SCARLET Scarlet Belgium |
| 947642 | 1544888 | 6848 | BE | TELENET-AS Telenet Operaties N.V. |
| 517562 | 237568 | 12493 | BE | AS12493 be.mobistar Autonomous System |
| 474940 | 86016 | 21491 | BE | UGANDA-TELECOM Uganda Telecom |
| 387094 | 34816 | 29587 | BE | SCHEDOM-AS schedom-europe.net autonomous system |
| 325056 | 8192 | 48315 | BE | ALPHANETORKS-AS Alpha Networks S.P.R.L. _s AS number |
| 304500 | 14336 | 25395 | BE | Gateway Communications |

Table 1: Most Spam Volume from Belgium, October 2010. Volume (spam message counts) derived by the IIAR project from custom CBL blocklist data.

The AS with the most spam volume from Belgium is also the one with the most IP address space, which is no surprise. However, the AS with the second most address space is number 5 in volume, not number 2. Many other factors probably contribute to these rankings, such as the specific IT security techniques, procedures, and processes (infosec) in use at the organization owning the ASN. Traditional infosec, if applied well, may help prevent or stop botnet infestations and suppress inbound spam. Organizations that show up high in such rankings may decide to also do something about outbound spam.
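To make the relationship between volume and address space concrete, here is a small sketch that divides each Table 1 volume by the corresponding address-space size and re-sorts. The rows are copied from Table 1. The function name and the choice of a plain ratio are illustrative assumptions, not the project's actual normalization.

```python
# Rows copied from Table 1: (spam volume, address-space size, ASN).
TABLE_1 = [
    (5621169, 2688256, 5432),
    (2337280, 106240, 41451),
    (1357564, 308224, 12392),
    (1204960, 864256, 3304),
    (947642, 1544888, 6848),
    (517562, 237568, 12493),
    (474940, 86016, 21491),
    (387094, 34816, 29587),
    (325056, 8192, 48315),
    (304500, 14336, 25395),
]


def rank_by_spam_per_address(rows):
    """Return (asn, spam messages per address) pairs, highest ratio first."""
    ratios = [(asn, volume / size) for volume, size, asn in rows]
    return sorted(ratios, key=lambda pair: pair[1], reverse=True)


normalized = rank_by_spam_per_address(TABLE_1)
for asn, ratio in normalized:
    print(f"AS{asn}: {ratio:.2f} spam messages per address")
```

On these ten rows the order changes considerably. AS48315, which has the smallest address space in the table, moves to the top, while AS6848, with the second-largest address space, drops to the bottom.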

This particular list contains two ASNs for organizations that seem to mostly operate in Africa, not Belgium:

- Uganda Telecom (ASN 21491)

- Gateway Communications (ASN 25395)

The RIPE NCC tells us that Uganda Telecom got its ASN directly from RIPE NCC before AfriNIC existed, and Gateway Communications is a Belgian organisation also providing services in Africa.

This leads to some interesting questions:

- If an ASN is currently registered under the RIPE NCC (or ARIN, or any other of the five Regional Internet Registries (RIRs)), but would be registered under AfriNIC (or LACNIC, or another RIR in a different region) if it were registered for the first time today, should that ASN be counted as RIPE NCC or AfriNIC for rankings?

- If an ASN mostly does business in a country or countries far from where it is registered, in what country should it be counted for rankings?

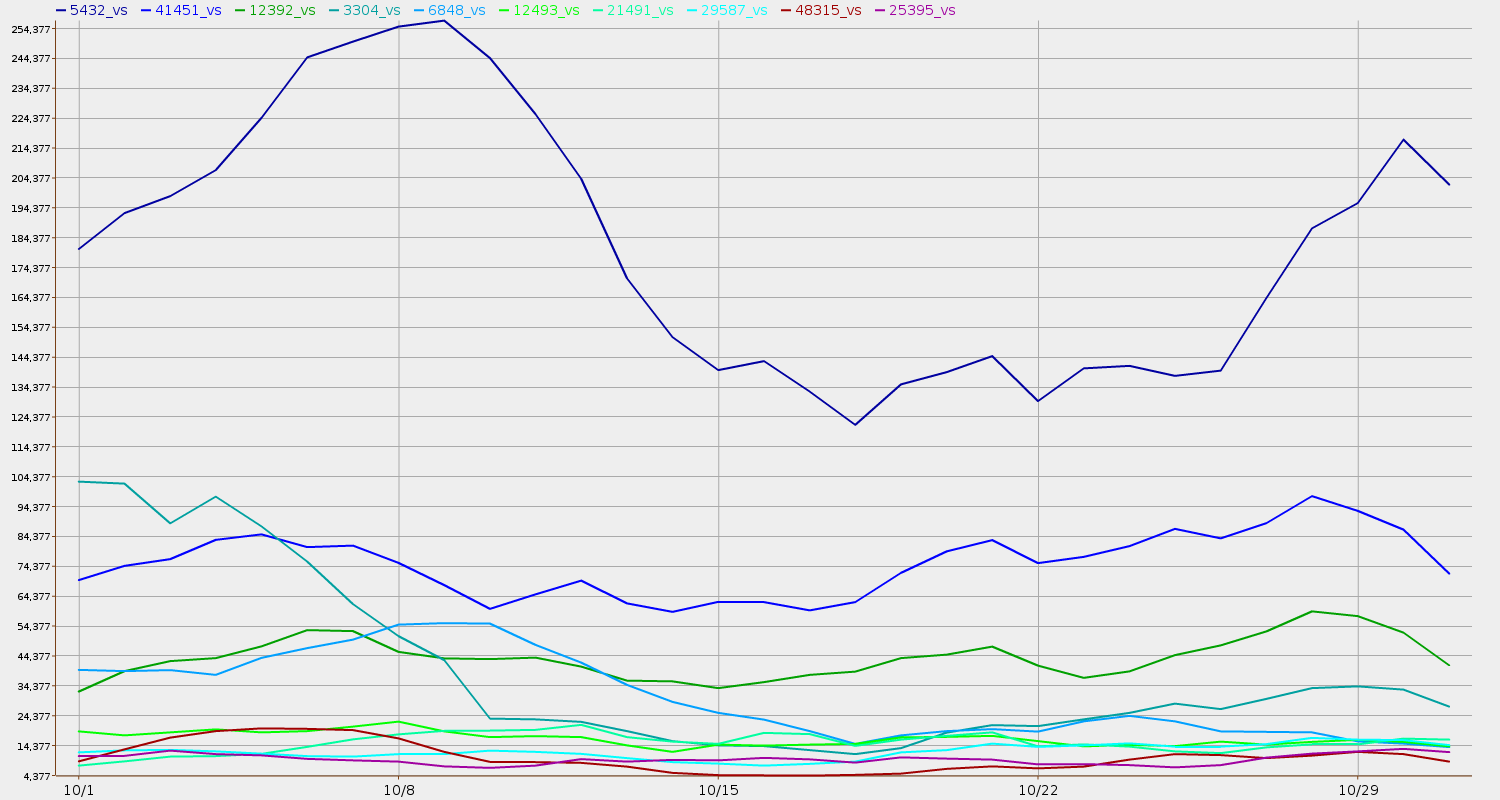

In Figure 1, we can see that towards the beginning of the month the second-highest ASN drops rapidly to fifth place and keeps going down in spam volume.

Figure 1: Most Spam Volume from Belgium, October 2010. Volume (spam message counts) derived by the IIAR project from custom CBL blocklist data.

This is why rankings need to be updated frequently. They can change rapidly, and such changes may correspond to changes in infosec (or even changes in pricing) at specific ASNs.

Rankings in the Netherlands

Table 2 shows the top 10 ASNs in the Netherlands by spam volume for October 2010.

| Volume (spam messages) | Size (IP addresses) | ASN | CC | Description |
|---|---|---|---|---|
| 33224499 | 3488000 | 9143 | NL | ZIGGO Ziggo - tv internet telefoon |
| 14826178 | 442368 | 5615 | NL | TISNL-BACKBONE Telfort B.V. |
| 8930184 | 2454784 | 286 | NL | KPN KPN Internet Backbone |
| 1767160 | 182272 | 15670 | NL | BBNED-AS |
| 1583520 | 1513984 | 13127 | NL | VERSATEL AS for the Trans-European Tele2 IP Transport backbone |
| 1439155 | 115456 | 12634 | NL | SCARLET Autonomous System for Scarlet Telecom B.V. |
| 1234552 | 65536 | 28685 | NL | ASN-ROUTIT Routit BV EDE The Netherlands |
| 1169493 | 139520 | 12414 | NL | NL-SOLCON SOLCON |
| 1155735 | 131072 | 15435 | NL | KABELFOON CAIW Autonomous System |
| 957329 | 1115392 | 3265 | NL | XS4ALL-NL XS4ALL |

Table 2: Most Spam Volume from Netherlands, October 2010. Volume (spam message counts) derived by the IIAR project from custom CBL blocklist data.

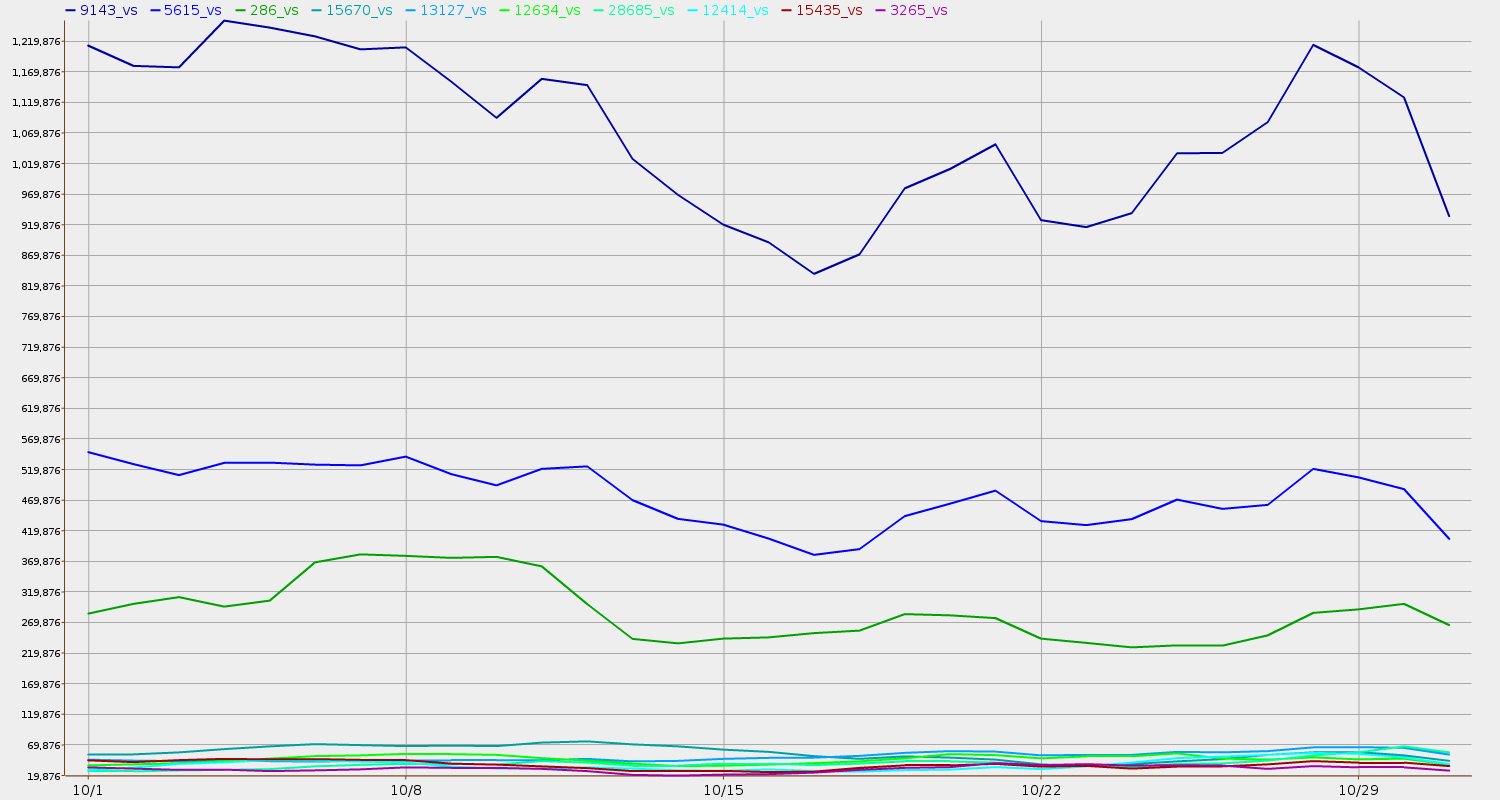

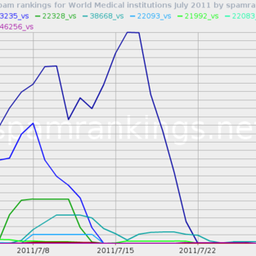

Figure 2 shows daily change in rankings for those same top 10 Dutch ASNs.

Figure 2: Most Spam Volume from Netherlands, October 2010. Volume (spam message counts) derived by the IIAR project from custom CBL blocklist data.

These Dutch rankings don't seem to include any organizations that may need to be ranked in a different region. Or do they? People in the Netherlands may see something in this data that we do not. That's one of the points of such rankings: many eyes to debug the Internet.

Rollout of Additional Rankings

The initial rankings deployed for Belgium and later the Netherlands do not have to be all the rankings related to those countries. Each additional set of rankings (by additional organizational types, different normalizations, different derivatives, etc.) is another experimental opportunity, as are later changes to already-deployed rankings, and of course deployments to additional countries.

Organizational Participation

Some organizations can be invited to participate more directly in these experiments by providing feedback that can help tune the rankings. Some such feedback has already been received: some large ISPs are dubious about rankings purely by volume of spam observed, and would prefer rankings normalized by, for example, size of address space. That normalization actually favors organizations with very small numbers of IP addresses (probably plus organizations with a few high-volume spammers), so further tuning is needed. This kind of development of appropriate rankings is greatly aided by participation of ranked organizations.

Participating organizations can also engage in a different class of experiments in which the organizations change their infosec and they and the Reputation System Organization (RSO) observe how the rankings change in response. This type of experiment does not require building traditional infosec chains of connections: instead just try something new and see the resulting measurements. Simulations are also not needed: the Internet itself is the laboratory.

To assist organizational feedback and both kinds of experiments, the RSO can provide drilldowns to help the participating organizations determine why they appear the way they do in the rankings. This kind of interaction will help develop drilldown techniques and reports that can be systematized and automated for deployment as drilldown products. Such drilldowns can also be used later by insurers in due diligence before issuing insurance policies, and in claims adjustments.

Pricing Correlations

With access to some external information, further experiments could be conducted. As Ba and Pavlou note ( Ba2002 ):

“Trust is especially critical when two situational factors are present in a transaction: uncertainty (risk) and incomplete product information (information asymmetry) (Swan and Nolan 1985).”

Outbound spam is an indicator of botnet infestation, and nobody wants higher risk of that. Currently there is very little information publicly available in a digestible form about which ESPs send more outbound spam. So both of these situational factors apply. Thus we would expect some of Ba and Pavlou's hypotheses to apply, such as this one:

Price Premium Hypothesis (H3): Higher trust in the seller's credibility results in higher price premiums for an identical product or service.

Testing this hypothesis requires observing ESP pricing for related products (email, hosting, web services, etc.) and seeing if they change with rankings rollouts, or with changes in rankings over time.

Since gathering such information is time-consuming, it would be good to be able to select small samples to work with. This hypothesis could help with that:

Expensive Product Hypothesis (H4): The relationship between trust and price premiums is stronger for expensive products than for inexpensive products.

So for at least initial experiments, it would be useful to find out which are the most expensive providers and watch their prices.

This would be a long-term set of experiments that would require tuning those hypotheses, accounting for ISPs that prefer to gain market share rather than raise their prices, and other interesting phenomena in the real-world Internet environment. Simply observing price changes would take several months at least. A set of pricing experiments might take years to show any conclusive results as to effects produced by the reputation system. However, price changes are a likely third-level effect to explore after changes in the rankings and changes in infosec.

Summary

The Internet itself is our laboratory to conduct experiments to see which organizations change as we roll out the rankings: what flinches and what blooms when we shine a light on Internet security. While the data we are using is specifically about spam, most of that spam comes from botnets, which are implicated in other Internet security problems as well, so these experiments, rankings, and organizational layers should affect Internet security in general. Further, reputation has effects beyond its origin ( Ba2002 ):

“A good reputation, and the trust associated with it, works not only in the market where it is originally generated. Research has shown that trust is transferable (Lewicki and Bunker 1995). Sellers could use an accumulated positive reputation to receive economic advantages in different settings.”

Such a reputation system, and the certificates, SLA self-insurance, and insurance policies that can be built out of it, should provide transparency and economic incentives to help all Internet stakeholders, from banks to ISPs to Law Enforcement Organizations (LEOs) to users, cooperate to implement a much more secure Internet.

Acknowledgments

This material is based upon work supported by the National Science Foundation under Grant No. 0831338. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

We also gratefully acknowledge custom data from CBL, PSBL, Fletcher Mattox and the University of Texas Computer Science Department, Quarterman Creations, Gretchen Phillips and GP Enterprise, and especially Team Cymru. None of them are responsible for anything we do, either.

John S. Quarterman for the IIAR project, Andrew B. Whinston PI, Serpil Sayin, Eshwaran Vijaya Kumar, Jouni Reinikainen, Joni Ahlroth, and other previous personnel.

antispam@quarterman.com

References

- Ba2002: Sulin Ba and Paul A. Pavlou. Evidence of the effect of trust building technology in electronic markets: Price premiums and buyer behavior. MIS Quarterly, 26(3):243-268, September 2002.

- Chen2010: Yan Chen, F. Maxwell Harper, Joseph Konstan, and Sherry Xin Li. Social comparisons and contributions to online communities: A field experiment on MovieLens. American Economic Review, (100):1358-1398, September 2010.

- Collins2007: M. Patrick Collins, Timothy J. Shimeall, Sidney Faber, Jeff Janies, Rhiannon Weaver, Markus De Shon, and Joseph Kadane. Using uncleanliness to predict future botnet addresses. In IMC '07: Proceedings of the 7th ACM SIGCOMM conference on Internet measurement, pages 93-104, New York, NY, USA, 2007. ACM.

- Festinger1954: Leon Festinger. A theory of social comparison processes. Human Relations, (7):117-140, 1954.

- Frei2010: Stefan Frei. The security of end-user PCs: an empirical analysis. In DDCSW: Collaborative Data-Driven Security for High Performance Networks. Internet2 and WUSTL, August 2010.

- Fung and Lee 1999: R. Fung and M. Lee. EC-trust (trust in electronic commerce): Exploring the antecedent factors. In W. D. Haseman and D. L. Nazareth, editors, Proceedings of the Fifth Americas Conference on Information Systems, pages 517-519, 1999.

- Herzog2010: Pete Herzog. Better security through sacrificing maidens. infosecisland, August 2010.

- Hutton2010: Alex Hutton. Bridging risk modeling, threat modeling, and operational metrics with the VERIS framework. In Metricon 5.0, August 2010.

- Jaquith2007: Andrew Jaquith. Security Metrics: Replacing Fear, Uncertainty, and Doubt. Addison-Wesley Professional, 2007.

- Seiersen2010: Richard Seiersen. Practical security metrics in the 4th dimension. In Metricon 5.0, August 2010.
