For 20 years, the RIPE NCC counted the number of hosts in its service region. The Internet has grown dramatically since then and there are various ways to measure that growth. The article below describes the history of the Hostcount, the challenges we faced over the years and the reasons why we intend to stop doing the Hostcount as of January 2011.

When the Hostcount began in 1990, the intention was to get an idea of the size and the growth rate of the Internet. At the time we did that by simply counting Address Resource Records (A RRs) in country code Top Level Domains (ccTLDs) in the RIPE NCC service region.
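As a rough illustration of this kind of counting, the sketch below tallies the unique owner names that have at least one A RR in a small piece of zone data. The function name `count_hosts`, the sample records and the simplified one-record-per-line format are assumptions made for this example; it is not the actual Hostcount software.

```python
def count_hosts(zone_text):
    """Count unique owner names that have at least one A record.

    Assumes a simplified format, one record per line:
    "<owner> <ttl> <class> <type> <rdata>", with fully qualified owners.
    """
    hosts = set()
    for line in zone_text.splitlines():
        line = line.split(";", 1)[0].strip()  # drop trailing comments
        if not line:
            continue
        fields = line.split()
        # For an A record the type is the field just before the address.
        if len(fields) >= 3 and fields[-2].upper() == "A":
            hosts.add(fields[0].lower())
    return len(hosts)


# Hypothetical sample data using documentation addresses (192.0.2.0/24).
SAMPLE_ZONE = """\
www.example.nl.   3600 IN A     192.0.2.10
www.example.nl.   3600 IN A     192.0.2.11   ; second address, same host
mail.example.nl.  3600 IN A     192.0.2.20
example.nl.       3600 IN MX    10 mail.example.nl.
ftp.example.nl.   3600 IN CNAME www.example.nl.
"""

print(count_hosts(SAMPLE_ZONE))
```

A name with several A RRs is counted once here, and names that only have MX or CNAME records are ignored. Whether a real count should deduplicate by name or by address is a design choice this sketch does not settle.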

This methodology, however, no longer produces the desired results. For security reasons, many TLD operators block zone transfers, which makes it impossible to count the number of A RRs. Today there are other, better mechanisms to measure the growth of the Internet, and the Hostcount has outlived its purpose. Since 2000, the Hostcount has not been producing reliable results, and we recommend that users treat all results after the year 2000 with great care.

We therefore intend not to run the Hostcount for January 2011 and thus stop the data series in December 2010.

Technical Background Information

In the first Hostcount report, the following countries were included:

- Austria (AT)

- Belgium (BE)

- Denmark (DK)

- Finland (FI)

- France (FR)

- Germany (DE)

- Greece (GR)

- Iceland (IS)

- Ireland (IE)

- Israel (IL)

- Italy (IT)

- Netherlands (NL)

- Norway (NO)

- Portugal (PT)

- Spain (ES)

- Sweden (SE)

- Switzerland (CH)

- United Kingdom (UK)

- Yugoslavia (YU - since deleted)

The first Hostcount was published in November 1990 and counted 31,724 unique hosts. Two years later the count was already more than eight times larger, at 271,795 unique hosts.

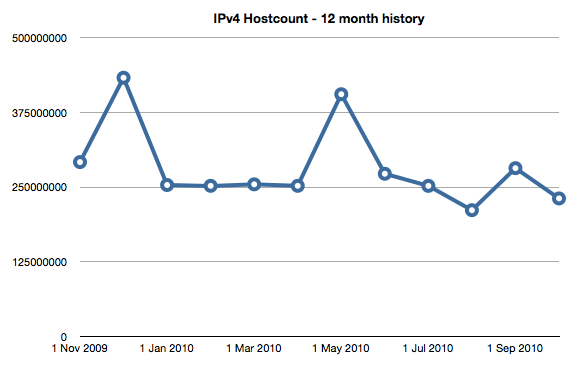

During 2010, the Hostcount measurements ranged between 211M and 430M hosts. At the upper end, this represents growth by a factor of almost 14,000 since the first Hostcount. However, the variance in these numbers also reveals that this way of measuring growth on the Internet does not produce very precise results.
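The growth factors quoted here can be checked with a few lines of arithmetic. The sketch below uses only the figures given in this article; the variable names are chosen for this example.

```python
first_count = 31_724        # first Hostcount, November 1990
count_1992 = 271_795        # two years later
low_2010 = 211_000_000      # lowest measurement during 2010
high_2010 = 430_000_000     # highest measurement during 2010

growth_1992 = count_1992 / first_count   # growth over the first two years
growth_low = low_2010 / first_count      # growth to the 2010 minimum
growth_high = high_2010 / first_count    # growth to the 2010 maximum
spread_2010 = high_2010 / low_2010       # ratio of highest to lowest 2010 value

print(f"1990 to 1992: x{growth_1992:.1f}")
print(f"1990 to 2010: x{growth_low:,.0f} to x{growth_high:,.0f}")
print(f"2010 max/min: x{spread_2010:.2f}")
```

The last ratio is the telling one: within a single year, the highest measurement was roughly twice the lowest.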

Much has changed between 1990 and 2010, and the output and success of the Hostcount have shifted dramatically:

- The methodology of using zone transfers (AXFR) to collect subdomain and host data for ccTLDs started to fail when security-conscious operators began to block access to this data.

- The assumption that every active Internet host has a valid DNS entry is no longer sound - active hosts often lack DNS entries, and DNS entries can exist for inactive hosts.

- The assumption that a single IP address with DNS represents one Internet connected device becomes increasingly untrue as NAT deployment increases.

- Recursively scanning an ever expanding DNS namespace on a monthly basis became more time-consuming and less practical.

By 2007, these problems had such a negative influence on the Hostcount output that we had to put workarounds in place to preserve the integrity of the data:

- Parallelising the software to make it run faster (while simultaneously not overloading the DNS servers we needed to query)

- Distributing the effort to the ccTLD operators - the take-up of this was very low

- Enumerating the entire reverse DNS tree (looking for PTR records in the in-addr.arpa space) as a means to overcome blocked zone transfers
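To give an idea of what enumerating reverse DNS with limited parallelism involves, here is a minimal sketch. It builds the in-addr.arpa name for each address in a prefix and counts how many return a PTR target, using a small worker pool as a simple way of not overloading name servers. The function names, the `lookup` parameter and the `FAKE_PTR` table are hypothetical; the table stands in for real DNS queries so that the example runs without network access.

```python
import ipaddress
from concurrent.futures import ThreadPoolExecutor


def reverse_names(prefix):
    """Yield the in-addr.arpa name for every address in an IPv4 prefix."""
    for addr in ipaddress.ip_network(prefix):
        yield addr.reverse_pointer


def count_ptr_hosts(prefix, lookup, max_workers=4):
    """Count addresses in `prefix` for which `lookup(name)` returns a target.

    `lookup` is a caller-supplied function (an assumption of this sketch).
    A small `max_workers` caps how many lookups run at the same time.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = pool.map(lookup, reverse_names(prefix))
        return sum(1 for target in results if target is not None)


# Stand-in for real PTR queries: a fixed table of reverse entries.
FAKE_PTR = {
    "1.2.0.192.in-addr.arpa": "www.example.nl.",
    "2.2.0.192.in-addr.arpa": "mail.example.nl.",
}

print(count_ptr_hosts("192.0.2.0/29", FAKE_PTR.get))
```

In a real scan, `lookup` would issue a PTR query and return `None` when no record exists. Capping the pool size limits concurrency but not the query rate, so a production scanner would probably also need explicit rate limiting per name server.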

Initially, it appeared that these changes had substantially improved the Hostcount service - the PTR scanning alone instantly improved our visibility of hosts from 20M to over 120M - but over time, the issues of scalability and reliability returned.

In 2010, we conducted a strategic review of the long-term feasibility of the Hostcount service. We realised that the figures were too variable to be trusted, as can be seen in the graph below.

In general, we observed that:

- Processing the entire DNS tree for our service region is time-consuming and error-prone.

- Measuring the 'size' of the modern Internet using data from the DNS is unreliable and unscalable.

- Counting hosts by processing DNS data is unreliable on the modern-day Internet, and will not scale with IPv6.
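The IPv6 point can be made concrete with a back-of-the-envelope calculation. The sketch below compares the number of reverse DNS names in the whole IPv4 space with those in a single IPv6 /64 subnet. The query rate of one million lookups per second is an assumption, chosen to be deliberately optimistic.

```python
import ipaddress

ipv4_space = ipaddress.ip_network("0.0.0.0/0").num_addresses
ipv6_subnet = ipaddress.ip_network("2001:db8::/64").num_addresses

QUERIES_PER_SECOND = 1_000_000        # assumed, deliberately optimistic
SECONDS_PER_YEAR = 365 * 24 * 3600

# Time to try every possible reverse name once at the assumed rate.
ipv4_hours = ipv4_space / QUERIES_PER_SECOND / 3600
ipv6_years = ipv6_subnet / QUERIES_PER_SECOND / SECONDS_PER_YEAR

print(f"All of IPv4:  {ipv4_space:,} names, about {ipv4_hours:.1f} hours")
print(f"One IPv6 /64: {ipv6_subnet:,} names, about {ipv6_years:,.0f} years")
```

Even at this rate, exhaustively walking the reverse names of a single /64 would take hundreds of thousands of years, so brute-force PTR enumeration is not an option for IPv6.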

After this analysis, it was obvious that the results of the Hostcount are not precise enough to be valuable.

For historic reference, the archived output of the Hostcount measurements will be retained within the Hostcount pages on the RIPE NCC web site.
