In this post, we at NLnet Labs look at how eXpress Data Path (XDP) can augment existing DNS software. We share our experiences of implementing Response Rate Limiting in XDP.

Augmenting DNS

In our first post on XDP, we explored a use case where we used this new technology at NLnet Labs to respond to DNS queries without touching the network stack of the operating system (OS).

Without even allocating a socket buffer, our XDP program xdp-dns-says-no (GitHub) responded to all incoming queries with response code REFUSED, while making sure all the details such as the checksum were correctly updated accordingly. This (perhaps rather silly) use case not only showcased the power of using XDP 'on its own' but the possibility of even greater applications.

Doing anything with DNS without having to traverse the OS network stack and without touching any software in userspace is very exciting. However, we learned from the previous post that XDP has its limitations in terms of flexibility and resources (all with good reasons).

Moreover, there are many fine nameservers, resolvers and other DNS solutions developed as userspace software, based on years and years of both operational experience as well as RFC detangling. Those efforts should not be taken lightly.

Taking that into account, we think XDP should not replace userspace software per se. Instead, it should augment it. Let XDP do some heavy lifting. Return the easy responses early on, but leverage the smartness of existing nameservers and resolvers for more complex tasks. Perhaps the most elegant part of this is the fact that such XDP programs could (or should?) be userspace software agnostic. As long as it makes sure valid DNS queries are being pushed up the network stack to the software, it is compatible with whatever is running up there!

Before we dive into actual code we need to address two considerations.

First, we need to acknowledge that applying XDP in such an augmenting way can only be done (trivially) for unencrypted, stateless traffic. In our case, plain old DNS over UDP. Even though we can keep some state (as we will see later in this post), we'll leave the implementation of DNS over TLS (DoT) in XDP as an exercise to the reader.

Second, perhaps an even more fundamental question is are we violating layers?

Layers in/of DNS and how (not) to violate them

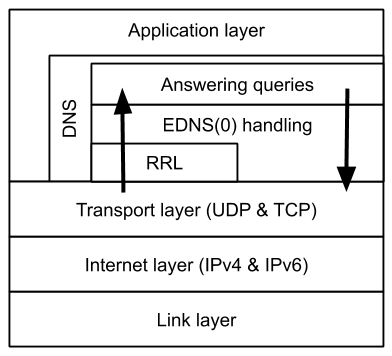

DNS is generally considered to sit at the application layer in the Internet Protocol suite. It delivers a service (over UDP and TCP at the transport layer) of looking up names for other applications and returning the IP addresses which those applications can then use to connect to, through the transport layer.

The DNS protocol itself, however, also has an internal layered structure. More specifically, we can distinguish the upper actual application layer of requesting and/or servicing DNS names, and a layer below, involved with the communication aspects of a pair of hosts in a DNS resolution process. This lower layer is, for example, involved with signalling payload size and padding messages on encrypted channels (EDNS(0)), or mitigating denial-of-service attacks.

Figure 1 (left) — Layers that make up the Internet Protocol suite. Note how the DNS layer has internal layers too.

| Note that this lower layer is completely independent of the DNS resolution process: one could argue that this part of DNS actually sits at the Session layer in the OSI model. Current DNS software provides the functionality at this layer, but in theory, this could be implemented independently, for example with XDP/BPF. This can be beneficial performance-wise, but also from the system administration perspective, it can be convenient for example to have denial-of-service attack management distinct from DNS content servicing management. |

So, are we violating layers by augmenting DNS software with XDP programs? Perhaps, but such violations are already there in the userspace software anyway. If anything, we are pulling functionality from there and putting it back in, well, another layer again. Even though it might still not fit the OSI model perfectly, we do not think it will make things worse per se.

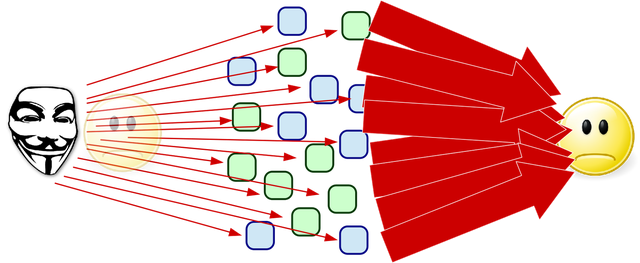

Figure 2 — Amplified denial-of-service type of attacks

One low-hanging fruit lower-layer DNS functionality that could benefit from implementing XDP (both performance and sysadmin wise), is Response Rate Limiting (RRL).

RRL mitigates against amplified denial-of-service type attacks, in which the attacker sends requests to a DNS server soliciting large answers spoofing the request’s source IP address to be that of the victim so that the victim will be flooded with the large answers. It does this by measuring the rate of the requests per source IP address (in Queries per Second (QPS)) and dealing with the requests differently once a certain threshold is surpassed. In that case, a percentage could be simply dropped, but alternatively, to maintain serviceability of the DNS server, the request can be answered with an empty answer (no amplification) but the TC header bit set. A real resolver would, upon receiving such a response, requery over TCP and get an answer, but in the described attack scenario the packet will be received by the victim, albeit without amplification nullifying the reason for the attacker to use the DNS server as an amplifier.

RRL in XDP

In the examples below, we will build an XDP based RRL module step-by-step, introducing new XDP/BPF concepts along the way. Remember that we are augmenting an existing DNS service, so these examples assume a running nameserver software on the machine.

Like in our previous blog post, the code snippets in this part are not full programs (for those, see https://github.com/NLnetLabs/XDPeriments.git ).

Round 1: RRL per IP address

BPF maps

Up until this point, we have only seen examples of XDP programs working without any form of keeping state, and without any interaction between the kernel and userspace. BPF provides a mechanism to do both those things, called maps.

In short, maps are data structures defined at compile-time, allowing to keep state in-between packets and supporting read/write operations from userspace. So, for example, we can keep track of statistics ('how many packets did we observe?') by updating the map from the XDP program and reading from userspace, but also configure the running XDP program ('set my_threshold to X') by writing a value from the userspace into the map which is read and used by the XDP program.

Let's have a look at a map definition we will be using later on:

We see a map definition consists of a type, a key_size and value_size, and its max_entries. In this case, we’ve stored a bucket struct (the value) per IP address (the key) in a hashmap (the type). The types are specific to BPF, but come in a wide variety:

- Our hashmap type, which is unique per CPU, allows us to modify the map without interference

- BPF_MAP_TYPE_HASH type, which is shared amongst all CPUs

- BPF_MAP_TYPE_ARRAY type (yes, it's an array)

- BPF_MAP_TYPE_LRU_HASH, which is a hash with Least Recently Used replacement policy)

- A _QUEUE, a _STACK, an _ARRAY_OF_MAPS, and a _HASH_OF_MAPS, among many others (To quote the man page, "See /usr/include/linux/bpf.h for the full list.")

Small side note: these map types are supported by the BPF implementation of the Linux kernel. If you are looking into offloading XDP to hardware (hopefully the topic of a future post in this series), not all map types might be available.

Manipulating the maps at runtime is done via specific helper functions, most with pretty self-describing names: bpf_map_lookup_elem(), bpf_map_update_elem() and bpf_map_delete_elem() will enable you to do exactly what it says on the tin.

Now that we have the map introduction out of the way, let’s look at some code! In this post, we’ll focus on an XDP program that does RRL on incoming DNS queries per source IP address. Below we see a snippet that manages the RRL process such that the incoming packet can be processed correctly.

After parsing the IP header (not part of this snippet) the udp_dns_reply_v4 function is called, which takes a cursor (keeping track of our position within the incoming packet) and the source IP address that will be used as a key in the hash map. When the packet is verified to be a DNS query, by checking the protocol and port number, we can do a lookup in the map to see if this source IP address has been observed before. If this is the case, we call do_rate_limit() which we detail below, otherwise, we create a new bucket and put it in the map.

With the bucket for this specific source IP address at hand, we first check whether its time frame has not expired. The time frame is checked per packet by comparing the time of arrival of the packet (current time) and the start of the time frame. When the time frame runs out, i.e. the delta between the two timestamps is greater than FRAME_SIZE, both its start_time and the query count n_packets are reset. With FRAME_SIZE set to 1 second, this basically means everything with a rate lower than 1 QPS is not considered for RRL.

An example scenario: configuring our XDP program to have a TIME_FRAME of 1 second and a THRESHOLD of 1000 packets on a single CPU system, we send queries to it at a rate of 1500 QPS. Then, every query that is received after the thousandth packet is rate limited, while all preceding queries are passed onto the network stack. This process of passing the first 1000 queries and returning the next 500 queries with (TC) bit set, will repeat every second as the time frame resets. Beware that our buckets are unique per CPU though, so for accurate rates you need to divide the number given to THRESHOLD by the number of CPUs on the system.

If the number of observed packets is lower than the threshold, we return 1 — that will result in the packet being XDP_PASS'ed on to the network stack towards the running nameserver software. But if the threshold is exceeded, we want to relieve the nameserver of handling requests from this 'enthusiastic' sender and do the heavy lifting in XDP: the query is converted to a response with the truncated (TC) bit set. Swap the addresses, update the checksum, and out it goes again, not a socket buffer in sight.

Just to show our XDP-based RRL actually kicks in, we configure a very low threshold, send some queries (`watch -n 0.1 "dig …"`), and monitor the responses using tshark:

$ tshark -T fields -e ip.src -e ip.dst -e dns.flags.truncated \

'ip && udp && src host bpf.nlnetlabs.net'

Capturing on 'eno1'

3.120.68.146 192.168.10.21 0

3.120.68.146 192.168.10.21 0

3.120.68.146 192.168.10.21 0

3.120.68.146 192.168.10.21 0

3.120.68.146 192.168.10.21 1

3.120.68.146 192.168.10.21 1

After the first couple of queries being answered perfectly fine, the RRL kicks in and the truncated flag is set. Moreover, initial measurements have shown the XDP version to be using less CPU capacity compared to an NSD instance applying RRL in userspace. Amazing! But wait, there's more!

Round 2: The regular customer is king

From talking with DNS operators at conferences, we know that RRL works for them, but that they would like to exclude the resolvers with which they have a well established long-standing relationship.

What they’d like to have is a dynamically editable Very Important Prefixes (V.I.P.) list of networks that will always be exempt from any RRL so that the service to these networks is guaranteed while other networks might see rate limiting applied to them. Let's see how we can implement that functionality, by introducing another map.

There is a specific map type very suitable for matching prefixes: the Longest Prefix Match Trie (BPF_MAP_TYPE_LPM_TRIE). The definition of the maps in the XDP program look like this:

Strictly speaking, when using the map only for matching IP addresses, we could theoretically have done with a .value_size of 0, but this is not allowed for this map type. We could have also done with a .value_size of 1 and only store an unused uint8_t value. Instead, we have chosen to store a uint64_t to track the number of times the prefix matched the source IP address of incoming queries, so this value can be used for statistics.

Keys in a LPM_TRIE map have a special format which is defined in /usr/include/linux/bpf.h :

The .key_size’s in the map declarations (shown above) have to be set to the sum of sizeof(struct bpf_lpm_trie_key) (4) and the number of bytes needed to match the IP address (i.e. 4 for IPv4 and 8 for IPv6 because we deemed it unnecessary to match anything more specific than a /64 for IPv6 addresses).

When adding data to the map, prefixlen has to be set to the length of the prefix (i.e. the number behind the slash) directly followed by the bytes making up the prefix. When searching for a match for a specific IP address in the map, prefixlen has to be set to the maximum number of bits that a prefix in the map can match; i.e. 32 for IPv4 and 64 for the first 64 bits of an IPv6 prefix.

Below the snippets will return 1; // XDP_PASS when the IP address matches a prefix in udp_dns_reply_v4() and udp_dns_reply_v6().

Note that the complete IPv6 address is copied, which is not necessary. An optimization exercise left for the reader is to copy just the first 64 bits of the IPv6 address.

Also, note that the map type was not ‘per CPU’ and thus multiple CPUs could access the counter simultaneously. The counter needs to be increased using the atomic operation lock_xadd(count, 1) (which we have copied from basic example 3 of the XDP Hands-On Tutorial).

Pinning the VIP lists

To provision the prefixes that are exempt from RRL, before loading the XDP program, we can provide them as so-called pinned maps.

Pinned maps can be shared between multiple BPF programs and are kept alive after the XDP program gets unloaded (so they can be reused once the program is loaded again). In order to pin a map to the BPF File System, we first need to mount it:

# mount -t bpf /sys/fs/bpf

Now, we can create the maps for the IPv4 and IPv6 prefixes using the following values:

# bpftool map create /sys/fs/bpf/rrl_exclude_v4_prefixes flags 1 \

name exclude_v4_prefixes type lpm_trie key 8 value 8 entries 10000

# bpftool map create /sys/fs/bpf/rrl_exclude_v6_prefixes flags 1 \

name exclude_v6_prefixes type lpm_trie key 12 value 8 entries 10000

The values given after key, value and entries have to match .key_size, .value_size and .max_entries attributes of the map declarations in the XDP program precisely.

The name parameter is obligatory when creating a map, but does not necessarily need to match the declaration.

Also flags 1 is obligatory for this map type. The 1 stands for BPF_F_NO_PREALLOC defined in /usr/include/linux.bpf and means that keys representing prefixes are dynamically allocated when added to the map (instead of having all 10000 entries preallocated).

Now load the XDP program with the bpftool to associate the pinned maps with the declarations in the XDP program:

# bpftool prog load xdp_rrl_VIP_list.o /sys/fs/bpf/rrl_VIP_list type xdp \

map name exclude_v4_prefixes \

pinned /sys/fs/bpf/rrl_exclude_v4_prefixes \

map name exclude_v6_prefixes \

pinned /sys/fs/bpf/rrl_exclude_v6_prefixes

Here the name parameters do have to match the names given to the map declarations in the XDP program, as this is how they will be associated with the pinned maps on the BPF filesystem.

Note that the XDP program is now also pinned to the BPF filesystem, and not associated with a network device.

To finally associate it with for example the eth0 device, we can use the ip tool again:

# ip --force link set dev lo xdpgeneric \

pinned /sys/fs/bpf/rrl_VIP_list

To populate the map we need to pass a 32bit value for the prefix length, even though we likely only want to express a decimal '24' or '64' for our program. The same goes for the initial value, which in our example is typed as 64bit integer. Nevertheless, we will need to pass the entire value for both the prefix length and the initial value, both in little-endian, so we end up typing a bunch of zeroes:

# bpftool map update pinned /sys/fs/bpf/rrl_exclude_v4_prefixes \

key 24 0 0 0 80 114 156 0 \

value 0 0 0 0 0 0 0 0

We can then find the specific map from the list of maps with “bpftool map”. Note that it still shows zero hits in the values.

# bpftool map dump pinned /sys/fs/bpf/rrl_exclude_v4_prefixes

key: 18 00 00 00 50 72 9c 62 value: 00 00 00 00 00 00 00 00

Found 1 element

When we send a query

# dig -4 foo.nl @bpf.nlnetlabs.net

And then find the value to be increased

# bpftool map dump pinned /sys/fs/bpf/rrl_exclude_v4_prefixes

key: 18 00 00 00 50 72 9c 62 value: 01 00 00 00 00 00 00 00

Found 1 element

It works! 🎉

The full code for all of these is again available in our GitHub repo.

Conclusions and musings

The maps available in BPF provide us with mechanisms to keep state, allowing us to do simple but effective things with little effort.

Specifically, when doing anything networking we have seen how maps can provide us with ways of configuring a running XDP program, and query it for runtime statistics. Further, it shows how powerful the concept of BPF is: kernel-level performance with significant flexibility, but without the need for reboots.

Choosing the right map type might not always be trivial. We have seen the non-locking PERCPU_ variant of the hashmap type, while there is no such variant for the LPM_TRIE. Perhaps PERCPU_HASH would therefore be better in terms of performance, though LPM_TRIE offers a built-in way to match on prefixes. And perhaps one can be offloaded to hardware while the other can not. We hope to gain more insights (and some numbers) by doing performance measurements in the near future.

Next time

For our next post, we want to explore another augmentation possibility: DNS Cookies as described in RFC7873 and draft-ietf-dnsop-server-cookies. It will require exploring other mechanisms related to the BPF/XDP ecosystem, namely the Traffic Control (TC) layer, to be able to act upon outgoing packets.

Furthermore, it will require more complex stateful logic, as we need to relate incoming queries and outgoing answers. Can this be done in a fully transparent way without adapting the actual resolver? Stay tuned to find out and, in the meantime, share your experience with using XDP and BPF below.

Comments 0

The comments section is closed for articles published more than a year ago. If you'd like to inform us of any issues, please contact us.