As part of our research into post-quantum cryptography (PQC) for DNSSEC, we test PQC as a drop-in replacement for classical algorithms. We explore a transition where both run simultaneously, analysing how resolvers validate records, edge cases, and the feasibility and impact of such a period.

To that end, we compare what should theoretically happen according to the RFCs with what actually happens in practice. We first briefly introduce DNSSEC, then discuss how DNSSEC signatures are validated in various cases, and what the impact of new algorithms could be.

DNSSEC in a nutshell

In DNS, a zone such as example.nl can contain multiple resource records (RRs) of various types, for example an AAAA record pointing to ::1 and an MX record pointing to mail.example.nl. You can also have multiple records of the same type. RRs with the same name and type are grouped into RRsets. When DNSSEC is enabled for a zone, the RRsets are digitally signed.

In order to sign RRsets, we need cryptographic keys. In DNSSEC, the public keys are stored in DNSKEY records, and the signatures in RRSIG records. Zones often use the split key configuration, where a zone signing key (ZSK) is used to sign the RRsets within a zone, and a key signing key (KSK) is used to sign the ZSK. The KSK is in turn vouched for by the parent zone, which publishes and signs a DS record containing a hash of the KSK, creating a chain of trust from the root zone. It is also possible for a zone to use a combined signing key (CSK) that can be used for both purposes. When a validating resolver is queried for a record within a DNSSEC-signed zone, it will use the available RRsets, DNSKEYs and RRSIGs from the root zone to the queried zone and every zone in between to validate the response.

Opportunistic validation for new algorithms

When validating an RRSIG, a validating resolver needs to find the corresponding DNSKEY in a list of available keys. Instead of using a unique identifier field, DNSSEC uses a simple 16-bit checksum of the key itself (the key tag), combined with some attributes of the key (algorithm, owner, signer, etc.) to identify DNSKEYs.
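The checksum mentioned above can be sketched in a few lines. The function below follows the key tag calculation described in RFC 4034, Appendix B; the two example RDATA values are made up for illustration and are not real keys.

```python
def key_tag(rdata: bytes) -> int:
    """Key tag over the DNSKEY RDATA (flags, protocol, algorithm, public key).

    Follows RFC 4034, Appendix B. Not applicable to the legacy algorithm 1.
    """
    acc = 0
    for i, byte in enumerate(rdata):
        # Even-indexed bytes are the high octet of a 16-bit word.
        acc += byte << 8 if i % 2 == 0 else byte
    acc += (acc >> 16) & 0xFFFF
    return acc & 0xFFFF


# Hypothetical RDATA: flags 257, protocol 3, algorithm 8, then toy "key" bytes.
header = bytes([0x01, 0x01, 0x03, 0x08])
toy_key_a = header + bytes([0x00, 0x02, 0x00, 0x01])
toy_key_b = header + bytes([0x00, 0x01, 0x00, 0x02])

# Different key material, same 16-bit tag: the checksum is not unique.
print(key_tag(toy_key_a), key_tag(toy_key_b))
```

Because the tag is only a 16-bit sum, distinct keys can easily share it, which is why it cannot serve as a unique identifier.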

In some situations, the DNSSEC validation process can be flexible. For example, it is possible for two distinct keys to have the same identifying attributes and the same checksum; a situation known as a 'key collision'. A validating resolver cannot further distinguish between such keys, and will have to try all colliding keys until the correct key is found. When a colliding key does not work, it does not automatically invalidate the signature. The signature is only invalidated when none of the keys work. Hence, the DNSSEC validation process uses a flexible key selection policy for similar keys.
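A minimal sketch of that key selection policy is shown below. The `Key` and `Sig` classes and the `toy_verify` function are hypothetical stand-ins for real key material and cryptographic verification; only the selection logic is the point.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Key:
    owner: str
    algorithm: int
    tag: int
    secret: str  # stand-in for real public key material


@dataclass(frozen=True)
class Sig:
    signer: str
    algorithm: int
    tag: int
    made_with: str  # stand-in for real signature bytes


def toy_verify(key: Key, sig: Sig) -> bool:
    # Placeholder for cryptographic verification.
    return key.secret == sig.made_with


def validate_signature(sig: Sig, keys: list, verify=toy_verify) -> bool:
    # Every key with matching attributes and key tag is a candidate.
    candidates = [
        k for k in keys
        if (k.owner, k.algorithm, k.tag) == (sig.signer, sig.algorithm, sig.tag)
    ]
    # A failing candidate does not invalidate the signature;
    # it is only invalid when no candidate verifies it.
    return any(verify(k, sig) for k in candidates)
```

With two colliding keys in `keys`, a signature made with the second key still validates, even though the attempt with the first key fails.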

It is possible to simultaneously sign a zone with multiple keys based on different algorithms. In such cases, every record must be signed with at least one key for each algorithm. In 2013, the DNSSEC validation process was updated to explicitly instruct validating resolvers to ignore unsupported algorithms. This relaxes strict algorithm validation requirements, in favour of validating new algorithms opportunistically. This is fantastic news for a potential transition period, since we need resolvers that do not understand PQC algorithms (yet) to simply ignore them. However, we want to know whether resolvers around the world actually follow these updated validation standards, and adequately ignore unsupported algorithms in practice.

Before the RFC from 2013, if there was no working key for one algorithm, the response was considered invalid (bogus), even if there was a working key for another algorithm. That validation requirement for zones signed by multiple algorithms is rather strict, in stark contrast to the flexible key selection policy.

That led to the following problem: if there were a transition period where zones were signed simultaneously with both old and new algorithms, resolvers that did not yet understand the new algorithm would automatically consider the response bogus, even if they could validate the response with an older algorithm. That is not great for a transition period, where we would rather have a more flexible way of dealing with unsupported algorithms.
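The difference between the two behaviours can be illustrated with a simplified model. This is a sketch under stated assumptions, not resolver code: the set of supported algorithms, the function names and the reduction of a zone to one verification result per algorithm are all ours, and treating a zone with only unsupported algorithms as insecure is a simplification.

```python
SUPPORTED = {8, 13}  # assumption: this resolver only understands RSA and ECDSA


def strict_policy(sig_results: dict) -> str:
    """Old behaviour as described above: every algorithm must yield a working key."""
    for algorithm, ok in sig_results.items():
        if algorithm not in SUPPORTED or not ok:
            return "bogus"
    return "secure"


def relaxed_policy(sig_results: dict) -> str:
    """Updated behaviour: unsupported algorithms are ignored."""
    known = {alg: ok for alg, ok in sig_results.items() if alg in SUPPORTED}
    if not known:
        return "insecure"  # nothing the resolver can check
    return "secure" if any(known.values()) else "bogus"


# A zone signed with ECDSA (13) and an algorithm unknown to the resolver (251).
zone = {13: True, 251: True}
print(strict_policy(zone), relaxed_policy(zone))
```

Under the strict model the dual-signed zone is rejected by a resolver that does not know algorithm 251, whereas the relaxed model validates it through algorithm 13.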

Historically, it would be up to ‘local policy’ to deviate from these standardised validation requirements. Although that allowed resolvers to relax strict algorithm validation requirements, it also allowed lots of diverse, individual validation requirements.

To fix that drawback, DNSSEC validation was updated to steer away from local policy decisions, and towards more uniform validation requirements.

Intentionally invalidated signatures

We also want to analyse the effect of signature validation failures on validation decisions across resolvers, when multiple known algorithms are used simultaneously. Specifically, we want to know how resolvers handle specific combinations of valid and invalid signatures. To that end, we use three different key configurations for intentional signature invalidations.

The first key configuration, ‘split’, uses one KSK and one ZSK. When a zone needs to be invalidated, we only invalidate the ZSK’s signatures, and the KSK’s signatures are left intact. The second key configuration, ‘combined’, uses one CSK. When a zone needs to be invalidated, we invalidate all the CSK’s signatures.

The third key configuration, ‘splitmany’, uses one KSK and four ZSKs. Here, three of the four ZSKs are always intentionally invalidated. When a zone needs to be completely invalidated, we corrupt the remaining ZSK’s signatures as well.

Test setup

In order to test how well resolvers would handle the transition period in our scenario, we have set up a name server that is authoritative for many zones with specific combinations of algorithms, key configurations and signature invalidations. This name server setup is similar to the SIDN Labs DNS workbench, and might be added to the workbench in the future.

Our work follows and extends deSEC’s PQC DNSSEC field study, by analysing the validation of zones signed with multiple known and unknown algorithms simultaneously. In our setup, we use the RIPE Atlas network to send 64 different DNS queries from 10,000 probes, where we request the same list of probes for every measurement. RIPE Atlas cannot guarantee that every probe participates in all 64 measurements, so for some measurements we have fewer participating probes.

Most participating probes are configured to use multiple resolvers. In each measurement, we instruct the probes to query all their configured resolvers for a specific domain. We set the DO flag for each query, which asks the DNS resolver to respond with DNSSEC-related resource records. Since RIPE Atlas does not support a retry over TCP when responses are truncated over UDP, we simulate this behaviour by querying over UDP and TCP separately.
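To make the DO flag concrete, the sketch below builds the wire format of such a query by hand. It only illustrates what goes over the wire and is not how RIPE Atlas is configured; the function names, the query ID, the A record type and the advertised UDP size of 1,232 bytes are assumptions.

```python
import struct


def build_query(name: str, qtype: int = 1, query_id: int = 0x1234,
                udp_size: int = 1232) -> bytes:
    """DNS query with an EDNS0 OPT record that has the DO bit set."""
    # Header: ID, flags (RD set), 1 question, 0 answers, 0 authority, 1 additional.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 1)
    labels = name.rstrip(".").split(".")
    qname = b"".join(bytes([len(l)]) + l.encode("ascii") for l in labels) + b"\x00"
    question = qname + struct.pack("!HH", qtype, 1)  # class IN
    # OPT pseudo-record: root name, type 41, class = UDP payload size,
    # TTL = extended RCODE (0), version (0), flags (DO = 0x8000), empty RDATA.
    opt = b"\x00" + struct.pack("!HHIH", 41, udp_size, 0x00008000, 0)
    return header + question + opt


def tcp_frame(message: bytes) -> bytes:
    """DNS over TCP prefixes each message with its length in two bytes."""
    return struct.pack("!H", len(message)) + message


query = build_query("example.nl")
print(len(query), len(tcp_frame(query)))
```

The same message can be sent as a UDP datagram or, with the two-byte length prefix, over a TCP connection, which mirrors the separate UDP and TCP measurements described above.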

We use three algorithms: the classical algorithms eight (RSA) and thirteen (ECDSA) that MUST be implemented by DNSSEC validators, and the post-quantum algorithm Falcon-512 with fixed-size padded signatures. Since there is no standardised algorithm number for Falcon-512 (yet), and we are not using it as a private algorithm, we pick algorithm number 251. This is a reserved algorithm number that currently cannot be assigned to any new algorithm. By using this number, we avoid accidentally claiming an algorithm number by precedent without proper standardisation.

To distinguish the measurements, we use a structure of domain names as follows: <algorithm config>.<key config>.pqcdnssec.sidnlabs.nl. The algorithm configuration denotes the algorithms being used, and whether their signatures are intentionally invalidated. For example, the domain name 8-valid-13-invalid.split.pqcdnssec.sidnlabs.nl indicates that the zone uses the ‘split’ key configuration and is signed by both algorithm eight and thirteen, where all signatures of thirteen are intentionally invalidated.
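As an illustration, the naming scheme can be decoded with a small helper. The function name and return shape are ours, and the sketch only handles names of the exact form shown in the example above.

```python
SUFFIX = "pqcdnssec.sidnlabs.nl"


def parse_measurement_name(name: str):
    """Split '<algorithm config>.<key config>.<suffix>' into its parts.

    Returns (key_config, {algorithm_number: signatures_are_valid}).
    """
    name = name.rstrip(".").lower()
    if not name.endswith("." + SUFFIX):
        raise ValueError(f"not a measurement domain: {name}")
    prefix = name[: -len(SUFFIX) - 1]
    algorithm_config, key_config = prefix.split(".")
    parts = algorithm_config.split("-")
    if len(parts) % 2 != 0:
        raise ValueError(f"unexpected algorithm config: {algorithm_config}")
    # Parts alternate between an algorithm number and 'valid' or 'invalid'.
    algorithms = {
        int(parts[i]): parts[i + 1] == "valid"
        for i in range(0, len(parts), 2)
    }
    return key_config, algorithms


print(parse_measurement_name("8-valid-13-invalid.split.pqcdnssec.sidnlabs.nl"))
```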

Most probes are configured to query multiple resolvers, so we expect multiple responses per probe. We also expect that many probes are configured to use certain popular resolvers, like Cloudflare’s or Google’s, which means that some resolvers will be over-represented in our data. Therefore, we need to filter our responses by unique resolvers, instead of by unique probes. We distinguish unique resolvers by their public IP addresses or, in the case of local resolvers, their private IP addresses combined with their probe IDs. For each unique resolver, we expect to see a different number of possibly varying responses. We select the most frequent response, pruning some timeout or load-balancing errors, to get one response for each unique resolver.
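The deduplication step could look roughly like the sketch below. The data layout (tuples of probe ID, resolver IP and outcome) and the use of `is_global` to tell public from local resolvers are assumptions made for illustration, and the pruning of timeout or load-balancing errors is left out.

```python
from collections import Counter, defaultdict
from ipaddress import ip_address


def resolver_id(probe_id: int, resolver_ip: str):
    """Public resolvers are identified by IP, local ones by (probe, IP)."""
    ip = ip_address(resolver_ip)
    if ip.is_global:
        return (None, str(ip))
    return (probe_id, str(ip))


def most_frequent_response(observations):
    """observations: iterable of (probe_id, resolver_ip, outcome) tuples."""
    per_resolver = defaultdict(Counter)
    for probe_id, resolver_ip, outcome in observations:
        per_resolver[resolver_id(probe_id, resolver_ip)][outcome] += 1
    # Keep one outcome per unique resolver: the one seen most often.
    return {rid: counts.most_common(1)[0][0]
            for rid, counts in per_resolver.items()}


observations = [
    (1, "8.8.8.8", "NOERROR"),
    (2, "8.8.8.8", "NOERROR"),
    (3, "8.8.8.8", "TIMEOUT"),
    (1, "192.168.1.1", "SERVFAIL"),
    (2, "192.168.1.1", "NOERROR"),
]
print(most_frequent_response(observations))
```

In this toy input, the three probes using 8.8.8.8 collapse into one resolver, while the two probes using 192.168.1.1 are counted as two different local resolvers.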

By looking at the flags in the responses, we can filter out responses that contain obvious mistakes. Since we are only interested in resolvers, not name servers, we filter out responses that don’t have Recursion Available (RA) set. Similarly, we filter out responses that set the Authoritative Answer (AA) flag, since those responses falsely claim to be authoritative for our testing zones. When responses contain the Checking Disabled (CD) flag, or don’t contain the Recursion Desired (RD) flag, it indicates that the resolver did not handle the query as requested.
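A sketch of this flag-based filter is given below. The bit masks are the standard positions in the 16-bit DNS header flags field; dropping responses with CD set or RD cleared is our reading of the paragraph above, and the function name is hypothetical.

```python
# Bit masks within the 16-bit flags field of the DNS header.
QR, AA, TC, RD, RA, AD, CD = 0x8000, 0x0400, 0x0200, 0x0100, 0x0080, 0x0020, 0x0010


def keep_response(flags: int) -> bool:
    """Return True if the response plausibly comes from a well-behaved resolver."""
    if not flags & RA:
        return False  # not a recursive resolver
    if flags & AA:
        return False  # falsely claims to be authoritative
    if flags & CD or not flags & RD:
        return False  # query was not handled as requested
    return True


print(keep_response(0x8180))  # QR, RD and RA set
print(keep_response(0x8580))  # AA set as well
```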

We additionally filter responses based on how the resolver answered for a typical DNSSEC-signed zone. Resolvers that did not answer correctly for 13-valid.split over UDP are ignored, since the implementation of algorithm 13 (ECDSA) is mandatory in DNSSEC validators, and the split key configuration is commonly used. That allows us to measure and compare Falcon against the baseline of ECDSA.

Comparing local and public resolvers

We got answers from 11,224 unique resolvers, consisting of 34.76% public resolvers and 65.24% resolvers located on local networks. From 95.10% of the unique public resolvers, we received an answer over TCP. That does not automatically mean that 4.90% did not support TCP, since RIPE Atlas cannot guarantee that every selected probe contributes to every measurement.

For the unique local resolvers, however, we only received an answer over TCP from 0.237%. That suggests that, save some exceptions, TCP might be widely unsupported on local resolvers.

Digging further, we can see the error message “TU_BAD_ADDR: true” quite often, and only on queries over TCP to local resolvers. It is likely that this is the result of a firewall or policy setting specific to RIPE Atlas probes, which has been previously reported on the RIPE Atlas mailing list. Therefore, the results for local resolvers might not accurately reflect real-world behaviour. We filter out those very few successful responses from local resolvers over TCP, and leave the support of DNS over TCP by local resolvers to future research for now.

Appropriate responses

In evaluating whether a response is an ‘appropriate response’, we distinguish between validating and non-validating resolvers. Where non-validating resolvers are concerned, we consider a response to be appropriate if it contains the correct IP address, has the response code NOERROR, and does not have the AD flag set.

Where validating resolvers are concerned, a distinction is made on the basis of whether we expect the answer to be validated. For queries that are expected to be invalidated (bogus), we expect a validating resolver to respond with a SERVFAIL response, without any data. For queries that are expected to be validated, we expect a validating resolver to respond with the correct IP, the AD bit set, and the correct number of signatures. We also allow validating resolvers to answer as if they are non-validating resolvers.
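These criteria can be summarised in a short decision function. The `Response` class and its field names are hypothetical, and the sketch assumes that the signature count is only checked for validated answers.

```python
from dataclasses import dataclass


@dataclass
class Response:
    rcode: str          # e.g. "NOERROR" or "SERVFAIL"
    addresses: tuple    # IP addresses in the answer section
    ad: bool            # AD flag
    rrsig_count: int    # number of RRSIGs in the answer


def is_appropriate(resp: Response, expected_ip: str,
                   expect_bogus: bool, expected_rrsigs: int) -> bool:
    has_answer = resp.rcode == "NOERROR" and expected_ip in resp.addresses
    # Non-validating behaviour is accepted from any resolver.
    if has_answer and not resp.ad:
        return True
    if expect_bogus:
        # A validating resolver should refuse to return data.
        return resp.rcode == "SERVFAIL" and not resp.addresses
    # A validating resolver should authenticate the answer.
    return has_answer and resp.ad and resp.rrsig_count == expected_rrsigs
```

For example, a SERVFAIL without data is appropriate for a zone that is expected to be bogus, but not for a zone with valid signatures.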

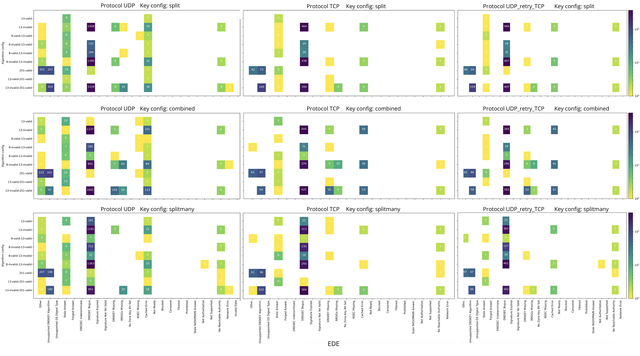

Figure 1 shows for each protocol, algorithm and key configuration the percentage of unique resolvers that gave an appropriate response.

Figure 1 is divided into a grid of 3x3 graphs. Each row of subgraphs shows a key configuration (split, combined and splitmany), and each column shows measurements performed via a certain protocol (UDP, TCP or UDP with retry over TCP). Each subgraph then shows, for each measurement, the percentage of unique resolvers that gave an appropriate response.

Looking at the results for the split and combined key configurations, we can see that, for nearly all queries, most resolvers returned an appropriate response. However, there are some clear outliers.

Order seems to matter

We can see that over UDP and TCP, the queries to 8-invalid-13-valid returned far fewer appropriate responses than those to 8-valid-13-invalid. A possible explanation for the difference is that many resolvers might sort the signatures by ascending algorithm number.

In theory, the order of the signatures should not matter; as long as there is a valid signature, the response should be authenticated regardless of the number of invalid signatures. However, as a countermeasure against the KeyTrap attacks, some validating resolvers abort the validation process when there are too many invalid signatures. For some resolvers, it appears this countermeasure allows no invalid signatures at all. Such resolvers will incorrectly consider 8-invalid-13-valid bogus, but correctly consider 8-valid-13-invalid validated.
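The suspected mechanism can be reproduced with a toy model. It assumes, as hypothesised above, that the resolver tries signatures in ascending order of algorithm number and gives up after exceeding a failure budget; the function and parameter names are ours and do not correspond to any particular resolver.

```python
def validate(signatures, max_failures=None) -> str:
    """signatures: list of (algorithm_number, is_valid) pairs.

    max_failures=None models a resolver without a failure budget.
    """
    failures = 0
    for algorithm, is_valid in sorted(signatures):
        if is_valid:
            return "secure"  # the first valid signature is enough
        failures += 1
        if max_failures is not None and failures > max_failures:
            return "bogus"   # budget exhausted, remaining signatures untried
    return "bogus"


# A resolver that tolerates no failed validation attempt at all.
print(validate([(8, False), (13, True)], max_failures=0))
print(validate([(8, True), (13, False)], max_failures=0))
```

With a budget of zero, the model rejects 8-invalid-13-valid before it ever reaches the valid signature of algorithm 13, while 8-valid-13-invalid succeeds on the first attempt.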

It seems like the order of the signatures matters a lot. We can see similar patterns in the results for the splitmany key configuration, where the order of the keys and the number of incorrect signatures determine how well they are validated.

Additionally, the splitmany key configuration has a lot more incorrect answers over UDP, where responses are truncated more often due to the larger message sizes.

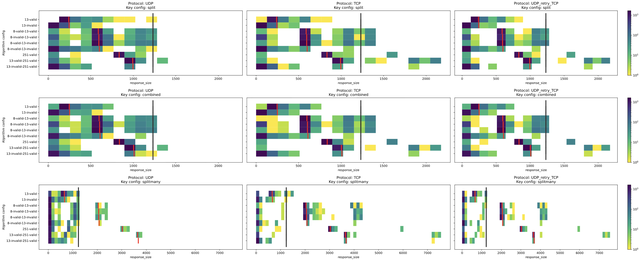

Some of the resolvers included Extended DNS Errors (EDE) in their answers. These are shown in Figure 2. We can see that EDE code 1, indicating that the resolver encountered an unsupported DNSKEY algorithm, was present in queries to 251-valid as expected. In queries to 13-valid-251-valid, this error code is completely absent, yet present again in queries to 13-invalid-251-valid. That shows that the resolvers likely attempted to validate the signatures in ascending algorithm number order.

In Figure 2, each row of subgraphs shows a key configuration (split, combined and splitmany), and each column shows measurements performed via a certain protocol (UDP, TCP or UDP with retry over TCP). Each subgraph then shows, for each measurement, which Extended DNS Errors appeared in resolvers’ answers.

Response sizes

Figure 3 shows, for each protocol, algorithm and key configuration the sizes of the responses received from unique resolvers. Each box has a width of 128 bytes, and is coloured to match the number of responses in that size range. The red lines show the response sizes returned by our own name server for reference. The grey lines show the boundary of 1,232 bytes, a suggested maximum UDP buffer size for DNS to avoid IP fragmentation.
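The binning used for such a plot can be sketched as follows. The function names and the example sizes are made up; only the bin width of 128 bytes and the 1,232-byte boundary come from the description above.

```python
from collections import Counter

BIN_WIDTH = 128   # width of each box in bytes
UDP_LIMIT = 1232  # suggested maximum UDP buffer size


def size_histogram(sizes):
    """Count responses per 128-byte range, keyed by the lower bound of the range."""
    return Counter((size // BIN_WIDTH) * BIN_WIDTH for size in sizes)


def exceeds_udp_limit(size: int) -> bool:
    return size > UDP_LIMIT


sizes = [100, 127, 128, 1300]  # hypothetical response sizes in bytes
print(size_histogram(sizes))
print([s for s in sizes if exceeds_udp_limit(s)])
```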

Figure 3 is again divided into a grid of 3x3 graphs. Each row of subgraphs shows a key configuration (split, combined and splitmany), and each column shows measurements performed via a certain protocol (UDP, TCP or UDP with retry over TCP). Each subgraph then shows, for each measurement, the sizes of the responses received from unique resolvers.

Many more keys

There are some circumstances where the number of keys and signatures in a zone is intentionally increased. For example, a zone could have a standby key already published, to be used in emergencies. It is also common to have pre-published keys during key rollovers. During a double-signed key rollover, a complete zone can be signed by both its old key and the new one. During a transition period, we might expect multiple (valid) keys and signatures for both new and old algorithms at the same time.

In order to test how well resolvers handle an increase in the number of keys during the transition period, we created zones that were signed by algorithms 13 and 251 with a regular split key configuration, and increased the number of keys and signatures in a few steps.

Figure 4 shows the percentages of appropriate responses, and Figure 5 shows the response sizes for these zones.

The three sub-graphs in Figure 4 show measurements performed via a certain protocol (UDP, TCP or UDP with retry over TCP).

The three sub-graphs in Figure 5 show measurements performed via a certain protocol (UDP, TCP or UDP with retry over TCP).

Notably, using more than one set of keys for each algorithm already surpasses UDP limits with most resolvers. The ‘UDP_retry_TCP’ column shows that retrying over TCP increases the percentage of appropriate responses significantly, but not enough to allow reliable double-signed key rollovers.

The impact of a transition period

Our results show that unsupported algorithms are ignored by nearly all resolvers. The results also show that TCP is widely supported by public resolvers, and there are barely any issues in validation for realistic use cases over TCP. However, TCP support amongst local resolvers appears to be either extremely low or non-existent. Further research is required to test whether this reflects a shortcoming specific to RIPE Atlas, or is indeed a shortcoming specific to many local resolvers (in the past, this has certainly been an issue).

We saw some validation issues caused by strict limits in KeyTrap mitigation tactics for some resolvers. These validation issues are not specific to PQC algorithms, and occur only with intentionally invalidated signatures. Since intentionally invalidated signatures are very rare in the real world (for example in DNS testbeds or attacks), the observed issues do not represent a serious problem.

The biggest issue with PQC algorithms is their increased key and signature sizes. Over UDP, most drops in appropriate responses are caused by the increased DNS message sizes. We noticed that using Falcon alongside ECDSA worked reasonably well with one split keyset for each algorithm. However, normal use cases involving multiple keys and signatures, such as key rollovers and standby keys, were badly affected over UDP, as their messages exceeded buffer limits.

This shows that a transition to PQC algorithms cannot realistically work well over UDP, emphasising the need for local resolvers to support TCP when they perform DNSSEC validation locally.

Many resolvers appear to sort keys and signatures in ascending order of algorithm number, key tag, and some other features. Because of that ordering, signatures with lower algorithm numbers are often checked first. Since the algorithm numbers are currently assigned and standardised in ascending order, newer algorithms will always have higher algorithm numbers, and will therefore usually be checked last. Validation succeeds upon the first validated signature, and in some cases, also fails upon the first failed validation attempt.

That means that, in practice, using multiple algorithms alongside each other will mostly result in signatures from newer algorithms being ignored. Validation then mostly depends on the signatures of the older algorithms, even if the resolver understands the newer algorithms. In other words, newer algorithms alongside older algorithms are not used opportunistically, even if they could be.

Conclusion

Considering the transition period, most resolvers handle our queries reasonably well. Unsupported algorithms are mostly ignored, and validation of algorithms unknown to the resolver alongside known algorithms does not differ greatly from validation of known algorithms on their own. However, that behaviour appears to be caused in part by the sorting order, where higher algorithm numbers are often ignored regardless of algorithm support, in favour of lower algorithm numbers. There is therefore little to be gained from having a transition period longer than that used for a quick algorithm rollover, unless the way DNSSEC validation works changes.

We have also seen that the larger message sizes associated with using PQC algorithms can lead to a large increase in validation issues. That shows that PQ-safe DNSSEC might require a solution that goes beyond the simple drop-in replacement of classical algorithms.
