Dyn Research recently published an article on K-root. Here we add data from RIPE Atlas to give a more complete picture of the effect of the K-root node in Iran.

We read the interesting report from Dyn Research (formerly Renesys) about K-root and Iran. Since the RIPE NCC operates K-root, Iran is in our service region, and we have Internet analysis tools at hand, we wanted to see whether our data could add to the overall picture of this K-root node's effect on the surrounding region.

The good: K-root from Iran

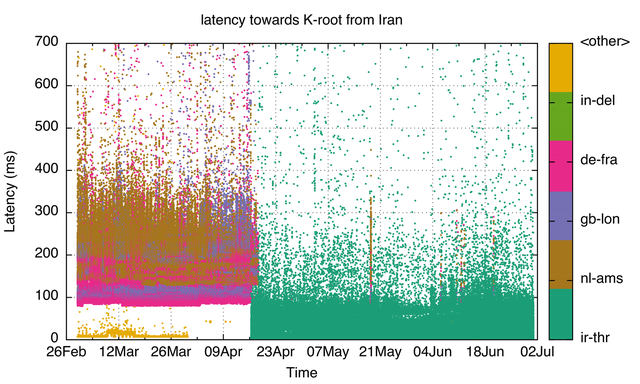

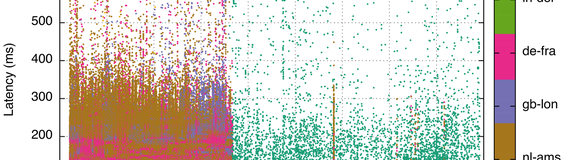

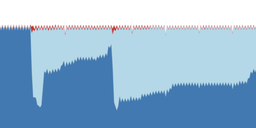

Having a K-root node in Iran significantly lowers latency towards the root-server system for Internet users within the country. Figure 1 shows the round-trip times from RIPE Atlas probes in Iran towards K-root. Latency dropped sharply when a K-root node was installed in Tehran in April this year: from mid-April, our vantage points measured much lower latency towards the Tehran node (ir-thr) than they previously had towards the K-root nodes in Amsterdam (nl-ams), London (gb-lon) and Frankfurt (de-fra).

Note that the other data points in the bottom left corner carry node IDs that are not part of the official set of K-root node IDs. Most likely a CPE or other device is intercepting these queries for a few vantage points.

Figure 1: Latencies towards K-root from vantage points in Iran. Colours represent the specific K-root location that answered the query. Names are UN LOCODE based. (Source: RIPE Atlas probes in Iran doing DNS NSID measurements)
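To build a plot like Figure 1, each answer has to be mapped from its NSID string to a K-root location. The sketch below assumes, hypothetically, that the NSID contains a UN LOCODE-style label such as `nl-ams`; `KNOWN_SITES` is an illustrative subset built from the locations named in this article, not the official list. Anything that does not map to a known site is labelled `unknown`, which is how intercepted answers like those in the bottom left corner would show up.

```python
import re
from collections import defaultdict
from statistics import median

# Illustrative subset of K-root locations named in this article (NOT the official list).
KNOWN_SITES = {"nl-ams", "gb-lon", "de-fra", "ir-thr", "ru-led", "in-del", "jp-tyo", "ch-gva"}

# Assumption: the NSID string contains a UN LOCODE-style label such as "nl-ams".
SITE_LABEL = re.compile(r"\b([a-z]{2}-[a-z]{3})\b")


def site_from_nsid(nsid):
    """Map an NSID string to a known K-root site, or 'unknown' (e.g. an intercepting CPE)."""
    match = SITE_LABEL.search((nsid or "").lower())
    if match and match.group(1) in KNOWN_SITES:
        return match.group(1)
    return "unknown"


def median_rtt_per_site(answers):
    """answers: iterable of (nsid, rtt_ms) pairs; returns {site: median RTT in ms}."""
    rtts = defaultdict(list)
    for nsid, rtt in answers:
        rtts[site_from_nsid(nsid)].append(rtt)
    return {site: median(values) for site, values in rtts.items()}
```

Using the median per site keeps occasional slow answers from dominating the comparison between the Tehran node and the European nodes.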

The sometimes-not-so-good: K-root from India

As reported, networks in India also saw the K-root node in Tehran. This is somewhat surprising: K-root hosts decide what scope their node will have, and the host in Tehran had decided to make it visible only in Iran.

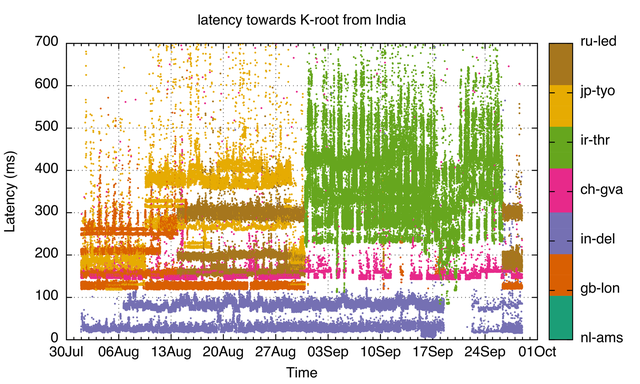

Figure 2 shows how our RIPE Atlas vantage points in India see K-root. Around 1 September, we can see the switch from the St. Petersburg (ru-led) node to the Tehran (ir-thr) node. Some probes still reached the nearest node, in Noida/New Delhi (in-del), at that time, but others were answered by the K-root instances in Tokyo (jp-tyo), London (gb-lon) and Geneva (ch-gva). Around 26 September, all vantage points in India stopped seeing the Tehran node and switched back to the "normal" pre-September set of K-root instances. This indicates that the Tehran host's original intention, keeping the node visible only in Iran, was achieved at that time.
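Switches like the ones around 1 and 26 September can be found mechanically by walking each probe's answers in time order and recording every change of answering site. A minimal sketch, assuming the answers have already been reduced to (probe ID, timestamp, site) tuples, for example with an NSID-to-site mapping:

```python
from itertools import groupby


def site_transitions(samples):
    """Return, per probe, the time-ordered list of (timestamp, old_site, new_site) switches.

    samples: iterable of (probe_id, unix_timestamp, site) tuples, in any order.
    """
    transitions = {}
    ordered = sorted(samples)  # sorts by probe_id, then timestamp
    for probe_id, rows in groupby(ordered, key=lambda row: row[0]):
        previous = None
        changes = []
        for _, timestamp, site in rows:
            if previous is not None and site != previous:
                changes.append((timestamp, previous, site))
            previous = site
        transitions[probe_id] = changes
    return transitions
```

Counting how many probes switch on each day then gives the dates at which the routing changed.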

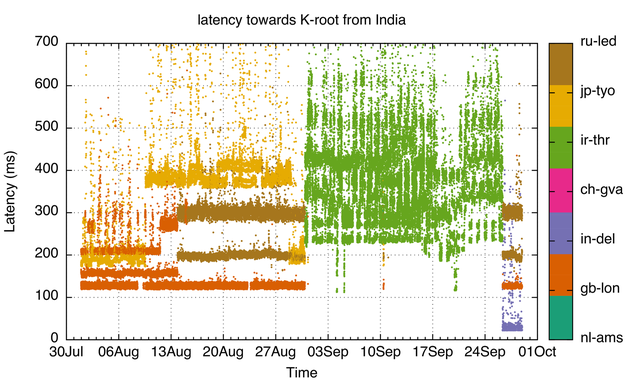

Because RIPE Atlas probes do not necessarily represent the networks with the most users, we redid the analysis using only probes in the largest-user networks in India where we have coverage. We used the "eyeballtrace" method to select a small set of probes representing these networks (the methodology is described in the footnotes below).

As Figure 3 shows, this set of vantage points initially doesn't see the local K-root node in Noida/New Delhi, but some of them switch to it around 26 September. The figure also shows that latency towards the Tehran node is sometimes comparable to the baseline latency towards the London node; for example, the lowest latency towards Tehran is around 120 ms in the period around 20 September.
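Comparing the Tehran node against the London baseline is easiest on a per-day minimum, since the minimum RTT filters out transient queueing delay. A small sketch, assuming samples as (timestamp, site, RTT in ms) tuples; this is an illustration, not the processing script used for Figure 3:

```python
from datetime import datetime, timezone


def daily_min_rtt(samples):
    """Return {(utc_date, site): lowest RTT in ms} from (unix_timestamp, site, rtt_ms) samples."""
    best = {}
    for timestamp, site, rtt in samples:
        day = datetime.fromtimestamp(timestamp, tz=timezone.utc).date().isoformat()
        key = (day, site)
        if key not in best or rtt < best[key]:
            best[key] = rtt
    return best
```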

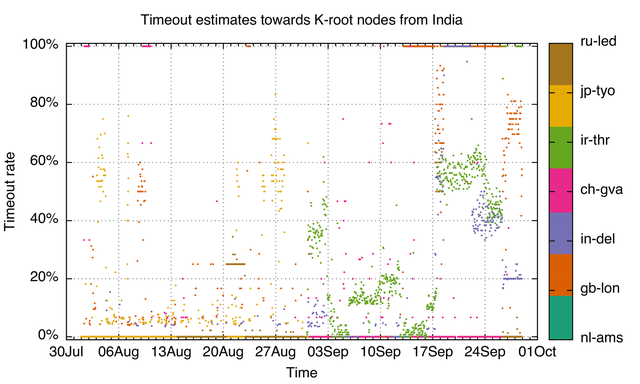

Figure 4 shows estimated loss rates towards K-root nodes from our Indian vantage points. These are estimates: when we get no response, we don't know which server failed to respond, so we attribute each timeout to the last K-root instance from which that vantage point received an answer. There was a severe timeout episode towards the Tehran node (from 17 to 27 September the timeout percentage was close to 60%), but the same applies to other remote nodes, such as Tokyo and London. Perhaps more surprising is the large number of timeouts towards the Noida/New Delhi node, which you wouldn't expect because it is located in the same country as the vantage points.

Note that the horizontal line patterns are an artefact of our estimation method: they occur when a vantage point gets an initial response from K-root but only timeouts after that. The longer such a horizontal line, the less likely it is that the timeouts are actually attributable to that K-root node.

Figure 4: Estimated query loss towards K-root from vantage points in India in the largest-user networks. Colours represent the K-root location that answered the query (for timeouts, the location the timeout is attributed to). Names are UN LOCODE based. (Source: RIPE Atlas probes in India doing DNS NSID measurements)
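The attribution rule described above can be sketched as follows: timeouts are charged to the last site that answered the same probe, timeouts before a probe's first answer are dropped because they cannot be attributed, and counts are aggregated into one-hour bins per site, as the footnotes describe. This is a reconstruction from the description, not the actual processing script; the input format of (probe ID, timestamp, site or `None` for a timeout) is an assumption.

```python
from collections import defaultdict


def estimate_loss(results, bin_seconds=3600):
    """Estimate loss per (bin_start, site) from (probe_id, unix_timestamp, site_or_None) tuples.

    A timeout (site None) is attributed to the last site that answered the same probe.
    """
    last_site = {}
    counts = defaultdict(lambda: [0, 0])  # (bin_start, site) -> [answered, timed_out]
    for probe_id, ts, site in sorted(results, key=lambda r: (r[0], r[1])):
        bin_start = ts - ts % bin_seconds
        if site is None:
            attributed = last_site.get(probe_id)
            if attributed is None:
                continue  # no earlier answer from this probe: cannot attribute the timeout
            counts[(bin_start, attributed)][1] += 1
        else:
            last_site[probe_id] = site
            counts[(bin_start, site)][0] += 1
    return {key: lost / (ok + lost) for key, (ok, lost) in counts.items()}
```

A probe that answers once and then only times out keeps charging every later bin to that one site, which is exactly the horizontal-line effect mentioned above.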

Iran K-root instance in other countries

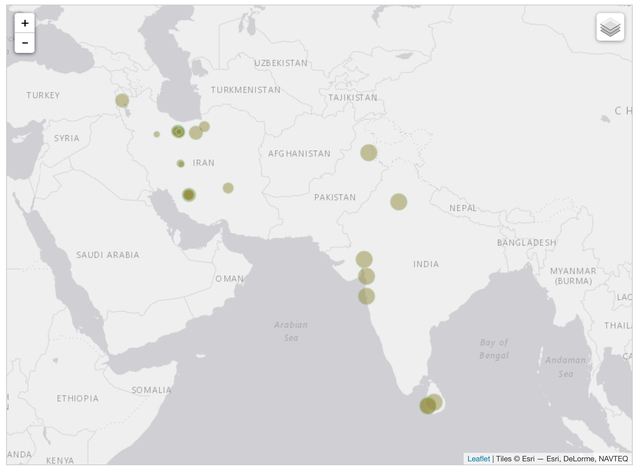

Now that we know the exact time frame of this routing anomaly, we can use the recently added time travel functionality on several RIPE Atlas maps to check whether countries other than India also saw the K-root node in Iran. Figure 5 is a screenshot of the "root instances" visualisation on the RIPE Atlas website, showing only the probes that hit the Tehran node on 15 September.

Figure 5: RIPE Atlas vantage points that hit the Tehran K-root node on 15 September

This shows that, apart from vantage points in India, some in Sri Lanka and Pakistan also saw the Tehran K-root node. We don't have an online probe in Oman, but we expect that one there would have seen it too.
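The time-travel filter behind Figure 5 can be approximated offline if each result is annotated with its probe's country. A minimal sketch, assuming tuples of (probe ID, ISO country code, timestamp, site); the country annotation would have to come from probe metadata, which is not shown here:

```python
from collections import defaultdict
from datetime import date, datetime, timezone


def countries_hitting_site(samples, site: str, day: date) -> dict:
    """Count distinct probes per country that were answered by `site` on the given UTC `day`.

    samples: iterable of (probe_id, country_code, unix_timestamp, answered_by_site) tuples.
    """
    probes = defaultdict(set)
    for probe_id, country, timestamp, answered_by in samples:
        if answered_by != site:
            continue
        if datetime.fromtimestamp(timestamp, tz=timezone.utc).date() == day:
            probes[country].add(probe_id)
    return {country: len(ids) for country, ids in probes.items()}
```

Counting distinct probes rather than answers avoids over-weighting probes that happen to query more often.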

Performance of the root-server system as a whole

How were users in India affected by hitting the K-root node in Tehran? We don't expect a significant impact, if any. The root-server system is redundant in many ways. One of the most important mechanisms is that there are 13 different root name servers run by different organisations, so if one of them is slow, 12 others can "cover for you".

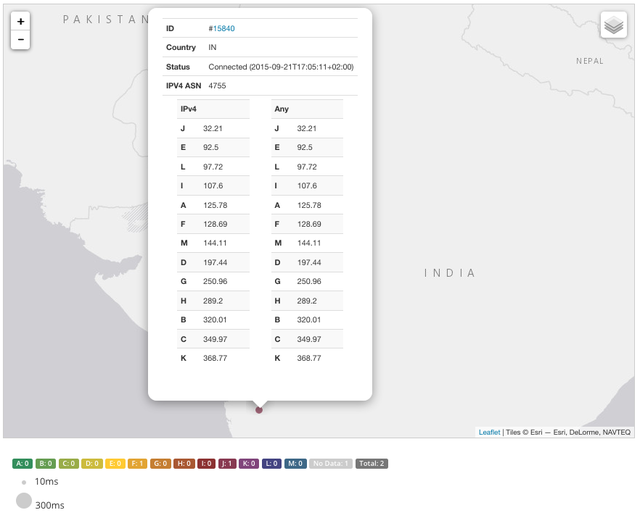

A typical caching resolver implementation heavily biases its queries towards the server that is closest in terms of latency (see this excellent presentation on various caching resolver implementations). If one of the root servers becomes latency-impaired for whatever reason, the number of queries it actually receives drops significantly. We expect this to have been the case in India: because latency went up significantly for some networks, the query volume towards K-root went down relative to the other root servers. Figure 6 shows an example of root server latencies for a RIPE Atlas probe in India in AS4755 (TATA India). K-root is at the bottom of the list because it has the highest latency from that particular vantage point. You can see current results here.

Figure 6: Example of root server latencies for a RIPE Atlas vantage point in India
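To illustrate why a slow K-root mostly loses queries rather than slowing users down, here is a toy model of latency-based server selection: always pick the server with the lowest smoothed RTT (SRTT), and slowly decay the SRTT of servers that were not picked so they are eventually re-tested. This is a simplified illustration loosely based on the SRTT idea; real resolvers (including those in the presentation cited above) differ in the details, and the server names, RTTs, `alpha` and `decay` values are all hypothetical.

```python
def simulate_selection(rtts, n_queries, alpha=0.3, decay=0.98):
    """Toy SRTT-based server selection; returns how many queries each server received.

    rtts: {server_name: fixed RTT in ms}. Untried servers start at SRTT 0.0, so each one
    is probed once; after that the lowest SRTT wins, and every server that was not chosen
    has its SRTT multiplied by `decay`, so it eventually gets re-tested.
    """
    srtt = {name: 0.0 for name in rtts}
    tried = set()
    counts = {name: 0 for name in rtts}
    for _ in range(n_queries):
        chosen = min(srtt, key=srtt.get)  # ties go to the first server listed
        counts[chosen] += 1
        if chosen in tried:
            # Exponentially weighted moving average of the measured RTT.
            srtt[chosen] = (1 - alpha) * srtt[chosen] + alpha * rtts[chosen]
        else:
            srtt[chosen] = rtts[chosen]  # first measurement replaces the 0.0 placeholder
            tried.add(chosen)
        for name in srtt:
            if name != chosen:
                srtt[name] *= decay
    return counts
```

With hypothetical RTTs such as `{"K": 250.0, "A": 30.0, "B": 60.0}`, the slow server receives only a small share of the queries; making K the fastest server, as in Iran, flips this around.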

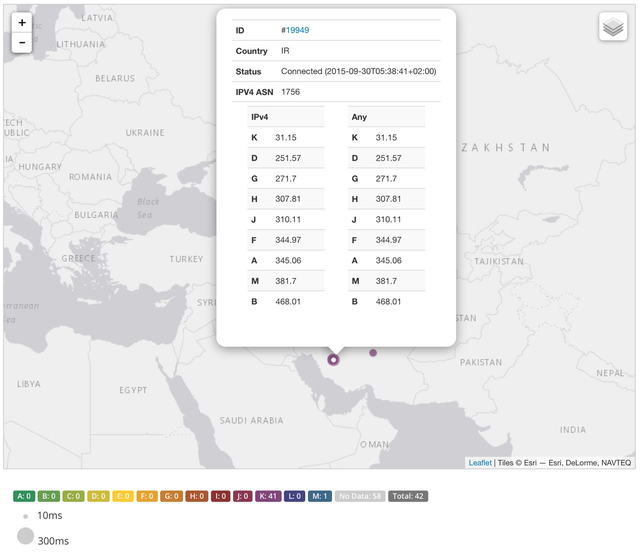

The opposite is the case for users in Iran, as Figure 7 shows. The new K-root node in the country dramatically reduced latency towards this root server. As a consequence, we expect its query volume to have gone up significantly relative to the other root servers, and K-root is now at the top of the list in Figure 7.

Figure 7: Example of root server latencies for a RIPE Atlas vantage point in Iran

Conclusion and Feedback

Routing anomalies happen, but the effect of this particular anomaly on end users appears to be small.

We've heard that people would like to be notified if we find anomalies in network behaviour, rather than just read about them in RIPE Labs articles. If this is something that is important to you, please leave a comment or send an email to labs [at] ripe [dot] net and let us know what you think. And if investigating and fixing routing anomalies is something the community feels we should spend more resources on, we welcome your feedback about that, too.

We've also heard that people are worried about the paths that root server traffic takes, or where root server traffic ends up. One way to address this is to deploy RIPE Atlas probes or anchors and periodically watch the DNS root instances page on the RIPE NCC website, filtering for your AS or country. One possible improvement would be a tool (a Nagios plugin, for example) that alerts you when things move outside your comfort zone. If this kind of thing interests you, consider joining the RIPE Atlas tools hackathon the weekend before RIPE 71 in Bucharest!
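As a starting point for such a tool, the core decision of a Nagios-style check could look like the sketch below. It follows the standard Nagios plugin exit-code convention (0 OK, 1 WARNING, 2 CRITICAL, 3 UNKNOWN); the function name and the idea of passing in site codes already extracted from RIPE Atlas results are hypothetical, and fetching those results is left out.

```python
# Standard Nagios plugin exit codes.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3


def check_k_root_sites(observed_sites, allowed_sites):
    """Return (exit_code, status_line) for the K-root sites seen by your probes.

    observed_sites: list of site codes (e.g. "nl-ams") from recent answers.
    allowed_sites: set of sites you consider within your comfort zone.
    """
    if not observed_sites:
        return UNKNOWN, "UNKNOWN - no K-root answers observed"
    unexpected = [site for site in observed_sites if site not in allowed_sites]
    if not unexpected:
        return OK, "OK - all %d answers from expected sites" % len(observed_sites)
    names = ", ".join(sorted(set(unexpected)))
    if len(unexpected) == len(observed_sites):
        return CRITICAL, "CRITICAL - all answers from unexpected sites: " + names
    return WARNING, "WARNING - %d of %d answers from unexpected sites: %s" % (
        len(unexpected), len(observed_sites), names)
```

A plugin wrapper would print the status line and exit with the returned code (`print(line)` followed by `sys.exit(code)`), which is all Nagios needs to raise an alert.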

We plan to include graphs like those shown in Figures 1-4 on our RIPE Atlas result pages, possibly to complement the new time travel feature. This would help you pick interesting points in time.

If you don't already host a RIPE Atlas probe, consider applying today. You'll help us expand the network and make even more Internet data available to the entire community. You can also join interesting discussions on the RIPE Atlas mailing list.

If you have any other comment about our services (K-root, RIPE Atlas or otherwise), please don't hesitate to contact us at labs [at] ripe [dot] net.

Footnotes:

For Figures 1-4, we look at built-in RIPE Atlas measurement 10301, which is a DNS NSID measurement towards K-root.
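Each result of an NSID measurement carries the raw DNS answer, which RIPE Atlas DNS results typically include as a base64-encoded `abuf` field (treat that field name as an assumption). The NSID itself sits in an EDNS0 option (code 3, RFC 5001) inside the OPT pseudo-record (type 41, RFC 6891) in the additional section. A minimal, standard-library-only parser, assuming a well-formed message (it does no bounds checking against truncated or malicious input):

```python
import base64
import struct

NSID_OPTION = 3  # EDNS0 option code for NSID (RFC 5001)
OPT_TYPE = 41    # RR type of the EDNS0 OPT pseudo-record (RFC 6891)


def _skip_name(buf, pos):
    """Return the offset just past a (possibly compressed) domain name."""
    while True:
        length = buf[pos]
        if length == 0:
            return pos + 1
        if (length & 0xC0) == 0xC0:  # compression pointer: 2 bytes, ends the name
            return pos + 2
        pos += 1 + length


def nsid_from_abuf(abuf_b64):
    """Extract the NSID string from a base64-encoded DNS response, or None if absent."""
    buf = base64.b64decode(abuf_b64)
    qdcount, ancount, nscount, arcount = struct.unpack("!4H", buf[4:12])
    pos = 12
    for _ in range(qdcount):
        pos = _skip_name(buf, pos) + 4  # skip QTYPE and QCLASS
    for i in range(ancount + nscount + arcount):
        pos = _skip_name(buf, pos)
        rtype, _rclass, _ttl, rdlength = struct.unpack("!HHIH", buf[pos:pos + 10])
        pos += 10
        rdata = buf[pos:pos + rdlength]
        pos += rdlength
        if rtype == OPT_TYPE and i >= ancount + nscount:  # OPT lives in the additional section
            offset = 0
            while offset + 4 <= len(rdata):
                code, optlen = struct.unpack("!HH", rdata[offset:offset + 4])
                if code == NSID_OPTION:
                    return rdata[offset + 4:offset + 4 + optlen].decode("ascii", errors="replace")
                offset += 4 + optlen
    return None
```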

The raw data underlying the India graphs is available here:

https://atlas.ripe.net/api/v1/measurement/10301/result/?format=txt&prb_id=1083,4913,6107,13558,13602,14593,15840,20814,22793,23692,23695,23696,23697,25064,25111,25164&start=1438387200&stop=1443484799

The raw data underlying the Iran graph is available here:

https://atlas.ripe.net/api/v1/measurement/10301/result/?format=txt&prb_id=516,4066,4619,12089,12187,12435,12917,13179,13312,13938,13991,14018,15284,15379,15393,15535,15917,15920,17418,17731,17743,18260,18629,19083,19181&start=1425168000&stop=1435708800
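The URLs above follow a simple pattern (measurement ID, comma-separated probe IDs, and a start/stop window as Unix timestamps), so they can be rebuilt for other probe sets or time windows. The sketch below reproduces that pattern for the v1 endpoint quoted above; that API version may since have been superseded, so treat the base URL as an assumption. For reference, the India window runs from 2015-08-01 00:00:00 UTC to 2015-09-28 23:59:59 UTC, and the Iran window from 2015-03-01 00:00:00 UTC to 2015-07-01 00:00:00 UTC.

```python
from datetime import datetime, timezone
from urllib.parse import urlencode

# Base URL of the v1 results endpoint used in the footnotes (may no longer be served).
API_BASE = "https://atlas.ripe.net/api/v1/measurement/{msm}/result/"


def unix_utc(year, month, day, hour=0, minute=0, second=0):
    """Unix timestamp for a UTC wall-clock time."""
    return int(datetime(year, month, day, hour, minute, second, tzinfo=timezone.utc).timestamp())


def results_url(msm_id, probe_ids, start, stop):
    """Build a results URL with a comma-separated probe list (commas left unescaped)."""
    query = urlencode(
        [("format", "txt"),
         ("prb_id", ",".join(str(p) for p in probe_ids)),
         ("start", start),
         ("stop", stop)],
        safe=",",
    )
    return API_BASE.format(msm=msm_id) + "?" + query
```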

Processing scripts are available here (RTTs) and here (loss estimates). The loss estimates are aggregated into one-hour bins for individual K-root instances.

For the "eyeballtrace" subselection, we applied the methodology of the "eyeballtrace" script (available here) to extract probe IDs. This resulted in the following list of probe IDs:

25111,20814,13602,305,15840,14593,22793

which are in these ASNs:

AS9829 (BSNL, estimated 22% of browser users in India)

AS24560 (Airtel, estimated 12% of browser users in India)

AS45528 (Tikona, estimated 1.4% of browser users in India)

AS4755 (TATA, estimated 2.7% of browser users in India)

Browser user estimates are from APNIC and give an idea about what percentage of a country's end users reside in a particular network.
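The selection idea behind the probe list above, keeping only probes in networks that hold a large share of a country's users, can be sketched as below. This is a simplified stand-in, not the eyeballtrace script itself; the probe-to-ASN mapping is assumed to come from probe metadata, and the share figures would be per-ASN estimates such as the APNIC numbers listed above.

```python
def probes_in_top_networks(probe_asn, asn_user_share, min_share_pct):
    """Select probes in networks holding at least `min_share_pct` % of a country's users.

    probe_asn: {probe_id: asn}; asn_user_share: {asn: estimated % of browser users}.
    Returns probe IDs ordered by their network's user share (largest first), then by ID.
    """
    selected = [
        probe for probe, asn in probe_asn.items()
        if asn_user_share.get(asn, 0.0) >= min_share_pct
    ]
    return sorted(selected, key=lambda p: (-asn_user_share[probe_asn[p]], p))
```

Probes in networks without a share estimate are treated as 0% and therefore dropped for any positive threshold.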

If you've read this far, you are probably a good candidate for our upcoming RIPE Atlas tools hackathon!


Emile Aben:

As I learned more about this story, I found that credit should go to Anurag Bhatia (@anurag_bhatia) for being the one who flagged K-root traffic leaving India.