The Internet Measurement Conference (IMC) is an annual conference focusing on Internet measurement and analysis. The 17th edition is taking place from 31 October to 2 November 2018 in Boston. RIPE NCC staff at the event will be live blogging key moments. See this page for regular updates on the issues, arguments and ideas discussed over the course of the meeting.

2 November 2018, 16:50 EDT

Day 3: DNS

Why do users experience service outages from some real-world DDoS events, but barely notice the effects of others? The answer is caching and retries. The authors of the first paper in the session show that caching and retries by recursive resolvers greatly improve the resilience of the DNS as a whole. They can paper over partial DDoS attacks for many users, even when a DDoS results in 90% packet loss and lasts longer than the cache timeout. The authors encourage the use of at least moderate TTLs (time-to-live values) wherever possible.
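To get a feel for why retries matter so much, here is a rough back-of-the-envelope sketch. It assumes every query attempt is lost independently with the same probability, which is a simplification and not the authors' measurement method. The function name and the attempt counts are ours, chosen for illustration.

```python
def success_probability(loss_rate: float, attempts: int) -> float:
    """Chance that at least one of `attempts` independent queries gets an answer.

    Assumes independent losses with a fixed loss rate (a simplification).
    """
    if not 0.0 <= loss_rate <= 1.0:
        raise ValueError("loss_rate must be between 0 and 1")
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    return 1.0 - loss_rate ** attempts


# Illustrative attempt counts under 90% packet loss.
for attempts in (1, 3, 7, 20):
    print(attempts, round(success_probability(0.9, attempts), 3))
```

Under these assumptions a single attempt rarely succeeds, but a resolver that keeps retrying across several authoritative servers has a much better chance, and any answer it does get is then served from cache for the duration of the TTL.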

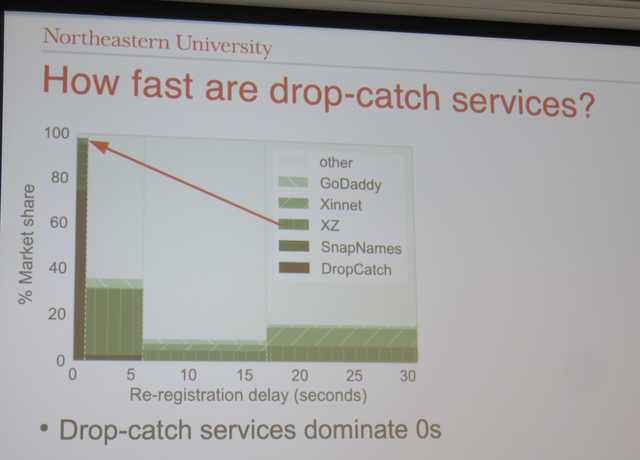

Domain drop-catching refers to the practice of re-registering a desirable Internet domain name as soon as it expires. The authors of From Deletion to Re-Registration in Zero Seconds: Domain Registrar Behaviour During the Drop show that .com domains are deleted in a predictable order.

The authors show that 9.5% of deleted domains are re-registered immediately, with a delay of zero seconds.

They observed a range of re-registration behaviours, including the use of reseller APIs for “home-grown” drop-catching seconds to minutes after the drop, and re-registration of deleted domains in large batches hours later. They use this information to create a precise metric which detects drop-catch re-registrations.

The authors of the last paper of the session presented their DNS experimental framework, LDplayer, which enables DNS experiments to scale in several dimensions: many zones, multiple levels of DNS hierarchy, high query rates, and diverse query sources. The replay system is efficient (87k queries/s per core) and reproduces precise query timing, interarrivals, and rates. The authors have used it to replay full B-Root traces, and are currently evaluating replays of recursive DNS traces with multiple levels of the DNS hierarchy. The software is publicly available.

- Gergana

2 November 2018, 13:30 EDT

Day 3: Routes & Connections

In this session, Kevin Vermeulen presented his work on MDA-Lite in Multilevel MDA-Lite Paris Traceroute, an approach to maximise discoverability of multipath routes using traceroute while minimising the number of active traceroute probes required. There is interest in the community in finding ways to identify and map these topologies as they continue to get more complex. We'll be following up soon with a more detailed blog post on this topic.

Also in this session was Three Bits Suffice: Explicit Support for Passive Measurement of Internet Latency in QUIC and TCP, which is part of the continued discussion in the IETF on how to expose sufficient information to reliably allow RTT measurement using QUIC streams without exposing additional information. The paper discusses using three bits in the QUIC protocol header to allow two nodes to measure network-level RTT: one bit as a "spin" bit that flips back and forth as it's exchanged, and another two bits for a "valid edge counter" (VEC). The spin bit on its own can be problematic in the presence of packet reordering, where the bit may appear to flip more rapidly at the receiver than the actual network path could physically allow. The VEC helps solve that problem, by marking the packet that actually carries the state-change on the spin bit, and it acts as a tiny counter that allows the observer to know the state the two endpoints believe they're in on the RTT estimation.
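The spin bit idea is easier to see in a toy simulation. The sketch below is our own simplified model, not code from the paper: it assumes a symmetric path with no loss or reordering, one packet per tick in each direction, and an observer sitting right next to the client. It does not model the VEC. The function and variable names are hypothetical.

```python
def observe_spin_edges(one_way_delay: int, ticks: int) -> list[int]:
    """Return the ticks at which an on-path observer sees the spin bit flip.

    The client sets its spin bit to the inverse of the last value received
    from the server; the server simply reflects the last value it received.
    """
    to_server: dict[int, int] = {}  # arrival tick -> spin value in flight
    to_client: dict[int, int] = {}
    client_spin = 0
    server_spin = 0
    edges = []
    previous = client_spin
    for t in range(ticks):
        # Deliver whatever arrives at this tick.
        if t in to_client:
            client_spin = 1 - to_client.pop(t)  # client inverts
        if t in to_server:
            server_spin = to_server.pop(t)      # server reflects
        # Each endpoint sends one packet per tick.
        to_server[t + one_way_delay] = client_spin
        to_client[t + one_way_delay] = server_spin
        # The observer watches the client's outgoing packets.
        if client_spin != previous:
            edges.append(t)
        previous = client_spin
    return edges


edges = observe_spin_edges(one_way_delay=5, ticks=60)
rtt_samples = [later - earlier for earlier, later in zip(edges, edges[1:])]
print(rtt_samples)
```

In this idealised setting the gap between consecutive flips equals twice the one-way delay, which is the round-trip time. With reordering, a stale packet can produce a spurious flip, and that is the case the VEC is meant to flag.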

- Stephen

An IXP can be defined as a layer-2 infrastructure where networks (freely) exchange traffic with one another. O Peer, Where Art Thou? Uncovering Remote Peering Interconnections at IXPs investigates the spread of remote peering in the Internet ecosystem. The authors designed an algorithm composed of four modules to do so. It is based on port capacities from PeeringDB and IXP websites, RTTs from vantage points (VPs) within IXPs to IXP IP addresses combined with facility information, traceroutes combined with facility information, and finally traceroutes combined with AS-to-facility mappings. Their validation of the algorithm showed 94% accuracy. Among 30 top IXPs, one third of members peer remotely, and IXP growth is mainly driven by remote peering. Their tool will soon be released, along with a REST API.

- Roderick

2 November 2018, 10:30 EDT

Day 3: Tracking and Scanning

Tracing Cross Border Web Tracking, one of the three winners of the Distinguished Paper Award, measures the number of tracking flows (flows between an end user and a Web tracking service) that cross data protection borders, national or international, such as the EU28 border within which the General Data Protection Regulation (GDPR) applies. They find that the majority of tracking flows cross national borders in Europe but stay within the GDPR jurisdiction. There is also a correlation between the density of a country's IT infrastructure, mostly in terms of datacentres, and the confinement of tracking flows within its borders. Simple DNS redirection and PoP mirroring, which can be implemented at a rather low cost, can increase national confinement. However, the authors also find that cross-border tracking is prevalent even in sensitive and hence protected data categories and groups, including health, sexual orientation, minors, and others.

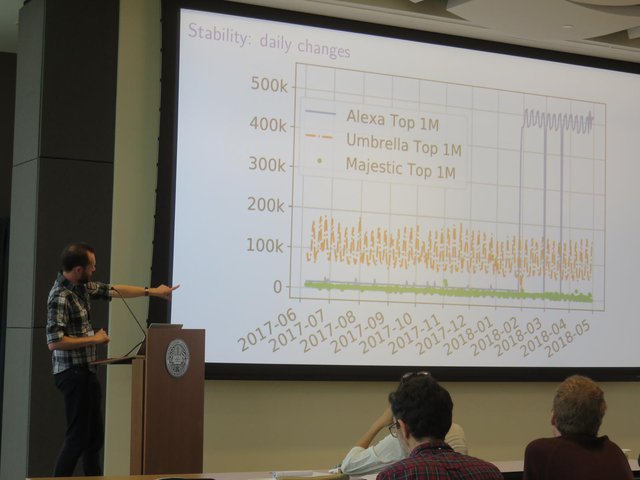

The last paper of the session, the winner of the Community Contribution Award, investigates popular hitlists such as Alexa top 1M, Cisco Umbrella 1 Million and the Majestic Million, which are often used for measurements research.

Stephen Strowes explains that top lists can undergo rapid and unannounced changes and Alexa appears to be the most unstable of them.

The authors find that, generally, top lists overestimate results compared to the general population by a significant margin. They also show significant daily variation, which could affect the results of measurement studies. The paper hopes to encourage more careful justification of top list usage in the future and advises researchers to include important dates in their papers.

- Gergana

1 November 2018, 17:25 EDT

Day 2: TLS

The last session of the day focused on TLS – the most widely used cryptographic protocol for securing communication on the Internet.

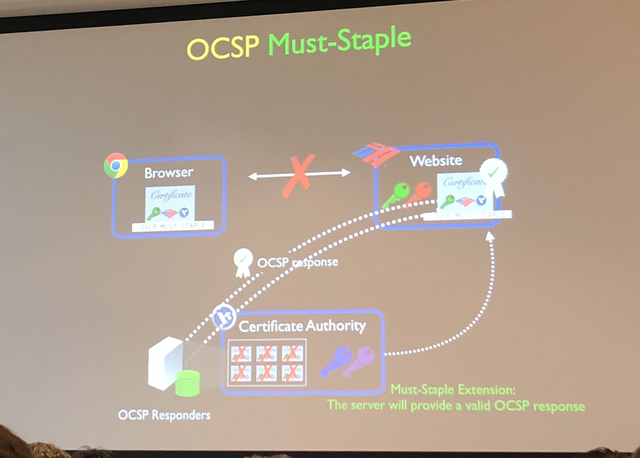

The first paper looked into OCSP, a protocol for querying a certificate’s revocation status and confirming that it is still valid.

The OCSP Must-Staple certificate extension was introduced to address issues such as slow performance, unreliability, soft-failures, and privacy issues.

However, the authors show that OCSP servers are not fully reliable and that OCSP Must-Staple is not fully supported by web server software or by most major web browsers. Still, they hope that a much wider deployment of OCSP Must-Staple can be achieved, since only a few players need to take action to implement it.

The second paper analyses the impact of the increased use of Certificate Transparency (CT) on the Internet ecosystem. The authors find that the majority of certificates are logged to very few logs, which introduces a vulnerability. Next, they show that domain names of CT-logged certificates reveal confidential or private information. And lastly, they are concerned that leaked domain names are actively used, sometimes maliciously, in Internet scanning. The authors call for more work on countermeasures to protect against the new attack possibilities introduced by CT.

The last paper, one of the three winners of the Distinguished Paper Award, examines the evolution of the TLS ecosystem in response to high-profile attacks over the last six years. On the bright side, they find that weak algorithms like DES and RC4 are being replaced by stronger AEAD designs; the use of export, anonymous and NULL cipher suites is declining; and the use of forward secrecy, new elliptic curves and TLS 1.3 is increasing. At the same time, they show that while clients, especially browsers, are quick to adopt new algorithms, they are also slow to drop support for older ones. In addition, much client software continues to offer unsafe ciphers.

- Gergana

1 November 2018, 15:25 EDT

Day 2: Censorship/Routing

403 Forbidden: A Global View of CDN Geoblocking is a really interesting study on where CDN-provided censorship tools are used to block web content in particular countries. They find that in the common case, default policies from the CDNs have a strong influence on which countries are blocked. For example, Google's AppEngine appears to block Syria, Iran, Sudan, and Cuba by default, though these countries tend to be the most likely to be blocked on other networks too. The sites most likely to be blocked are shopping sites or business sites, though news and media sites are also reasonably common, in some cases due to blanket blocking of European nations after GDPR came into effect. Such blocks, especially of the media, are concerning as a means of preventing the free flow of information.

BGP Communities: Even more Worms in the Routing Can is an interesting paper that discusses BGP communities and how they offer new attack vectors on the BGP ecosystem. BGP communities are far more common in the BGP data than they used to be, and they can be used for signalling between ASNs. For example, remote triggered blackholing and path prepending communities are common. But 14% of transit providers propagate communities, even though this is not expected behaviour. Many networks propagate the 666 community (RFC 7999), for example, for remote blackholing. They detail attacks where a hijacker may be able to announce a remote-triggered blackhole, propagated by other networks, and traffic redirection attacks that work by propagating path prepending communities. This is work that was also presented recently at the RIPE 77 meeting in Amsterdam.

- Stephen

1 November 2018, 12:15 EDT

Day 2: CDNs

The CDNs session turned our focus mostly onto the related topics of modern content distribution and outage detection on edge networks.

Two papers caught my attention:

Characterizing the deployment and performance of multi-CDNs uses RIPE Atlas to help measure and understand Microsoft's and Apple's multi-CDN deployments across multiple regions. Although mapping IPs onto particular CDNs is not always trivial, some of the outputs of the work are interesting, such as service deployment per region. In the Microsoft case, most regions except Africa are well-served; in Apple's case, South America and Africa appear historically underserved, although that's been improving significantly. The deployments observed for each company are different: around 50% of Microsoft clients pull updates from within their local ISP, while 90% of Apple's updates are fetched from Apple's network.

Advancing the Art of Internet Edge Outage Detection is a fascinating talk on looking deeper into edge outages; one of the key approaches they use is to detect disruptions from dips in access patterns in CDN access logs, corroborated by active ICMP ping measurements. What's really neat is the (opt-in) cases where they have the ability to detect where a user is still able to access the CDN during the outage: often that's via a cellular network or an entirely different network, but in many cases users apparently find an alternative route within the same ASN, which is pretty confusing. They're also able to identify likely periods when disruptions happen and, for some domestic networks, these are largely confined to likely scheduled maintenance windows at the ISPs.

- Stephen

31 October 2018, 17:15 EDT

Day 1: Internet Scanning

The authors of the first paper of the session developed a new deep-learning-based approach to characterising Internet hosts into universal and lightweight numerical representations, while still maintaining the richness of the high-fidelity characteristics. A major advantage of this methodology is that it allows the extracted representations of hosts to be reused for a diverse set of applications. This means that academics no longer need to design or collect their own probes to perform quantitative studies.

If you attended RIPE 77 in Amsterdam two weeks ago, you might remember the next talk - Clusters in the Expanse: Understanding and Unbiasing IPv6 Hitlists - presented in the IPv6 Working Group by RACI attendee Oliver Gasser. For those of you who missed it, Oliver and his team show that addresses in IPv6 hitlists are heavily clustered and present new techniques pushing IPv6 hitlists from quantity to quality. From multiple sources they create the largest IPv6 hitlist to date, with more than 50 million addresses, half of which reside in aliased prefixes. Using clustering, they show that there are only six IPv6 addressing schemes. In order to help future IPv6 measurements they provide daily IPv6 hitlists and a list of aliased prefixes.

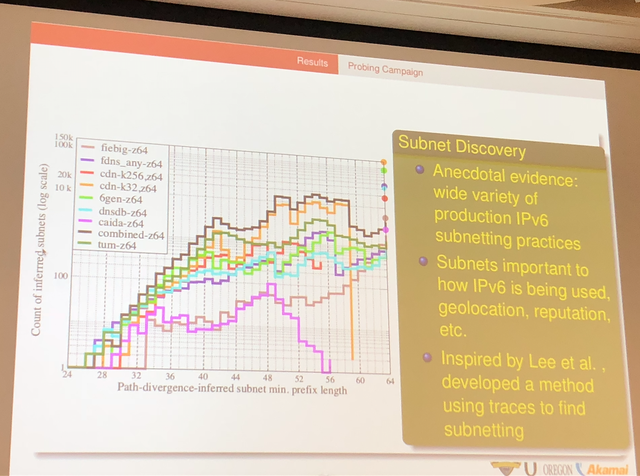

The last paper develops a technique for Internet-wide IPv6 topology mapping, adopting randomised probing in order to distribute probing load, minimise the effects of rate limiting, and probe at higher rates.

In the IP of the Beholder: Strategies for Active IPv6 Topology Discovery - the authors extensively analyse various IPv6 hitlists.

Finally, the authors create a new IPv6 hitlist, which provides breadth (coverage across networks) and depth (to find potential subnetting). They discover more than 1.3M IPv6 router interface addresses.

- Gergana

31 October 2018, 15:30 EDT

Day 1: Overlays & Proxies

This session featured four papers on overlay/proxy networks, such as VPN services, Tor, and I2P. These papers are all doing important work.

One paper stood out: How to Catch when Proxies Lie: Verifying the Physical Locations of Network Proxies with Active Geolocation presented an excellent study of where VPN services host their servers, with strong analysis of network latencies and geoip services. For the networks they measured, the authors state that claims of server locations are correct probably one third of the time, possibly accurate another one third of the time, and probably false the final one third of the time. They detail how the networks use particular tricks (such as putting airport codes into reverse DNS names) to encourage widely used geoip services to assign IPs to different countries and thus open up geoblocked content for users without physically placing any servers in the target country.
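The physical intuition behind latency-based location checks can be sketched in a few lines. This is not the authors' algorithm, just the basic constraint that a signal cannot travel faster than light in fibre. The 200 km/ms figure is a common rule of thumb (roughly two thirds of the speed of light in vacuum), and the coordinates and RTT values are illustrative assumptions.

```python
import math

FIBRE_KM_PER_MS = 200.0   # rule-of-thumb propagation speed in fibre
EARTH_RADIUS_KM = 6371.0


def great_circle_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Haversine distance between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def location_is_feasible(landmark: tuple[float, float],
                         claimed: tuple[float, float],
                         rtt_ms: float) -> bool:
    """True if a server at `claimed` could answer `landmark` within `rtt_ms`."""
    max_distance_km = (rtt_ms / 2.0) * FIBRE_KM_PER_MS
    return great_circle_km(*landmark, *claimed) <= max_distance_km


# Illustrative coordinates (approximate city centres).
AMSTERDAM = (52.37, 4.90)
BOSTON = (42.36, -71.06)

# A proxy advertised in Boston that answers a probe in Amsterdam in 20 ms
# cannot physically be in Boston; an 80 ms answer is at least consistent.
print(location_is_feasible(AMSTERDAM, BOSTON, rtt_ms=20.0))
print(location_is_feasible(AMSTERDAM, BOSTON, rtt_ms=80.0))
```

A check like this can only rule locations out. A feasible RTT does not prove the claim, which is why the results are described as "probably correct", "possibly accurate" and "probably false".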

- Stephen

31 October 2018, 12:10 EDT

Day 1: Attacks and Abuse

The first paper presented was the award winning paper on the origins of memes (see below). The authors use a processing pipeline based on perceptual hashing and clustering techniques to measure the spread of memes in web communities such as Twitter, Reddit, 4chan’s /pol/, Gab and The Donald. For just over a year they gathered over 160 million images from 2.6 billion posts. They obtained meme metadata from Know Your Meme to annotate all the memes. Their results show that racist memes are common in fringe communities, while political memes are common in both fringe and mainstream communities. Finally, using Hawkes processes, the authors assess the influence communities have among each other. They find that /pol/ has the greatest influence and The Donald is the most successful at pushing memes to other communities.
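For readers unfamiliar with perceptual hashing, here is a minimal sketch of the general idea using a simple "average hash". This is a stand-in we picked for brevity and is not necessarily the hash function used in the paper. The tiny 2x2 "images" are made-up grayscale values.

```python
def average_hash(pixels: list[list[int]]) -> list[int]:
    """One bit per pixel: 1 if the pixel is brighter than the image mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return [1 if value > mean else 0 for value in flat]


def hamming_distance(hash_a: list[int], hash_b: list[int]) -> int:
    """Number of positions in which two equal-length hashes differ."""
    if len(hash_a) != len(hash_b):
        raise ValueError("hashes must have the same length")
    return sum(a != b for a, b in zip(hash_a, hash_b))


# Made-up grayscale images (0 = black, 255 = white).
original = [[10, 200], [220, 30]]
brightened = [[30, 220], [240, 50]]   # same picture, slightly brighter
inverted = [[245, 55], [35, 225]]     # a visually different picture

print(hamming_distance(average_hash(original), average_hash(brightened)))
print(hamming_distance(average_hash(original), average_hash(inverted)))
```

Visually similar images end up with hashes that are close in Hamming distance, so clustering on that distance groups variants of the same meme even when they have been re-encoded or lightly edited.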

The next paper focused on Account Automation Services (AASs). These are services in which users provide their Instagram credentials to third party actors who use them to perform actions on the user’s behalf to artificially manipulate their social standing (in violation of Instagram’s Terms of Use). Using independent “honeypot accounts” the authors engaged with five large account automation services: Instalex, Instazood, Followersgratis, Boostgram and Hublaagram. They identified two techniques these AASs use. Reciprocity abuse exploits the fact that some users follow or like an unknown user in response to that user following them or liking their content. The second method, collusion networks, uses the AAS’s own network, so that each customer account is used to follow or like other customers. The authors find that AASs can attract and maintain long-term customers and generate revenues of between $200k and $900k per month. They also show that interventions such as blocking actions from a given AAS quickly provoke adaptation. Deferred removal (removing service actions a day later), on the other hand, is more likely to go unanswered.

The last paper focused on phishing websites, which are used to deceive users and penetrate their accounts or critical networks. The authors study squatting phishing domains, where the websites impersonate trusted entities at the page content level and the web domain level. They scanned five types of squatting domains (homograph, typo, bits, combo and wrong TLD) over 224 million DNS records and identified 657K domains that are likely impersonating 702 popular brands. Their machine learning classifier is built on measuring the evasive behaviours of phishing pages and introduces new features such as visual analysis and optical character recognition (OCR) to overcome the heavy content obfuscation from attackers. The authors show that the 1175 squatting phishing pages they discovered are used for various targeted scams and are highly effective in evading detection: more than 90% evaded popular blacklists for at least a month.
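To make the squatting types concrete, the sketch below generates candidates for four of the five categories (homographs are left out to keep it short). It is our own illustration, not the authors' scanner, and the brand name, TLD list and keywords are hypothetical examples.

```python
import string

VALID_CHARS = set(string.ascii_lowercase + string.digits + "-")


def typo_squats(name: str) -> set[str]:
    """Typo candidates made by dropping a single character."""
    return {name[:i] + name[i + 1:] for i in range(len(name))} - {name}


def bit_squats(name: str) -> set[str]:
    """Candidates that differ from `name` by one flipped bit in one character."""
    candidates = set()
    for i, char in enumerate(name):
        for bit in range(8):
            flipped = chr(ord(char) ^ (1 << bit))
            if flipped in VALID_CHARS and flipped != char:
                candidate = name[:i] + flipped + name[i + 1:]
                # Labels may not start or end with a hyphen.
                if not (candidate.startswith("-") or candidate.endswith("-")):
                    candidates.add(candidate)
    return candidates


def combo_squats(name: str, keywords: list[str]) -> set[str]:
    """Brand name combined with an extra keyword."""
    return {f"{name}-{keyword}" for keyword in keywords}


def wrong_tld_squats(name: str, real_tld: str, other_tlds: list[str]) -> set[str]:
    """The same label registered under a different TLD."""
    return {f"{name}.{tld}" for tld in other_tlds if tld != real_tld}


# Hypothetical inputs for illustration.
brand = "facebook"
print(sorted(typo_squats(brand))[:3])
print(sorted(bit_squats(brand))[:3])
print(sorted(combo_squats(brand, ["login", "secure"])))
print(sorted(wrong_tld_squats(brand, "com", ["com", "org", "net"])))
```

Generating candidates is the easy part. The hard part, which the paper addresses, is deciding which of the registered candidates actually host phishing content.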

- Gergana

31 October 2018, 10:00 EDT

Day 1: Opening Plenary

The conference started with a room packed to the brim. The chair explained we can expect to see around 250 attendees today, making it the largest IMC to date. Most of the participants, unsurprisingly, come from academia, and about 80 come from industry. Out of the 108 students attending, 36 have received travel grants.

You can find more information about the conference, including live streaming, on the conference website.

Awards

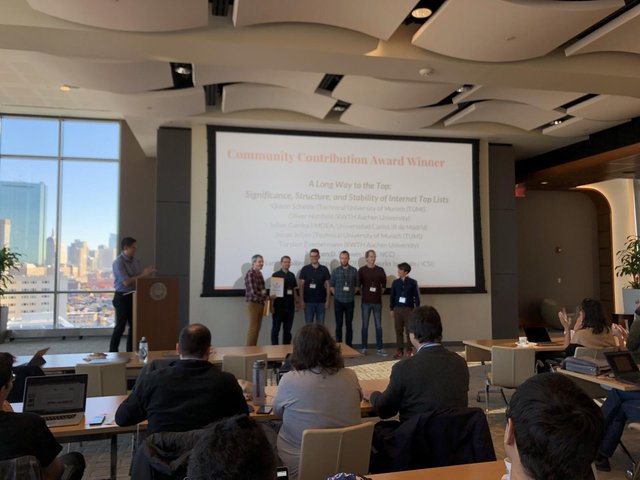

Out of the 174 papers submitted to the conference, only 43 were accepted. Several awards were presented during the opening plenary. The Community Contribution Award went to A Long Way to the Top: Significance, Structure, and Stability of Internet Top Lists. The chair commended the paper for analysing lists of target domains such as Alexa, showing that rank manipulation is possible for some lists, and suggesting steps to avoid bias.

Among the authors of the paper which won the Community Contribution Award are RIPE NCC's own Stephen Strowes and former RACI attendee Julien Gamba, IMDEA.

The Distinguished Paper Award was split three-way between Coming of Age: A Longitudinal Study of TLS Deployment, Tracing Cross Border Web Tracking and On the Origins of Memes by Means of Fringe Web Communities.

- Gergana
