Last year we discovered a DNS vulnerability that, combined with a configuration error, can lead to massive DNS traffic surges. Since then, we’ve studied it carefully, carried out responsible disclosure, helped large operators such as Google and Cisco fix their services, and submitted an Internet Draft to the IETF DNS working group to mitigate it.

The DNS is one of the core services of the Internet. Every web page visit requires multiple DNS queries and responses. When it breaks, the DNS ends up on the front pages of prominent news sites.

My colleagues and I at SIDN Labs, TU Delft, IE Domain Registry and USC/ISI recently came across a DNS vulnerability that can be exploited to create traffic surges on authoritative DNS servers, the ones that know the contents of entire DNS zones such as .org and .com. We first noticed it when comparing traffic at the authoritative servers of the Netherlands’ .nl zone and New Zealand’s .nz zone for a study on Internet centralisation.

Digging into what we saw, we recognised that a known problem was actually much more serious than anticipated. The vulnerability, which we named tsuNAME, is caused by loops in DNS zones (known as cyclic dependencies) combined with bogus resolvers, clients, or forwarders. We analyse it in detail in our research paper.

Importantly, current RFCs do not fully cover the problem. They prevent resolvers from looping, but they do not prevent clients and forwarders from looping, and a looping client or forwarder will then cause the resolver to flood the authoritative servers.

In response, we’ve written an Internet Draft for the IETF DNS working group on how to fix it, and developed CycleHunter, a tool that detects such loops so that operators can prevent these attacks. We have also carried out responsible disclosure, working with Google and Cisco engineers, who mitigated the issue on their public DNS servers.

Below is a brief summary of tsuNAME and our research so far.

What causes the tsuNAME vulnerability?

A tsuNAME loop requires two DNS records that are misconfigured so that they point at each other, such as:

#.com zone:

example.com NS cat.example.nl

#.nl zone:

example.nl NS dog.example.com

In this example, a resolver trying to resolve a name under example.com learns from the .com authoritative servers that example.com is served by cat.example.nl. To find the address of cat.example.nl, it must query the .nl authoritative servers, which answer that example.nl is served by dog.example.com, a name under example.com. This closes the loop, so no domains under example.com or example.nl can be resolved.
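To make the loop concrete, here is a minimal Python sketch that follows the two referrals from the example. It is a simplified model, not how any real resolver is implemented: the hypothetical `DELEGATIONS` table holds a single NS record per domain, glue records are ignored, and the hypothetical `parent_domain()` naively keeps the last two labels of a hostname (which would be wrong for names under, say, .co.uk).

```python
from typing import Dict, List, Tuple

# Hypothetical model of the two misconfigured delegations from the example:
# each domain maps to the single nameserver hostname it is delegated to.
DELEGATIONS: Dict[str, str] = {
    "example.com": "cat.example.nl",  # from the .com zone
    "example.nl": "dog.example.com",  # from the .nl zone
}


def parent_domain(hostname: str) -> str:
    """Naively return the last two labels, e.g. cat.example.nl -> example.nl."""
    return ".".join(hostname.split(".")[-2:])


def trace_resolution(domain: str, delegations: Dict[str, str]) -> Tuple[List[str], bool]:
    """Follow referrals and return (domains visited, True if a loop was found)."""
    chain: List[str] = []
    current = domain
    while current in delegations:
        if current in chain:
            chain.append(current)  # back at a domain we already visited: a cycle
            return chain, True
        chain.append(current)
        # To reach the nameserver, the resolver must first resolve the
        # domain that the nameserver's hostname lives in.
        current = parent_domain(delegations[current])
    chain.append(current)  # left the model: assume this domain resolves normally
    return chain, False


print(trace_resolution("example.com", DELEGATIONS))
# (['example.com', 'example.nl', 'example.com'], True)
```

A real resolver has no `chain` list to consult for a single lookup beyond its own work limits, which is why the safeguards discussed below matter.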

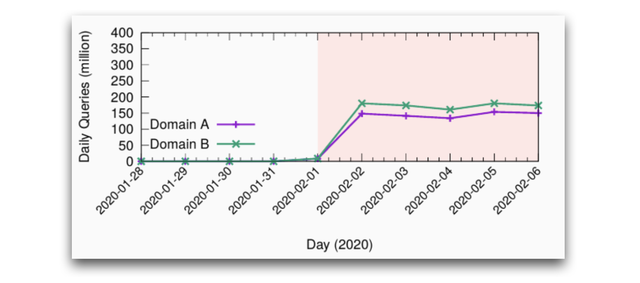

Why is this even a problem? Well, when a user queries a name under one of these domains, their resolver will follow this loop, amplifying one query into several. Some parts of the DNS resolving infrastructure (clients, resolvers, forwarders, and stub resolvers) cannot really cope with it. The result may look like Figure 1, which shows two domains under the .nz zone that had almost no traffic suddenly receiving more than 100 million queries a day each.

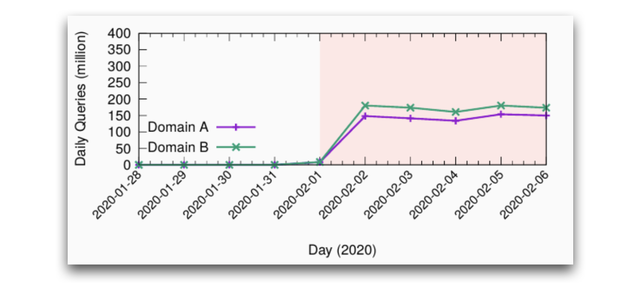

In this case, the .nz operators fixed the loops in their zone, which mitigated the problem. Before the fix, total .nz traffic had increased by 50% (Figure 2) because of just two misconfigured domain names.

Now, what would happen if an attacker controlled many domain names and misconfigured them with such loops, or sent many queries for them? In these cases a few queries could be amplified into a flood that might overwhelm the authoritative servers. This is a new amplification threat.

So why does this traffic surge occur?

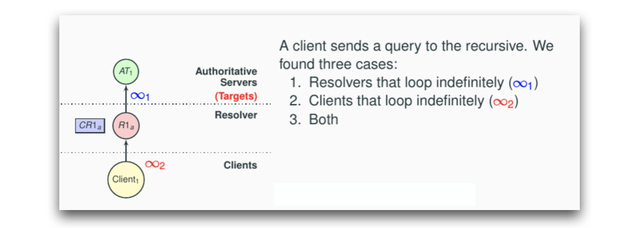

We found three root causes behind tsuNAME surges:

- Old resolvers (such as MS Windows 2008 DNS server) that loop indefinitely

- Clients/forwarders that loop indefinitely because they keep retrying after receiving SERVFAIL responses from resolvers (Figure 3)

- Or both of the above

This may all seem rather obvious but, as mentioned, it has not been fully considered at the IETF level, as the following relevant RFCs show:

- RFC1034 says: “resolvers should bound the amount of work ‘to avoid infinite loops’”

- RFC1035 (§7.2) says that resolvers should have “a per-request counter to limit work on a single request”

- RFC1536 says loops can occur, but offers no new solution
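The RFC1035 (§7.2) safeguard can be sketched as a per-request work counter. The Python below uses the same simplified delegation model (one NS record per domain, no glue, naive two-label `parent_domain()`); `MAX_WORK` and `resolve_bounded()` are hypothetical names, and the limit of 10 is an arbitrary illustration rather than a value prescribed by any RFC or resolver. It shows that a bounded resolver does terminate on the loop, but its answer to the client is SERVFAIL.

```python
from typing import Dict, Tuple

MAX_WORK = 10  # hypothetical per-request budget of upstream queries


def parent_domain(hostname: str) -> str:
    """Naively return the last two labels, e.g. dog.example.com -> example.com."""
    return ".".join(hostname.split(".")[-2:])


def resolve_bounded(domain: str, delegations: Dict[str, str],
                    max_work: int = MAX_WORK) -> Tuple[str, int]:
    """Follow referrals, counting each simulated query to an authoritative server.

    Returns (response code, number of authoritative queries sent).
    """
    sent = 0
    current = domain
    while current in delegations:
        if sent >= max_work:
            return "SERVFAIL", sent  # budget exhausted: give up on this request
        sent += 1  # one referral query needed to follow the delegation of `current`
        current = parent_domain(delegations[current])
    return "NOERROR", sent


LOOP = {"example.com": "cat.example.nl", "example.nl": "dog.example.com"}
print(resolve_bounded("example.com", LOOP))  # ('SERVFAIL', 10)
```

The counter protects the resolver itself, but it says nothing about what happens when the client simply asks again.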

As you can see, current RFCs prevent only resolvers from looping. But what happens if the loop occurs outside resolvers?

Imagine a client that, after receiving SERVFAIL responses from its resolver (Figure 3), loops by sending a new query to the resolver every second. Under the current RFCs, every new query triggers a new set of queries from the resolver to the authoritative servers, even though each set is bounded.
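The arithmetic behind this is simple: each client retry costs the authoritative servers a full, bounded resolution attempt, so the total load grows linearly with the number of retries. The Python sketch below simulates it with the same simplified model; `looping_client_load()` and the one-query-per-second client are hypothetical, and the retry count is capped only so that the simulation terminates (a truly looping client never stops).

```python
from typing import Dict, Tuple


def parent_domain(hostname: str) -> str:
    """Naively return the last two labels of a hostname."""
    return ".".join(hostname.split(".")[-2:])


def resolve_bounded(domain: str, delegations: Dict[str, str],
                    max_work: int = 10) -> Tuple[str, int]:
    """Bounded resolver: returns (rcode, authoritative queries sent)."""
    sent = 0
    current = domain
    while current in delegations:
        if sent >= max_work:
            return "SERVFAIL", sent
        sent += 1
        current = parent_domain(delegations[current])
    return "NOERROR", sent


def looping_client_load(domain: str, delegations: Dict[str, str],
                        retries: int, max_work: int = 10) -> int:
    """Total authoritative queries caused by a client that retries on SERVFAIL."""
    total = 0
    for _ in range(retries):  # e.g. one retry per second
        rcode, sent = resolve_bounded(domain, delegations, max_work)
        total += sent  # the resolver starts from scratch for every new query
        if rcode != "SERVFAIL":
            break  # only a SERVFAIL makes this client retry
    return total


LOOP = {"example.com": "cat.example.nl", "example.nl": "dog.example.com"}
# One hour of retries, one per second, through a single resolver:
print(looping_client_load("example.com", LOOP, retries=3600))  # 36000
```

Every individual resolution stays within its budget, yet a single looping client still generates 36,000 authoritative queries per hour in this model; more clients or more looping domains multiply that further.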

This is what happened to Google Public DNS (GDNS) in February 2020: in our previous study on Internet centralisation, we found that GDNS sent far more A/AAAA queries to .nz than to .nl. Google followed the RFCs, and yet looping clients caused GDNS to send large volumes of queries to the .nz authoritative servers.

Fixing the tsuNAME problem

How to fix tsuNAME depends on which part of the DNS infrastructure you operate.

If you are a resolver operator, the solution is simple: resolvers should cache these looping records, so that every new query from clients, forwarders, and stubs can be answered directly from cache, protecting the authoritative servers. That is what we have proposed in our IETF draft, and that is how Google Public DNS fixed it.
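A minimal Python sketch of that idea, assuming the same simplified delegation model: the hypothetical `CachingResolver` remembers, for `loop_ttl` seconds, that resolving a name ended in SERVFAIL, and answers repeated client queries from that cache instead of going back to the authoritative servers. The 60-second TTL is an arbitrary choice for illustration, not a value taken from the draft, and a real resolver would cache the looping delegation records rather than the individual query name as done here.

```python
from typing import Dict, Tuple


def parent_domain(hostname: str) -> str:
    """Naively return the last two labels of a hostname."""
    return ".".join(hostname.split(".")[-2:])


def resolve_bounded(domain: str, delegations: Dict[str, str],
                    max_work: int = 10) -> Tuple[str, int]:
    """Bounded resolver: returns (rcode, authoritative queries sent)."""
    sent = 0
    current = domain
    while current in delegations:
        if sent >= max_work:
            return "SERVFAIL", sent
        sent += 1
        current = parent_domain(delegations[current])
    return "NOERROR", sent


class CachingResolver:
    """Hypothetical resolver that caches loop failures for loop_ttl seconds."""

    def __init__(self, delegations: Dict[str, str], max_work: int = 10,
                 loop_ttl: float = 60.0) -> None:
        self.delegations = delegations
        self.max_work = max_work
        self.loop_ttl = loop_ttl
        self.failure_cache: Dict[str, float] = {}  # domain -> cache expiry time
        self.authoritative_queries = 0

    def query(self, domain: str, now: float) -> str:
        expiry = self.failure_cache.get(domain)
        if expiry is not None and now < expiry:
            return "SERVFAIL"  # answered from cache: nothing sent upstream
        rcode, sent = resolve_bounded(domain, self.delegations, self.max_work)
        self.authoritative_queries += sent
        if rcode == "SERVFAIL":
            self.failure_cache[domain] = now + self.loop_ttl
        return rcode


LOOP = {"example.com": "cat.example.nl", "example.nl": "dog.example.com"}
resolver = CachingResolver(LOOP)
for second in range(3600):  # a looping client: one query per second for an hour
    resolver.query("example.com", now=float(second))
print(resolver.authoritative_queries)  # 600
```

Without the cache, each of this client's 3,600 queries would trigger a full bounded attempt of 10 upstream queries (36,000 in total); with it, the sketch sends 600, one attempt per TTL window.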

If you are an authoritative server operator, you need to make sure there are no misconfigurations such as loops in your zone files. To help with this, we have developed CycleHunter, an open-source tool that detects such loops in DNS zones. We suggest operators regularly check their zones for loops.
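CycleHunter’s own implementation is not reproduced here; instead, here is a minimal Python sketch of the kind of check it enables, under stated assumptions. The hypothetical `find_unresolvable()` takes NS records as (domain, nameserver hostname) pairs, treats a nameserver inside its own domain as reachable via glue, assumes any domain with no NS records in the input resolves normally, and uses the same naive two-label `parent_domain()`. It repeatedly marks a domain as resolvable once any of its nameservers is reachable; whatever is never marked is stuck in, or depends on, a loop.

```python
from collections import defaultdict
from typing import DefaultDict, Iterable, List, Set, Tuple


def parent_domain(hostname: str) -> str:
    """Naively return the last two labels, e.g. ns.b.net -> b.net."""
    return ".".join(hostname.split(".")[-2:])


def find_unresolvable(ns_records: Iterable[Tuple[str, str]]) -> List[str]:
    """Return domains whose every nameserver path leads back into a cycle."""
    ns_by_domain: DefaultDict[str, Set[str]] = defaultdict(set)
    for domain, ns_host in ns_records:
        ns_by_domain[domain.lower().rstrip(".")].add(ns_host.lower().rstrip("."))

    resolvable: Set[str] = set()
    changed = True
    while changed:  # iterate until no new domain becomes resolvable
        changed = False
        for domain, hosts in ns_by_domain.items():
            if domain in resolvable:
                continue
            for host in hosts:
                dep = parent_domain(host)
                # Reachable if the NS is in-bailiwick (glue), outside the
                # input (assumed fine), or under an already-resolvable domain.
                if dep == domain or dep not in ns_by_domain or dep in resolvable:
                    resolvable.add(domain)
                    changed = True
                    break
    return sorted(set(ns_by_domain) - resolvable)


records = [
    ("example.com", "cat.example.nl"),
    ("example.nl", "dog.example.com"),
    ("example.org", "ns1.example.org"),
    ("a.net", "ns.b.net"),
    ("b.net", "ns.a.net"),
    ("b.net", "ns1.provider.io"),  # a second NS gives b.net a way out of the cycle
]
print(find_unresolvable(records))  # ['example.com', 'example.nl']
```

The a.net/b.net pair is cyclic but not flagged, because b.net has a second nameserver outside the cycle; a check that only looks for cycles would report it as well, which may still be worth reviewing.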

Responsible disclosure

We reproduced tsuNAME in various scenarios and from various vantage points, including RIPE Atlas and a sinkhole (see our paper for details). We found more than 3,600 resolvers in 2,600 Autonomous Systems that were vulnerable to tsuNAME.

We therefore carried out responsible disclosure to notify operators. We notified Google, Cisco, and multiple other parties in early 2021, and on 6 May we publicly disclosed the vulnerability, giving the parties enough time to fix their bugs, which they had done.

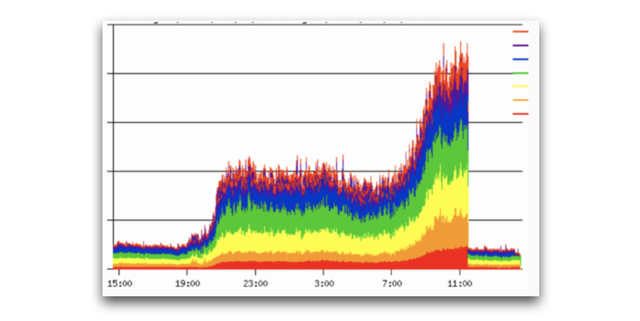

After the disclosure, several TLD operators approached us and said they had been victims of tsuNAME-related events in the past. One of them, an EU-based ccTLD operator, was kind enough to share a time series of its traffic (Figure 4), which shows 10x more traffic during a tsuNAME event:

Each line corresponds to a different authoritative server. The event starts around 19:00 UTC and ends at 11:00 UTC the next day, when the cyclic dependencies were removed from the zone.

What’s next

We’ve shown that, although the problem is old, loops in DNS zones are still an issue, and current RFCs do not fully cover it. We have also scrutinised tsuNAME, a type of event previously seen by many operators but not publicly known, and shown its root causes and how it can be weaponised.

We will continue to work on a revised version of our Internet Draft that incorporates feedback from the community. Regardless of what happens to the draft, we are happy to have helped Google and Cisco protect their public DNS servers against this vulnerability, making the Internet slightly safer.

Further reading:

- Peer-reviewed paper (ACM IMC 2021)

- https://tsuname.io website

- IETF 112 presentation @ MAPRG (video, slides)

- IETF draft draft-moura-dnsop-negative-cache-loop-00

- DNS OARC 34 private disclosure

- DNS OARC 35 public disclosure

- RIPE83 presentation on responsible disclosure (video, slides)
