Our recent cloud migration is one of the most significant changes to RIPE Atlas in recent years, aimed at modernising our infrastructure and reducing costs. In this article, we give you a look at what’s been happening behind the scenes, some of the complexity involved, and how we’ll be improving the service now that this work has been completed.

RIPE Atlas has been in constant operation since it was launched at RIPE 61 in 2010. In the 14 years since then, the supporting infrastructure and code base have gone through several large evolutions. More recently, we have been rethinking how we store and serve measurement results, how to optimise the infrastructure, and where to use cloud technologies for this. A big factor in prioritising these tasks has been the clear ask from our membership to reduce costs, which this change should allow us to do.

As with any large-scale transition, this process brought some unexpected challenges, which were visible externally as significant network instability. In this article, we're taking a closer look at some of this, focusing on two key areas of this work: the overhaul of our measurement result storage system and the renewal of our probe controlling infrastructure.

Result storage renewal

RIPE Atlas's long-term datasets (results, latest, aggregations) have been stored in an on-premises Apache HBase cluster since the early days of the service. Data was stored multiple times to allow different retrieval queries and redundancy, providing a convenient interface for the API implementation. This HBase cluster has grown over time, which made it a good place to start in terms of reducing our data centre footprint. The storage, totalling 1PB (3PB with HBase replication), was mostly taken up by measurement results (97.6%).

Our RIPE Atlas controllers accept measurement results with a maximum delay of two weeks from the time they are taken, allowing for latecomers with temporary connectivity issues affecting their probes. After two weeks the data may be considered static, therefore these "older" measurement results provided an ideal candidate for an alternative storage solution that could vastly decrease the amount of data stored in HBase.

An extensive analysis of RIPE Atlas API query patterns provided several insights on usage. The load is pretty much constant throughout the day, with an average of 45 read requests per second and 25 write requests per second. Most requests target relatively small HBase tables, such as the latest results of each measurement. Regarding the endpoint for the retrieval of measurement results, a significant percentage of queries are for latest or most recent data, with decreasing interest for historical data that drops significantly for anything older than two years.

The opportunity to use a different storage approach than HBase for this historical static data was very clear, while at the same time we wanted to maintain the same functionality provided by the API without any disruption to the service, and of course keeping all the data we have collected since the beginning of the project.

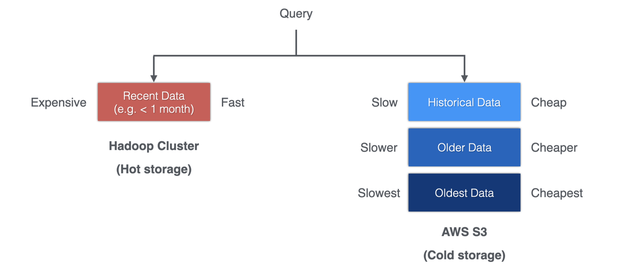

Cloud storage (namely AWS S3) was considered the ideal candidate for these static datasets. However, we wanted to avoid relying on proprietary interfaces for querying, which would have increased costs and created additional dependencies.

We decided that results of periodic measurements could be archived on a daily basis, once the window for late results had passed. In the new era, these are stored as compressed (bzip2) objects in the cloud, named by date to allow quick retrieval. To allow filtering by probe, the actual compressed daily objects are constructed as a concatenation of individual compressed files, each representing the results provided by a probe. We chose bzip2 precisely for its support of concatenation.

The position and length of each compressed probe segment are stored in a dedicated index, which allows us to retrieve the results of a specific probe directly, using an HTTP Range request to the compressed object in cloud storage. If further time filtering is required, the daily results stream is parsed in-memory by the API servers to exclude results outside the requested range.
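As a rough illustration of the layout described above, the following sketch builds a daily object from per-probe bzip2 streams, records each segment's offset and length, and reads one probe back by slicing the bytes a ranged GET would return. The function names, the newline-delimited JSON format and the sample records are assumptions made for the example, not the production code; the byte slice stands in for the actual request to cloud storage.

```python
import bz2
import json


def build_daily_object(results_by_probe):
    """Concatenate one bzip2 stream per probe; return (blob, index).

    index maps probe id -> (offset, length) of that probe's segment.
    """
    blob = bytearray()
    index = {}
    for probe_id in sorted(results_by_probe):
        lines = "".join(json.dumps(r) + "\n" for r in results_by_probe[probe_id])
        segment = bz2.compress(lines.encode("utf-8"))
        index[probe_id] = (len(blob), len(segment))
        blob += segment
    return bytes(blob), index


def range_header(offset, length):
    """HTTP Range header value for a segment (byte positions are inclusive)."""
    return f"bytes={offset}-{offset + length - 1}"


def read_probe(blob, index, probe_id):
    """Decompress a single probe's segment."""
    offset, length = index[probe_id]
    segment = blob[offset:offset + length]  # stands in for the ranged GET body
    return [json.loads(line) for line in bz2.decompress(segment).splitlines()]


def read_all(blob):
    """Decompress the whole object; bz2.decompress accepts concatenated streams."""
    return [json.loads(line) for line in bz2.decompress(blob).splitlines()]


# Hypothetical sample data for two probes on one day.
day = {
    1001: [{"prb_id": 1001, "timestamp": 1727740800, "rtt": 12.5}],
    1002: [{"prb_id": 1002, "timestamp": 1727740860, "rtt": 48.1},
           {"prb_id": 1002, "timestamp": 1727744460, "rtt": 47.9}],
}
blob, index = build_daily_object(day)
```

Because every segment is a complete bzip2 stream, the same object can be decompressed in full with `read_all(blob)` or per probe with `read_probe(blob, index, 1002)`, without any provider-specific query interface.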

One-off measurements have a slightly different implementation, as they are much smaller and do not usually require filtering by probe. They are therefore aggregated in a single compressed archive on a daily basis, again storing their position in the archive in a dedicated index. The rarely-used probe or timestamp filtering is executed in-memory during their retrieval.

Given that measurements and API requests can span across a large time range, we have to support the retrieval from both backends (HBase for "hot" recent data, cloud storage for the historical "cold" results) and provide a seamless streaming experience. Whenever a request for measurement results hits the API, a planner component evaluates the measurement metadata and the index of the archived results to determine where to retrieve the data from. Recent data is streamed first, and historical data is subsequently attached to the stream. (It’s worth noting that as a side-effect of this, result downloads spanning multiple days don’t necessarily come in chronological order any more.)

This planning component can support multiple data backends and combinations of time ranges, though at present we use only two backends (HBase and S3) with three possible combinations (recent data only, historical data only, and recent + historical).
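The three combinations can be sketched with a simplified planner that only looks at the requested time range. This is an assumption-laden illustration: the real planner also consults measurement metadata and the archive index, and the 14-day cutoff, the midnight alignment and the backend labels are hypothetical choices made for the example.

```python
from datetime import datetime, timedelta, timezone

# Assumed hot/cold boundary, based on the two-week window for late results.
HOT_WINDOW = timedelta(days=14)


def plan(start, stop, now):
    """Return the ordered (backend, start, stop) fetches for the range [start, stop).

    Recent ("hot") data is listed first, matching the streaming order
    described in the article.
    """
    # Assumption: the boundary falls on a UTC midnight because archiving is daily.
    cutoff = (now - HOT_WINDOW).replace(hour=0, minute=0, second=0, microsecond=0)
    steps = []
    if stop > cutoff:
        steps.append(("hbase", max(start, cutoff), stop))
    if start < cutoff:
        steps.append(("s3", start, min(stop, cutoff)))
    return steps


now = datetime(2024, 10, 1, 12, 0, tzinfo=timezone.utc)
mixed = plan(datetime(2024, 9, 1, tzinfo=timezone.utc),
             datetime(2024, 10, 1, tzinfo=timezone.utc), now)
```

In this example `mixed` contains an HBase step followed by an S3 step, which is also why a download spanning both backends is not returned in chronological order.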

Once the API codebase was updated with support for the retrieval of historical data from cloud storage, the data itself was gradually migrated over several months. This migration consisted of extracting, compressing and concatenating the results, verifying the outcome to make sure no data was lost in the process. This migration was successfully completed at the end of July.

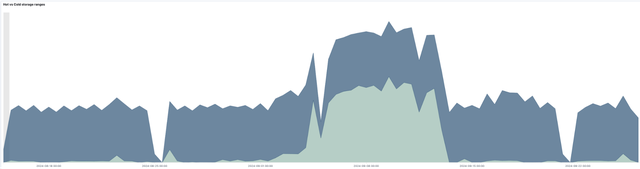

In the following diagram we can see retrieval patterns for the month of September 2024. Occasional research campaigns may target historical data, but on average its demand is low in comparison to recent measurements.

The expected increase in CPU utilisation in the API servers, due to in-memory decompression of the stream from the cloud storage, and the occasional processing and filtering, has proven to be negligible. Response times have slightly increased for historical data, given the additional latency in initiating the data transfer from cloud storage, in comparison with the previous on-premises solution. However, once the data stream begins (usually within a few hundred milliseconds), it remains stable even for large datasets, while in the past glitches in HBase would have inevitably interrupted the data stream.

Nowadays, HBase remains in place for recent results (two weeks) and for all other RIPE Atlas tables, including the index for the compressed objects. An additional two weeks of measurements are also kept in HBase to cater for potential issues in the archival process.

On a daily basis, results older than two weeks are indexed and archived, processing approx. 200GB of data, with the generation of 50GB compressed objects that are uploaded to cloud storage. The total size for the archived results in AWS S3 is 114TB, as of the end of September 2024.

For the future, further cost optimisations could be achieved with different storage classes for objects with infrequent access. Given that we use cloud storage as-is, without any additional application layer from the cloud provider, we are open to considering alternative storage providers, as long as they support HTTP Range requests.

In the future, we may have to restrict time filtering capabilities for historical data if the current in-memory time filtering proves to be too demanding (and costly) in response to malformed query patterns. The ideal time-filtering query should request results from 00:00 to 23:59; anything else will trigger internal processing. At present, we discard approximately 20% of the data retrieved from S3. For now, access to this S3 bucket is not open to the public, but we are also considering providing direct access to S3 on demand.
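To make the difference between the two kinds of query concrete, here is a minimal sketch that checks whether a requested range covers whole UTC days and, if not, filters results one by one. It assumes Unix timestamps in seconds, an inclusive stop time at the last second of the day, and newline-delimited JSON results with a `timestamp` field; the function names are hypothetical.

```python
import json

DAY = 86400  # seconds in a UTC day


def is_day_aligned(start, stop):
    """True if [start, stop] spans whole UTC days (00:00:00 to 23:59:59)."""
    return start <= stop and start % DAY == 0 and (stop + 1) % DAY == 0


def filter_results(lines, start, stop):
    """Yield only the results whose timestamp falls inside [start, stop]."""
    for line in lines:
        result = json.loads(line)
        if start <= result["timestamp"] <= stop:
            yield result


midnight = 1727740800  # 2024-10-01 00:00:00 UTC
sample = [
    '{"timestamp": 1727740800}',
    '{"timestamp": 1727744400}',
    '{"timestamp": 1727748000}',
]
kept = list(filter_results(sample, 1727744000, 1727745000))
```

A day-aligned request such as `is_day_aligned(midnight, midnight + DAY - 1)` can be served by streaming the daily objects as they are, while the partial range above forces every line to be parsed and two of the three sample results to be discarded.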

Infrastructure renewal

Until recently, all of the RIPE Atlas system components were hosted on a mix of self-managed (virtual) machines in data centres, and rented VPS services.

While containers were used for automated testing and other parts of the continuous integration pipelines, all of these machines were provisioned using SALT. This works well for day-to-day operations, but requires a considerable investment of time when it comes to major upgrades, such as moving between major OS versions, which can require multiple changes to the formulas.

Although many parts of the RIPE Atlas system have generally predictable load, almost all of the parts can experience peaks in demand during extraordinary events, such as a big influx of new measurements or probes reconnecting after a network outage. The barriers to adding and removing machinery (measured in time and labour) mean that there needs to be sufficient capacity held in reserve to handle these peaks. In practice, there have been times over the years where some parts would struggle for a while to catch up with a backlog, causing delays or otherwise impacting service, before eventually recovering.

As part of the effort to reduce our data centre footprint, the RIPE NCC has started building Kubernetes clusters for running some of our applications. These clusters use a suite of supporting parts to provide the "plumbing" universally required by the applications that run on them, including:

- Argo CD for continuous delivery

- Karpenter for node autoscaling

- VictoriaMetrics for observability

- Fluent Bit for processing container logs

- Loki and Grafana for exploring and visualising logs

Care has been taken to avoid vendor lock-in (see our Cloud Strategy Framework) by largely using open tools and standards.

Applications running on these clusters are able to be scaled up and down as demand requires, and because they are run as containers there is a much looser dependency between them and the machinery they run on. Changing things like the Python version or supporting libraries is much easier than before. Given the footprint of the RIPE Atlas infrastructure, and the fact that some of the hardware and software involved was reaching end-of-life status, it was seen as a good candidate for moving to a new Kubernetes cluster.

Recent work on controllers (by now: “kontrollers”)

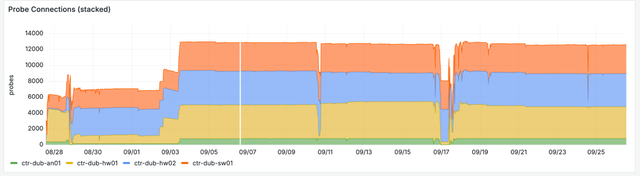

The first target for renewal was the RIPE Atlas probe (and anchor) control infrastructure, specifically the machines known as the "controllers". These are machines that probes connect to in order to receive measurement instructions, and that process results and forward them to the central storage backend. There were around 40 of these controllers running as on-prem hardware or VMs on VPS providers in six locations around the world. Importantly, each controller was self-contained and capable of managing its sub-population of probes for some time independently, even without communication with the central RIPE Atlas systems.

As can be expected, this decentralisation came with an efficiency cost. A sizable amount of the global controller capacity was sitting idle most of the time, but there were often times when some or all of the controllers were overwhelmed by processing backlogs, leading to spikes in the time it took to schedule measurements and process results. These spikes could be helped along with various techniques, such as manually redistributing probes between controllers, but this needed to be handled carefully to avoid thundering herds of probes leaving one part of the network and overwhelming another part.

The new controller infrastructure aims to improve on efficiency, while allowing more or less decentralisation and redundancy as needed. The controllers now run as collections of workloads in Kubernetes namespaces, from the server processes that accept the probe connections, to consumers of probe result queues. Each of the parts with variable load is capable of scaling up or down as needed using the Horizontal Pod Autoscaler. If there is a large increase in collected results, more result processors are automatically started. If there are many new probes connecting to the system, processes are started to prepare the system to accept them.
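The scaling decision itself follows the rule documented for the Kubernetes Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to its target, rounded up. The sketch below shows only that core rule; the tolerance band and stabilisation windows of the real autoscaler are left out, and the metric (queued results per processor) and the replica bounds are assumptions chosen for the example.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Core HPA rule: scale by the ratio of observed to target metric."""
    desired = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds (hypothetical defaults here).
    return max(min_replicas, min(max_replicas, desired))


# Four result processors, each seeing 250 queued results against a target of 100.
scaled_up = desired_replicas(4, 250, 100, max_replicas=20)
# The same four processors during a quiet period.
scaled_down = desired_replicas(4, 30, 100)
```

With these numbers the burst calls for 10 processors and the quiet period for 2, which is the behaviour that removes the need to hold a large fixed reserve of capacity.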

There were design challenges to moving the controllers to Kubernetes. The command and control protocol between the controllers and the probes is based on long-running SSH connections, with port forwarding in both directions to provide a full duplex communication channel. There were two major challenges when moving to the new world.

First, what is essentially a highly locked down and secure multi-user Unix auth system is not a natural fit for a containerized world. The initial prototype of the "new" controller was an attempt to directly port the old implementation in as secure a way as possible. With a bit of finessing, it’s possible to run SSHD in an unprivileged container as an application user. By using weird and wonderful techniques, like strict (and temperamental) SELinux pod security contexts, or more classical (and terrifying) techniques such as painstakingly-written setuid wrappers, it was even possible to nearly completely isolate multiple SSH sessions running as the same application from each other and from the SSH daemon. But there were loose ends that still needed tying up, and this all felt like a lot of complexity for a new and unproven system.

The solution we opted for involves a pure Python server, which still speaks the SSH protocol without the complexity and security considerations of having to deal with the Linux auth system or TCP port forwarding. From the probe’s point of view, the controller behaves identically to its predecessors, except that everything takes place within a single well-defined server. The probe can request an HTTP connection to submit results, except in reality there is no real TCP connection created. The probe asks to expose its telnet port, but no listening port is created anywhere on the Kubernetes node. Each container in this setup can handle hundreds of RIPE Atlas probes, and acts as their gateway to the backend. This gateway is transparent to the application-specific logic of measurements and results, so that the vast majority of functional changes to the system do not require probes to be disconnected, and in principle they can stay connected for days, weeks or months.
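The idea of answering forwarding requests without opening sockets can be illustrated with a small, library-free sketch. Everything here is hypothetical: the class, the handler signature, and the host and port values are invented for the example, and the SSH protocol handling that would sit in front of it is omitted entirely.

```python
class VirtualForwarder:
    """Maps forwarding requests to in-process handlers instead of real sockets."""

    def __init__(self):
        self.handlers = {}

    def register(self, host, port, handler):
        """Associate a (host, port) destination with a Python callable."""
        self.handlers[(host, port)] = handler

    def open_channel(self, host, port, payload):
        """Serve a forwarding request in-process; no TCP connection is made."""
        handler = self.handlers.get((host, port))
        if handler is None:
            raise ConnectionRefusedError(f"no virtual service at {host}:{port}")
        return handler(payload)


received = []


def store_results(payload):
    """Hypothetical result handler: queue the payload and acknowledge it."""
    received.append(payload)
    return b"OK"


forwarder = VirtualForwarder()
forwarder.register("127.0.0.1", 8080, store_results)
reply = forwarder.open_channel("127.0.0.1", 8080, b'{"prb_id": 1001}')
```

From the client's side the request looks like an ordinary port forward, while on the server it is just a function call, so there is nothing listening on the node that would need to be isolated or secured.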

The second major challenge was exactly due to these long-lived connections. A classical Kubernetes application consists of workloads which can be considered transient in nature, handling a number of requests before possibly being stopped or moved. In our case, any termination of the probe’s TCP connection is undesirable, even if followed by an immediate reconnect. Care had to be taken to make sure the cluster treated the SSH containers as permanent residents that wouldn’t be replaced at a moment’s notice; such replacements contributed to some of the system instability experienced recently. Moreover, the load balancing provided by the built-in Kubernetes network proxy (kube-proxy) is not well suited to persistent connections, tending to distribute sessions and, therefore, memory usage, in a highly imbalanced fashion. We now have a workaround for this issue that involves the containers themselves limiting incoming connections, but we are looking into alternatives such as service meshes and in-cluster load balancing to provide a firmer solution.
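A container-side connection cap of the kind mentioned above could look roughly like the following. This is a sketch under assumptions: the class name, the per-container limit and the use of probe IDs as session keys are invented for illustration, and it relies on a refused probe reconnecting and landing on a less loaded container.

```python
class ConnectionLimiter:
    """Caps the number of concurrent sessions a single container will accept."""

    def __init__(self, max_sessions):
        self.max_sessions = max_sessions
        self.active = set()

    def try_accept(self, probe_id):
        """Return True and track the session, or False if the container is full."""
        if probe_id not in self.active and len(self.active) >= self.max_sessions:
            return False
        self.active.add(probe_id)
        return True

    def release(self, probe_id):
        """Forget a session when the probe disconnects."""
        self.active.discard(probe_id)


limiter = ConnectionLimiter(max_sessions=2)
accepted = [limiter.try_accept(p) for p in (1001, 1002, 1003)]
limiter.release(1001)
accepted_after_release = limiter.try_accept(1003)
```

The cap bounds memory usage per container regardless of how unevenly the proxy spreads new sessions, at the price of some probes needing more than one attempt to connect.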

The first probes were moved to the new infrastructure in mid-May 2024, and by September they had all migrated. The new systems are now stable, but there have been several periods of instability as they matured. Some of this was caused by issues described in the design considerations above, but there were other more general challenges too. In particular, burst limits on the nodes were the primary cause of two of the more serious outages, once for CPU and later for storage IO. The general lesson for managing a Kubernetes cluster is to carefully inspect the suitability of the underlying machinery for the given workloads.

Even though we were careful in scaling up the new system, some major issues were not foreseen and only revealed when thundering herds of probes attempted to (re-)connect. We are working on expanding our test environment to make it easier to run large-scale non-functional / load testing, although this is non-trivial given the nature of the load and the protocols involved.

Overall, the controllers are now functioning well. While the Kubernetes clusters are running on Amazon EKS, and use a small number of EKS-specific features, such as Service resources satisfied by Network Load Balancers, we do not foresee medium-term obstacles to moving to a competing vendor, or a RIPE NCC-managed in-house Kubernetes cluster, if necessary.

To address the degradation in service of the past month, all hosts whose probes were disconnected due to system instability will receive a one-off transfer of RIPE Atlas credits to compensate for the uptime rewards they would have received. We will also be contacting hosts of specific probes that we believe were put into a malfunctioning state by repeated disconnects, and that may benefit from being rebooted on site.

What we’ll be working on next

Now that the controllers are migrated, the other RIPE Atlas components will all transition from managed machines to containers in some form. Work on migrating the atlas.ripe.net website and API is well underway, and the other components will follow.

Conclusion

While this is only a sample of what we’ve been up to with RIPE Atlas over the past year or so, we hope this write-up provides some insight into this work and some of the challenges that come with it. We appreciate users’ patience while things got a little wobbly recently, and we are all working very hard to get things back on track. We aim to provide a reliable service that performs to our users’ satisfaction within the given requirements and controls that are available to us.
