“It's fine to celebrate success but it is more important to heed the lessons of failure.” (Bill Gates)

As we all know, RPKI is getting a lot of attention and traction nowadays. At the RIPE NCC, we operate one of the five Trust Anchors, a hosted RPKI service, and one of the Validator software packages. This is a big responsibility that we don’t take lightly. We’re constantly improving our code and procedures to ensure we follow the latest RFCs and best practices. Security is also of key importance.

Where people learn and a product matures, mistakes are made. We’re not happy about that, especially when users are impacted. But we learn and improve, and that’s why I’m writing this article: so you can learn along with us. At the RIPE NCC, we strongly believe in transparency, which is why we write a post-mortem after every outage. In this article, I’ll dig a bit deeper into the lessons we learned from our recent RPKI outages.

What should be the “correct” behaviour of validators?

The first issue we encountered this year started on Saturday 22 February with a disk problem that left us unable to write newly created, modified or deleted ROAs to our rsync server, which is where Validators fetch information from. From that moment on, the data were kept in the RPKI Database, but not published. This incident impacted 176 ROAs. The user running the application had hit a quota, and the disk didn’t report an error to our monitoring system, so our 24/7 engineers weren’t notified of the issue. Then things got worse. Because the information could not be refreshed, our Certificate Revocation List (CRL) expired on Sunday morning. The CRL is an important piece of information in RPKI that basically tells you whether you can trust the information that is published. A CRL has a limited lifetime for security reasons, so when it expires, you know something might be wrong.
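A check on the remaining lifetime of a CRL can be sketched as follows. This is a minimal illustration, not our actual monitoring: the function name `crl_freshness` and the eight-hour warning margin are assumptions, and in practice `next_update` would be read from the nextUpdate field of the published CRL.

```python
from datetime import datetime, timedelta, timezone


def crl_freshness(next_update, now, warn_margin=timedelta(hours=8)):
    """Classify a CRL by how close `now` is to its nextUpdate time."""
    if now >= next_update:
        return "expired"        # validators may already distrust the data
    if next_update - now <= warn_margin:
        return "expiring-soon"  # alert while there is still time to republish
    return "ok"


# Illustrative timestamps only; these are not the values from the incident.
next_update = datetime(2020, 1, 5, 6, 0, tzinfo=timezone.utc)
print(crl_freshness(next_update, datetime(2020, 1, 4, 12, 0, tzinfo=timezone.utc)))  # ok
print(crl_freshness(next_update, datetime(2020, 1, 5, 1, 0, tzinfo=timezone.utc)))   # expiring-soon
print(crl_freshness(next_update, datetime(2020, 1, 5, 7, 0, tzinfo=timezone.utc)))   # expired
```

Alerting on "expiring-soon" rather than on "expired" is the point of the sketch: it catches a stalled publication process before validators notice.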

On Monday morning, we received a notification from Job Snijders that our CRL had expired, and our engineers quickly found the disk problem. The disk problem was resolved in a few minutes, but then we had to make sure that the stalled objects got republished. At first, we attempted to republish all objects. Unfortunately, this didn’t work and we had to perform a full Certificate Authority (CA) key roll to update all objects. This took about seven hours to complete. You can find the post-mortem at: https://www.ripe.net/ripe/mail/archives/routing-wg/2020-February/004015.html

What was interesting about this CRL expiration was that not all Validators noticed it. Our own RIPE NCC Validator 3.1, for example, only issued a warning, while OpenBSD's rpki-client dropped the CRL and all underlying objects. This sparked a lengthy discussion in the IETF SIDROPS Working Group about how the different Validators behave and what the correct behaviour should be, as this was not clearly defined in any RFC.
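The difference between the two reactions can be illustrated with a toy model. This sketch does not reproduce the code of either Validator; the function `accept_objects` and the policy names "lenient" and "strict" are made up for this example.

```python
def accept_objects(objects, crl_expired, policy):
    """Return (accepted_objects, warnings) for one publication point."""
    if not crl_expired:
        return list(objects), []
    if policy == "lenient":
        # Keep using the objects, but tell the operator something is off.
        return list(objects), ["CRL expired; objects accepted anyway"]
    if policy == "strict":
        # Treat everything under the expired CRL as untrustworthy.
        return [], ["CRL expired; all underlying objects dropped"]
    raise ValueError(f"unknown policy: {policy}")


roas = ["roa-1", "roa-2"]  # placeholder object names
print(accept_objects(roas, crl_expired=True, policy="lenient"))
print(accept_objects(roas, crl_expired=True, policy="strict"))
```

With the same repository content, the two policies produce different sets of validated data, which is why the missing definition mattered.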

After this outage, we improved the monitoring of our disks and CRL to avoid another unnoticed expiration. On 28 April, the SIDROPS Working Group of the IETF agreed that RFC 6486 needs an update, in particular Section 6.5, which defines what should happen in case of a mismatch between a CRL and a publication point.

What happens if RPKI objects are not published in the right order?

There are a number of objects in RPKI: CAs, CRLs, Manifests and ROAs. If they are published in the wrong order, this can lead to a drop in Verified ROA Payloads (VRPs).

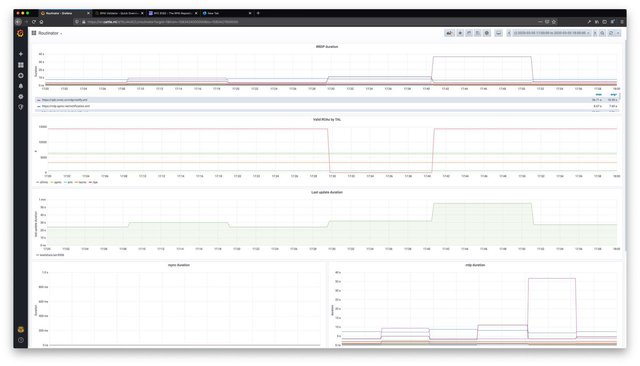

On 6 March, we encountered one of these interesting issues. While we were deploying an update to our RPKI infrastructure, the release caused RPKI objects to be published out of order. For a short period, we didn't update CAs and the corresponding Manifest at the same moment, which caused inconsistencies in the repository data.
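To see why ordering matters, consider how a validator can compare what it fetched against a Manifest, which lists each file in a publication point together with its SHA-256 hash. The sketch below is a simplified illustration: `check_against_manifest` is a hypothetical helper, the Manifest is modelled as a plain dictionary, and signature and validity checks are left out.

```python
import hashlib


def check_against_manifest(manifest, fetched):
    """Compare fetched files to a manifest.

    manifest: dict mapping file name -> expected SHA-256 hex digest
    fetched:  dict mapping file name -> file content as bytes
    Returns a sorted list of (file name, problem) tuples; empty means consistent.
    """
    problems = []
    for name, expected in manifest.items():
        data = fetched.get(name)
        if data is None:
            problems.append((name, "listed but missing"))
        elif hashlib.sha256(data).hexdigest() != expected:
            problems.append((name, "hash mismatch"))
    for name in fetched:
        if name not in manifest:
            problems.append((name, "present but not listed"))
    return sorted(problems)


# A new ROA was published, but the manifest still describes the old one.
old_roa, new_roa = b"old ROA bytes", b"new ROA bytes"
manifest = {"example.roa": hashlib.sha256(old_roa).hexdigest()}
print(check_against_manifest(manifest, {"example.roa": new_roa}))
# [('example.roa', 'hash mismatch')]
```

If a validator fetches during the window in which only one of the two has been updated, it sees exactly this kind of mismatch, and what it does next depends on the software.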

In this particular case, we were notified by one ISP that had issues because they ran two old instances of validation software, resulting in a mismatch of data, and route dampening on their routers.

We rolled back the update and are still working on a fix before we roll out a new release.

The day the ROAs were deleted

On 1 April, we suffered our most visible outage - one that we will not easily forget, as it impacted many parties. It started on Wednesday evening during our regular maintenance window for updates to our registry software.

Our RPKI infrastructure needs to know which resources are eligible for certification, for example Provider Aggregatable allocations, Provider Independent (PI) assignments, and AS Numbers. Our internal registry software periodically informs the RPKI system about updates. After the registry software was upgraded, it was rebooted, and while the system was still booting, our RPKI software queried the registry system. Because the registry system had not finished loading, the RPKI system missed a lot of PI assignments.

It is normal practice that when a resource is deleted from our registry, the corresponding ROAs are cleaned up automatically. And this is exactly where things went wrong. In this process, the system deleted 2669 ROAs for PI assignments. Because it is normal for ROAs to be cleaned up, and we had quite a high threshold for alerting, this was not noticed immediately by our engineers.
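One possible safeguard is to hold back an automatic clean-up when it would remove an unusually large share of ROAs. The following is only a sketch of that idea, not our production logic: `plan_roa_cleanup`, the 5% threshold and the exact-prefix matching are all assumptions made for the example.

```python
def plan_roa_cleanup(roas, eligible_prefixes, max_fraction=0.05):
    """Decide which ROAs a clean-up run would delete.

    roas:              list of (prefix, asn) tuples currently published
    eligible_prefixes: set of prefixes the registry reports as certifiable
    Returns (to_delete, needs_review). needs_review is True when the run
    would delete more than max_fraction of all ROAs and a human should
    confirm it before anything is removed.
    """
    to_delete = [roa for roa in roas if roa[0] not in eligible_prefixes]
    needs_review = len(to_delete) > max_fraction * len(roas)
    return to_delete, needs_review


# 100 placeholder ROAs; the registry answer is missing half of the prefixes.
roas = [(f"10.0.{i}.0/24", 64496) for i in range(100)]
incomplete_registry = {prefix for prefix, _ in roas[:50]}
to_delete, needs_review = plan_roa_cleanup(roas, incomplete_registry)
print(len(to_delete), needs_review)  # 50 True
```

A routine clean-up of a handful of ROAs passes straight through, while a registry answer that is suddenly missing many resources is flagged instead of acted upon.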

Some holders of PI assignments had set up alerts in our LIR Portal and received a notification that their ROAs were deleted. They reported this to our Customer Services team, who alerted the engineers the following morning.

The engineers immediately started investigating which ROAs had been deleted by our system, which ROAs had been re-created in the meantime, and which ROAs were still missing. We restored the missing ROAs in four hours, individually informed all impacted users, and published a post-mortem: https://www.ripe.net/ripe/mail/archives/routing-wg/2020-April/004072.html

Unfortunately, another big event occurred during this incident - Rostelecom had a big route leak that affected roughly 8800 prefixes: https://habr.com/en/company/qrator/blog/495260/

We immediately conducted an internal investigation into which routes were leaked, and whether any of our PI holders’ prefixes were impacted by this route leak (now that they suddenly didn’t have a ROA). We discovered that there was no direct relationship between our incident and the route leak, but three PI holders (12 prefixes) were impacted. Job Snijders also posted an independent analysis of this incident to the Routing Working Group mailing list that agrees with what we found ourselves.

Of course, we learned many important lessons here, and we adjusted our alerting metrics for both the registry software and the RPKI software. Apart from that, we started a broader project to collect a wider set of metrics from all systems, not only RPKI, to ensure better resiliency.

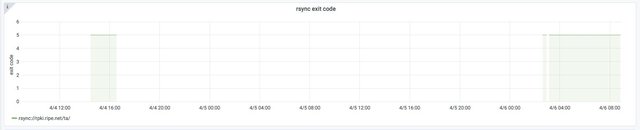

Is rsync necessary?

A few days later, on 4 and 6 April, we received notifications that the RPKI rsync repository had run out of connections. This means that any validators using rsync couldn’t establish connections to our repository at that time. Our engineers found out that a broken rsync client sent an invalid rsync packet every 20 seconds, and the connection wasn’t kept alive. We have since limited the number of connections per IP in our load balancer and increased the connection limit in rsync from 100 to 300. At the moment there is a draft in the SIDROPS Working Group that we support and want to move forward. Almost all repositories and relying party software support the alternative RRDP protocol, so we want to move away from rsync in due time. Again, we reported this incident to the RIPE Routing Working Group: https://www.ripe.net/ripe/mail/archives/routing-wg/2020-April/004084.html
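The effect of combining a per-IP cap with an overall connection limit can be sketched as below. This is an illustration only: the class `ConnectionLimiter` is hypothetical, the per-IP value of 5 is an assumption (only the overall limit of 300 comes from the change described above), and in our setup the two limits live in different components, the load balancer and rsync.

```python
from collections import Counter


class ConnectionLimiter:
    """Track open connections and refuse new ones beyond the limits."""

    def __init__(self, total_limit=300, per_ip_limit=5):
        self.total_limit = total_limit
        self.per_ip_limit = per_ip_limit
        self.open_by_ip = Counter()

    def try_open(self, ip):
        """Return True and count the connection if both limits allow it."""
        if sum(self.open_by_ip.values()) >= self.total_limit:
            return False
        if self.open_by_ip[ip] >= self.per_ip_limit:
            return False
        self.open_by_ip[ip] += 1
        return True

    def close(self, ip):
        """Release one connection previously opened for this IP."""
        if self.open_by_ip[ip] > 0:
            self.open_by_ip[ip] -= 1


limiter = ConnectionLimiter()
# A misbehaving client (documentation address) opens connections in a loop.
accepted = sum(limiter.try_open("192.0.2.1") for _ in range(400))
print(accepted)                          # 5
print(limiter.try_open("198.51.100.7"))  # True: other clients still get in
```

Without the per-IP cap, the same loop would have used up all 300 slots and locked out every other validator.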

Can RPKI be a “kill switch” for the Internet?

As I mentioned at RIPE 79, we are currently working on a large project called “RPKI Resiliency” that will take a deeper look into how we work and operate RPKI. Another article will dive deeper into that project.

On a slightly unrelated note, I would like to provide some clarity on a question that comes back regularly about the trust level of the five RIRs in RPKI and what happens if for example, by court order, we are mandated to revoke a certificate.

It’s a common misconception that the RIRs now have unprecedented power (a “kill switch”) over the Internet. RPKI is designed to “fail open”. This means that if a certificate gets revoked, the BGP announcements for those resources get the status “Not Found”. This is the state today for around 80% of the prefixes in BGP (https://rpki-monitor.antd.nist.gov/), so it is practically business as usual. The only downside is that those resources are no longer protected by RPKI. These routes will not be dropped, as only routes that appear as “Invalid” get dropped by parties who perform Origin Validation. In short - RPKI isn’t a “kill switch” and was never designed to be one.
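The three outcomes of Origin Validation can be sketched in a few lines. This is a simplified illustration of the procedure described in RFC 6811, not the code of any Validator or router; the function name `validate_origin`, the tuple layout of the VRPs, and the prefixes and AS Numbers (taken from the documentation ranges) are choices made for this example.

```python
import ipaddress


def validate_origin(prefix, origin_asn, vrps):
    """Classify a BGP announcement as "Valid", "Invalid" or "Not Found".

    vrps: iterable of (prefix, max_length, asn) tuples.
    """
    route = ipaddress.ip_network(prefix)
    covered = False
    for vrp_prefix, max_length, asn in vrps:
        vrp_net = ipaddress.ip_network(vrp_prefix)
        if route.version != vrp_net.version:
            continue  # IPv4 VRPs never cover IPv6 routes and vice versa
        if not route.subnet_of(vrp_net):
            continue  # this VRP says nothing about the announced prefix
        covered = True
        if asn == origin_asn and route.prefixlen <= max_length:
            return "Valid"
    # Covered by at least one VRP but matched by none: Invalid.
    # Covered by no VRP at all: Not Found, so the route is still accepted.
    return "Invalid" if covered else "Not Found"


vrps = [("192.0.2.0/24", 24, 64496)]
print(validate_origin("192.0.2.0/24", 64496, vrps))  # Valid
print(validate_origin("192.0.2.0/24", 64511, vrps))  # Invalid: wrong origin AS
print(validate_origin("192.0.2.0/25", 64496, vrps))  # Invalid: longer than max length
print(validate_origin("192.0.2.0/24", 64496, []))    # Not Found: no covering VRP
```

The last call shows the “fail open” case: once the VRP is gone, for instance because the certificate was revoked, the announcement falls back to “Not Found” instead of “Invalid”.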

I hope this article provides clarity and a good look “behind the scenes”. If you have any questions, we’re more than happy to answer them. You can reach us at rpki@ripe.net, and we’re also available during RIPE 80.

Comments 6

Daniel Rösen •

The "kill switch" mechanism isn't "revoking ROAs" but intentionally publishing bad ROAs. This makes the announcements "invalid" to validators and thus getting killed by more and more operators worldwide. RPKI is only "fail open" in case of "does not exist", but not in cases of "ROA broken", intentionally or not.

Nathalie •

Thanks for your comment Daniel. While I agree there is a risk in becoming compromised (and hence our effort in the "RPKI Resilience" project), what we see at the moment is that ROAs sometimes are created with different values than the intent in BGP, causing the BGP announcement to become "invalid" by mistake.

Mark •

You say "by mistake". One of the drivers for RPKI deployment was to avoid them (fat fingers in BGP configs)

Nathalie Trenaman •

Hi Mark, the value "max length" is very confusing for people who are new to creating ROAs. So, I was not referring to "fat fingers", but more about lack of knowledge in creating ROAs that generate invalid RPKI BGP announcements.

Dan •

From Assisted Registry Check message: "You may like to consider using RPKI" I did considered once... NO, I WON'T CONSIDER IT ANYMORE! I don't trust RIPE's management team, I don't trust RIPE's transparency and I do think there are details you are telling... RIPE should be independent, non-profit etc. etc. but it looks just like a company in which some people just get money (more than 50% personal expenses and you kill RPKI system affecting everyone?!?!?) and have other interests... VERY BAD LOOKING! I know I might sound like that Elad (or how the name was) but even if many of his accusation were stupid, about transparency and some people having interests, he is right!

Nathalie Trenaman •

Hi Dan, I understand that you are not happy with the RPKI outages, neither were we. We are all human and mistakes are made to learn from and I am confident we are on the right track to improve the resiliency of RPKI. I’m curious how you would like to see the transparency on our RPKI service improved. I’m very open to hear your suggestions, either here or per mail on nathalie @ ripe.net.