A Dive Into TLD Performance


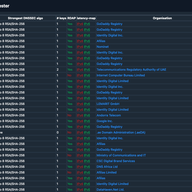

DNS is a complex set of protocols with a long history. Some TLDs date back as far as 1985, but not all of them are built equal, and their performance varies depending on where in the world the end user is located. So just how different are they?

The deadline is getting closer. From what I can see, there are still 526 delegations left out of the original 3,358. That is 526 remaining out of 857,448 total objects, or about 0.06% left overall. Good job, almost there!

“I know ICANN is running the Next Round of the gTLD program. Then I read this post and it made me wonder: how IPv6-ready is the domain infrastructure? Which systems still rely solely on IPv4 and would break if IPv6 were the only thing available? So I have a suggestion: register a new TLD during the program that uses only IPv6. All related systems, including the DNS servers, would run on v6, and you couldn't register "A" records at all. It would be the ultimate IPv6-readiness test for global infrastructure. While IPv6 is routable at the backbone level, it is far from fully reliable or usable. Many end users, organisations, websites and more still need IPv4 to function correctly. Making the entire domain infrastructure v6-only would make problems stand out wherever v6 is unavailable or broken, which would massively help with identifying and resolving such compatibility issues. Since I proposed this idea, I should probably also suggest a name: .VERSIONSIX. With this name, any connectivity issues would stand out. I'd LOVE to do this myself, but as a lone (graduating) IT student, I could never afford the fees. So handing the idea over to someone who can would be the most beneficial for IPv6 adoption. Please let me know whether this is a stupid or a smart idea, and/or whether it's worth considering.”

The problem with that is that someone would have to manage the TLD and pay for it. Not only that, but how many domains would you realistically expect to be registered under it, knowing that people would have to pay for every domain they register while other TLDs offer dual stack for the same price or less? It's still an interesting experiment. You would be surprised at how many domains would just keep working. Because most companies now use cloud services for everything, most of their authoritative DNS servers are dual stack. So you might not reach the website over IPv6, but you will get a response from the DNS. You can test this yourself: if you run a self-hosted recursive resolver on a v6-only network, you will get interesting results. Some TLDs will simply not work, because they don't have any IPv6-reachable authoritative DNS servers. We did that at HSBXL once. It was both surprisingly usable (at least in our part of the world, because our most commonly used TLDs work over IPv6) and broke some things in bizarre ways that we couldn't have observed any other way.
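The resolver experiment above boils down to a simple rule: a recursive resolver on a v6-only network can only resolve names under a TLD if at least one of that TLD's authoritative servers has an IPv6 address. Here is a minimal Python sketch of that check, assuming you already have the name server addresses for each TLD (for example from the NS records and their A/AAAA glue in the root zone). The TLD names and addresses below are hypothetical, taken from documentation ranges.

```python
import ipaddress

# Hypothetical data: TLD -> addresses of its authoritative name servers.
# Real values would come from the root zone (NS records plus A/AAAA glue);
# these TLD names are made up and the addresses are documentation prefixes.
NAMESERVER_ADDRESSES = {
    "dualstack": ["192.0.2.1", "2001:db8::1"],
    "v4only": ["192.0.2.2", "198.51.100.2"],
    "v6only": ["2001:db8::53"],
}


def resolvable_on_v6_only(addresses):
    """True if at least one authoritative server has an IPv6 address."""
    return any(ipaddress.ip_address(a).version == 6 for a in addresses)


def broken_on_v6_only(ns_map):
    """Return, sorted, the TLDs a v6-only recursive resolver could not reach."""
    return sorted(
        tld for tld, addrs in ns_map.items() if not resolvable_on_v6_only(addrs)
    )


if __name__ == "__main__":
    print(broken_on_v6_only(NAMESERVER_ADDRESSES))
```

This deliberately ignores whether the IPv6 server actually answers, and that a name server whose hostname lives outside the TLD must itself be resolvable over IPv6; both can also break resolution in practice.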

“"This was a precursor to DNS." whois, a precursor of the DNS??? "IDN TLD's (Internationalised Domain Names in Unicode) were defined much later on in RFC5890 in 2010." No, seven years earlier (RFC 3490). "Some TLDs even need a registrar to send an email to the TLD management organisation to create register a new domain. A human then has to manually edit the zone" There is nothing wrong with that, if it suits their constituency. The whole point of decentralisation (a strong feature of the DNS) is the ability to have different policies. "the amount of servers" It is useful information, yes, but less important than the "strength" of the servers. bortzmeyer.fr has eight name servers but cannot be compared to .de (six servers). "It [ICANN] sadly can't enforce it on the legacy ones" See my point above about the freedom brought by decentralisation.”

Hello Stéphane, about the precursor to DNS, I guess this was bad phrasing on my end. About the IDN TLDs, indeed, I missed the first RFC. Both points are now corrected. Thank you for the heads-up. About DNS decentralisation, we agree that it is a good thing. However, I would assume that having it work in a standardised way (while still decentralised) would also be a good thing. The specific example I gave about manually editing the zone file has reportedly been a problem (as mentioned in the MCH2022 talk) for some TLDs that edited their zone by hand and left errors in it. From what I understand, writing the standards is the IETF's job, while getting TLDs to actually implement them is ICANN's. About the number of servers, there too we agree. I was also thinking about that when writing tldtest. I haven't yet found a way to measure the "strength" of those servers objectively. I know that some TLDs have a better reputation than others in that respect, and other signs around the TLD itself might give clues. For example, if a TLD implements DNSSEC algorithm 13 and RDAP, you might guess that the team behind it put more time and budget into making it solid than the team behind a TLD with 2 servers, no DNSSEC, no RDAP and no IPv6. I would be very interested if you have an idea of how to measure that objectively. I'm open to discussion if you have time.
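The clues listed in the reply above can at least be collected in a consistent way, even if they are not an objective measure of strength. A minimal Python sketch, assuming a hypothetical `TldProfile` record filled in from your own measurements; the "more than two name servers" threshold is an arbitrary choice based on the two-server example in the reply, and the function only lists which signals are present rather than producing a score.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class TldProfile:
    # Hypothetical record of the signals mentioned in the reply.
    name: str
    nameserver_count: int
    has_ipv6: bool
    dnssec_algorithm: Optional[int]  # None when the TLD is not signed
    has_rdap: bool


def signals_present(p: TldProfile) -> List[str]:
    """List which of the suggested 'care and budget' signals a TLD shows."""
    signals = []
    if p.nameserver_count > 2:  # arbitrary threshold (assumption)
        signals.append("more than two name servers")
    if p.has_ipv6:
        signals.append("IPv6")
    if p.dnssec_algorithm == 13:  # algorithm 13 = ECDSA P-256 with SHA-256
        signals.append("DNSSEC algorithm 13")
    if p.has_rdap:
        signals.append("RDAP")
    return signals


if __name__ == "__main__":
    bare = TldProfile("example-a", 2, False, None, False)
    careful = TldProfile("example-b", 6, True, 13, True)
    print(signals_present(bare))
    print(signals_present(careful))
```

As Stéphane points out, none of this captures the actual capacity of the servers; it only records indirect hints about the effort behind a TLD.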

“I like the IP address 2610:a1:1072::1:42 since the name is an IDN. But, alas, no DNSSEC.”

Indeed, I had never seen an IP address with an IDN as its reverse-DNS entry. DNS really feels like a rabbit hole.
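For anyone wanting to poke at this: the PTR record for an IPv6 address lives under `ip6.arpa`, with one label per hex nibble in reverse order, and an IDN in that record is stored in its ASCII ("xn--") form. Python's standard `ipaddress` module computes the reverse name, and the built-in `idna` codec (which implements IDNA 2003, RFC 3490) turns an ACE name back into Unicode. The PTR target below is a hypothetical placeholder, since the actual name behind 2610:a1:1072::1:42 isn't given in the thread.

```python
import ipaddress

ADDRESS = "2610:a1:1072::1:42"

# Hypothetical PTR target in ACE ("xn--") form; not the real record.
EXAMPLE_PTR_TARGET = "xn--bcher-kva.example"


def reverse_name(address: str) -> str:
    """Name under which the PTR record for `address` is published."""
    return ipaddress.ip_address(address).reverse_pointer


def ace_to_unicode(name: str) -> str:
    """Convert an ACE-encoded (xn--) domain name to Unicode (IDNA 2003)."""
    return name.encode("ascii").decode("idna")


if __name__ == "__main__":
    print(reverse_name(ADDRESS))
    print(ace_to_unicode(EXAMPLE_PTR_TARGET))
```

Running it prints `2.4.0.0.1.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.2.7.0.1.1.a.0.0.0.1.6.2.ip6.arpa` followed by `bücher.example`. Querying the PTR record itself would need a DNS client library or a tool such as `dig -x`.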

“Even though the percentage of DNSSEC reverse delegations is low, it has actually doubled since my similar measurement in 2018 :) https://ripe77.ripe.net/presentations/135-DS_updates_in_RIPE_DB.pdf”

That's very nice to hear :-) I'm currently working on another project involving TLDs and DNSSEC, and I've uncovered yet another rabbit hole. Maybe I should write another article at some point to go even deeper...

Will it soon be possible to test IPv6 with it?
