For various reasons, not all RIPE 80 attendees were able to use Zoom to participate in the remote meeting. We therefore provided an alternative way to join the meeting that did not rely on Zoom.

RIPE 80 ended a few weeks ago. It was the first time we've run a completely virtual RIPE Meeting, and with that came a lot of new challenges. Our meeting team was tasked with ensuring that there was a way for attendees to participate in the meeting, without needing to install or connect via Zoom.

The Problem

After a lot of internal research, we decided that Zoom would be the best platform for hosting RIPE 80, as it could handle the number of attendees we anticipated and covered pretty much everything we needed from video conferencing software. Our Chief Information Officer, Kaveh Ranjbar, explained our decision to use Zoom in his own RIPE Labs article.

There was still one big hurdle to overcome - providing participants with a way to attend RIPE 80 without forcing them to use Zoom. We did this for two reasons:

- Zoom does not have worldwide coverage, as seen in their list of restricted countries or regions.

- Some participants may have preferred not to use Zoom for their own personal reasons, and we didn't want that to affect their choice to attend RIPE 80.

With these two reasons in mind, the obvious solution for us was to provide participants with a mirrored video feed of the Zoom webinar, in an open format that any video player can play. This video feed was accompanied by an IRC server, and a RIPE NCC staff member relayed questions from participants on IRC onto the Zoom platform.

Our Previous Setup

Before RIPE 80, our live streaming was done on-premises with Wowza Streaming Engine. I will be the first to confess that 'live streaming' is nobody's speciality inside the RIPE NCC, and that meant wasted time for system engineers and developers, who could otherwise have been doing what they actually specialise in.

We decided that this setup, as it stood, could not provide the high-quality live stream experience we needed if all participants were to attend remotely, for a multitude of reasons:

- Latency of 30-45 seconds vs. "live" (which would be the Zoom webinar in RIPE 80's case)

- Difficult to debug and optimise. As I said, we're not video streaming gurus by profession.

- Cost: we were paying for a Wowza license which was only used for a few weeks per year. Wowza did not provide a "just a week" plan.

Cloud Approach

As part of our approach to the cloud, we decided to explore offloading the live streaming part of our RIPE Meetings to Amazon Web Services. AWS already provided all of the necessary services. It was just a matter of configuring the parts to match our needs:

- Low latency distribution across the world, with no sanction restrictions (our goal was sub-10 seconds)

- Easy configuration for production-ready streaming

- Cost-efficient processing and distribution of the stream

Exploring AWS

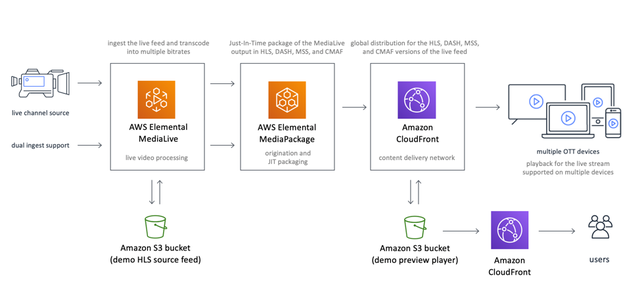

AWS provides a lot of architecture templates which cover their best practice recommendations, which seemed like a good place to start. We took a look at their Live Streaming Solution, deployed it in a test environment and this is what we got:

Once fully deployed, we had a working demo media file streamed (from an S3 bucket) through all of the above services.

After playing around and working out what we did and didn't need, while still making sure that we covered all of our requirements, we ended up with a solution which AWS didn't provide a template for at the time, but now does!

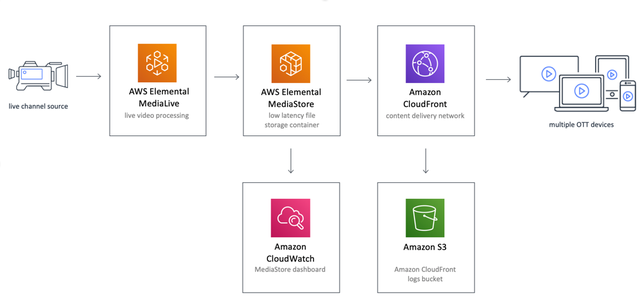

The main difference was our decision to opt for MediaStore instead of MediaPackage. As MediaLive did all of the packaging into the multiple bitrates that we needed, there was no need for MediaPackage, which is mainly for adding caption tracks or producing live streams in other formats.

Putting it together

We needed three different services which, when set up properly, gave us the best configuration. Each service plays an important part in the live streaming process:

Elemental MediaLive

MediaLive has two core roles to play in our setup.

Zoom has built-in functionality to mirror a webinar to a third-party service, which in our case, was AWS. We created an RTMP Push Endpoint in MediaLive, which Zoom would directly send data to when configured. This meant that we played no middleman role in mirroring the stream from Zoom to AWS, ensuring that the latency of the video feed arriving at AWS was as low as possible.

MediaLive is also responsible for converting the feed that comes from Zoom into three different bitrates for clients. We decided to use the HTTP Live Streaming (HLS) standard for its native cross-platform compatibility. One of the key benefits of HLS over RTMP is that the client can decide which bitrate stream to consume. If the client is trying to watch the live stream with limited bandwidth available, the player will automatically fall back to a bitrate that it can stream smoothly. The player we embed on the RIPE Meeting websites automatically selects the best quality available to you.
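To make the bitrate fallback more concrete, here is a minimal sketch of how a player might choose between three renditions. The ladder values, the `Variant` type, the `pick_variant` function and the 0.8 safety margin are all hypothetical; the actual bitrates we configured are not listed in this article, and real players use more elaborate heuristics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variant:
    name: str
    bandwidth_bps: int  # bitrate the rendition advertises to the player


# Hypothetical ladder: three renditions, as in the article, with made-up bitrates.
LADDER = [
    Variant("low", 800_000),
    Variant("medium", 2_000_000),
    Variant("high", 5_000_000),
]


def pick_variant(ladder, measured_bps, safety=0.8):
    """Pick the highest rendition that fits within a share of the measured
    throughput, falling back to the lowest rendition if none fits."""
    budget = measured_bps * safety
    affordable = [v for v in ladder if v.bandwidth_bps <= budget]
    if affordable:
        return max(affordable, key=lambda v: v.bandwidth_bps)
    return min(ladder, key=lambda v: v.bandwidth_bps)


if __name__ == "__main__":
    for throughput in (500_000, 3_000_000, 10_000_000):
        print(throughput, "->", pick_variant(LADDER, throughput).name)
```

The point is only that the decision sits with the client: the server publishes every rendition and each viewer's player picks the one it can sustain.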

Elemental MediaStore

MediaStore is a file storage service, optimised for media files and live streaming. In our case, it is only used as a place to dump the video segment files created by MediaLive.

CloudFront

CloudFront is AWS's CDN service. We configured it with a custom hostname (livestream.ripe.net) and defined our MediaStore instance as the origin. CloudFront is designed for high throughput, request caching and worldwide distribution, which is why it needs to sit between MediaStore and the end clients.

How did it go?

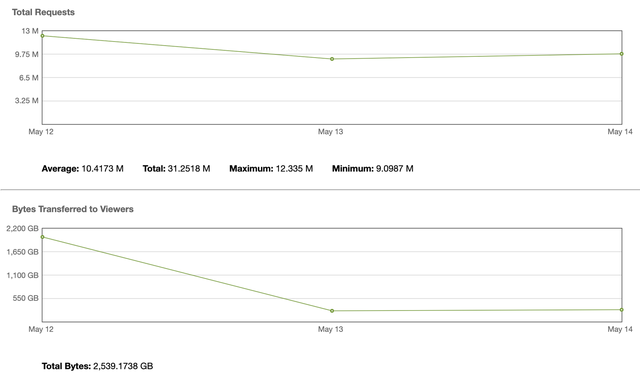

As this was the first time we streamed a RIPE meeting via AWS, we were on high alert and constantly monitoring the services we had set up. At the end of the first day of RIPE 80, we had streamed over 2 terabytes of video data to our meeting attendees! With an average time delay of 7 seconds compared to the Zoom webinar, it was a whole different level of 'real time' compared to before, which was about 45 seconds behind the live sessions.

We quickly identified, however, that there was at least one big improvement to be made, especially when it came to the bandwidth clients needed to watch the stream.

Constant Bitrate or Variable Bitrate?

By default, MediaLive is configured to encode each video segment at a constant bitrate (CBR). When the stream is mostly static (slides changing only every so often, the only moving part being the speaker's camera at the top right), this is a huge waste of data. The encoder keeps spending the full target bitrate on every segment, even when almost nothing has changed from one frame to the next.

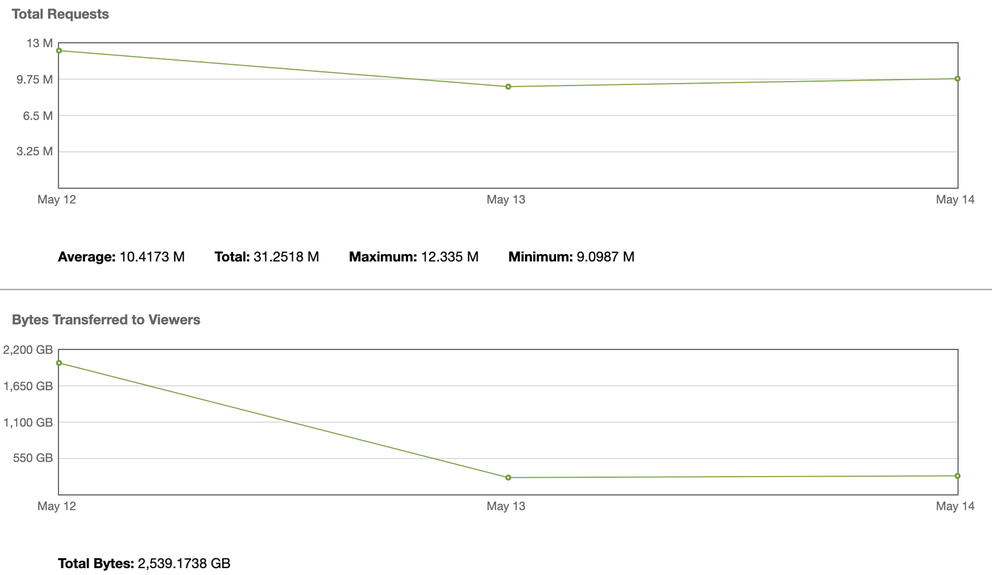

After re-configuring MediaLive to use a variable bitrate, the amount of data delivered to MediaStore (containing all three bitrate streams) nearly halved in size, all without affecting the quality of the actual stream itself.

This meant that even though we had a similar number of requests from viewers, the number of bytes transferred dropped significantly after day one:

This graph shows an average of 162Kb/request for the first day, whereas for the second and third days we managed to bring that down to an average of 20Kb/request. Bear in mind that these requests also included the HLS manifest files, which were consistently a few bytes in size.
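As a quick sanity check on those two averages, the relative saving per request can be worked out as below. The helper name `reduction_percent` is just for illustration; the two inputs are the figures quoted above.

```python
def reduction_percent(before, after):
    """Relative reduction from `before` to `after`, as a percentage."""
    return (before - after) / before * 100


day_one_avg = 162  # average size per request on day one (from the graph)
later_avg = 20     # average size per request on days two and three

if __name__ == "__main__":
    saving = reduction_percent(day_one_avg, later_avg)
    print(f"{saving:.1f}% less data per request")  # 87.7% less data per request
```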

In CloudFront, the cost of the service is largely based on the amount of data sent out to viewers. Combined with the fact that every viewer now had more of their own Internet bandwidth left over, this was a big win for everyone.

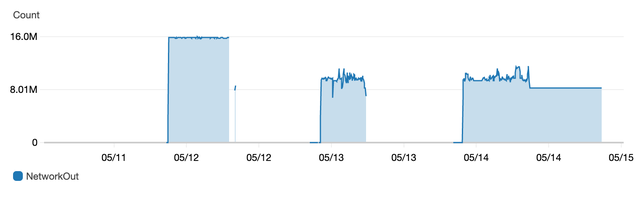

Dependence on Zoom

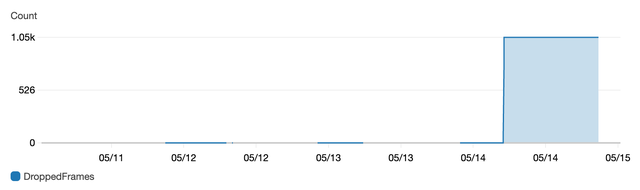

On the third day of RIPE 80, we started receiving alerts that the RTMP stream coming from Zoom was malformed. This unfortunately was completely out of our control and resulted in a large number of dropped frames while MediaLive tried to recover as much data from the RTMP feed as possible. This resulted mostly in choppy video frames, while the audio continued to stream smoothly.

The feed recovered very shortly after (the graph is cumulative). Some attendees also experienced issues in the Zoom webinar at the same time, so we can only assume that something there wasn't working quite as intended. The total duration of degraded quality was ~45 seconds.

Caching is great, until it's not

We had CloudFront caching very aggressively, for a few reasons:

- Requests to the origin (MediaStore in this case) are much more expensive compared to serving a cached response

- Viewers have no 'customised' data: everyone worldwide should receive exactly the same frames

- Serving a cached response from CloudFront's "Edge" locations means a much shorter response time as the response is served from a nearby AWS datacenter, as opposed to the origin in Europe.

In theory, this is all great. However, re-configuring our stream to use VBR on the second day had an unexpected side effect.

CloudFront had cached all of the video segments as told. But not only that, it had told clients to cache these files too.

HLS video segments by default are just an auto-incrementing filename as the stream progresses, and you end up serving files like 000001.ts, the next being 000002.ts and so on.

When we restarted MediaLive, the auto-increment counter reset to 000001.ts again. This is where our problems began.

Viewers would request these segments, and CloudFront would respond with "Here you go, I've had this cached for ages now!", and serve the original 000001.ts.

We quickly realised what was going on, and proceeded to invalidate the entire CloudFront cache. This only solved half the problem, because some (arguably smarter) clients had cached these segments themselves, so they never even requested the segments from CloudFront again.

In the end we configured MediaLive to prepend the current timestamp to these segment files, to ensure that the cache was completely busted.

The end result was that video segment filenames went from something like 000001.ts to 2020-05-13_13-33-27_000001.ts.
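The collision is easy to reproduce with a toy model. The sketch below is not how CloudFront or MediaLive work internally; `EdgeCache` is a hypothetical stand-in for any cache keyed on the request path, and it assumes the timestamp prefix is fixed when the encoder starts.

```python
from datetime import datetime


def counter_name(index):
    """Segment name based only on an auto-incrementing counter."""
    return f"{index:06d}.ts"


def timestamped_name(started_at, index):
    """Counter-based name with the (assumed) encoder start time prepended."""
    return f"{started_at:%Y-%m-%d_%H-%M-%S}_{index:06d}.ts"


class EdgeCache:
    """Toy cache keyed on the path; entries never expire."""

    def __init__(self):
        self._store = {}

    def get(self, path, fetch_from_origin):
        if path not in self._store:
            self._store[path] = fetch_from_origin(path)
        return self._store[path]


if __name__ == "__main__":
    cache = EdgeCache()

    # First encoder run: segment 1 is fetched from the origin and cached.
    cache.get(counter_name(1), lambda path: "video from run 1")

    # Encoder restarts and the counter resets: same path, stale content.
    print(cache.get(counter_name(1), lambda path: "video from run 2"))

    # With a timestamp prefix the path is new, so the cache misses.
    restart = datetime(2020, 5, 13, 13, 33, 27)
    print(timestamped_name(restart, 1))
    print(cache.get(timestamped_name(restart, 1), lambda path: "video from run 2"))
```

Because the new names have never been seen before, the same reasoning applies to caches we cannot invalidate, such as the ones inside viewers' players.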

Conclusion

Although the learning curve was steep at first, AWS has proven to be a valuable part of our RIPE Meeting arsenal. It allowed us to offload a lot of the time and cost associated with maintaining a service which is only used twice a year, while still keeping the live stream within our control and at the high level of quality that we expect from RIPE Meetings.

We're gearing up for RIPE 81, and we're looking forward to optimising our setup even more!

Comments 6


Job Snijders •

I think it’s a must-have to support presenters and chairs using open standards to stream their content. An open internet is not governed through proprietary interfaces.

Daniel Karrenberg •

Definitely! Any pointers to an open standard solution with similar or even acceptable performance? I am sure the RIPE NCC meeting team is all ears.

Daniel Karrenberg •

Great work. Thanks for sharing including what went wrong! It is really good to hear that we can deliver a stream with <10s delay. I am just curious how much AWS cost per viewer per day. A very rough estimate is fine.

Oliver Payne •

Very roughly it came out at about $0.15/viewer/day. Having identified a few rookie mistakes, it can easily be done for less than $0.10/viewer/day without any compromise.

Rumples •

Can you go into a little more detail about how you configured Zoom to push RTMP to MediaLive? In theory I should be able to, but Zoom keeps reporting failure. How did you secure the Input for MediaLive? It seems to be protected only by an IP address whitelist, in which I even temporarily tried 0.0.0.0/0, but Zoom still reported it could not connect.

For the Streaming URL in Zoom I used rtmp://theIPaddress:1935/live/. For the Streaming Key in Zoom I used the last part of the Endpoint URL for the MediaLive Input (basically everything after "/live/"). The Input for MediaLive in AWS is set to RTMP_PUSH.

I realise this is a bit above and beyond for your article, but as you're one of the few people I can find who has successfully done this, I hoped you might be able to confirm whether I was on the right track.

Oliver Payne •

We whitelisted 0.0.0.0/8 since we could never ascertain where Zoom would be piping the stream from. If the MediaLive RTMP Push input is configured like so - rtmp://<ip>:1935/appname/appinstance then in Zoom you set up the Custom Live Streaming Service with a Stream URL of 'rtmp://<ip>:1935/appname' and a Stream Key of 'appinstance'. That's what worked for us!
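For anyone scripting this, the split described above can be sketched as follows. The function name `zoom_settings` and the example address (taken from a documentation-only IP range) are made up for illustration; only the URL shape comes from the reply above.

```python
from urllib.parse import urlsplit


def zoom_settings(medialive_endpoint):
    """Split rtmp://<host>:<port>/<appname>/<appinstance> into the
    Stream URL and Stream Key that Zoom's custom streaming form asks for."""
    parts = urlsplit(medialive_endpoint)
    segments = [s for s in parts.path.split("/") if s]
    if parts.scheme != "rtmp" or not parts.netloc or len(segments) != 2:
        raise ValueError("expected rtmp://<host>:<port>/<appname>/<appinstance>")
    app_name, app_instance = segments
    return f"rtmp://{parts.netloc}/{app_name}", app_instance


if __name__ == "__main__":
    # Hypothetical endpoint; substitute the one shown for your MediaLive input.
    url, key = zoom_settings("rtmp://198.51.100.10:1935/appname/appinstance")
    print("Stream URL:", url)
    print("Stream Key:", key)
```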