The DNS Server That Lagged Behind


Between late October and early November 2024, twenty-six African TLDs had a technical problem: one of their authoritative name servers served stale data. This is a tale of monitoring, anycast, and debugging.
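
The monitoring setup itself is not described here. A common way to notice an authoritative server that lags behind is to ask each server for the zone's SOA record and compare the serial numbers. The sketch below assumes the serials have already been collected (the server names and values are hypothetical) and compares them with RFC 1982 serial-number arithmetic, so a serial that wraps around 2^32 is still handled correctly.

```python
"""Sketch: flag authoritative servers whose SOA serial lags behind the others.

Assumes the serials were already collected (one SOA query per server);
server names and values used with it are hypothetical.
"""

SERIAL_BITS = 32
HALF = 2 ** (SERIAL_BITS - 1)


def serial_lt(s1: int, s2: int) -> bool:
    """RFC 1982 comparison: True if serial s1 is older than serial s2."""
    return s1 != s2 and (
        (s1 < s2 and s2 - s1 < HALF) or (s1 > s2 and s1 - s2 > HALF)
    )


def newest_serial(serials: list[int]) -> int:
    """Return the serial that no other observed serial is newer than."""
    newest = serials[0]
    for s in serials[1:]:
        if serial_lt(newest, s):
            newest = s
    return newest


def lagging_servers(observed: dict[str, int]) -> list[str]:
    """Names of the servers whose serial is older than the newest one seen."""
    newest = newest_serial(list(observed.values()))
    return sorted(name for name, serial in observed.items() if serial_lt(serial, newest))


observed = {
    "ns1.example": 2024110501,
    "ns2.example": 2024110501,
    "ns3.example": 2024102801,  # hypothetical stale server
}
print(lagging_servers(observed))  # ['ns3.example']
```

With anycast, a single address can hide many instances, and one query only reaches the instance nearest to the vantage point. That is why such checks are more useful when run from many places, for instance from RIPE Atlas probes.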

“The DNSDB link mentioned is only for account holders. Can we get the information about previous usage of 9.9.9.9 from any other source that doesn't require an account?”

DNSDB is subscription-only. But there are some gratis "passive DNS" databases such as https://passivedns.cn/ or https://www.circl.lu/services/passive-dns/

I modified my tools a bit (following Vesna Manojlovic's excellent talk at RIPE NCC Educa yesterday, https://www.ripe.net/support/training/ripe-ncc-educa) to implement the options required for "TCP ping". See https://labs.ripe.net/Members/stephane_bortzmeyer/using-ripe-atlas-to-debug-network-connectivity-problems for a description of these tools. Here is an example:

    % atlas-traceroute -f -r 3 --protocol TCP --size=0 --port=43 --first_hop=64 --max_hops=64 $(dig +short +nodnssec AAAA whois.ripe.net)
    Measurement #9721999 Traceroute 2001:67c:2e8:22::c100:687 uses 3 probes
    3 probes reported
    Test #9721999 done at 2017-10-06T14:34:57Z
    From: 2601:646:8d00:6951:fa1a:67ff:fe4d:6a0c 7922 COMCAST-7922 - Comcast Cable Communications, LLC, US
    Source address: 2601:646:8d00:6951:fa1a:67ff:fe4d:6a0c
    Probe ID: 12908
    64 2001:67c:2e8:22::c100:687 3333 RIPE-NCC-AS Reseaux IP Europeens Network Coordination Centre (RIPE NCC), NL [160.926, 161.434, 163.041]

    From: fd00:8494:8c40:8c12:16cc:20ff:fe48:cf02 None None
    Source address:
    Probe ID: 27041
    Error: connect failed: Network is unreachable
    [Note added manually: probably fascist firewall. Outgoing whois is often blocked.]

    From: 2003:6:21f8:7957:a62b:b0ff:fedf:fd2c 3320 DTAG Internet service provider operations, DE
    Source address: 2003:c3:e3e5:a57:a62b:b0ff:fedf:fd2c
    Probe ID: 28991
    64 2001:67c:2e8:22::c100:687 3333 RIPE-NCC-AS Reseaux IP Europeens Network Coordination Centre (RIPE NCC), NL [35.646, 35.717, 36.512]

TODO: aggregate results to show median and average, as in "ordinary ping" tests:

    % atlas-reach -r 3 -g 9721999 $(dig +short +nodnssec AAAA whois.nic.fr | tail -1)
    2 probes reported
    Test #9722014 done at 2017-10-06T14:40:01Z
    Tests: 6 successful tests (100.0 %), 0 errors (0.0 %), 0 timeouts (0.0 %), average RTT: 111 ms
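
One possible way to handle that TODO, sketched under the assumption that the last-hop RTTs have already been extracted from the results, one list per probe ID. The dictionary layout and the `summarize` name are hypothetical and not part of the author's tools. All samples are pooled, and probes that returned nothing are counted separately instead of being silently dropped.

```python
"""Sketch: aggregate per-probe RTTs (ms) into median and average."""
import statistics


def summarize(rtts_by_probe: dict[int, list[float]]) -> dict[str, float]:
    """Pool all RTT samples and return count, min, median, mean and max.

    Probes with an empty list (e.g. "connect failed") count as failures.
    """
    samples = [rtt for rtts in rtts_by_probe.values() for rtt in rtts]
    failed = sum(1 for rtts in rtts_by_probe.values() if not rtts)
    if not samples:
        return {"samples": 0, "failed_probes": failed}
    return {
        "samples": len(samples),
        "failed_probes": failed,
        "min": min(samples),
        "median": statistics.median(samples),
        "mean": statistics.fmean(samples),
        "max": max(samples),
    }


# Last-hop RTTs copied from the traceroute output above.
rtts = {
    12908: [160.926, 161.434, 163.041],
    27041: [],  # "connect failed: Network is unreachable"
    28991: [35.646, 35.717, 36.512],
}
print(summarize(rtts))
```

With probes this far apart (Comcast in the US versus DTAG in Germany), the median and the mean both land between the two clusters, so reporting per-probe results alongside the pooled figures is probably worth keeping.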

Strange problem with a Knot resolver: only orange (open padlock) dots. The resolver validates, I'm pretty sure of it (note the "ad" flag in the reply):

    % dig A labs.ripe.net

    ; <<>> DiG 9.9.5-9+deb8u11-Debian <<>> A labs.ripe.net
    ;; global options: +cmd
    ;; Got answer:
    ;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 56289
    ;; flags: qr rd ra ad; QUERY: 1, ANSWER: 2, AUTHORITY: 8, ADDITIONAL: 9

    ;; OPT PSEUDOSECTION:
    ; EDNS: version: 0, flags: do; udp: 4096
    ;; QUESTION SECTION:
    ;labs.ripe.net.                 IN  A

    ;; ANSWER SECTION:
    labs.ripe.net.          600     IN  A      193.0.6.153
    labs.ripe.net.          600     IN  RRSIG  A 8 3 600 (
                            20170726090003 20170626080003 31785 ripe.net.
                            jMr0wyDGah/o4e4yV5slTEwqlk6KK11NkWDaiagnIdJp
                            vDO4z9U+MoGWHkdR2rX94kt+eLWgy+U48ygPwY0vyChn
                            xnjcEZ+4DZ8ZfzsSQ+BHodKksujfiCdTeiw6HsrcwtEq
                            AuvdJ19EF9v8c0lVjil46HUhfltgPT7/p+B2Ct0= )

    ;; AUTHORITY SECTION:
    ripe.net.               2131    IN  NS     a3.verisigndns.com.
    ripe.net.               2131    IN  NS     sns-pb.isc.org.
    ripe.net.               2131    IN  NS     a1.verisigndns.com.
    ripe.net.               2131    IN  NS     manus.authdns.ripe.net.
    ripe.net.               2131    IN  NS     sec3.apnic.net.
    ripe.net.               2131    IN  NS     tinnie.arin.net.
    ripe.net.               2131    IN  NS     a2.verisigndns.com.
    ripe.net.               2131    IN  RRSIG  NS 8 2 3600 (
                            20170726090003 20170626080003 31785 ripe.net.
                            UlsAmef8O5ls8BHV4Is81uw5q4iWWN82SEgk5DVvT5t7
                            yN03+/Cl6B8XxcfhymW+/5ndBl2R673GKfamb3Xm2nVd
                            g8JT27ScD6kpYLWTqqITb1+/4PXXyTcYhRVrrfBmjgFG
                            NyyKreTi1mrfV5KConADv2PKk1ixuU8hMxdFnhk= )

    ;; ADDITIONAL SECTION:
    sec3.apnic.net.         171330  IN  A      202.12.28.140
    sec3.apnic.net.         171330  IN  AAAA   2001:dc0:1:0:4777::140
    manus.authdns.ripe.net. 171330  IN  A      193.0.9.7
    manus.authdns.ripe.net. 171330  IN  AAAA   2001:67c:e0::7
    tinnie.arin.net.        171330  IN  A      199.212.0.53
    tinnie.arin.net.        171330  IN  AAAA   2001:500:13::c7d4:35
    manus.authdns.ripe.net. 2131    IN  RRSIG  A 8 4 3600 (
                            20170726090003 20170626080003 31785 ripe.net.
                            PUJXvQ3gFUNXBMgf4Rs5OuuAhLhlOVYMrL1vDKkeZRmY
                            awgwQOJKkRiASVERXIWiyabTxTW+ziORa28QKsDhjRgv
                            OLhPyBO6XJ4ol4bY1Yiecc78vi8lGBYq7Onnn6YYgHdm
                            kAYzGp16ggAd6EitKsz+ymzkDF+HS1Jen7ZcNkI= )
    manus.authdns.ripe.net. 2131    IN  RRSIG  AAAA 8 4 3600 (
                            20170726090003 20170626080003 31785 ripe.net.
                            lkXqjQBWK1WkEJUnD7SvzCKd8vpRKzBxWap69Ia4WiHS
                            F8D99749X9NklhDtthD3R7c1umOwoAi7R6OIwjUnVFH8
                            Q+PBahvmJCefnj/RAEYw4H7HQyvPkSjGhlQ27/vN2ApL
                            p8IzQ+Ym6G1cuxSAVG9NKq6WrgXON3I17JKE0mY= )

    ;; Query time: 457 msec
    ;; SERVER: 127.0.0.1#53(127.0.0.1)
    ;; WHEN: Tue Jun 27 20:56:19 CEST 2017
    ;; MSG SIZE  rcvd: 1035
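
The "ad" (Authenticated Data) flag in dig's "flags:" line is how a validating resolver signals that it validated the answer. It is only meaningful when the path to the resolver is trusted, as with 127.0.0.1 here. To check it from a script instead of by eye, here is a minimal sketch that decodes the 12-byte DNS header (RFC 1035 layout, AD/CD bits from RFC 4035). The header in the usage lines is rebuilt from the dig output above, not captured on the wire, and `decode_header` is a hypothetical helper.

```python
"""Sketch: decode the flags and counts of a DNS message header."""
import struct

FLAG_BITS = {  # masks within the 16-bit flags field
    "qr": 0x8000,
    "aa": 0x0400,
    "tc": 0x0200,
    "rd": 0x0100,
    "ra": 0x0080,
    "ad": 0x0020,  # Authenticated Data: the resolver validated the answer
    "cd": 0x0010,  # Checking Disabled
}
RCODES = {0: "NOERROR", 1: "FORMERR", 2: "SERVFAIL", 3: "NXDOMAIN", 4: "NOTIMP", 5: "REFUSED"}


def decode_header(message: bytes) -> dict:
    """Return ID, set flags (in dig's order), RCODE name and section counts."""
    if len(message) < 12:
        raise ValueError("DNS message shorter than its 12-byte header")
    msg_id, flags, qd, an, ns, ar = struct.unpack("!6H", message[:12])
    rcode = flags & 0x000F
    return {
        "id": msg_id,
        "flags": [name for name, mask in FLAG_BITS.items() if flags & mask],
        "rcode": RCODES.get(rcode, f"RCODE {rcode}"),
        "counts": (qd, an, ns, ar),
    }


# Header of the reply above: id 56289, flags qr rd ra ad, NOERROR, counts 1/2/8/9.
header = struct.pack("!6H", 56289, 0x8000 | 0x0100 | 0x0080 | 0x0020, 1, 2, 8, 9)
info = decode_header(header)
print(info["flags"], "ad" in info["flags"])
```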

It's with BIND that I get the most green dots: even GOST works fully (https://framapic.org/7UIoLRIEQelV/ci1qZP1I94FA.png). The only thing missing is the Bernstein crypto.

With my Unbound resolver, I get results similar to yours, but with Google Public DNS it's more fun (https://framapic.org/HpnX0WIfdiMl/An1vwwGtuDXb.png). Google handles GOST DS records but not GOST signatures. Also, it returns SERVFAIL for RSA-MD5 signatures.
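
The dots on such test pages correspond to numbers carried in the records: GOST signatures use DNSSEC algorithm 12, a GOST DS uses digest type 3, RSA-MD5 is algorithm 1, and Ed25519 (the EdDSA curve designed by Bernstein and colleagues) is algorithm 15. The DS digest type and the signature algorithm are separate fields, which is how a resolver can accept a GOST DS while failing GOST signatures. The sketch below maps them to names using a subset of the IANA registries. `describe_dnssec_record` is a hypothetical helper with deliberately naive parsing (one record in dig's default presentation format, TTL and class present).

```python
"""Sketch: name the algorithms used by RRSIG and DS records."""

DNSSEC_ALGORITHMS = {  # subset of the IANA DNSSEC algorithm numbers
    1: "RSAMD5",
    3: "DSA",
    5: "RSASHA1",
    6: "DSA-NSEC3-SHA1",
    7: "RSASHA1-NSEC3-SHA1",
    8: "RSASHA256",
    10: "RSASHA512",
    12: "ECC-GOST",
    13: "ECDSAP256SHA256",
    14: "ECDSAP384SHA384",
    15: "ED25519",
    16: "ED448",
}
DS_DIGEST_TYPES = {1: "SHA-1", 2: "SHA-256", 3: "GOST R 34.11-94", 4: "SHA-384"}


def _name(table: dict[int, str], number: int) -> str:
    """Look a number up, keeping unknown values visible."""
    return table.get(number, f"unknown ({number})")


def describe_dnssec_record(record: str) -> str:
    """Return a one-line description of an RRSIG or DS record."""
    fields = record.split()  # owner TTL class type rdata...
    rtype = fields[3]
    if rtype == "RRSIG":  # rdata: type-covered algorithm labels original-TTL ...
        return f"RRSIG over {fields[4]}: {_name(DNSSEC_ALGORITHMS, int(fields[5]))}"
    if rtype == "DS":  # rdata: key-tag algorithm digest-type digest
        return (f"DS: {_name(DNSSEC_ALGORITHMS, int(fields[5]))}, "
                f"digest {_name(DS_DIGEST_TYPES, int(fields[6]))}")
    raise ValueError(f"not an RRSIG or DS record: {rtype}")


print(describe_dnssec_record("labs.ripe.net. 600 IN RRSIG A 8 3 600 ( 20170726090003"))
```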

@Chris Your site cannot be visited with some browsers. A recent Firefox says "www.chaz6.com uses security technology that is outdated and vulnerable to attack. An attacker could easily reveal information which you thought to be safe. Advanced info: SSL_ERROR_NO_CYPHER_OVERLAP", and if I try to proceed anyway, I get an SSL_ERROR_INAPPROPRIATE_FALLBACK_ALERT. Also, many of the resolvers you publish are not *open* resolvers but *public* resolvers, that is, resolvers *intended* to be queryable by anyone (such as Google Public DNS). We can therefore assume they have good protections against being used as a reflector (monitoring, rate limiting, etc.). Finally, I tested some of the addresses at random and most seem to time out or to return REFUSED: open resolvers come and go.
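
The "time out or REFUSED" check can be scripted. Below is a minimal sketch using only the Python standard library: it hand-builds a query with RD set (no EDNS), sends it over UDP and reduces the reply to a verdict. The helper names are hypothetical, and treating "NOERROR with RA" as "probably open" is a heuristic, not a definitive test. A single timeout may also just be packet loss, so retrying before concluding is sensible. Only probe servers you are allowed to test.

```python
"""Sketch: classify how a DNS server reacts to a recursive query."""
import random
import socket
import struct

RCODES = {0: "NOERROR", 1: "FORMERR", 2: "SERVFAIL", 3: "NXDOMAIN", 4: "NOTIMP", 5: "REFUSED"}


def build_query(qname: str, msg_id: int, qtype: int = 1) -> bytes:
    """Encode a query with RD set for qname (QTYPE 1 = A, QCLASS 1 = IN)."""
    header = struct.pack("!6H", msg_id, 0x0100, 1, 0, 0, 0)  # RD=1, QDCOUNT=1
    qname_wire = b""
    for label in qname.rstrip(".").split("."):
        if not label:
            continue
        if len(label) > 63:
            raise ValueError(f"label too long: {label!r}")
        qname_wire += bytes([len(label)]) + label.encode("ascii")
    return header + qname_wire + b"\x00" + struct.pack("!2H", qtype, 1)


def classify(response: bytes, msg_id: int) -> str:
    """Reduce a raw reply to a short verdict."""
    if len(response) < 12:
        return "bogus"
    rid, flags = struct.unpack("!2H", response[:4])
    if rid != msg_id or not flags & 0x8000:  # wrong ID, or QR=0 (not a response)
        return "bogus"
    rcode = flags & 0x000F
    verdict = RCODES.get(rcode, f"RCODE {rcode}")
    if rcode == 0 and flags & 0x0080:  # NOERROR with RA (recursion available)
        verdict += " (recursion available, probably open)"
    return verdict


def probe(server: str, qname: str = "example.com", timeout: float = 3.0) -> str:
    """Send one query to server port 53 and classify the outcome."""
    msg_id = random.randrange(0x10000)
    family = socket.AF_INET6 if ":" in server else socket.AF_INET
    with socket.socket(family, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_query(qname, msg_id), (server, 53))
        try:
            response, _ = sock.recvfrom(4096)
        except socket.timeout:
            return "timeout"
    return classify(response, msg_id)
```

Calling `probe(address)` for each published address returns strings such as "timeout", "REFUSED" or "NOERROR (recursion available, probably open)", which are easy to count.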

Regarding women's participation in DNSOP, there is also the co-chair, Suzanne Woolf. Regarding NSEC5, it does indeed provide "good protection against zone enumeration", but not through "a better rate of online key signing": it relies on a cute cryptographic hack, the VRF (Verifiable Random Function). Unlike NSEC3, a VRF requires online signing (but it provides better protection). (And there is also NSEC3 with white lies, but I'll stop here.)
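
For the NSEC3 side of the comparison: NSEC3 owner names are hashes computed with a salt and iteration count that are published in the zone (NSEC3PARAM). That is why NSEC3 works with offline signing, and also why hashes collected from a zone can be attacked offline with a dictionary, the weakness NSEC5 targets. A sketch of the RFC 5155 hash follows. The function names are hypothetical, and the usage line reuses the parameters of the RFC 5155 example zone (salt aabbccdd, 12 iterations).

```python
"""Sketch: compute an NSEC3 hashed owner name (RFC 5155).

IH(salt, x, 0) = H(x || salt); IH(salt, x, k) = H(IH(salt, x, k-1) || salt),
with H = SHA-1 (NSEC3 hash algorithm 1) and x = the owner name in canonical
wire format. The result is written in Base32hex without padding.
"""
import base64
import hashlib

# Standard Base32 alphabet -> "Extended Hex" alphabet (RFC 4648, section 7).
_TO_BASE32HEX = str.maketrans(
    "ABCDEFGHIJKLMNOPQRSTUVWXYZ234567", "0123456789ABCDEFGHIJKLMNOPQRSTUV"
)


def wire_format(name: str) -> bytes:
    """Canonical wire format: length-prefixed lowercase labels, then the root."""
    out = b""
    for label in name.rstrip(".").lower().split("."):
        if label:
            out += bytes([len(label)]) + label.encode("ascii")
    return out + b"\x00"


def nsec3_hash(name: str, salt_hex: str, iterations: int) -> str:
    """Hashed owner name, as found left of the zone name in NSEC3 records."""
    salt = b"" if salt_hex in ("", "-") else bytes.fromhex(salt_hex)  # "-" = no salt
    digest = hashlib.sha1(wire_format(name) + salt).digest()
    for _ in range(iterations):  # iterations counts the *additional* hashes
        digest = hashlib.sha1(digest + salt).digest()
    return base64.b32encode(digest).decode("ascii").translate(_TO_BASE32HEX).lower()


print(nsec3_hash("example", "aabbccdd", 12))
```

Anyone can run this function over a list of likely names and match the results against the hashes seen in NSEC3 records, which is the dictionary attack in question.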

Sunday was also the second day of the IETF hackathon, which started on Saturday. Two days of hacking, fourteen teams, something like fifty or sixty people locked in a room, with a lot of coffee and good meals appearing from time to time, thanks to the sponsors. During the hackathon, people were able to implement recent IETF techniques, sometimes already published as RFCs, sometimes still at the draft stage, where actual implementation experience helps a lot to sort the good ideas from the bad. For instance, the WebRTC people were working on end-to-end encryption of the media (a touchy subject, for sure, after the recent anti-encryption stances of the British authorities), DNS people worked on various DNS-over-TLS projects, TLS people were of course busy implementing the future TLS 1.3 (even in a Haskell library), CAPPORT people programmed their solution to make evil captive portals more network- (and user-) friendly, LoRaWAN people started to write a Wireshark dissector for this IoT protocol, etc. That was my first hackathon and I appreciated the freedom (you can hack on whatever you want, but it is of course more fun if you do it with other people) and the efficiency (no distractions, just coding and discussions between developers). Reporting a bug to a library developer by shouting across the table is certainly faster than filing it in GitLab :-)

Great (and a good answer to the people who would like packets to stay inside human borders), but a small typo: the IETF working group on DNS privacy is DPRIVE, not DPRIV.

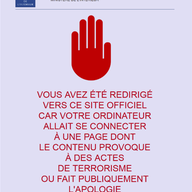

“This is a very important issue that researchers should keep in mind when running RIPE Atlas measurements. They may want to select their probe origins carefully, keeping in mind that some DNS or HTTP requests could cause trouble for the probe host. While RIPE Atlas already has relevant measures to prevent possible misuse, some normal requests may trigger alerts in some networks, which is undesirable for probe hosts. On the other hand, I believe people hosting probes should also be aware of such potential issues when deploying in networks with strict policies (or with an IDS monitoring their traffic) or in regions with restrictive regulations.”

@Babak HTTP requests cannot create a problem today, since they are directed only to the Anchors. Indeed, one of the reasons for this limitation is precisely the risk for the probe owner. DNS requests can be more dangerous. Today, in most cases, they are monitored less often than HTTP requests, so they often fly "under the radar", but this may change in the future (NSA's MoreCowBell and so on). Warning probe owners about the potential risks is certainly a good thing. We must be aware that it will mean, in the future, fewer probes in precisely the most interesting countries :-(
