The recent allocation of 1.0.0.0/8 and 27.0.0.0/8 not only triggered a lot of media attention, because it pushed IPv4 exhaustion past the 90% mark, but also sparked the curiosity of many technical folks. On the NANOG mailing list in particular, the allocation of 1.0.0.0/8 triggered quite a lively discussion ( http://mailman.nanog.org/pipermail/nanog/2010-January/017402.html ).

History

1.0.0.0/8 (1/8) had been reserved by IANA since 1981. Since then it has been used unofficially for example addresses, default configuration parameters and pseudo-private address space.

The most prominent unauthorised use of 1/8 is anoNet, a decentralised peer-to-peer network that allows users to share content anonymously. It uses 1/8 to hide the real IP addresses of its users. Until 22 January 2010, the Wikipedia article about anoNet stated:

"To avoid addressing conflict with the internet itself, the range 1.0.0.0/8 is used. This is to avoid conflicting with internal networks such as 10/8, 172.16/12 and 192.168/16, as well as assigned Internet ranges. In the event that 1.0.0.0/8 is assigned by IANA, anoNet could move to the next unassigned /8, though such an event is unlikely, as 1.0.0.0/8 has been reserved since September 1981." ( http://en.wikipedia.org/wiki/AnoNet )

Only in 2008 was 1/8 moved from the "IANA reserved" to the "IANA unallocated" pool of addresses. In January 2010 it was finally allocated to APNIC for distribution to Local Internet Registries in the Asia-Pacific region.

Announcing in 1/8

As part of APNIC's debogonising effort, carried out by the RIPE NCC at APNIC's request, a number of prefixes from the 1/8 range were announced from one of the RIS Remote Route Collectors (rrc03.ripe.net):

- 1.255.0.0/16

- 1.50.0.0/22

- 1.2.3.0/24

- 1.1.1.0/24
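When analysing traffic captured on the collector, each destination address can be mapped back to the test prefix that attracted it with a longest-prefix match. The following is a minimal Python sketch using the standard `ipaddress` module; the helper name `covering_prefix` and the sample addresses are our own illustration, not part of the RIS tooling.

```python
import ipaddress

# The four test prefixes listed above, announced from rrc03.ripe.net.
ANNOUNCED = [
    ipaddress.ip_network(p)
    for p in ("1.255.0.0/16", "1.50.0.0/22", "1.2.3.0/24", "1.1.1.0/24")
]
SLASH8 = ipaddress.ip_network("1.0.0.0/8")


def covering_prefix(addr: str):
    """Return the most specific announced prefix containing addr, or None (hypothetical helper)."""
    ip = ipaddress.ip_address(addr)
    matches = [net for net in ANNOUNCED if ip in net]
    # Longest-prefix match: the candidate with the largest prefix length wins.
    return max(matches, key=lambda net: net.prefixlen, default=None)


# Sanity check: every announced prefix really lies inside 1/8.
assert all(net.subnet_of(SLASH8) for net in ANNOUNCED)

for dst in ("1.1.1.1", "1.2.3.4", "1.50.3.7", "1.3.3.3"):
    print(dst, "->", covering_prefix(dst))
```

An address such as 1.3.3.3 lies in 1/8 but in none of the test prefixes, so traffic towards it would not have been attracted to the collector by these announcements.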

Of course, 1/8 was never expected to be a "clean" prefix. In fact, various prefixes from 1/8 had been announced in the past three months, as seen by the RIPE NCC RIS tools. For instance, 1.1.1.0/24 was withdrawn just a few days before the RIS announced it as part of the debogonising effort.

Nevertheless, what we saw just minutes after 1.1.1.0/24 was announced on the morning of 27 January 2010 was more than surprising:

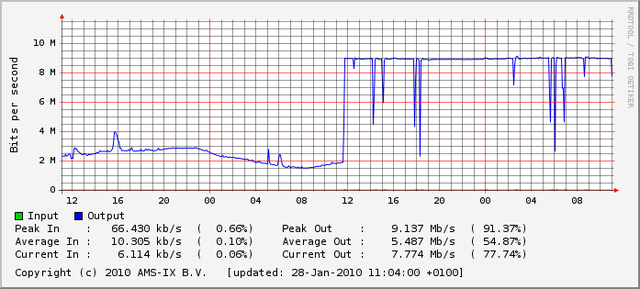

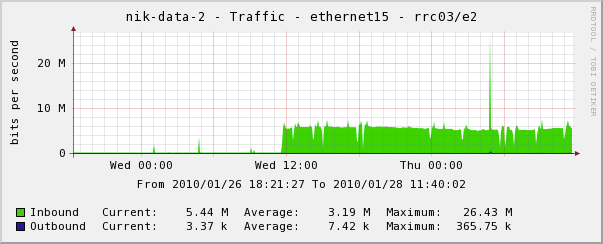

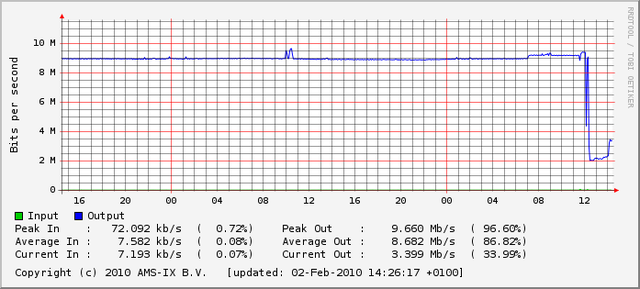

The RIS RRC from which we announced 1.1.1.0/24 has connections to AMS-IX, NL-IX and GN-IX. The image above shows the incoming traffic on the AMS-IX port (10 Mbit/s), which was instantly maxed out, mostly by traffic destined for 1.1.1.1. The AMS-IX sFlow graphs suggested that, all together, our peers were trying to send us more than 50 Mbit/s of traffic. Most of this traffic was dropped due to the 10 Mbit/s limit of our AMS-IX port:

```
# ping 1.1.1.1
PING 1.1.1.1 (1.1.1.1): 56 data bytes
Request timeout for icmp_seq 0
Request timeout for icmp_seq 1
Request timeout for icmp_seq 2
64 bytes from 1.1.1.1: icmp_seq=3 ttl=62 time=19.267 ms
Request timeout for icmp_seq 4
64 bytes from 1.1.1.1: icmp_seq=5 ttl=62 time=20.503 ms
Request timeout for icmp_seq 6
```

(Note: We are no longer announcing 1.1.1.0/24, so 1.1.1.1 is currently not pingable!)
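The figures above hang together: a port with 10 Mbit/s of capacity that is offered more than 50 Mbit/s must drop at least 80% of that traffic, and the ping transcript lost 5 of its 7 probes. The Python sketch below computes both numbers; it is our own illustration, and it assumes the ping output format is exactly the one shown above.

```python
import re

# Transcript from the ping shown above (the "# ping 1.1.1.1" prompt line omitted).
PING_OUTPUT = """\
PING 1.1.1.1 (1.1.1.1): 56 data bytes
Request timeout for icmp_seq 0
Request timeout for icmp_seq 1
Request timeout for icmp_seq 2
64 bytes from 1.1.1.1: icmp_seq=3 ttl=62 time=19.267 ms
Request timeout for icmp_seq 4
64 bytes from 1.1.1.1: icmp_seq=5 ttl=62 time=20.503 ms
Request timeout for icmp_seq 6
"""


def ping_loss(output: str) -> float:
    """Fraction of probes that timed out and never received a reply."""
    replies = {int(s) for s in re.findall(r"bytes from .*icmp_seq=(\d+)", output)}
    timeouts = {int(s) for s in re.findall(r"Request timeout for icmp_seq (\d+)", output)}
    probes = replies | timeouts
    if not probes:
        return 0.0
    # A late reply can follow its own timeout line, so only count seqs with no reply.
    return len(timeouts - replies) / len(probes)


def min_drop_fraction(offered_mbps: float, port_mbps: float) -> float:
    """Lower bound on the share of offered traffic a port of this capacity must drop."""
    if offered_mbps <= port_mbps:
        return 0.0
    return 1.0 - port_mbps / offered_mbps


print(f"observed ping loss: {ping_loss(PING_OUTPUT):.0%}")
print(f"minimum drop, 50 Mbit/s offered to a 10 Mbit/s port: {min_drop_fraction(50, 10):.0%}")
```

Since the offered load was more than 50 Mbit/s, the 80% figure is only a lower bound, and seven pings are of course a very small sample.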

The NL-IX port (100 Mbit/s) also showed some excess traffic (about 3 Mbit/s on average). The GN-IX port did not see any significant increase. Outbound traffic is routed through the RIPE NCC network and showed an increase of 5-6 Mbit/s.

The RRC's network is designed to collect BGP routing data, not to route traffic. These numbers are therefore only an indication of what might happen when these prefixes are announced in a high-capacity network with multiple transit providers.

The Traffic

Although announcing 1.1.1.0/24 had already maxed out the RRC's AMS-IX port, we still decided to do some traffic analysis. These measurements should be taken with a grain of salt, since we had no way of telling what kind of traffic was dropped before it could reach the RRC. For that reason we also decided not to include transfer rates in these measurements. We can, however, present a good indication of what kind of traffic is generated in the 1/8 space.

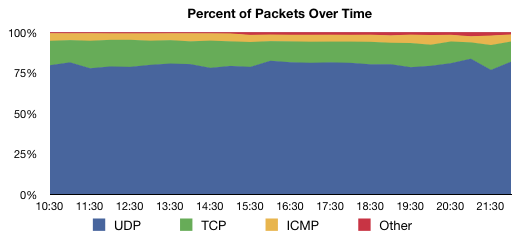

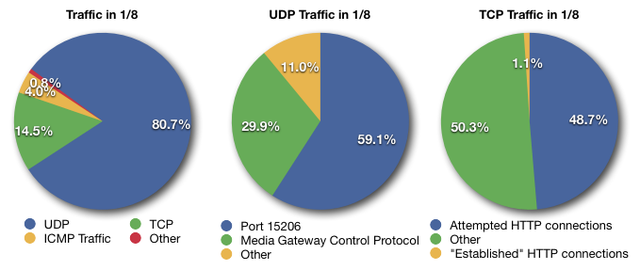

To get an idea of the stability of the traffic pattern, we collected 100,000 packets every 30 minutes over a period of 12 hours on 28 January 2010. We found that the distribution is fairly stable over time, with UDP being the predominant type of traffic.
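The stability check described above amounts to computing per-sample protocol shares and comparing them across samples. Below is a minimal Python sketch, assuming each sample has already been reduced to a list of IP protocol numbers (1 = ICMP, 6 = TCP, 17 = UDP). The spread measure is a hypothetical choice of ours, and the toy samples are made up, not the RIS data.

```python
from collections import Counter

# IANA protocol numbers for the protocols of interest; anything else is "other".
PROTO_NAMES = {1: "ICMP", 6: "TCP", 17: "UDP"}


def protocol_shares(sample):
    """Fraction of packets per protocol in one sample (a list of protocol numbers)."""
    counts = Counter(PROTO_NAMES.get(p, "other") for p in sample)
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


def max_share_spread(samples, proto="UDP"):
    """Largest minus smallest share of `proto` across samples: a crude stability measure."""
    shares = [protocol_shares(s).get(proto, 0.0) for s in samples]
    return max(shares) - min(shares)


# Hypothetical toy samples of 10 packets each (the real samples had 100,000).
samples = [
    [17] * 7 + [6] * 2 + [1],
    [17] * 8 + [6] * 2,
    [17] * 7 + [6] * 3,
]
print(protocol_shares(samples[0]))
print(f"UDP share spread: {max_share_spread(samples):.2f}")
```

A small spread across all half-hourly samples is what "fairly stable over time" would look like under this measure.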

We also analysed in more detail a sample of 100,000 packets collected at 10:00 on 28 January 2010.

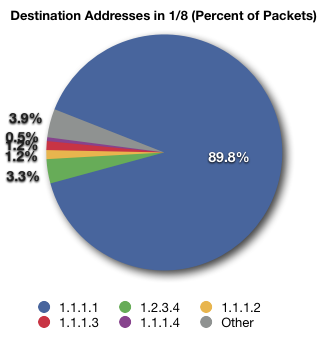

Almost 90% of the packets we analysed were sent to 1.1.1.1, and approximately 4% to 1.2.3.4. Other addresses in 1.1.1.0/24 follow.
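Ranking destinations in a sample like this is a simple frequency count. A Python sketch, assuming the destination addresses have already been extracted from the capture; the toy sample is made up to roughly echo the shares quoted above and is not the measured data.

```python
from collections import Counter


def top_destinations(dst_addrs, n=5):
    """Return the n most frequent destinations with their share of all packets."""
    counts = Counter(dst_addrs)
    total = len(dst_addrs)
    return [(addr, count / total) for addr, count in counts.most_common(n)]


# Hypothetical toy sample of 100 destination addresses.
sample = ["1.1.1.1"] * 90 + ["1.2.3.4"] * 4 + ["1.1.1.2"] * 3 + ["1.1.1.3"] * 3
for addr, share in top_destinations(sample, n=3):
    print(f"{addr:<10} {share:.1%}")
```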

We found that almost 60% of the UDP packets were sent to 1.1.1.1 on port 15206, making this the largest single group of packets seen by our RRC. Most of these packets start their data section with 0x80, continue with seemingly random data and are padded to 172 bytes with an (again seemingly random) 2-byte value. Some sources ( http://www.proxyblind.org/trojan.shtml ) list the port as being used by a trojan called "KiLo"; however, information about it seems sparse.
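The packet description above can be turned into a simple matching heuristic. The Python sketch below is our own illustration: it assumes "172 bytes" refers to the UDP payload length, and the minimum padding length (`min_pad_pairs`) is an arbitrary, hypothetical threshold. As a side note, 0x80 is also what the first byte of an RTP version 2 header looks like (version 2, no padding, no extension, no CSRCs), and 172 bytes equals a 12-byte RTP header plus 160 bytes, i.e. 20 ms of G.711 audio; this is one possible reading, not something we verified.

```python
def matches_15206_pattern(dst_port: int, payload: bytes, min_pad_pairs: int = 4) -> bool:
    """Heuristic for the dominant UDP traffic described above (thresholds are assumptions)."""
    if dst_port != 15206 or len(payload) != 172 or payload[0] != 0x80:
        return False
    # Padding: the payload ends in one 2-byte value repeated at least min_pad_pairs times.
    pad = payload[-2:]
    return payload[-2 * min_pad_pairs:] == pad * min_pad_pairs


def rtp_version(payload: bytes) -> int:
    """The top two bits of the first byte hold the RTP version; 0x80 gives version 2."""
    return payload[0] >> 6


# Synthetic example: 0x80, 151 bytes of filler, then a 2-byte value repeated 10 times.
packet = bytes([0x80]) + bytes(range(151)) + b"\xab\xcd" * 10
print(len(packet), matches_15206_pattern(15206, packet), rtp_version(packet))
```

Checking the length and leading byte first keeps the heuristic cheap when run over a full capture.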

Another large portion of the packets sent to 1.1.1.1 uses UDP ports 2427 and 2727, which are assigned to the Media Gateway Control Protocol (MGCP). All of these packets seem to originate from a single telecommunications provider and can probably be attributed to misconfigured VoIP equipment.
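MGCP is a text-based protocol: commands begin with a four-letter verb, and responses begin with a three-digit code. A rough Python classifier for the traffic on these ports might look like the sketch below. It uses the nine command verbs defined in RFC 3435; the function name and return labels are our own, hypothetical choices.

```python
# Gateways listen on UDP 2427, call agents on UDP 2727 (RFC 3435).
MGCP_PORTS = {2427, 2727}
# The nine MGCP command verbs defined in RFC 3435.
MGCP_VERBS = {b"EPCF", b"CRCX", b"MDCX", b"DLCX", b"RQNT",
              b"NTFY", b"AUEP", b"AUCX", b"RSIP"}


def classify_mgcp(dst_port: int, payload: bytes):
    """Label a UDP payload as an MGCP command, response, or unknown; None if not an MGCP port."""
    if dst_port not in MGCP_PORTS:
        return None
    verb = payload[:4]
    if verb in MGCP_VERBS:
        return "command:" + verb.decode()
    code = payload[:3]
    if len(code) == 3 and code.isdigit():
        # Responses start with a three-digit return code, e.g. "200".
        return "response:" + code.decode()
    return "unknown"


print(classify_mgcp(2427, b"RSIP 1 aaln/1@gw.example MGCP 1.0\r\n"))
print(classify_mgcp(2727, b"200 1 OK\r\n"))
```

Grouping by command verb and source address would be one way to check whether the traffic really comes from a single provider's equipment.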

Almost 50% of the TCP packets were attempted HTTP connections on port 80. A small proportion of those packets, however, appeared to belong to "established" HTTP connections, in particular HTTP POST requests. Some research showed that these requests looked very similar to those used by an Asian online game. Since the RRC does not run a web server and should therefore not see any established HTTP connections, this is most likely due to a bug in an HTTP client.
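The distinction drawn above between connection attempts and data on supposedly "established" connections can be made from the TCP flags and the start of the payload. A Python sketch, assuming the flags byte and payload have already been extracted from each packet; the labels are hypothetical, while the SYN (0x02) and ACK (0x10) bit values are those of the TCP header.

```python
SYN, ACK = 0x02, 0x10  # TCP header flag bits


def classify_http_packet(dst_port: int, tcp_flags: int, payload: bytes) -> str:
    """Rough label for a TCP packet seen by a host that runs no web server."""
    if dst_port != 80:
        return "non-http"
    if (tcp_flags & SYN) and not (tcp_flags & ACK):
        # A bare SYN: the client is trying to open a connection.
        return "connection-attempt"
    if payload.startswith(b"POST "):
        # Request data without any handshake the collector could have completed.
        return "unsolicited-post"
    return "other"


print(classify_http_packet(80, SYN, b""))
print(classify_http_packet(80, 0x18, b"POST /report HTTP/1.1\r\n"))  # 0x18 = PSH|ACK
```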

Withdrawing 1.1.1.0/24 and 1.2.3.0/24

Since the traffic patterns seemed to be stable, we decided to withdraw the announcements of 1.1.1.0/24 and 1.2.3.0/24 on 2 February 2010. This was necessary to keep the debogon addresses pingable and to ensure the overall smooth operation of the RRC. Shortly after the announcements were withdrawn, traffic at our AMS-IX port dropped back to the usual 2 Mbit/s.

Conclusion

We can conclude from this that specific blocks in 1/8, such as 1.1.1.0/24 and 1.2.3.0/24, are extremely polluted. Unless the traffic sent to those blocks is significantly reduced, they may be unusable in a production environment.

Although the limited capacity of our setup did not allow us to record accurate real-world numbers for transfer rates and traffic types, we were able to get a good indication of where the largest problems lie.

In order to collect real-world data the experiment would have to be repeated on a high-capacity network that more accurately resembles real-world scenarios in terms of transit providers and peering agreements.

We would like to hear your opinion on this subject: Do you have any experience with this kind of traffic? What should be done to reduce it? Would further research on this subject be useful? Please leave a comment after the break!

Comments


Anonymous •

Appears to be RTP and is very likely to be the result of some people scanning for SIP devices using INVITE method. More details on my blog of course .. http://blog.sipvicious.org/2010/02/rtp-traffic-to-1111.html

Anonymous •

I find this kind of analysis fascinating. It strikes me that these kinds of "polluted" zones could actually be quite useful for either security researchers or black hats. Is there any way someone could request, say, 1.1.1.0/29 if they wanted it? Some folks might even find it a sexy IP address for their home page. Of course, they'd probably want to see the load numbers with something other than a rusty old 10 Mbit connection. ;)

Anonymous •

We ended up with a 10 Mbit/s port for legacy reasons, but it also provides us with some protection. Return traffic (responses sent by our collector to the Internet) goes through the normal RIPE NCC network, AS 3333. If we were to receive a massive amount of response-generating traffic (ICMP pings, for example), we would put a heavy load on the RIPE NCC network, potentially affecting other production services. A few months ago we actually received a DDoS on a different debogon address that was configured on that box at the time. The total volume seemed to be about 2 Gbit/s, according to AMS-IX's sFlow graphs, but only 10 Mbit/s made it to the collector. As a result, we didn't notice anything until one of our peers contacted us. So the port acts as a rate limiter for incoming traffic, which in turn limits return traffic through the RIPE NCC network and protects it from harm. (Also, we don't see any interface errors, so I doubt the port is rusty ;)

Anonymous •

I would like to point out that the measurements were done in collaboration with APNIC as part of APNIC's "debogonising" efforts which are in fact applied to all blocks received from IANA.

Anonymous •

Part of the traffic to 1/8 might be explained by bogus MX records that sometimes point into 1/8. I was wondering how much of the 'other TCP' traffic to 1.1.1.0/24 was actually SMTP traffic; is that number available? Some spam is sent from domains with obviously bogus MX records, and we have been testing for that for a few years now. Using bogon space is not the most common, but it does occur (non-resolving MX records are most common, e.g. "MX 10 dev.null."). In bogon space, 0.0.0.0 is frequently used, but 1.1.1.1 is also seen (the domain zaobao,com,sg seems to be used relatively often these days; replace , with . for the real domain name). I suspect that a lot of mail servers are actively trying to send bounces by connecting to 1.1.1.1 because of this.

Anonymous •

Hi Jan-Pieter, Interesting question. Of the 20% of packets which were TCP, around 5.4% appeared to be SMTP (compared to 50% HTTP). Mark, RIPE NCC

Anonymous •

Yeah you are right about TCP. Amanda http://www.voipproviderslist.com/

Anonymous •

I'm sure it's also well understood, but 1.1.1.1 is also a common address used by botnet command and control hosts as a disable address. There will likely be a fair amount of HTTP traffic coming in to that address (as well as IRC on various ports) as zombies try to find home and get 1.1.1.1 as a response instead of a loopback for C&Cs that were intentionally taken down (either by the ISP/NSP or, often, by the botnet itself as it fast-fluxes around the domain space). Joe

Anonymous •

1.1.1.0/24 is used by Intel bladecenters internally for communication with their blades. You probably won't see that traffic on the net, but it's still "the wrong thing to do".